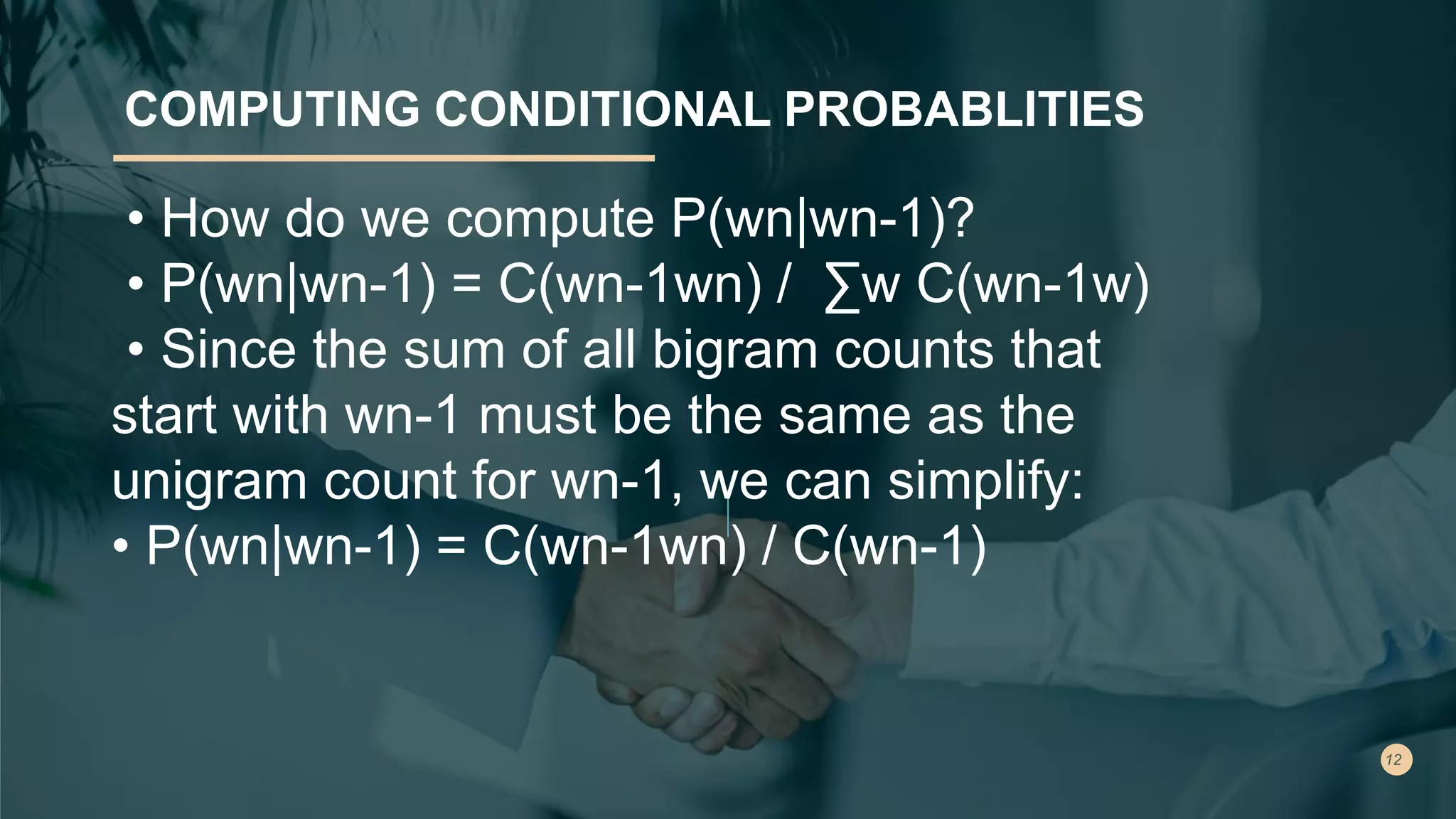

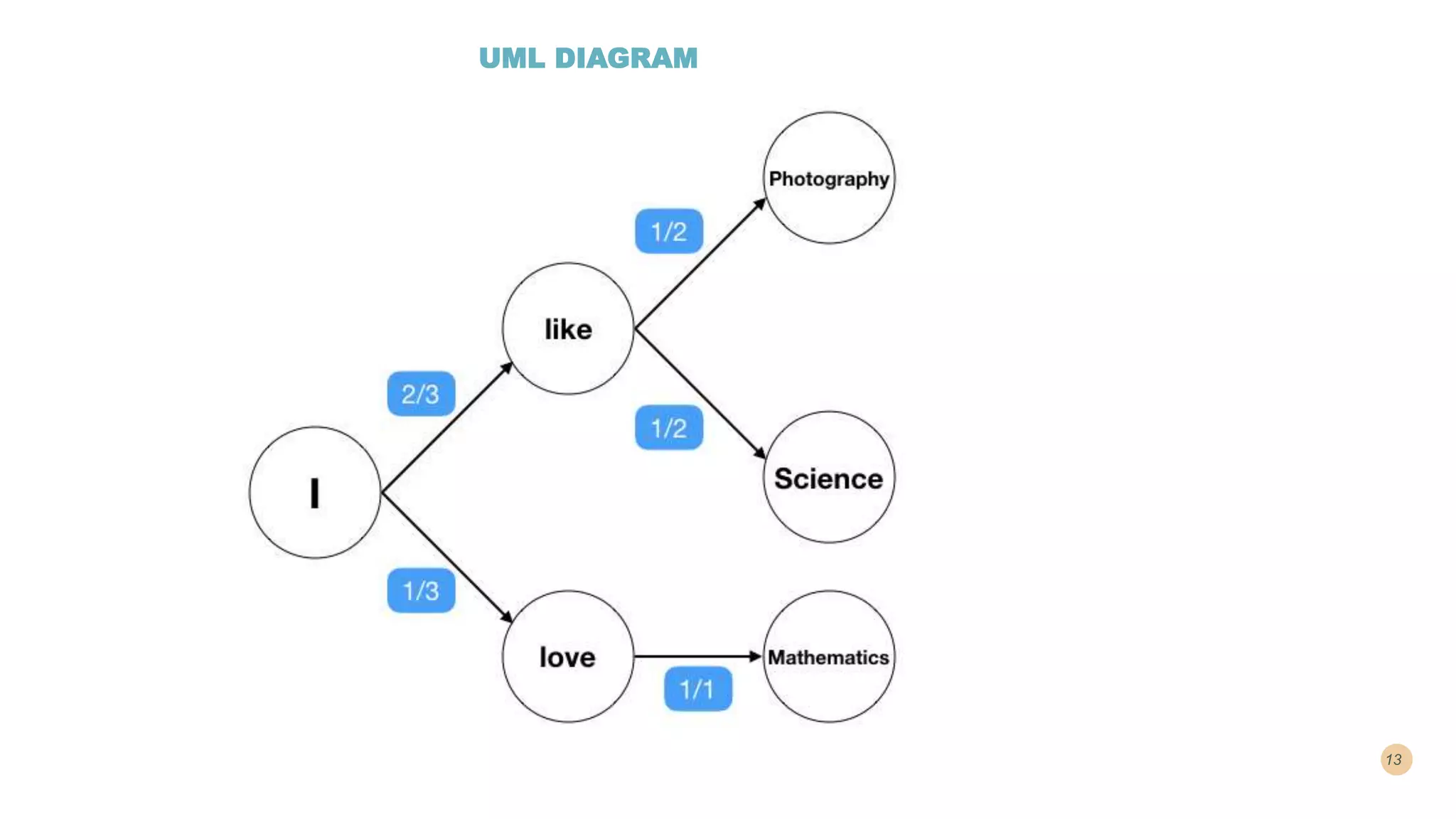

This document describes how to build a next-word prediction model with deep learning methods such as LSTMs. The pipeline takes text as input, preprocesses and tokenizes it, and then trains a model that predicts the next word from the preceding words. Simpler word prediction models use n-grams instead: they estimate conditional word probabilities from occurrence counts in a text corpus. Bigram and trigram models are discussed as ways to predict the next word from the previous one or two words, respectively.
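As a rough illustration of the count-based approach, the sketch below estimates bigram probabilities as the number of times a word follows a given previous word, divided by the total number of words observed after that previous word. The function names, the toy corpus, and the whitespace tokenization are assumptions made for this example; they do not come from the slides, and no smoothing is applied.

```python
from collections import Counter, defaultdict


def train_bigram_counts(tokens):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, prev):
    """Relative frequency of each word seen after `prev` (no smoothing)."""
    following = counts.get(prev)
    if not following:
        return {}  # `prev` was never seen with a following word
    total = sum(following.values())
    return {word: count / total for word, count in following.items()}


def predict_next(counts, prev):
    """Return the most frequent follower of `prev`, or None if unseen."""
    probs = next_word_probabilities(counts, prev)
    return max(probs, key=probs.get) if probs else None


# Toy corpus (hypothetical), tokenized by whitespace for simplicity.
corpus = "the cat sat on the mat and the cat slept".split()
bigram_counts = train_bigram_counts(corpus)
probs = next_word_probabilities(bigram_counts, "the")
prediction = predict_next(bigram_counts, "the")
print(probs, prediction)
```

A trigram model would follow the same pattern, keyed on the previous two words instead of one. A realistic corpus would also need smoothing or backoff to handle word pairs that never occur in the training text.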

![Counting types and tokens in corpora](https://image.slidesharecdn.com/nextword-220119061745/75/Next-word-Prediction-7-2048.jpg)

- Prior to computing conditional probabilities, counts are needed.
- Most counts are based on word form (i.e. cat and cats are two distinct word forms).
- We distinguish types from tokens:
  - “We use types to mean the number of distinct words in a corpus”
  - We use “tokens to mean the total number of running words.” [pg. 195]
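
The distinction between types and tokens can be sketched in a few lines. This is a minimal example that assumes whitespace tokenization and treats each distinct word form as its own type, so "cat" and "cats" are counted separately. The function name and sample sentence are hypothetical.

```python
def count_types_and_tokens(text):
    """Return (number of types, number of tokens) for whitespace-split text."""
    tokens = text.split()   # every running word is a token
    types = set(tokens)     # distinct word forms are types
    return len(types), len(tokens)


sample = "the cat chased the cats"
num_types, num_tokens = count_types_and_tokens(sample)
print(num_types, num_tokens)
```

In this sample, "the" occurs twice, so there are five tokens but only four types.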