This document summarizes a presentation on news recommender systems. It discusses:

1) The speaker's background and experience developing recommender systems for news websites.

2) The challenges of building recommender systems for the news domain, where only implicit feedback is available and time plays an important role.

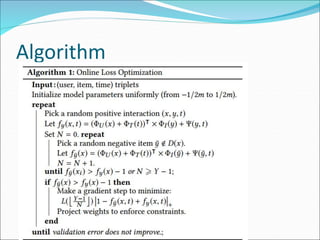

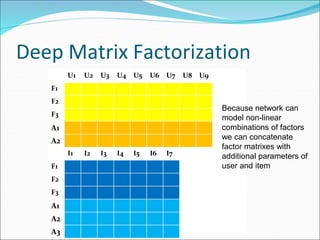

3) The models developed, including matrix factorization models that incorporate time and additional features to address the cold-start problem for new users and items.

4) How the models were deployed as part of a complete recommendation system using technologies such as TensorFlow, Docker, and Flask.
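The summary does not give the exact model formulation, so the following is only a minimal sketch of the general idea in item 3. It rests on several assumptions of our own. Clicks are the only (implicit) feedback. Each click is weighted by an exponential time decay with a hypothetical 24-hour half-life. Training uses a logistic loss with negative sampling. User and item vectors are the sum of an ID embedding and side-feature embeddings (here, hypothetical device and article-section features). Because an unseen article or user still has feature embeddings, it can be scored without any click history. All names (`HybridMF`, `time_weight`, the toy data) are illustrative. The code uses plain Python rather than TensorFlow so that it stays self-contained.

```python
import math
import random


def time_weight(age_hours, half_life_hours=24.0):
    """Exponential decay: a click loses half its weight every half_life_hours."""
    return 0.5 ** (age_hours / half_life_hours)


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


class HybridMF:
    """Implicit-feedback matrix factorization with side features (hypothetical).

    A user (item) vector is its ID embedding plus the sum of its feature
    embeddings, so a new user or article with known features can be scored.
    """

    def __init__(self, dim=8, lr=0.1, reg=0.01, seed=0):
        self.dim, self.lr, self.reg = dim, lr, reg
        self.rng = random.Random(seed)
        self.emb = {}  # (namespace, key) -> embedding vector

    def _parts(self, kind, key, feats, create):
        keys = [(kind, key)] + [(kind + "_feat", f) for f in feats]
        if create:  # training: randomly initialise embeddings seen for the first time
            for k in keys:
                if k not in self.emb:
                    self.emb[k] = [self.rng.gauss(0.0, 0.1) for _ in range(self.dim)]
            return [self.emb[k] for k in keys]
        return [self.emb[k] for k in keys if k in self.emb]  # scoring: unknown keys add nothing

    def _compose(self, parts):
        return [sum(col) for col in zip(*parts)] if parts else [0.0] * self.dim

    def score(self, user, user_feats, item, item_feats):
        u = self._compose(self._parts("user", user, user_feats, create=False))
        v = self._compose(self._parts("item", item, item_feats, create=False))
        return sum(a * b for a, b in zip(u, v))

    def _step(self, user, user_feats, item, item_feats, label, weight):
        u_parts = self._parts("user", user, user_feats, create=True)
        v_parts = self._parts("item", item, item_feats, create=True)
        u, v = self._compose(u_parts), self._compose(v_parts)
        # Gradient of the weighted log-likelihood with respect to the score u.v
        g = weight * (label - sigmoid(sum(a * b for a, b in zip(u, v))))
        for parts, other in ((u_parts, v), (v_parts, u)):
            for p in parts:
                for k in range(self.dim):
                    p[k] += self.lr * (g * other[k] - self.reg * p[k])

    def fit(self, clicks, user_features, item_features, epochs=200, half_life_hours=24.0):
        """clicks: list of (user, item, age_in_hours) implicit-feedback events."""
        seen = {}
        for user, item, _ in clicks:
            seen.setdefault(user, set()).add(item)
        catalog = list(item_features)
        for _ in range(epochs):
            for user, item, age in self.rng.sample(clicks, len(clicks)):
                uf = user_features.get(user, [])
                # Positive example, down-weighted by how old the click is.
                w = time_weight(age, half_life_hours)
                self._step(user, uf, item, item_features[item], 1.0, w)
                # Negative sampling: one article this user has not clicked (weight 1).
                unseen = [i for i in catalog if i not in seen[user]]
                if unseen:
                    neg = self.rng.choice(unseen)
                    self._step(user, uf, neg, item_features[neg], 0.0, 1.0)
        return self


# Hypothetical toy data: device type (user) and section (article) as side features.
item_features = {"s1": ["sports"], "s2": ["sports"], "p1": ["politics"], "p2": ["politics"]}
user_features = {"u1": ["mobile"], "u2": ["mobile"], "u3": ["desktop"], "u4": ["desktop"]}
clicks = [("u1", "s1", 2), ("u1", "s2", 30), ("u2", "s1", 5), ("u2", "s2", 1),
          ("u3", "p1", 3), ("u3", "p2", 40), ("u4", "p1", 6), ("u4", "p2", 2)]
model = HybridMF().fit(clicks, user_features, item_features)

# New article with no clicks: scored from its section feature alone.
print(model.score("u1", ["mobile"], "s_new", ["sports"]),
      model.score("u1", ["mobile"], "p_new", ["politics"]))
# New user with no history: scored from the device feature alone.
print(model.score("u_new", ["mobile"], "s_new", ["sports"]),
      model.score("u_new", ["mobile"], "p_new", ["politics"]))
```

The additive design is one common way to handle cold start. Once clicks accumulate, the ID embedding captures an individual user's or article's taste, while the shared feature embeddings carry over to newcomers. The time decay makes recent clicks count more than old ones, which matters in a domain where articles go stale quickly.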