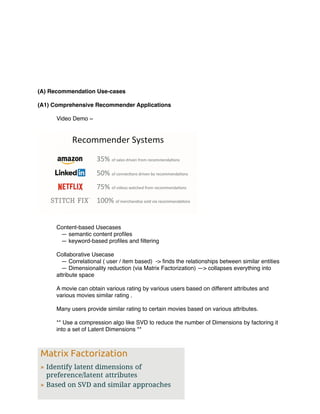

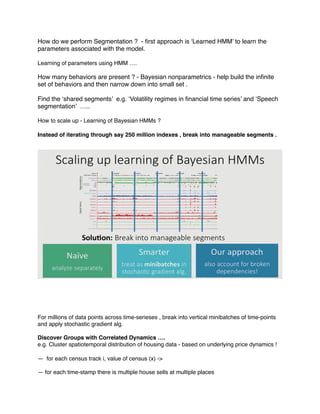

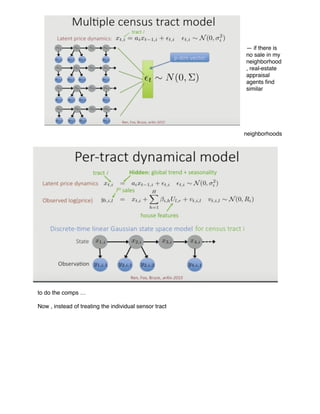

This document summarizes several practical machine learning applications and use cases that were presented at various conferences through video links and slides. It discusses advanced recommender applications, product ranking, natural language understanding, digital marketing, personalized content blending, anomaly and pattern detection in time-series data, and deep learning applications. Specific use cases covered include recommendations at Amazon, StitchFix, Netflix, natural language processing of product reviews, medical data analysis, digital advertising optimization at AOL, personalized recommendations at Pinterest, anomaly detection at Intel, and question answering, image recognition and dialogue systems using deep learning.