This document provides instructions for setting up a 4-node Hadoop cluster on Amazon EC2 instances in 4 major steps:

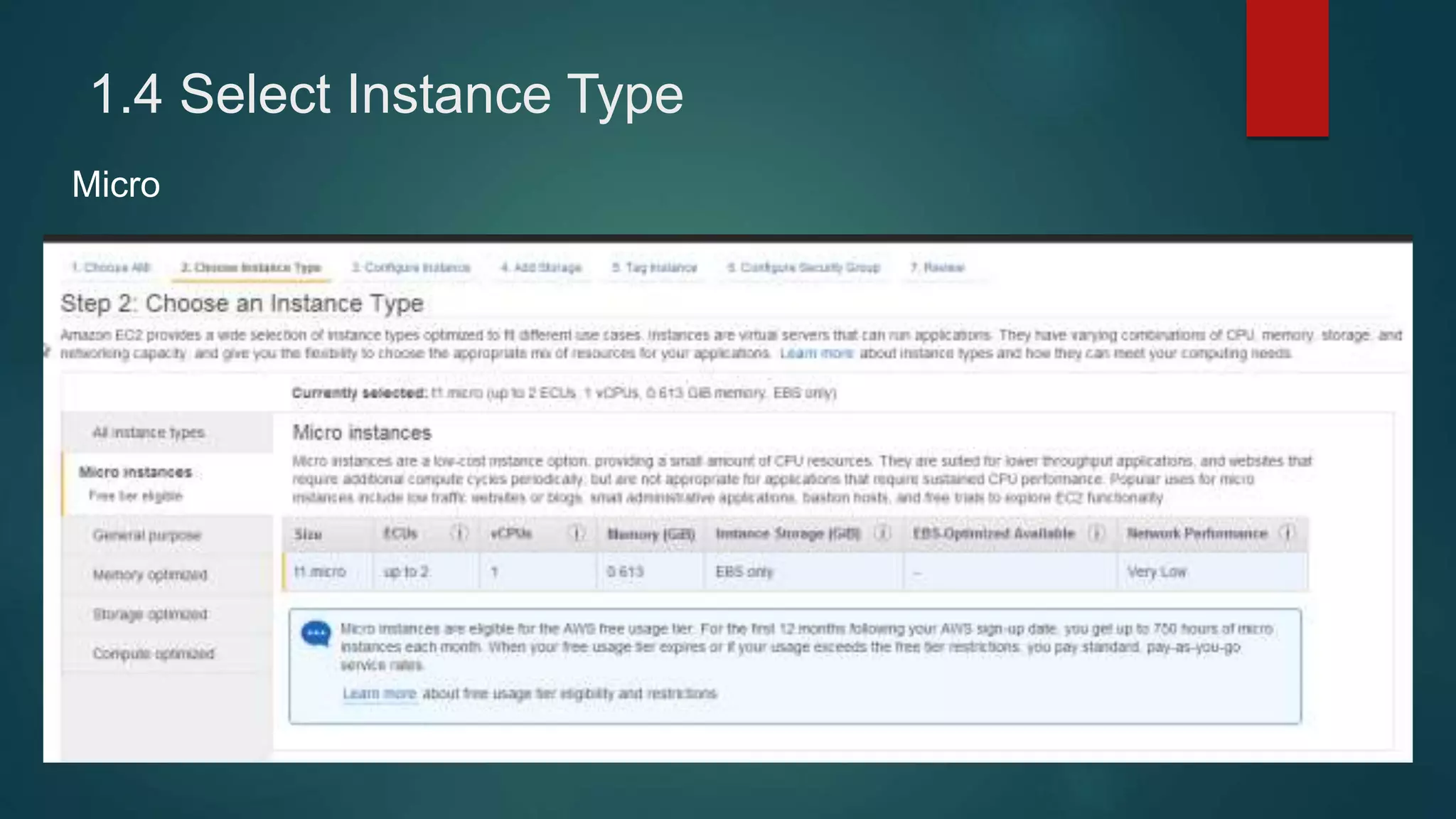

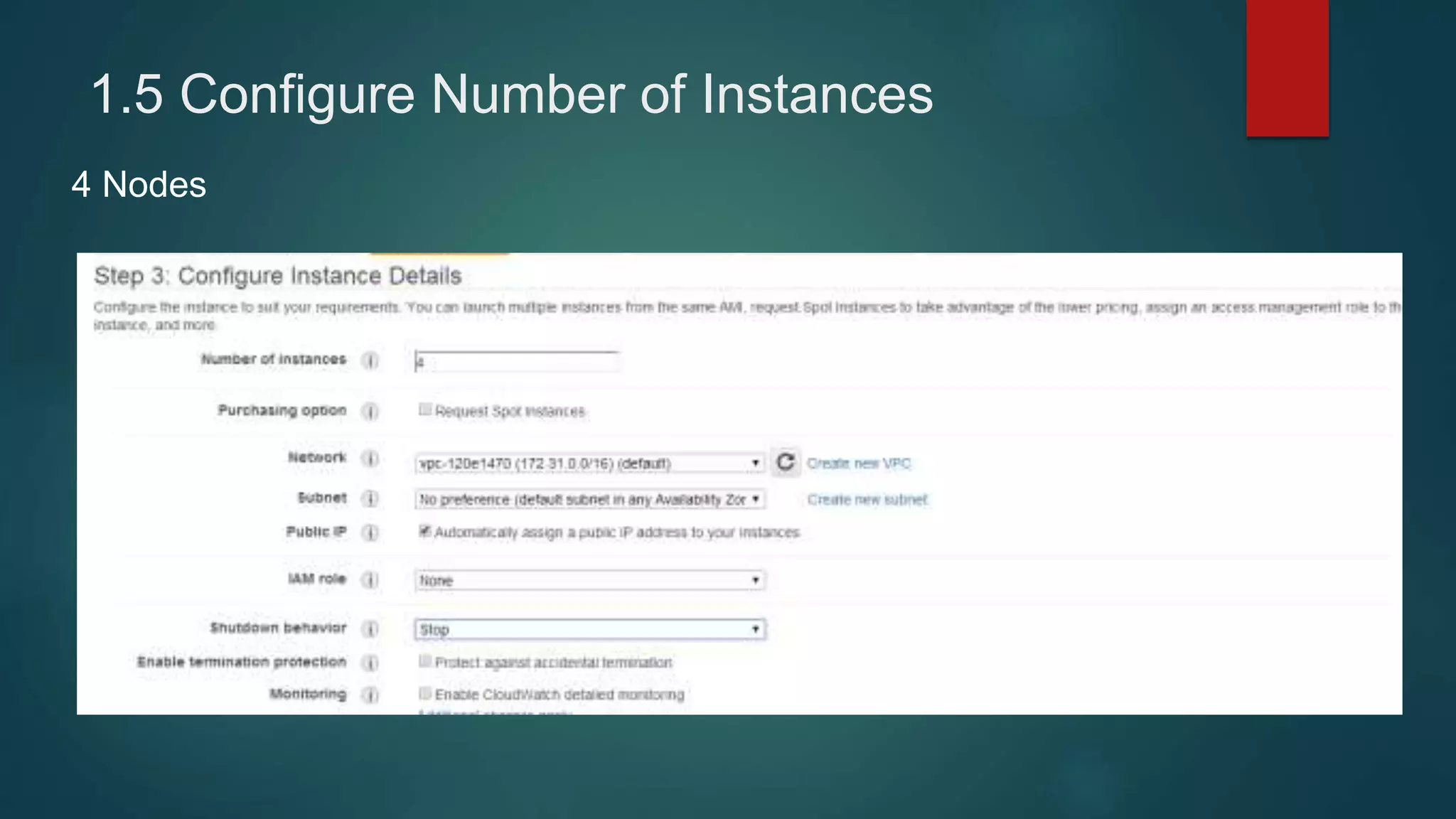

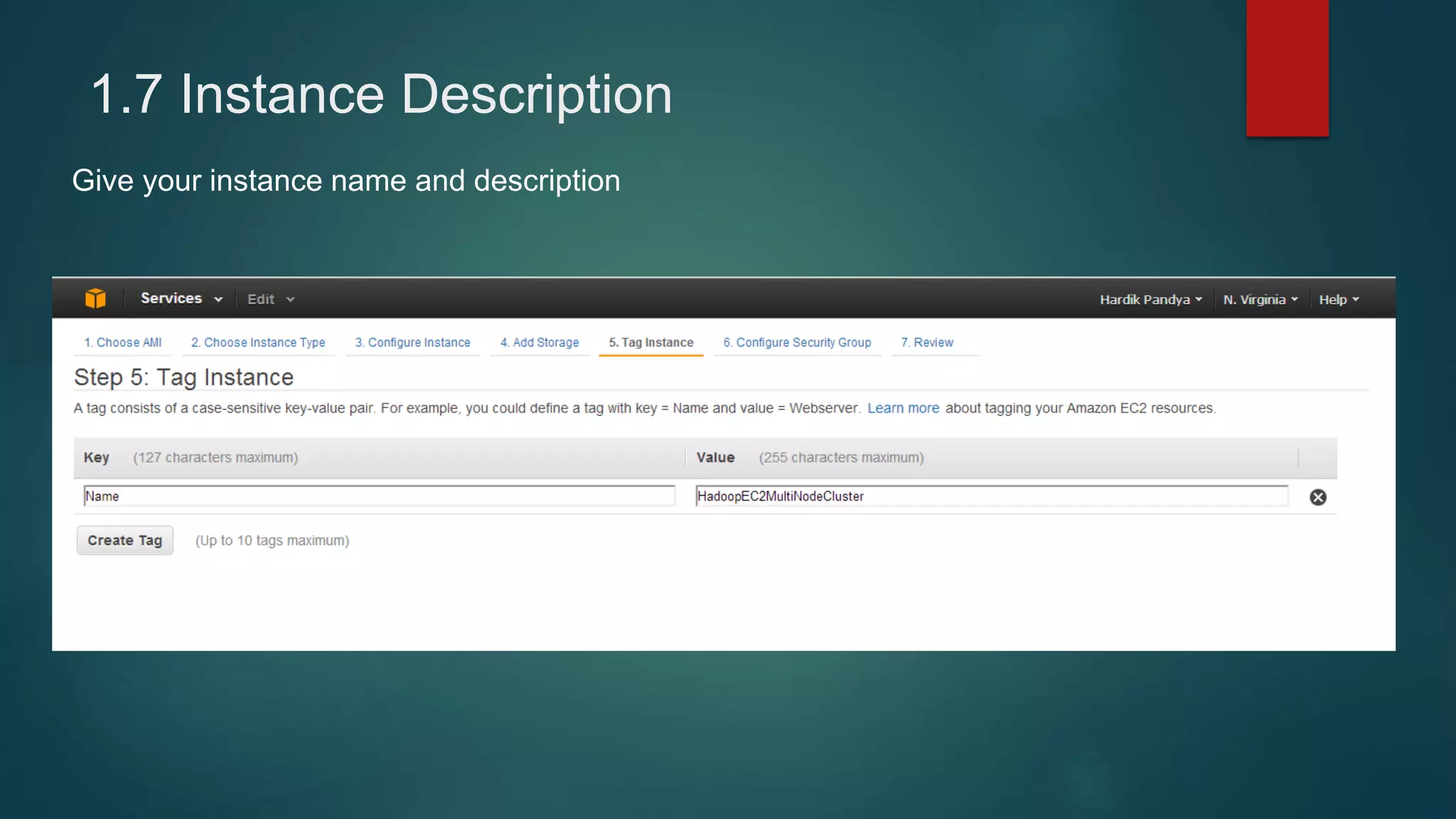

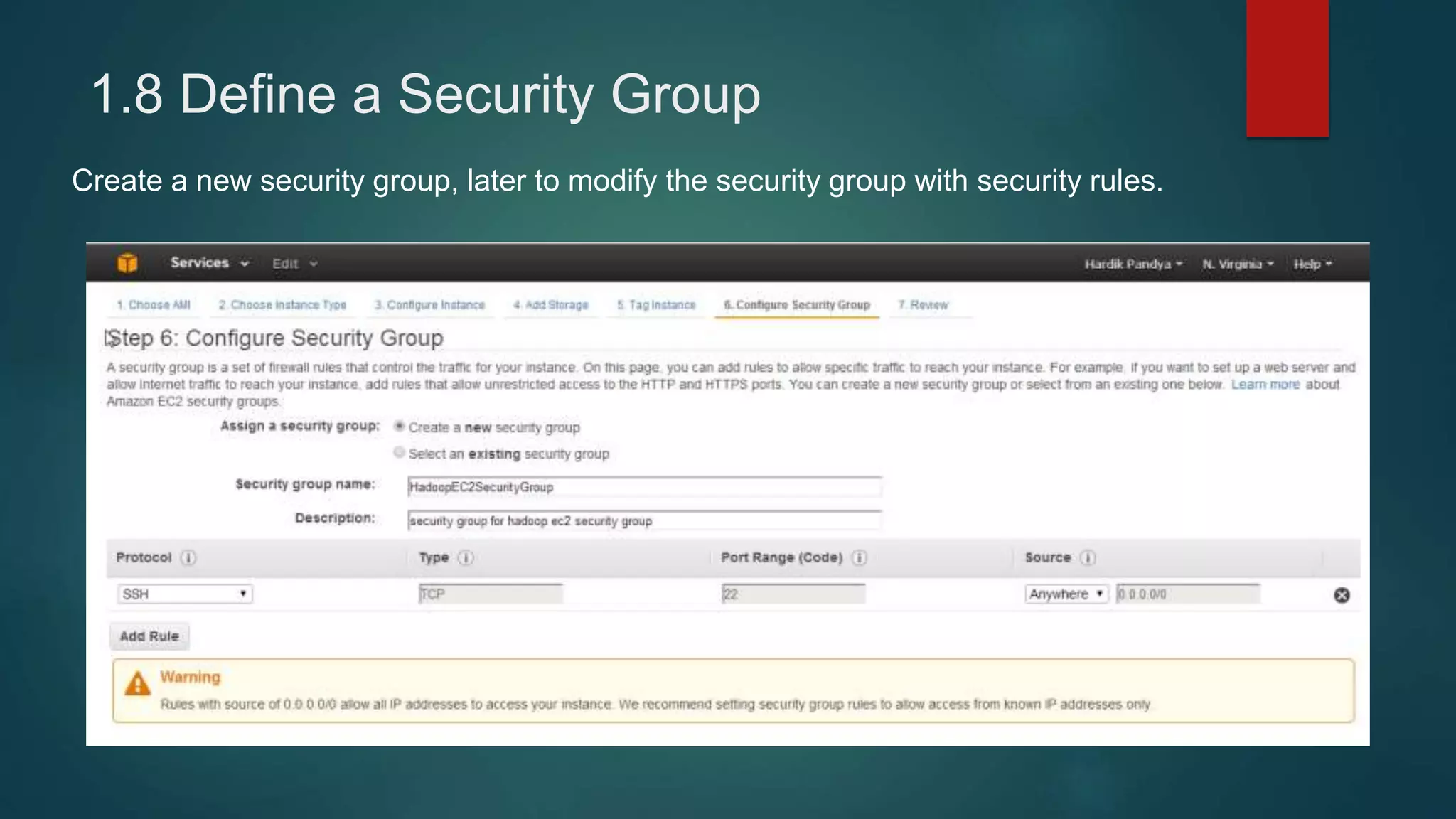

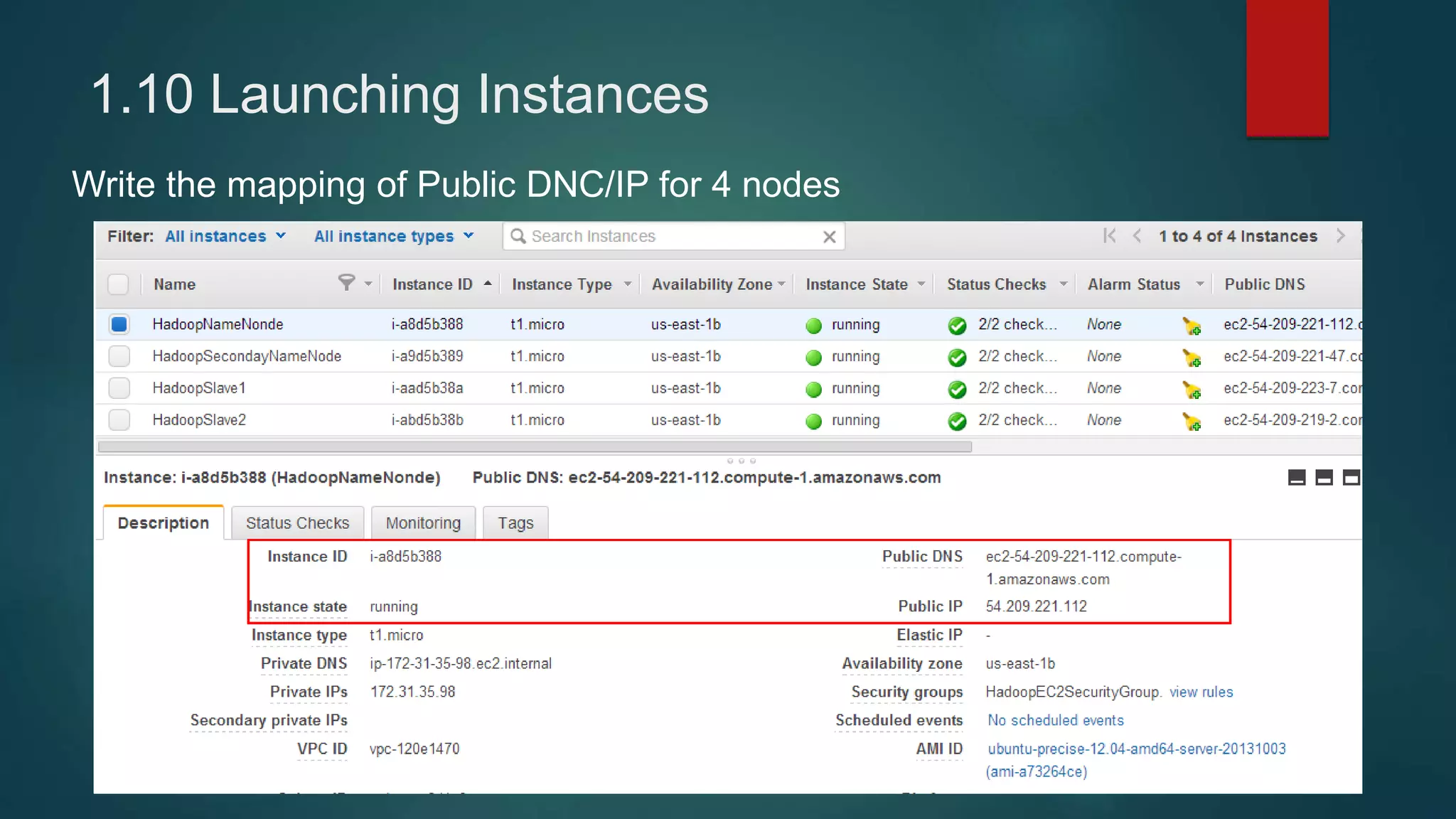

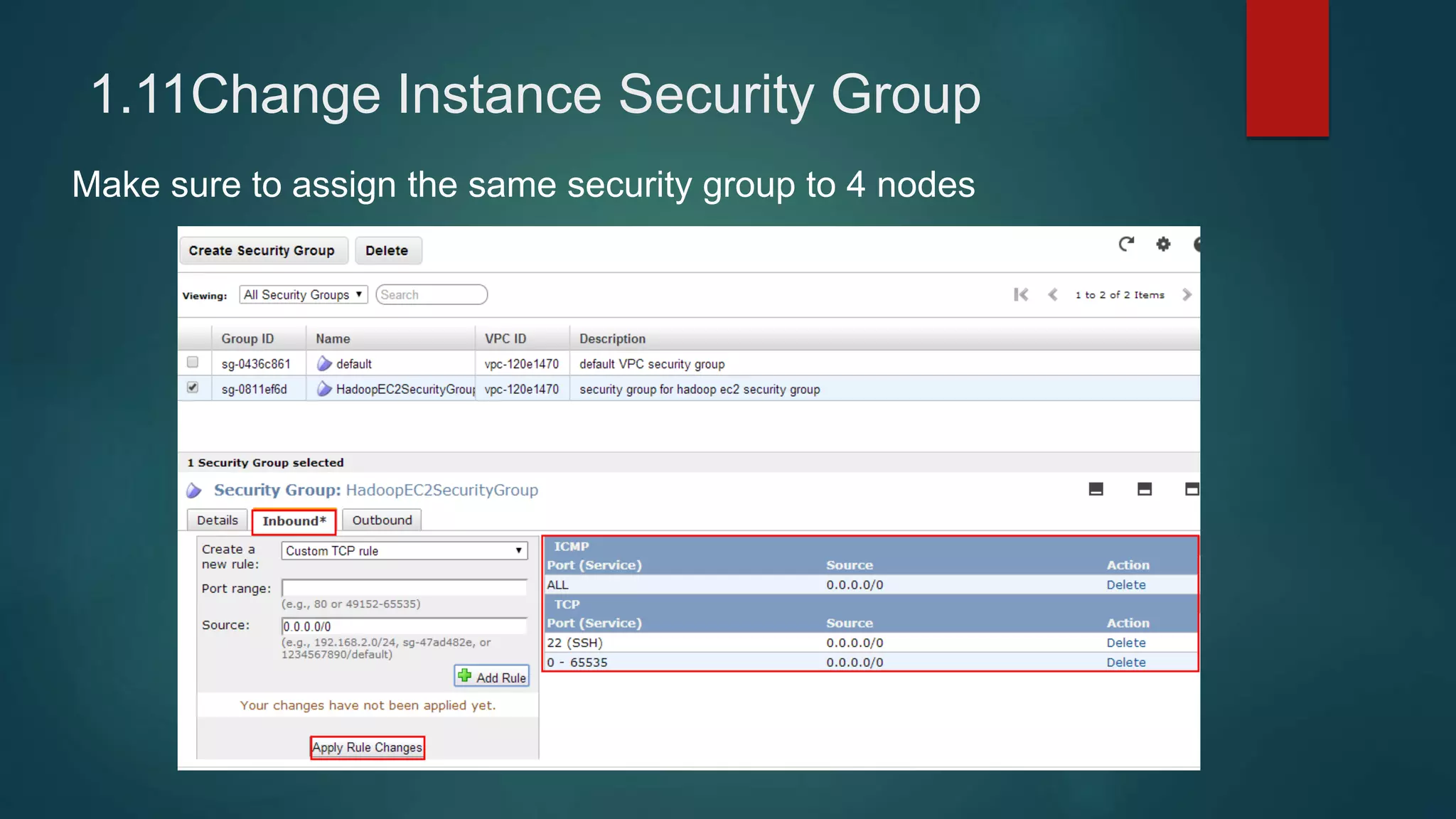

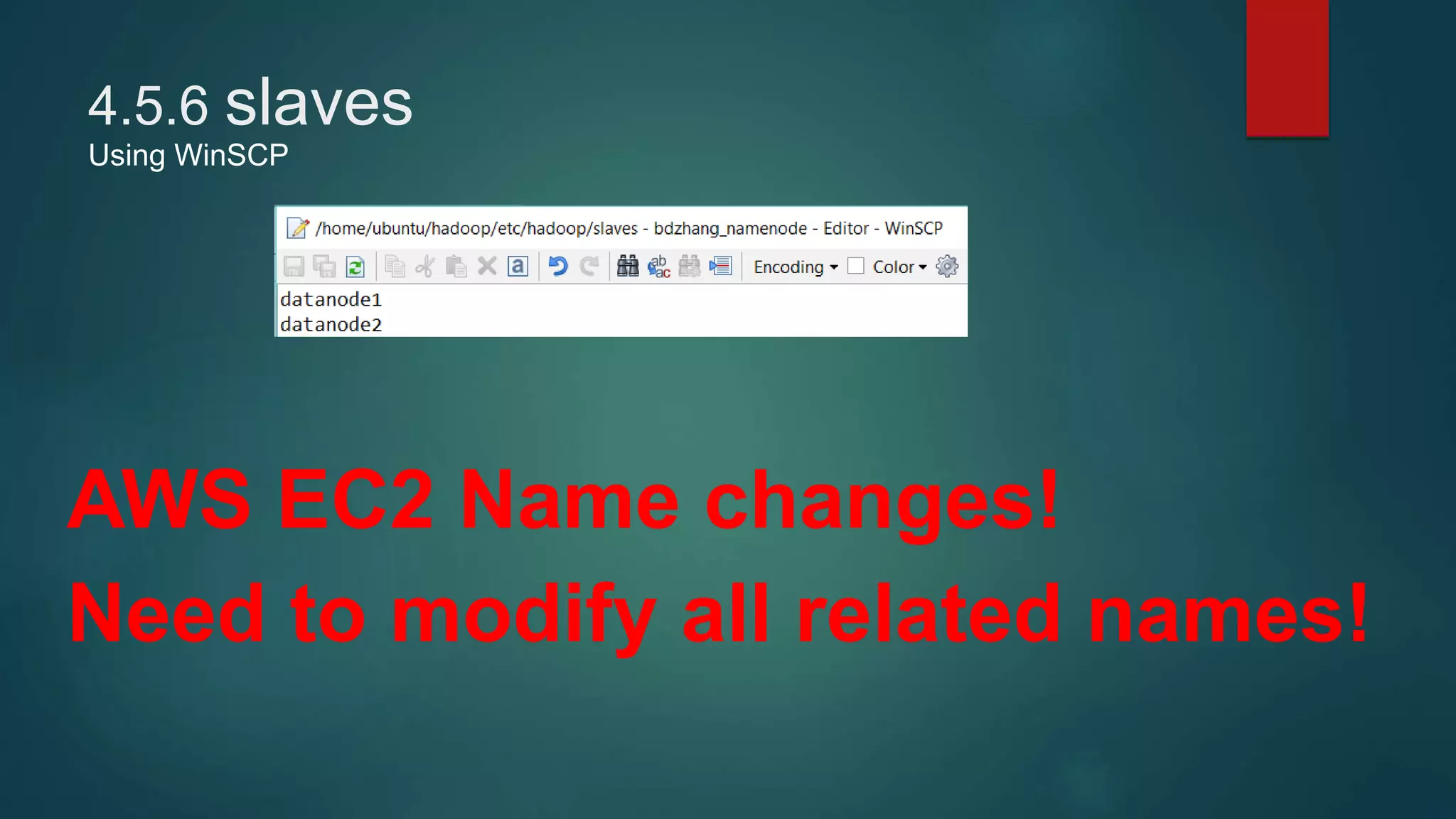

1) Setting up 4 EC2 instances and configuring security groups

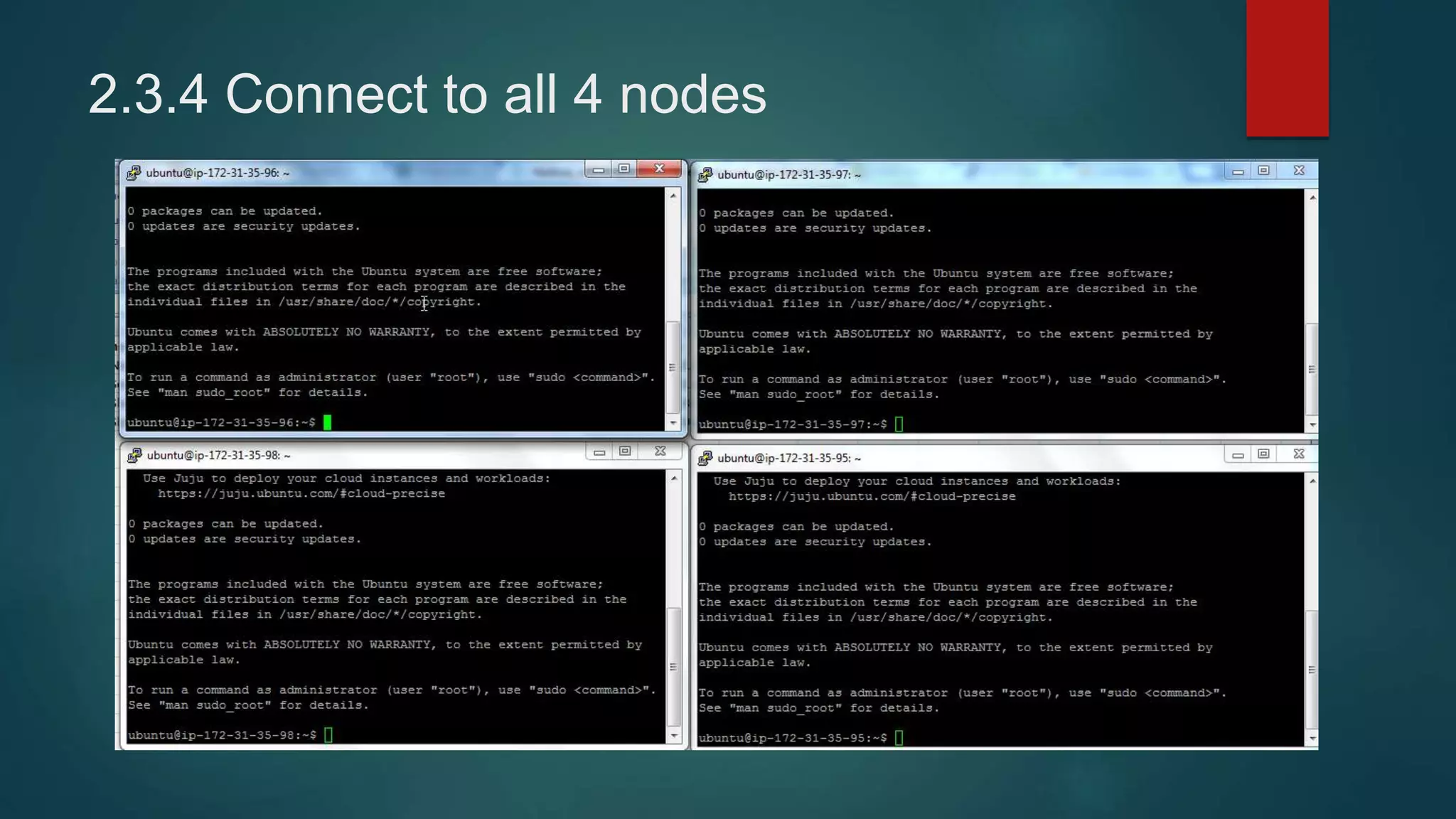
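The security-group rules configured in step 1 can also be written down as data, which makes them easier to review than screenshots. The following is a minimal sketch that builds rules in the `IpPermissions` form that boto3's EC2 client method `authorize_security_group_ingress(GroupId=..., IpPermissions=...)` accepts. It does not call AWS. The ports are assumptions: 9870 and 8088 are the Hadoop 3.x default NameNode and ResourceManager web UI ports (Hadoop 2.x uses 50070 for the NameNode UI). The CIDRs and function names are hypothetical placeholders.

```python
"""Sketch of EC2 security-group ingress rules for a small Hadoop cluster.

Rules use the IpPermissions dictionary shape accepted by boto3's
authorize_security_group_ingress. Nothing here contacts AWS.
Ports and CIDRs are assumptions; adjust them to your Hadoop version and VPC.
"""

# Assumed Hadoop 3.x default web UI ports (Hadoop 2.x: NameNode UI on 50070).
HADOOP_WEB_UI_PORTS = {"namenode": 9870, "resourcemanager": 8088}


def tcp_rule(port, cidr):
    """Return one single-port TCP ingress rule in IpPermissions form."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr}],
    }


def cluster_ingress_rules(admin_cidr, vpc_cidr):
    """SSH and web UIs from the admin's address, plus all TCP between nodes."""
    rules = [tcp_rule(22, admin_cidr)]
    rules += [tcp_rule(p, admin_cidr) for p in HADOOP_WEB_UI_PORTS.values()]
    # Hadoop daemons use many ports, so this sketch opens the full TCP range
    # inside the VPC. This is permissive; tighten it for anything long-lived.
    rules.append({
        "IpProtocol": "tcp",
        "FromPort": 0,
        "ToPort": 65535,
        "IpRanges": [{"CidrIp": vpc_cidr}],
    })
    return rules


if __name__ == "__main__":
    # Hypothetical values: your own public IP and the default-VPC range.
    for rule in cluster_ingress_rules("203.0.113.10/32", "172.31.0.0/16"):
        print(rule)
```

Opening all TCP inside the VPC keeps the example short. A tighter alternative is to allow traffic only from the security group itself rather than from a CIDR range.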

2) Setting up client access to the instances using PuTTY and generating keys

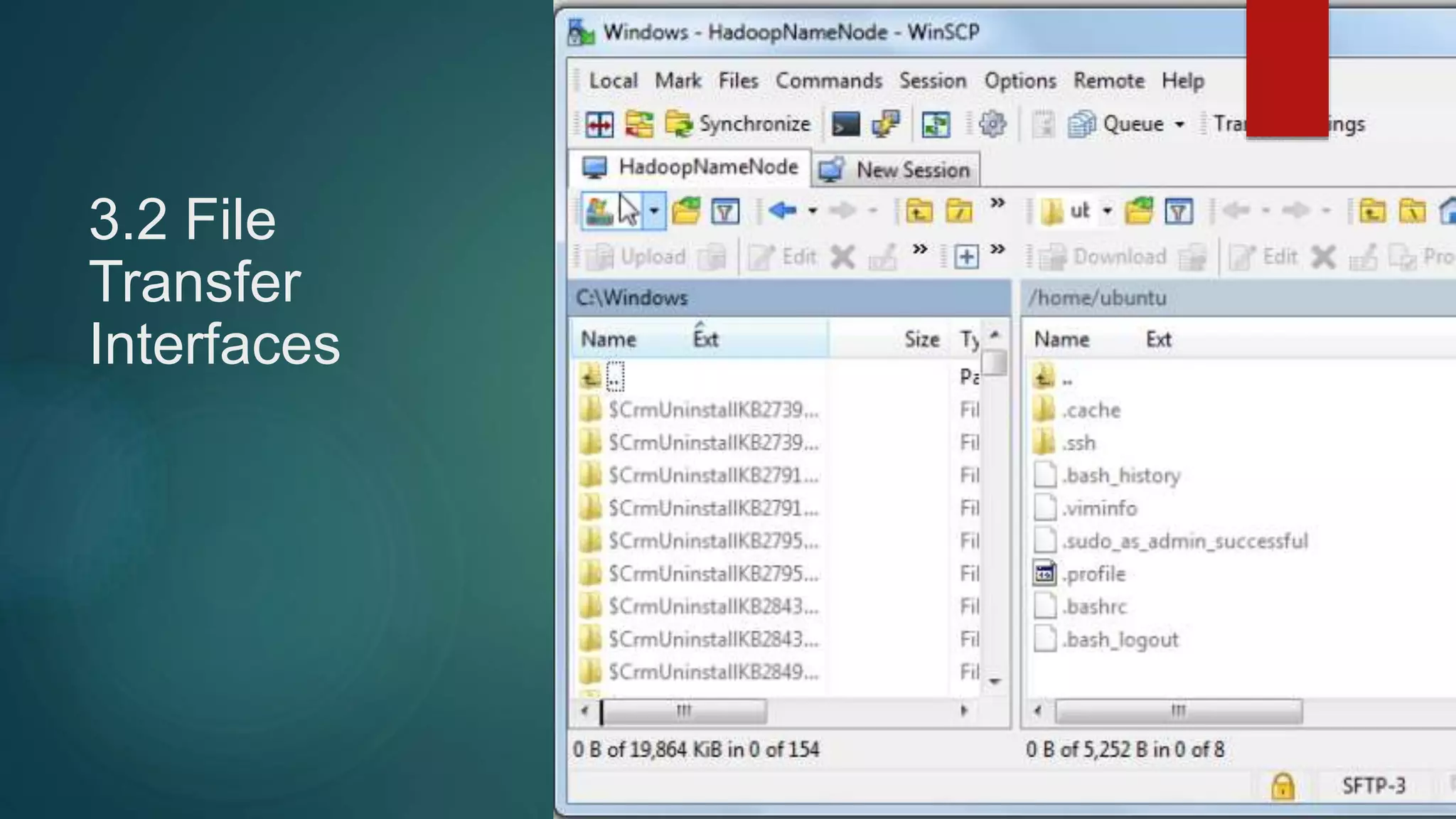

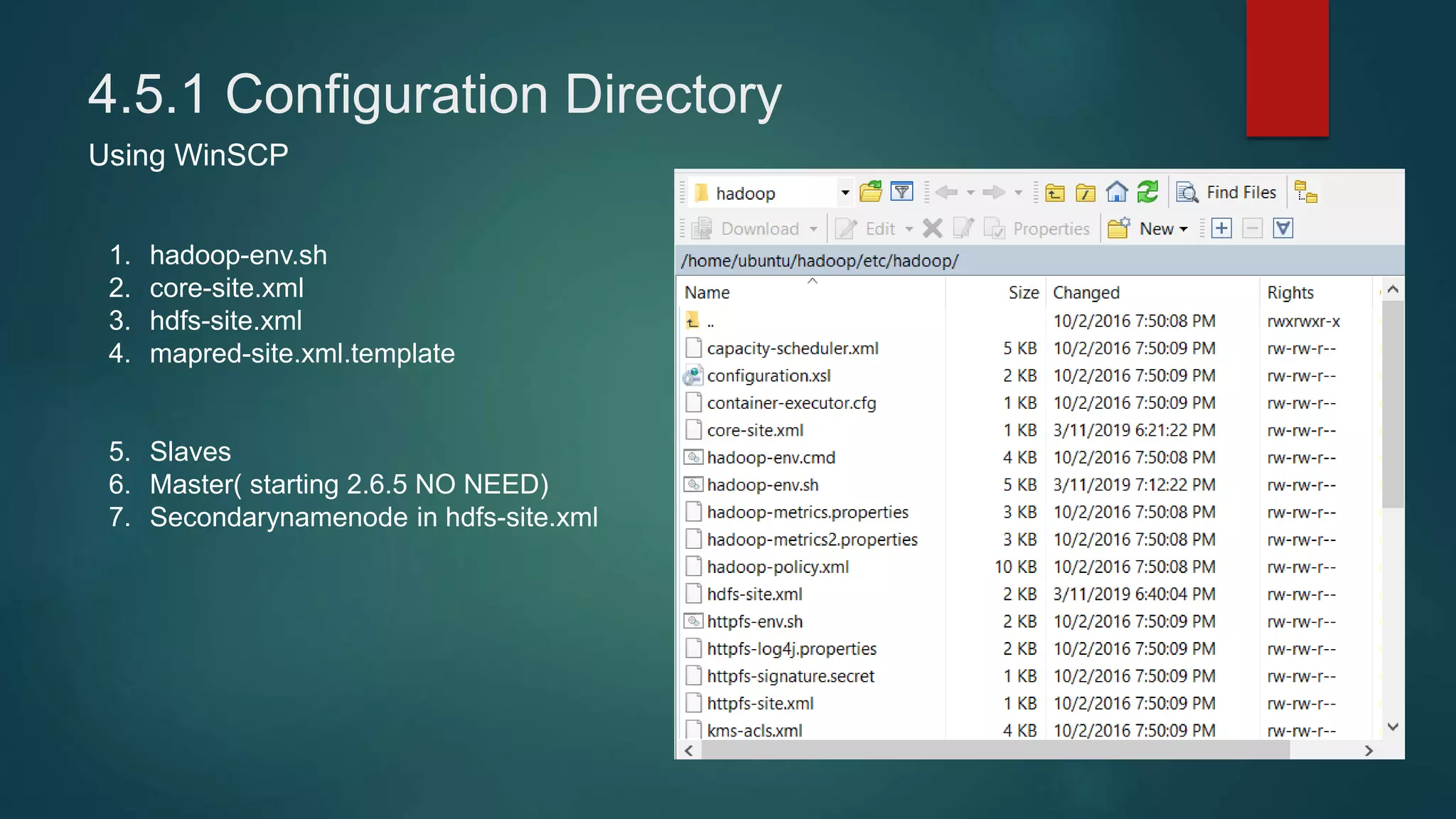
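With four nodes, it helps to generate the PuTTY connection commands for step 2 rather than typing them by hand. PuTTY's command line accepts `-ssh` to select the protocol and `-i` to point at a `.ppk` private key. The sketch below assumes the EC2 `.pem` key has already been converted to `.ppk` with PuTTYgen and that the AMI's login user is `ubuntu` (Amazon Linux AMIs use `ec2-user`). The node names, host placeholders, and key path are hypothetical.

```python
"""Build PuTTY command lines for SSH sessions to each cluster node.

Assumptions: the key is a .ppk produced by PuTTYgen, the login user is
"ubuntu", and the host names below are placeholders for public DNS names.
"""


def putty_command(host, key_path, user="ubuntu", putty_exe="putty.exe"):
    """Return the argument list that opens an SSH session with PuTTY.

    -ssh selects the SSH protocol; -i names the private key file.
    """
    return [putty_exe, "-ssh", "-i", key_path, f"{user}@{host}"]


# Hypothetical node names mapped to placeholder public DNS names.
NODES = {
    "namenode": "namenode-public-dns",
    "datanode1": "datanode1-public-dns",
    "datanode2": "datanode2-public-dns",
    "datanode3": "datanode3-public-dns",
}

if __name__ == "__main__":
    key = r"C:\keys\hadoop-cluster.ppk"  # hypothetical key location
    for name, host in NODES.items():
        # Print each command; on Windows, pass the list to subprocess.Popen
        # to actually launch the session.
        print(name, " ".join(putty_command(host, key)))
```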

3) Setting up WinSCP access using the generated keys

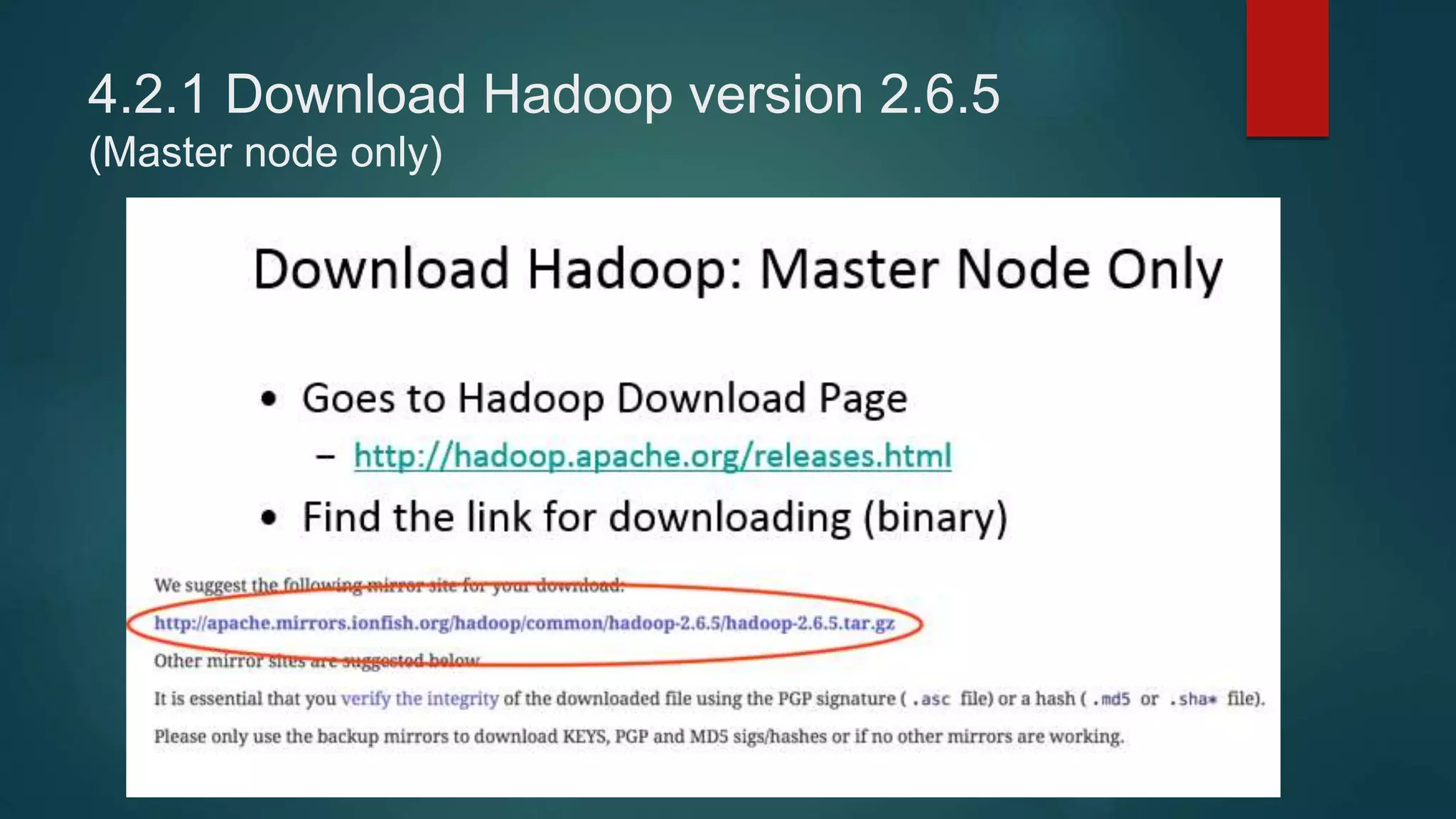

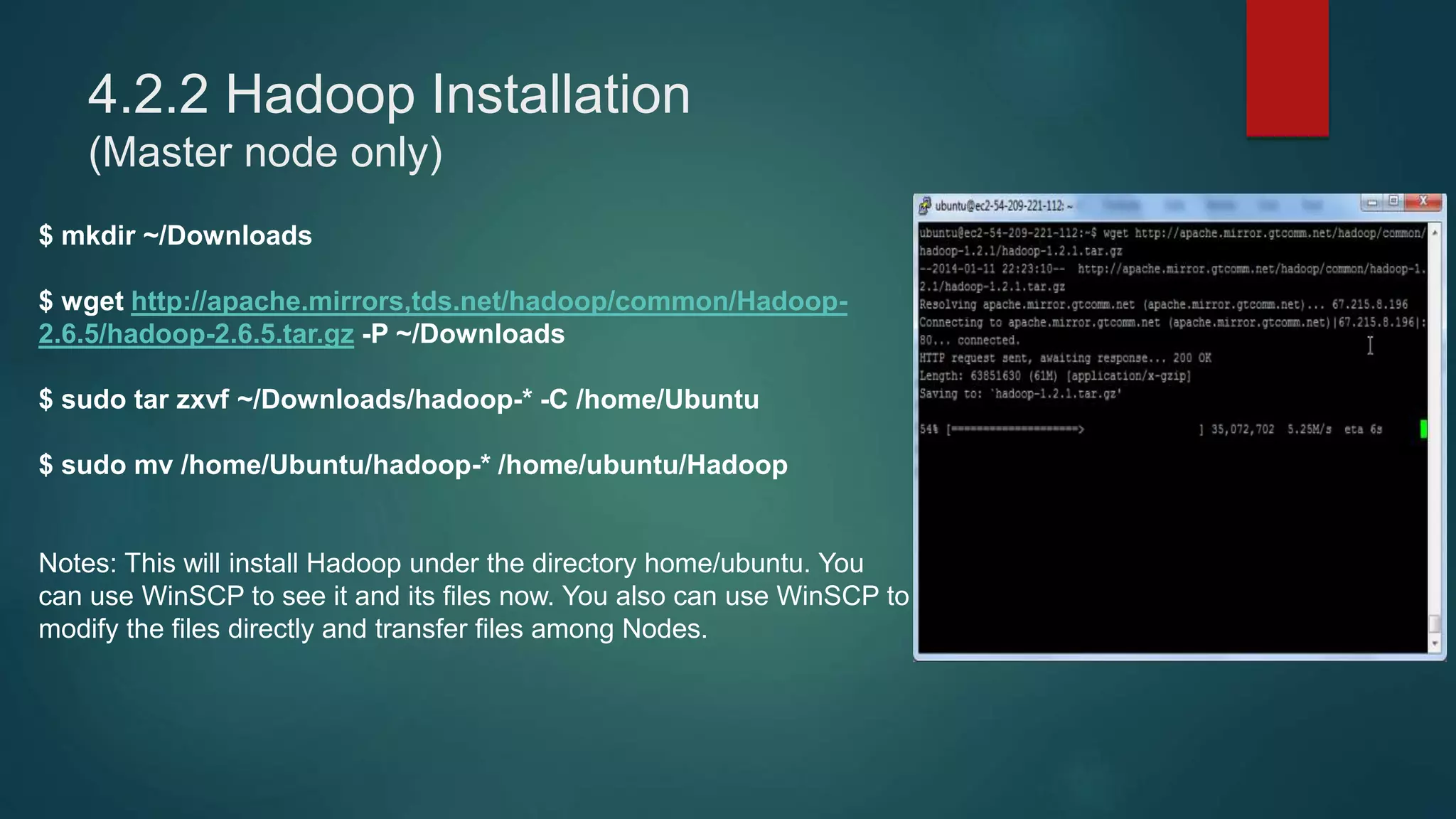

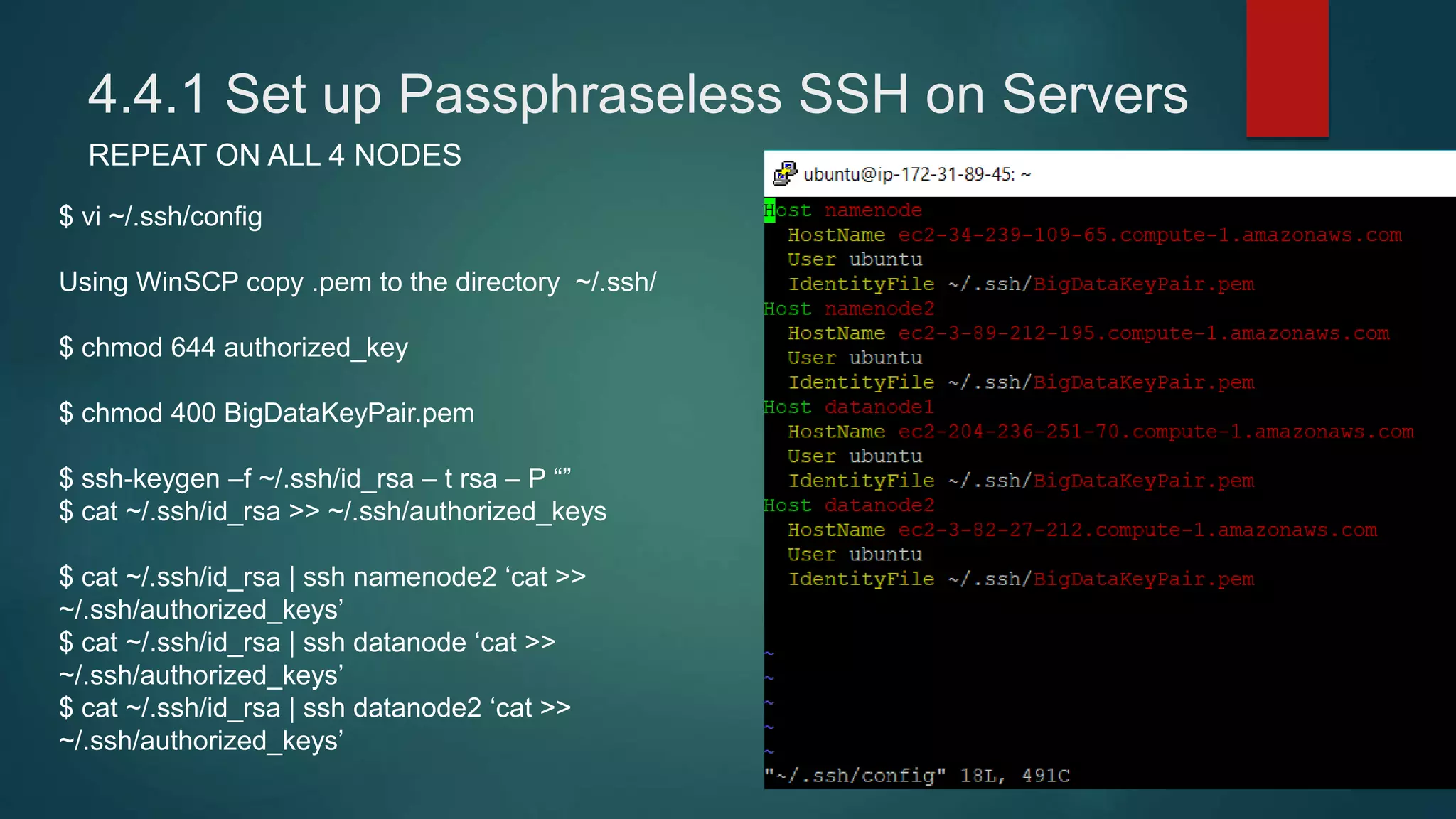

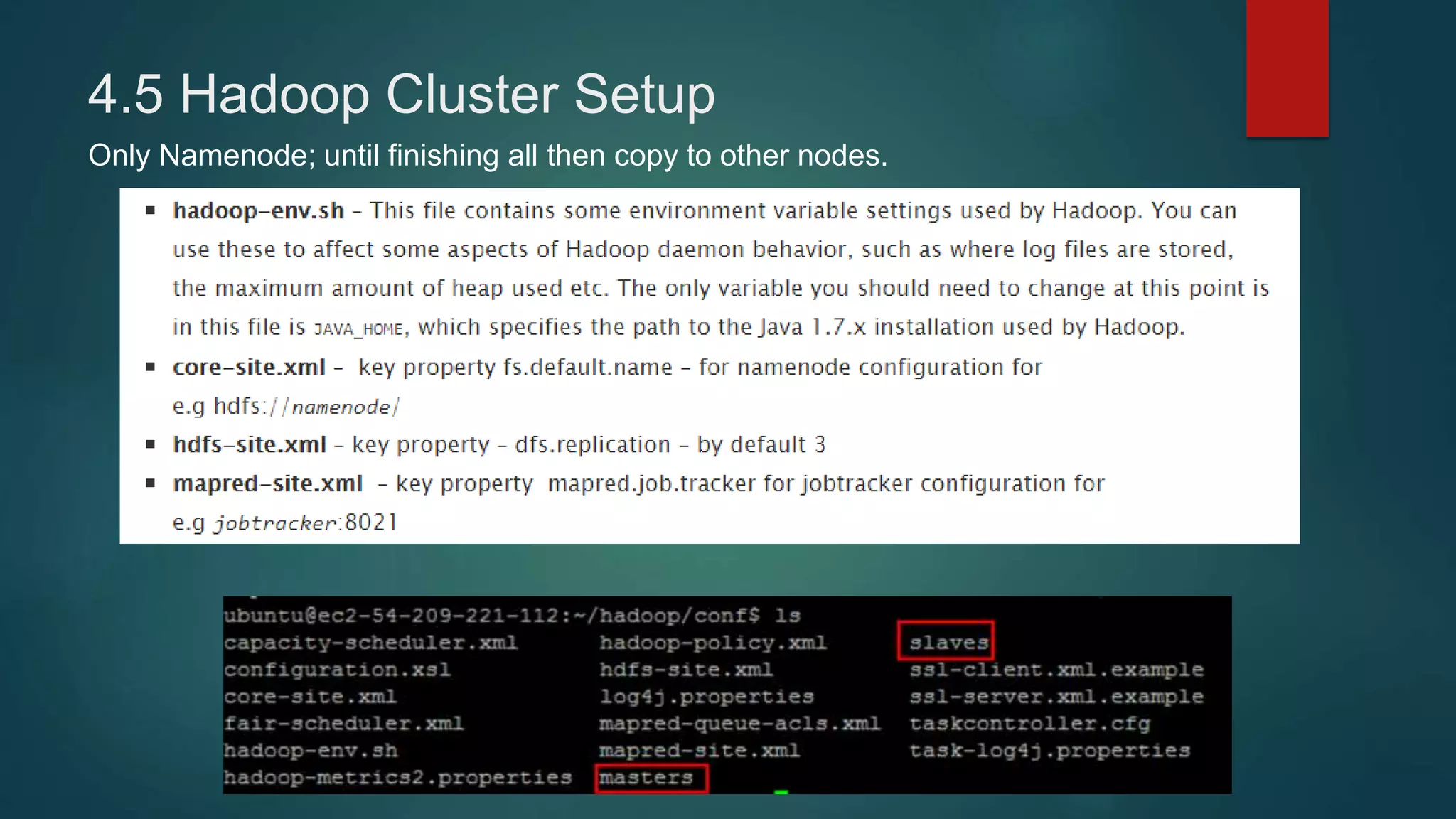

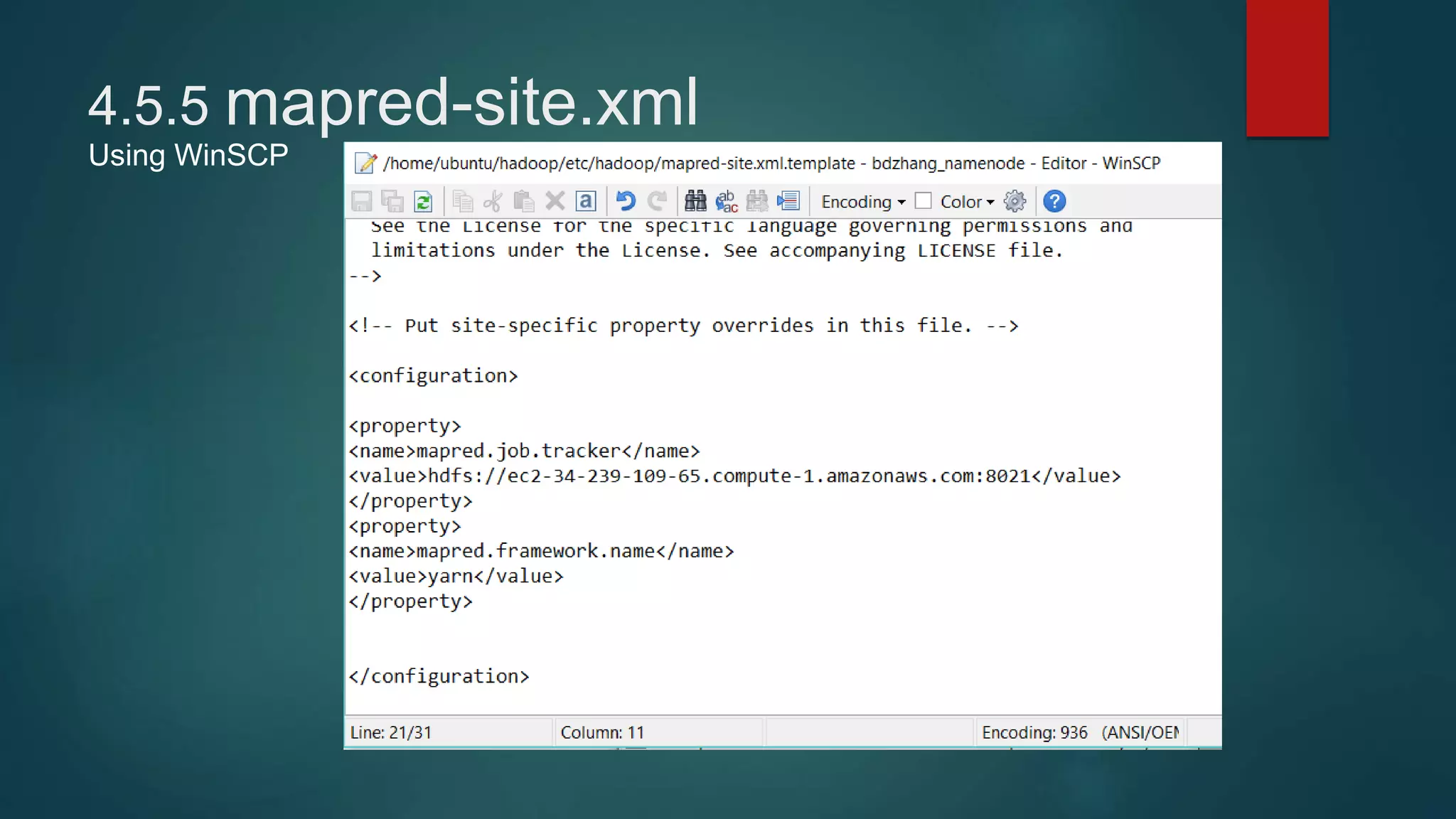

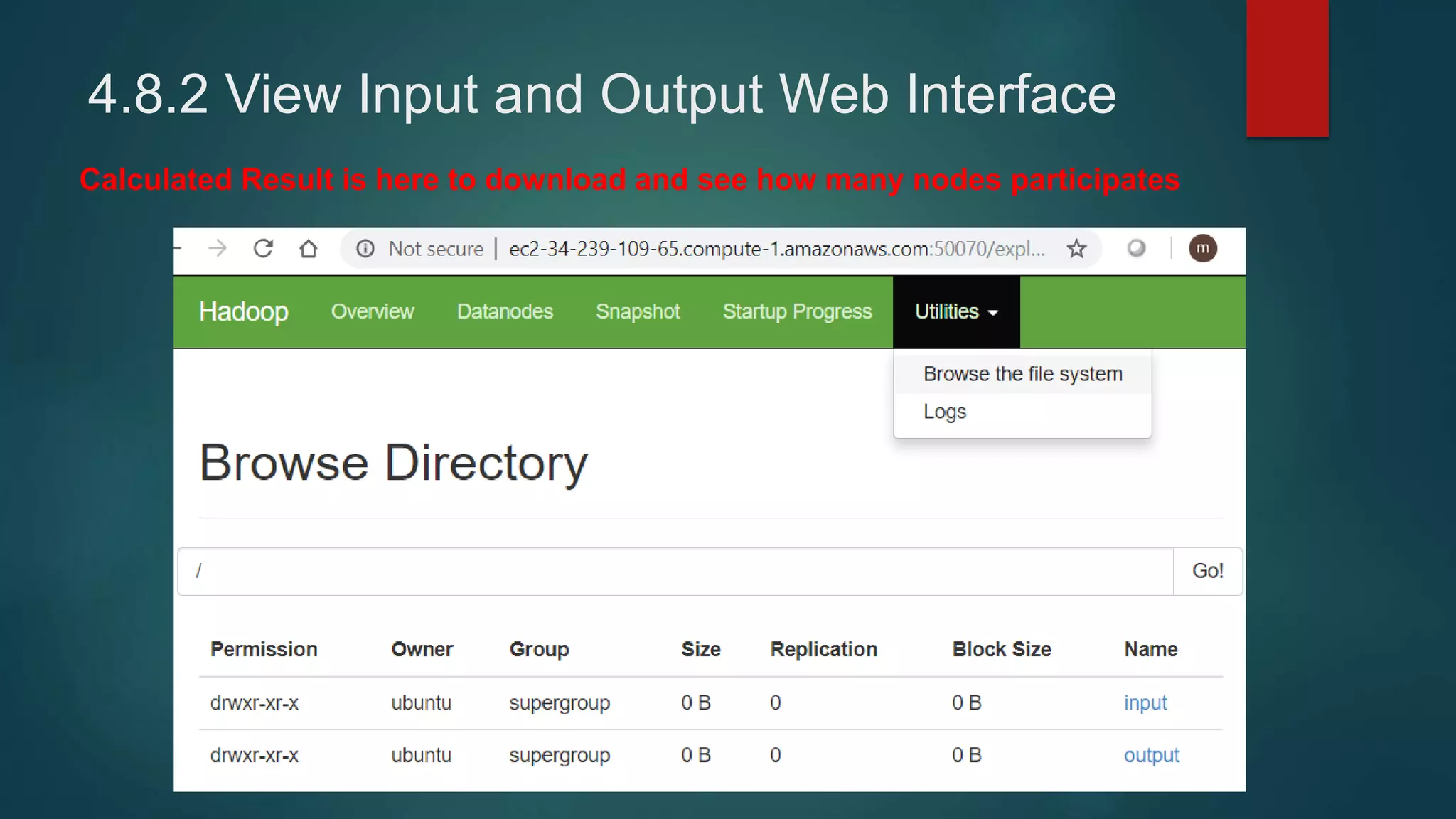
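The same `.ppk` key can drive WinSCP from the command line, which helps when the same files (for example a Hadoop tarball or config files) must reach all four nodes. The sketch below generates a WinSCP script using the `open sftp://user@host/ -privatekey="..."`, `put`, `close`, and `exit` commands. It can then be run with `winscp.com /script=upload.txt`. The host names, file paths, remote directory, and `ubuntu` user are hypothetical. An unattended first connection may also need the `-hostkey` switch on `open`, because WinSCP will not accept an unknown host key in batch mode.

```python
"""Generate a WinSCP script that uploads files to every cluster node.

Assumptions: winscp.com is installed, the key is a .ppk from PuTTYgen,
and the login user is "ubuntu". Hosts and paths are placeholders.
"""


def winscp_script(hosts, key_path, files, remote_dir="/home/ubuntu/",
                  user="ubuntu"):
    """Return script text that, for each host, opens an SFTP session with
    the private key, uploads every local file to remote_dir, and closes."""
    lines = []
    for host in hosts:
        lines.append(f'open sftp://{user}@{host}/ -privatekey="{key_path}"')
        for local in files:
            lines.append(f'put "{local}" {remote_dir}')
        lines.append("close")
    lines.append("exit")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    script = winscp_script(
        ["namenode-public-dns", "datanode1-public-dns"],  # hypothetical hosts
        r"C:\keys\hadoop-cluster.ppk",                     # hypothetical key
        [r"C:\downloads\hadoop.tar.gz"],                   # hypothetical file
    )
    with open("upload.txt", "w") as f:
        f.write(script)
    print(script)
```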

4) Installing Java and Hadoop, enabling passphraseless SSH access between nodes, and configuring the Hadoop Distributed File System (HDFS) and YARN
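The HDFS and YARN configuration in step 4 comes down to a few `*-site.xml` files plus a list of worker hosts. The following is a minimal sketch that renders them with the standard library. It assumes one master node (running the NameNode and ResourceManager) and three workers, an HDFS port of 9000, and Hadoop 3.x naming (Hadoop 2.x calls the `workers` file `slaves`). The property names `fs.defaultFS`, `dfs.replication`, `yarn.resourcemanager.hostname`, `yarn.nodemanager.aux-services`, and `mapreduce.framework.name` are standard Hadoop settings. The host names and the chosen values are assumptions.

```python
"""Render minimal Hadoop config files for a 1-master, 3-worker cluster.

Assumptions (hypothetical): the master hosts the NameNode and ResourceManager,
HDFS listens on port 9000, and Hadoop 3.x file naming ("workers") is used.
"""

import xml.etree.ElementTree as ET


def site_xml(properties):
    """Return a Hadoop <configuration> XML string from a name -> value dict."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(root, encoding="unicode")


def cluster_configs(master, workers, replication=3):
    """Map each config file name to its text contents."""
    # Each block replica must live on a distinct DataNode.
    if replication > len(workers):
        raise ValueError("replication exceeds the number of worker nodes")
    return {
        "core-site.xml": site_xml({"fs.defaultFS": f"hdfs://{master}:9000"}),
        "hdfs-site.xml": site_xml({"dfs.replication": replication}),
        "yarn-site.xml": site_xml({
            "yarn.resourcemanager.hostname": master,
            "yarn.nodemanager.aux-services": "mapreduce_shuffle",
        }),
        "mapred-site.xml": site_xml({"mapreduce.framework.name": "yarn"}),
        "workers": "\n".join(workers) + "\n",
    }


if __name__ == "__main__":
    # Hypothetical host names for the four EC2 instances.
    configs = cluster_configs("namenode", ["datanode1", "datanode2", "datanode3"])
    for filename, text in configs.items():
        print(f"--- {filename} ---")
        print(text)
```

The generated files would normally be copied into `$HADOOP_HOME/etc/hadoop/` on every node, for example with a WinSCP script like the one from step 3 or with `scp` once passphraseless SSH is working.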