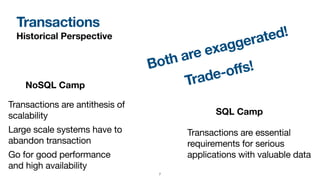

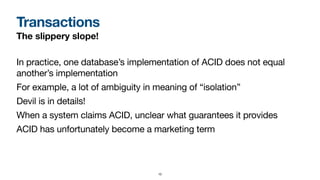

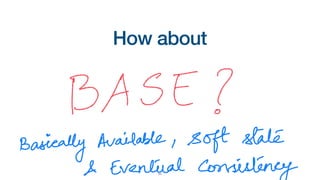

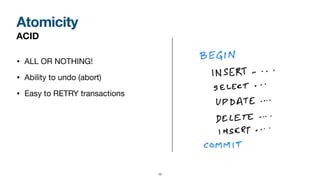

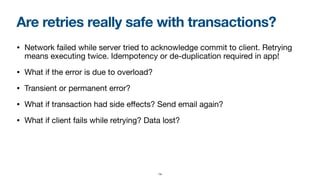

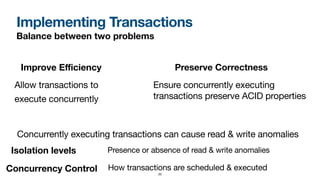

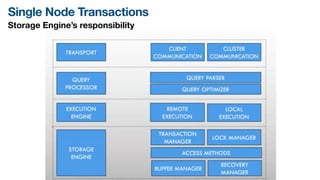

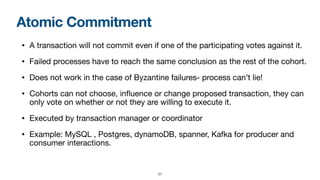

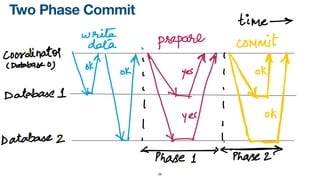

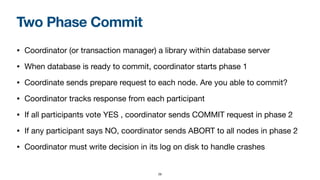

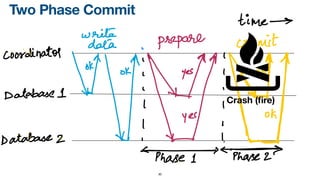

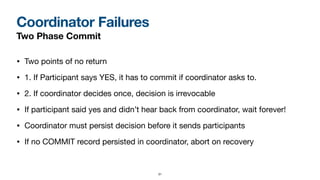

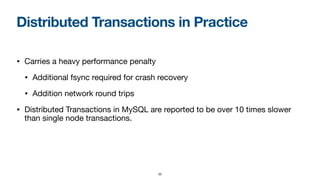

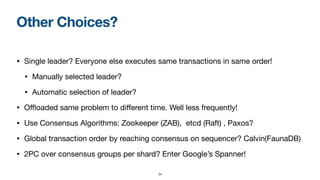

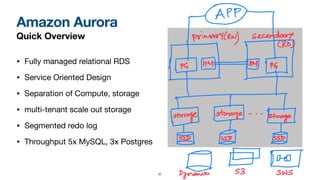

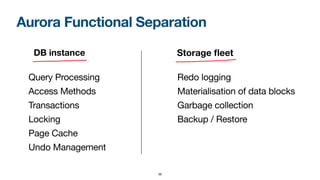

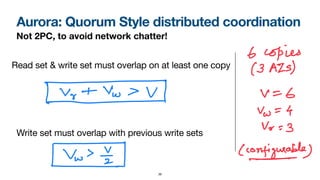

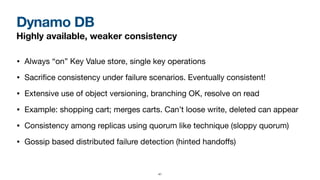

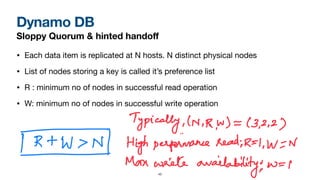

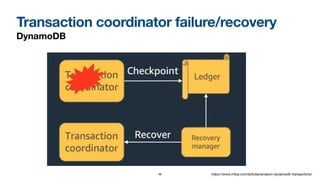

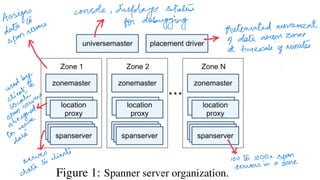

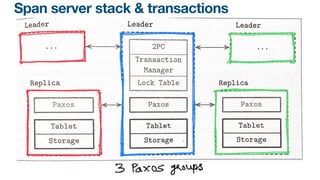

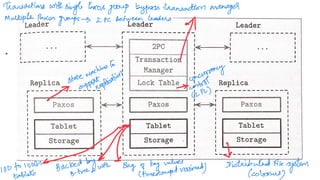

The document discusses the complexities of implementing transactions in modern databases, with attention to ACID properties, scalability, and performance trade-offs. It covers the history of transaction systems, distributed transactions, and implementation challenges such as two-phase commit and its alternatives. It also highlights specific database systems, including Amazon Aurora, DynamoDB, and Google Spanner, focusing on how each handles transactions and coordination.