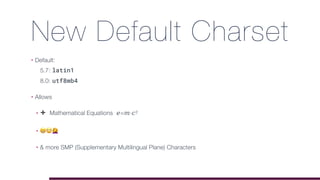

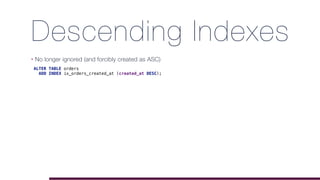

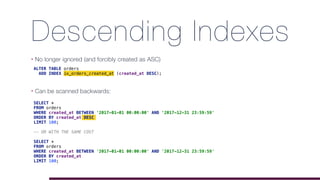

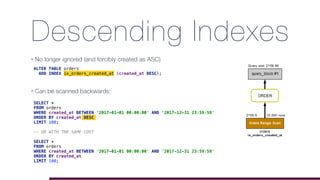

The document provides an overview of new features and improvements in MySQL 8.0, including the new default character set (utf8mb4) and collation, enhancements to the data dictionary, and several InnoDB performance improvements. Key features highlighted are atomic DDL, invisible and descending indexes, Common Table Expressions (CTEs), and window functions. The slides also cover changes to JSON handling and the new SQL roles, with SQL examples that apply these capabilities to data analysis.

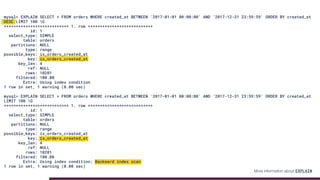

![Step 1: Count Appearances](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-29-320.jpg)

With `ONLY_FULL_GROUP_BY` enabled (it is part of the default `sql_mode`), this query is rejected:

```sql
SELECT *, COUNT(*) AS appearances FROM daily_show_guests GROUP BY year, occupation;
```

```text
[42000][1055] Expression #1 of SELECT list is not in GROUP BY clause
and contains nonaggregated column 'phpworld.daily_show_guests.id'
which is not functionally dependent on columns in GROUP BY clause;
this is incompatible with sql_mode=only_full_group_by
```

The `*` pulls in `id`, which is neither listed in the `GROUP BY` clause nor aggregated.

More information about only_full_group_by
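Below is a minimal sketch of two ways to make the Step 1 query valid under `ONLY_FULL_GROUP_BY`. It assumes only the `daily_show_guests` columns named in the query and error message (`year`, `occupation`, `id`).

- The first option selects nothing but the grouped columns and aggregates.
- The second keeps a nonaggregated column but wraps it in `ANY_VALUE()`, which tells MySQL that any value from the group is acceptable.

```sql
-- Option 1: select only the grouped columns plus aggregates
SELECT year, occupation, COUNT(*) AS appearances
FROM daily_show_guests
GROUP BY year, occupation
ORDER BY appearances DESC;

-- Option 2: keep a nonaggregated column, explicitly accepting any value
-- from each group (the returned id is arbitrary within its group)
SELECT year, occupation, ANY_VALUE(id) AS sample_id, COUNT(*) AS appearances
FROM daily_show_guests
GROUP BY year, occupation;
```

Option 1 is usually the better fix. `ANY_VALUE()` only suppresses the check, and the `id` it returns is not deterministic.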

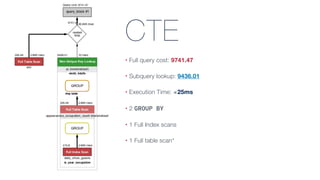

![CTE](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-35-320.jpg)

Common Table Expressions (CTEs):

- Act like a temporary table scoped to a single statement (similar to `CREATE [TEMPORARY] TABLE`)
- Don't need the `CREATE` privilege
- Can reference other CTEs
- Can be recursive
- Are easier to read
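As an illustration of the points above, the Step 1 aggregation can be named once with `WITH` and then reused by a second CTE. This is a sketch that assumes the `daily_show_guests` table from Step 1, with its `year` and `occupation` columns. It needs no `CREATE` privilege and leaves nothing behind after the statement finishes.

```sql
-- Most common guest occupation per year, built from two named CTEs
WITH appearances AS (
    -- Count guests per (year, occupation)
    SELECT year, occupation, COUNT(*) AS total
    FROM daily_show_guests
    GROUP BY year, occupation
),
top_per_year AS (
    -- References the first CTE: the highest count in each year
    SELECT year, MAX(total) AS max_total
    FROM appearances
    GROUP BY year
)
SELECT a.year, a.occupation, a.total
FROM appearances AS a
JOIN top_per_year AS t
  ON t.year = a.year AND t.max_total = a.total
ORDER BY a.year;
```

If two occupations tie for the top count in a year, both rows are returned.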

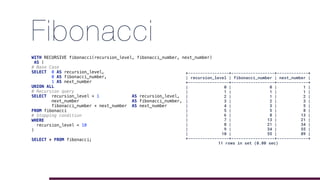

![Fibonacci](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-44-320.jpg)

```sql
WITH RECURSIVE fibonacci(fibonacci_number, next_number)
AS (
    # Base Condition
    SELECT
        0 AS fibonacci_number,
        1 AS next_number
    UNION ALL
    # Recursion Query
    SELECT
        next_number AS fibonacci_number,
        fibonacci_number + next_number AS next_number
    FROM fibonacci
)
SELECT * FROM fibonacci LIMIT 10;
```

The recursive part has no stop condition, so the outer `LIMIT 10` does not end the recursion. MySQL keeps generating rows until the sum no longer fits in a BIGINT:

```text
[22001][1690] Data truncation: BIGINT value is out of range in
'(`fibonacci`.`fibonacci_number` + `fibonacci`.`next_number`)'
```

Details
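One way to fix the query, modeled on the Fibonacci example in the MySQL 8.0 manual, is to carry a row counter `n` and stop the recursion inside the CTE instead of relying on the outer `LIMIT`. The counter column is an addition that is not on the slide. A sketch:

```sql
WITH RECURSIVE fibonacci (n, fibonacci_number, next_number) AS (
    -- Base condition: first row, counter starts at 1
    SELECT 1, 0, 1
    UNION ALL
    -- Recursion query: shift the pair forward and increment the counter
    SELECT n + 1, next_number, fibonacci_number + next_number
    FROM fibonacci
    -- Stop condition: no new rows once 10 have been produced
    WHERE n < 10
)
SELECT fibonacci_number FROM fibonacci ORDER BY n;
```

This returns 10 rows: 0, 1, 1, 2, 3, 5, 8, 13, 21, 34.

As a further safety net, MySQL aborts any recursive CTE that exceeds `cte_max_recursion_depth` levels (1000 by default). A missing stop condition therefore fails with an error instead of running indefinitely, even when the values never overflow.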

![5.7: First Duplicate Wins](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-57-320.jpg)

In 5.7, when a key appears more than once, the first definition is stored:

```sql
SELECT JSON_OBJECT('clients', 32, 'options', '[active, inactive]',
                   'clients', 64, 'clients', 128) AS result;
```

```text
+--------------------------------------------------+
| result                                           |
+--------------------------------------------------+
| {"clients": 32, "options": "[active, inactive]"} |
+--------------------------------------------------+
1 row in set (0.00 sec)
```

![8.0: Last Duplicate Wins](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-58-320.jpg)

In 8.0, the last definition is stored:

```sql
SELECT JSON_OBJECT('clients', 32, 'options', '[active, inactive]',
                   'clients', 64, 'clients', 128) AS result;
```

```text
+---------------------------------------------------+
| result                                            |
+---------------------------------------------------+
| {"clients": 128, "options": "[active, inactive]"} |
+---------------------------------------------------+
1 row in set (0.00 sec)
```
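The change matters for any code that builds JSON with repeated keys. According to the MySQL manual, the "last duplicate wins" behavior starts with 8.0.3. Below is a small sketch for checking which value a given server keeps.

Note also that `'[active, inactive]'` in the slides is stored as a JSON string, not an array. `JSON_ARRAY()` builds a real array.

```sql
-- Expected: 128 on 8.0.3 and later (last duplicate wins), 32 on 5.7
SELECT JSON_EXTRACT(
         JSON_OBJECT('clients', 32, 'clients', 64, 'clients', 128),
         '$.clients') AS clients;

-- Build "options" as a real JSON array instead of a string
SELECT JSON_OBJECT('clients', 128,
                   'options', JSON_ARRAY('active', 'inactive')) AS result;
-- {"clients": 128, "options": ["active", "inactive"]}
```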

![Preserve & Patch](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-61-320.jpg)

| Arguments | `JSON_MERGE_PRESERVE()` | `JSON_MERGE_PATCH()` |
|---|---|---|
| `('[1, 2]', '[true, false]')` | `[1, 2, true, false]` | `[true, false]` |
| `('{"name": "x"}', '{"id": 47}')` | `{"id": 47, "name": "x"}` | `{"id": 47, "name": "x"}` |
| `('1', 'true')` | `[1, true]` | `true` |
| `('[1, 2]', '{"id": 47}')` | `[1, 2, {"id": 47}]` | `{"id": 47}` |
| `('{"a": 1, "b": 2}', '{"a": 3, "c": 4}')` | `{"a": [1, 3], "b": 2, "c": 4}` | `{"a": 3, "b": 2, "c": 4}` |
| `('{"a": 1, "b":2}', '{"a": 3, "c":4}', '{"a": 5, "d":6}')` | `{"a": [1, 3, 5], "b": 2, "c": 4, "d": 6}` | `{"a": 5, "b": 2, "c": 4, "d": 6}` |

Examples taken from the MySQL 8.0 documentation.
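In practice, `JSON_MERGE_PATCH()` suits partial updates of a stored document because it follows RFC 7396:

- Keys in the second document overwrite keys in the first.
- A JSON `null` removes a key.

`JSON_MERGE_PRESERVE()` (the new name for 5.7's `JSON_MERGE()`, which is now deprecated) keeps every value. Below is a sketch using a hypothetical `user_settings` table; the table and its contents are illustrative only.

```sql
-- Hypothetical table for illustration only
CREATE TABLE user_settings (
    id       INT PRIMARY KEY,
    settings JSON NOT NULL
);

INSERT INTO user_settings (id, settings)
VALUES (1, '{"theme": "dark", "beta": true, "tags": ["php"]}');

-- PATCH: "theme" is overwritten and "beta" is removed because its new value is null
UPDATE user_settings
SET settings = JSON_MERGE_PATCH(settings, '{"theme": "light", "beta": null}')
WHERE id = 1;
-- settings is now {"tags": ["php"], "theme": "light"}

-- PRESERVE: nothing is dropped; the two "tags" arrays are concatenated
SELECT JSON_MERGE_PRESERVE(settings, '{"tags": ["mysql"]}') AS merged
FROM user_settings
WHERE id = 1;
-- {"tags": ["php", "mysql"], "theme": "light"}
```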

![Roles - Notes](https://image.slidesharecdn.com/phpworld-171115160558/85/MySQL-8-0-Preview-What-Is-Coming-67-320.jpg)

- Mandatory roles can be set up in the MySQL config file:

  ```ini
  [mysqld]
  mandatory_roles='developer,readonly@localhost,dba@%'
  ```

- Or `mandatory_roles` can be set at runtime:

  ```sql
  SET PERSIST mandatory_roles = 'developer,readonly@localhost,dba@%';
  ```

- A role can be revoked from a user:

  ```sql
  REVOKE readonly FROM 'bart_simpson'@'localhost';
  ```

- By default, `root` has no active role: `SELECT CURRENT_ROLE()` returns `NONE`.
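For context, here is a minimal sketch of the role workflow these notes assume. The role `readonly`, the account `'bart_simpson'@'localhost'`, and the `phpworld` schema come from the slides. The password is a placeholder and the granted privilege is illustrative.

```sql
-- Create a role and give it privileges (illustrative: read-only on phpworld)
CREATE ROLE 'readonly';
GRANT SELECT ON phpworld.* TO 'readonly';

-- Create an account and grant it the role (placeholder password)
CREATE USER 'bart_simpson'@'localhost' IDENTIFIED BY 'change-me';
GRANT 'readonly' TO 'bart_simpson'@'localhost';

-- Granted roles are inactive until enabled; make this one active at login
SET DEFAULT ROLE 'readonly' TO 'bart_simpson'@'localhost';

-- In a new session as bart_simpson, this should report `readonly`@`%`
SELECT CURRENT_ROLE();
```

Alternatively, setting `activate_all_roles_on_login` to `ON` activates every granted role at login, including the mandatory ones.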