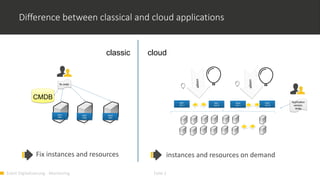

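1) Monitoring cloud applications presents challenges that traditional on-premises applications do not, because cloud infrastructure is dynamic and elastic. Instances and containers, along with their addresses, are created and destroyed as workloads scale, so a static list of hosts to monitor quickly becomes outdated.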

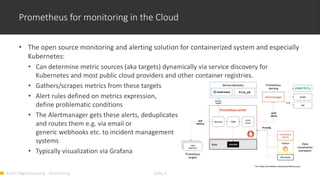

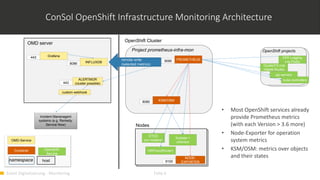

2) Prometheus is an open-source monitoring system that is well suited to containerized, cloud-native environments such as Kubernetes. It discovers scrape targets dynamically and can collect large numbers of time-series metrics from them.
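Dynamic discovery means Prometheus repeatedly re-reads a service-discovery source and updates its target list as pods appear and disappear. In Kubernetes, that source is the Kubernetes API, configured through `kubernetes_sd_configs`. The plain-Python sketch below mimics the idea under several assumptions. The `Pod` record and the `discover_targets` helper are hypothetical. The `prometheus.io/scrape`, `prometheus.io/port` and `prometheus.io/path` annotations are a widely used convention, not a Prometheus built-in. In a real deployment this filtering is done with relabeling rules in the Prometheus configuration, not in application code.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified stand-in for pod metadata returned by service
# discovery; this is NOT the real Kubernetes API object.
@dataclass
class Pod:
    name: str
    ip: str
    annotations: dict[str, str] = field(default_factory=dict)

def discover_targets(pods: list[Pod]) -> list[str]:
    """Return 'ip:port/path' scrape targets for pods that opt in via annotations."""
    targets = []
    for pod in pods:
        ann = pod.annotations
        # Convention, not a Prometheus built-in: only scrape pods that opt in.
        if ann.get("prometheus.io/scrape") != "true":
            continue
        port = ann.get("prometheus.io/port", "8080")  # assumed default port
        path = ann.get("prometheus.io/path", "/metrics")
        targets.append(f"{pod.ip}:{port}{path}")
    return targets

# Re-running discovery after a scale-out picks up the new pod automatically,
# while the pod without the opt-in annotation is ignored.
opt_in = {"prometheus.io/scrape": "true", "prometheus.io/port": "8000"}
before = [Pod("web-1", "10.0.0.5", opt_in)]
after = before + [Pod("web-2", "10.0.0.6", opt_in), Pod("db-1", "10.0.0.7")]
print(discover_targets(before))  # ['10.0.0.5:8000/metrics']
print(discover_targets(after))   # ['10.0.0.5:8000/metrics', '10.0.0.6:8000/metrics']
```

Because the target list is recomputed on every pass instead of being hard-coded, scaling events need no manual change to the monitoring configuration. This is the property that makes the pull-plus-discovery model fit elastic clusters.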

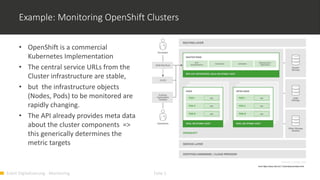

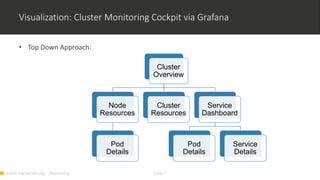

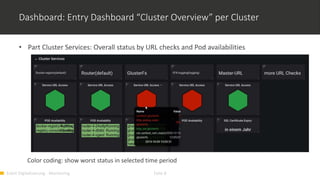

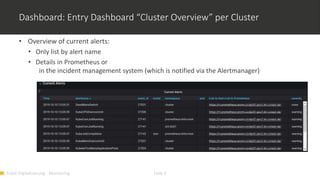

3) OMD Labs integrates tools such as Prometheus and Grafana to monitor complex, dynamically changing infrastructures such as OpenShift clusters. Prometheus collects the metrics, and Grafana dashboards visualize the health and performance of cluster components, from nodes down to pods and services.
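Grafana panels like these are typically backed by PromQL queries sent to Prometheus's HTTP API. The sketch below builds an instant query against the `/api/v1/query` endpoint and parses the JSON vector it returns. Several details are assumptions, not taken from OMD Labs. The base URL is hypothetical, since the real address depends on how the OMD site exposes Prometheus. The per-pod CPU query is an illustrative example using the cAdvisor metric `container_cpu_usage_seconds_total`, not a query from the OMD Labs dashboards. The sample response is hand-written for illustration.

```python
import json
from urllib.parse import urlencode

# Hypothetical base URL; adjust to wherever the site's Prometheus is reachable.
PROMETHEUS_URL = "http://omd.example.com/mysite/prometheus"

# Illustrative PromQL: per-pod CPU usage in cores, averaged over 5 minutes.
CPU_BY_POD = "sum by (namespace, pod) (rate(container_cpu_usage_seconds_total[5m]))"

def instant_query_url(base_url: str, promql: str) -> str:
    """Build a GET URL for the Prometheus HTTP API instant-query endpoint."""
    return f"{base_url}/api/v1/query?{urlencode({'query': promql})}"

def parse_vector(body: str) -> dict[tuple[str, str], float]:
    """Map (namespace, pod) -> sample value from an instant-vector response."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    result = {}
    for series in payload["data"]["result"]:
        labels = series["metric"]
        _timestamp, value = series["value"]  # [unix_timestamp, "number as string"]
        result[(labels.get("namespace", ""), labels.get("pod", ""))] = float(value)
    return result

# A live call would fetch the URL, e.g. with urllib.request.urlopen(url).read();
# here a hand-written sample body stands in for the server's reply.
url = instant_query_url(PROMETHEUS_URL, CPU_BY_POD)
sample = ('{"status":"success","data":{"resultType":"vector","result":'
          '[{"metric":{"namespace":"shop","pod":"web-1"},"value":[1700000000.0,"0.25"]}]}}')
print(parse_vector(sample))  # {('shop', 'web-1'): 0.25}
```

Grouping by `namespace` and `pod` in PromQL keeps the aggregation on the Prometheus side. The client then only has to map label sets to numbers, which is roughly what a dashboard panel does when it draws one series per pod.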