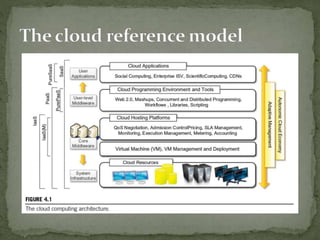

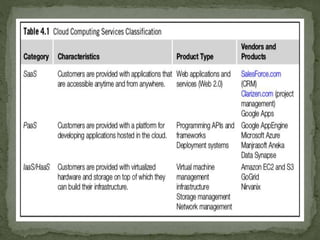

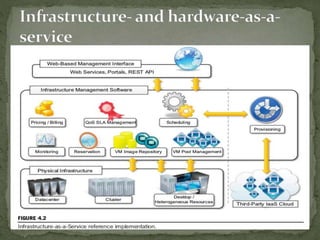

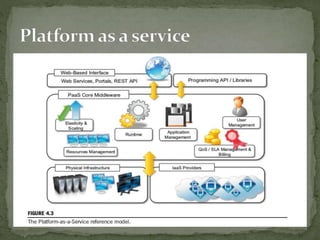

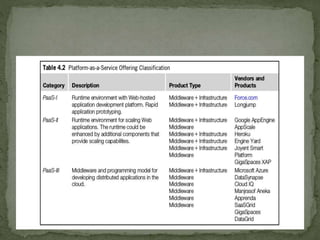

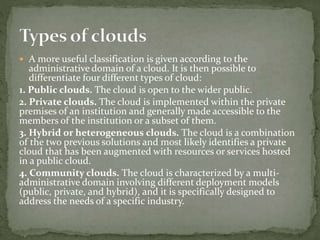

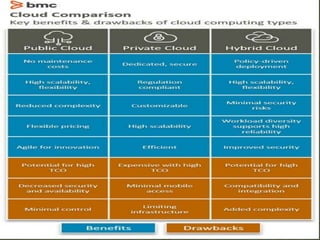

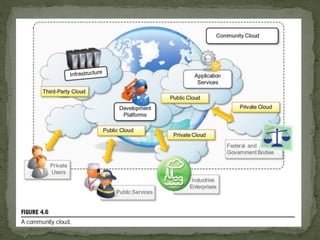

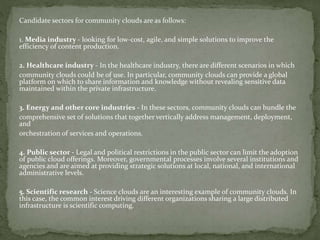

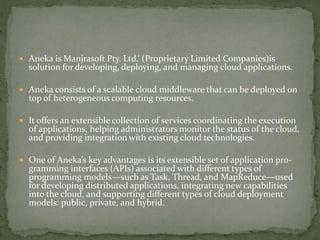

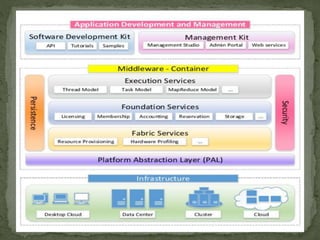

This document discusses cloud computing architecture and platforms. It defines cloud computing as the on-demand delivery of IT services over the internet, including hardware, software, and platforms. It describes the characteristics of cloud computing solutions and their main categories: SaaS, PaaS, and IaaS. PaaS provides development platforms and automates application deployment. Community clouds can benefit specific industries by removing vendor dependency and allowing open competition.

The core infrastructure of the system is based on .NET technology, which allows the Aneka container to be portable across different platforms and operating systems. Any platform featuring an ECMA-334 [52] and ECMA-335 [53] compatible environment can host and run an instance of the Aneka container.

The Common Language Infrastructure (CLI), the specification defined in the ECMA-335 standard, provides a common runtime environment and application model for executing programs, but it does not provide any interface to access the hardware or to collect performance data from the hosting operating system.

![Module 2: Cloud Computing Architecture, slide on Aneka container portability](https://image.slidesharecdn.com/module2-cloudcomputingarchitecture-220603070940-8a790638/85/Module-2-Cloud-Computing-Architecture-pptx-38-320.jpg)
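The gap described above, a portable runtime with no built-in way to read performance data from the host, is often handled by putting a uniform interface in front of OS-specific probes. The following is only a minimal sketch of that general idea in Python, not Aneka's actual design or API. The names `HostInfo`, `LOAD_PROBES`, and `collect_host_info` are hypothetical and chosen for illustration.

```python
import os
import platform
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class HostInfo:
    """Uniform view of the host, independent of the operating system."""
    os_name: str
    cpu_count: int
    load_average: Optional[float]  # None when the host offers no probe


def _posix_load() -> Optional[float]:
    # os.getloadavg() exists only on Unix-like systems and may raise OSError.
    try:
        return os.getloadavg()[0]
    except (AttributeError, OSError):
        return None


def _unsupported_load() -> Optional[float]:
    # Fallback for hosts without a registered probe (hypothetical policy).
    return None


# Hypothetical registry: one OS-specific probe per platform name.
LOAD_PROBES: Dict[str, Callable[[], Optional[float]]] = {
    "Linux": _posix_load,
    "Darwin": _posix_load,
}


def collect_host_info(os_name: Optional[str] = None) -> HostInfo:
    """Pick the probe for the given (or detected) OS and return uniform data."""
    name = os_name or platform.system()
    probe = LOAD_PROBES.get(name, _unsupported_load)
    return HostInfo(
        os_name=name,
        cpu_count=os.cpu_count() or 1,
        load_average=probe(),
    )


if __name__ == "__main__":
    print(collect_host_info())
```

Callers see the same `HostInfo` fields on every platform. Supporting a new operating system means registering one more probe rather than changing the calling code.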