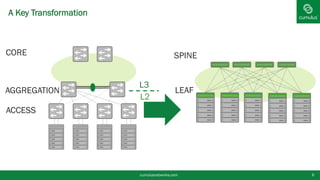

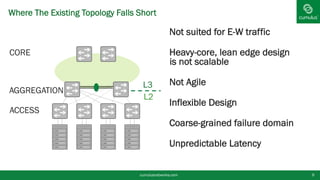

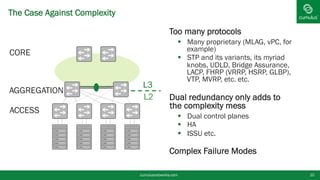

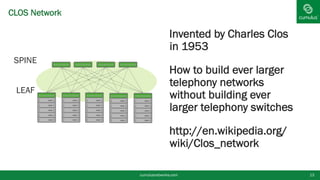

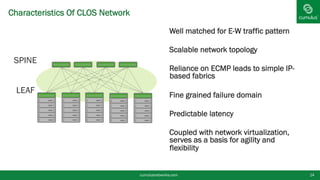

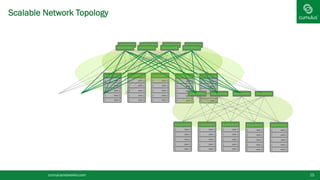

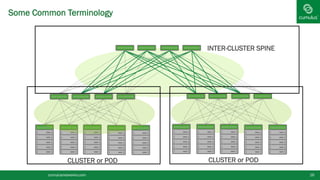

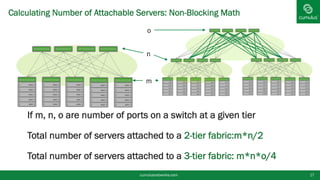

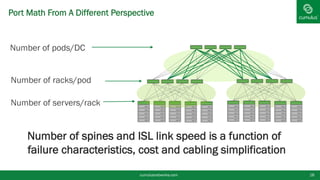

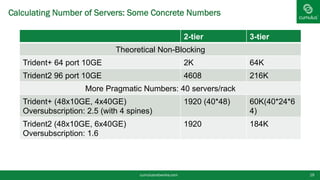

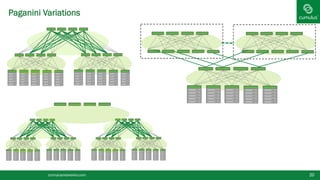

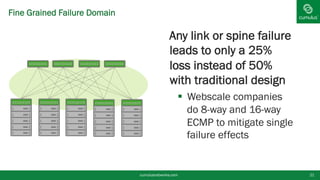

The document discusses the rise of the modern data center and presents the Clos network as an architecture well suited to its needs. A Clos topology is scalable, provides fine-grained failure domains, and simplifies network design by using only IP instead of layering on other, more complex protocols. Coupled with network virtualization, this architecture enables agility, flexibility, and simplified management of large-scale data center networks.
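To make the scalability and failure-domain claims concrete, here is a minimal sizing sketch for a two-tier leaf-spine Clos fabric. It is not taken from the slides. It assumes that every switch has the same port count, that each leaf has exactly one uplink to every spine, and that the name `leaf_spine_capacity` and its `oversubscription` parameter are purely illustrative.

```python
def leaf_spine_capacity(ports_per_switch: int, oversubscription: int = 1) -> dict:
    """Size a two-tier leaf-spine Clos fabric built from identical switches.

    Assumptions (illustrative, not from the source): all switches have the
    same port count, and every leaf has one uplink to every spine.
    """
    if ports_per_switch < 2 or oversubscription < 1:
        raise ValueError("need at least 2 ports and an oversubscription ratio >= 1")

    # Split each leaf's ports so that downlinks : uplinks is roughly oversubscription : 1.
    uplinks = ports_per_switch // (oversubscription + 1)
    if uplinks < 1:
        raise ValueError("port count too small for this oversubscription ratio")
    downlinks = ports_per_switch - uplinks

    spines = uplinks            # one uplink per spine, so the spine count equals uplinks
    leaves = ports_per_switch   # each spine port connects to exactly one leaf

    return {
        "spines": spines,
        "leaves": leaves,
        "servers": leaves * downlinks,
        # Losing one spine removes only this fraction of leaf-to-leaf bandwidth.
        "bandwidth_lost_per_spine_failure": 1 / spines,
    }


if __name__ == "__main__":
    print(leaf_spine_capacity(64))
    print(leaf_spine_capacity(64, oversubscription=3))
```

Under these assumptions, capacity grows by adding leaves (more server ports) or spines (more bandwidth), and a single spine failure costs only a `1 / spines` share of the fabric's bandwidth, which is one way to read the "fine-grained failure domain" point.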