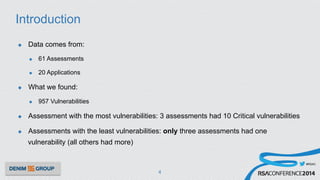

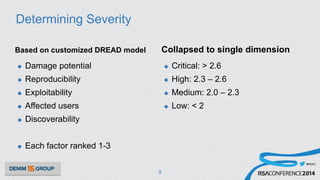

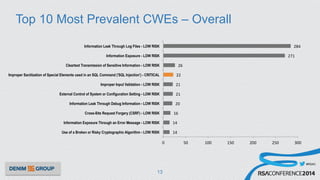

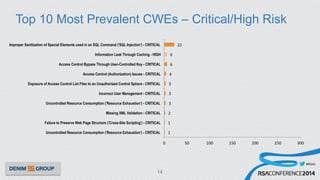

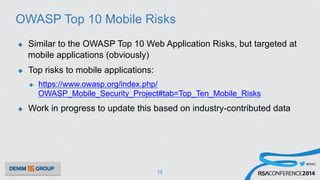

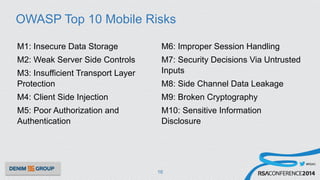

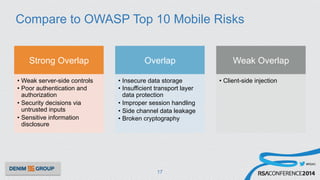

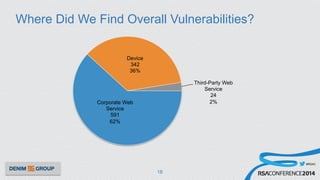

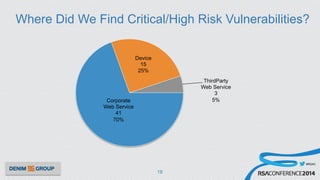

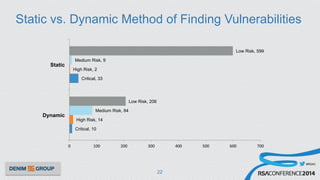

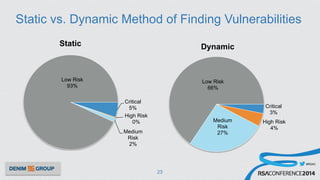

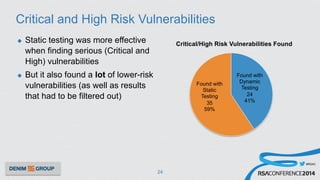

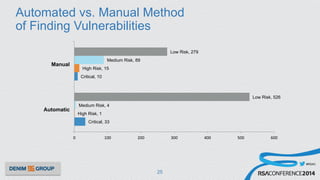

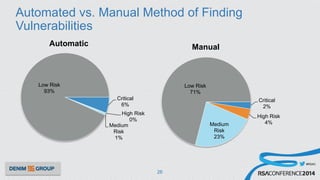

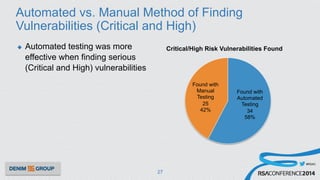

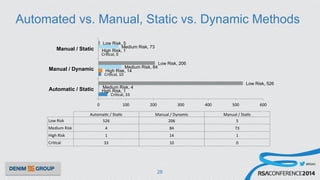

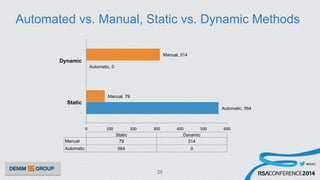

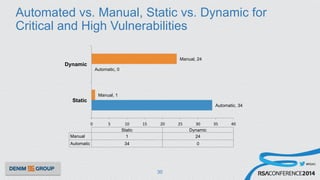

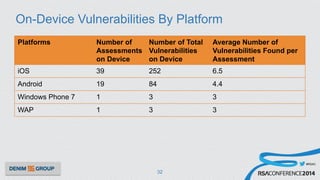

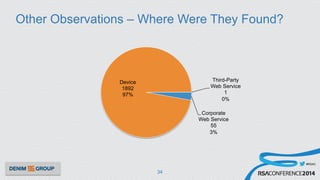

The document reports the results of 61 mobile application security assessments, which together revealed 957 vulnerabilities. The critical vulnerabilities were found primarily in corporate web services. The document describes the methodology used, including static and dynamic testing approaches, and classifies the vulnerabilities with a taxonomy based on MITRE's Common Weakness Enumeration (CWE). Its recommendations call for combining automated and manual testing, since neither approach alone gives comprehensive coverage of the complexities of mobile application security.
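To make the headline figures and the CWE-based taxonomy concrete, the following is a minimal sketch of how findings could be tallied by CWE identifier and how the average number of findings per assessment follows from the totals above. The record layout, the field names (`assessment`, `cwe`, `severity`, `method`), and the sample records are assumptions made for illustration; they are not taken from the assessment data. Only the totals 957 and 61 come from the document.

```python
from collections import Counter

# Hypothetical sample records. The CWE IDs are real MITRE entries,
# but these findings are illustrative and not from the report.
SAMPLE_FINDINGS = [
    {"assessment": "app-01", "cwe": "CWE-312", "severity": "high", "method": "static"},
    {"assessment": "app-01", "cwe": "CWE-295", "severity": "critical", "method": "dynamic"},
    {"assessment": "app-02", "cwe": "CWE-312", "severity": "medium", "method": "static"},
    {"assessment": "app-02", "cwe": "CWE-798", "severity": "critical", "method": "static"},
]


def tally_by_cwe(findings):
    """Count findings per CWE identifier."""
    return Counter(f["cwe"] for f in findings)


def tally_by_method(findings):
    """Count findings per testing approach (static or dynamic)."""
    return Counter(f["method"] for f in findings)


def mean_per_assessment(total_findings, assessment_count):
    """Average number of findings per assessment."""
    return total_findings / assessment_count


if __name__ == "__main__":
    print(tally_by_cwe(SAMPLE_FINDINGS).most_common())
    print(tally_by_method(SAMPLE_FINDINGS))
    # Totals reported in the document: 957 vulnerabilities, 61 assessments.
    print(round(mean_per_assessment(957, 61), 1))
```

With the reported totals, the average works out to roughly 15.7 findings per assessment. The per-CWE and per-method counts depend entirely on the sample records and say nothing about the real distribution.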