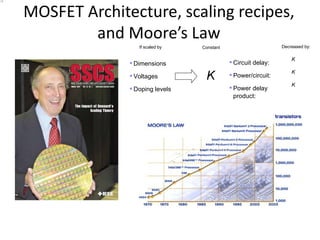

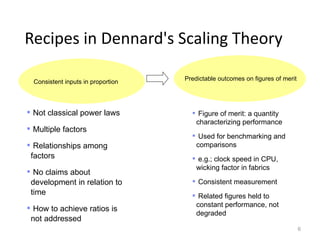

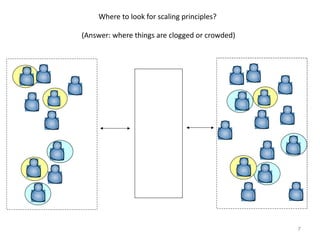

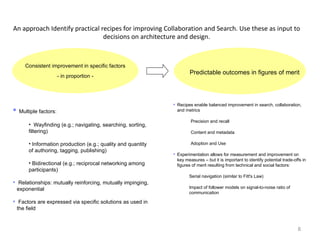

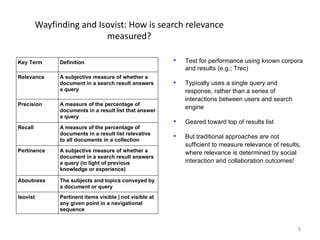

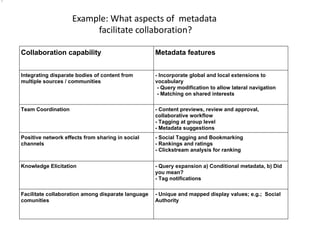

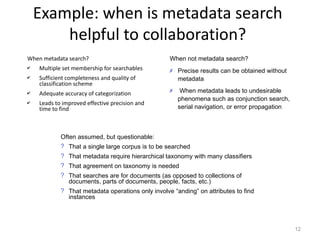

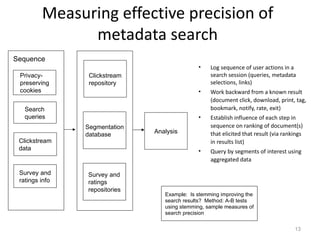

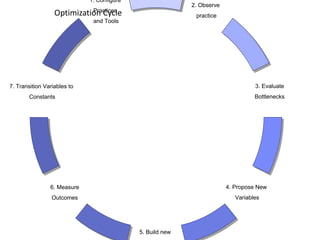

The document discusses optimization cycles for improving collaboration and search practices. It notes that multiple factors should be kept in constant ratios to achieve predictable outcomes in key metrics, and that experiments make it possible to measure these figures of merit, identify tradeoffs, and find potential improvements. It also gives examples of how aspects of metadata and measurements of effective precision can facilitate collaboration.