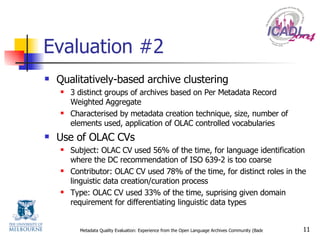

This presentation describes tools for evaluating metadata quality in the Open Language Archives Community (OLAC). It covers the motivation, algorithm design, and implementation of a metadata quality evaluation suite. The suite's metrics score individual records and entire archives on adherence to standards and use of controlled vocabularies. Applied to OLAC data, the evaluation showed that quality and vocabulary usage vary across archives of different sizes.

![Metadata Quality Evaluation: Experience from the Open Language Archives Community Baden Hughes Department of Computer Science and Software Engineering University of Melbourne [email_address]](https://image.slidesharecdn.com/metadata-quality-evaluation-experience-from-the-open-language-archives-community-1205876612500465-5/75/Metadata-Quality-Evaluation-Experience-from-the-Open-Language-Archives-Community-1-2048.jpg)

![Demo Metadata quality report on all OLAC Data Providers [ Live ] [ Local ] Metadata quality report for a single OLAC data provider (PARADISEC) [ Live ] [ Local ]](https://image.slidesharecdn.com/metadata-quality-evaluation-experience-from-the-open-language-archives-community-1205876612500465-5/85/Metadata-Quality-Evaluation-Experience-from-the-Open-Language-Archives-Community-9-320.jpg)