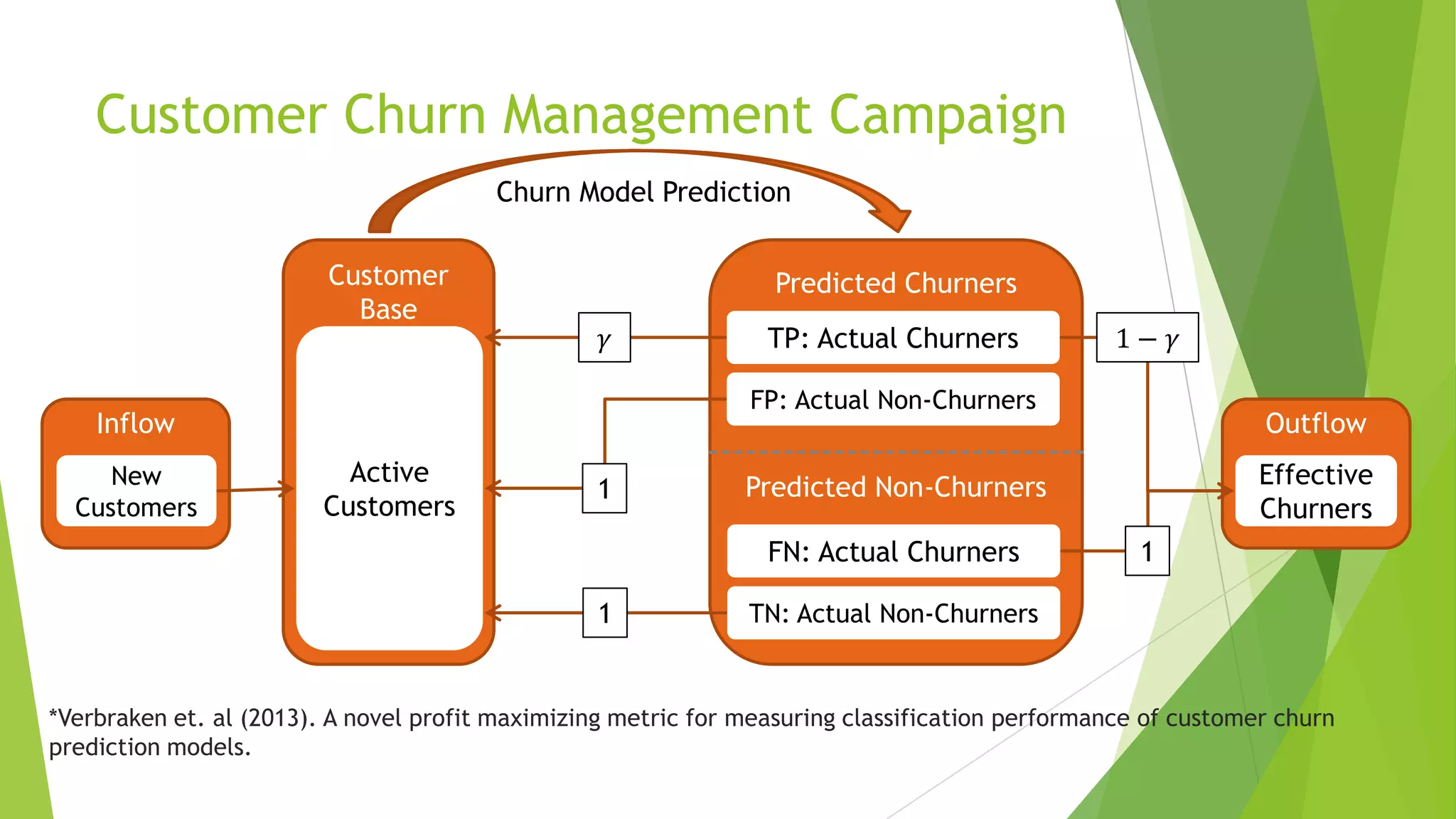

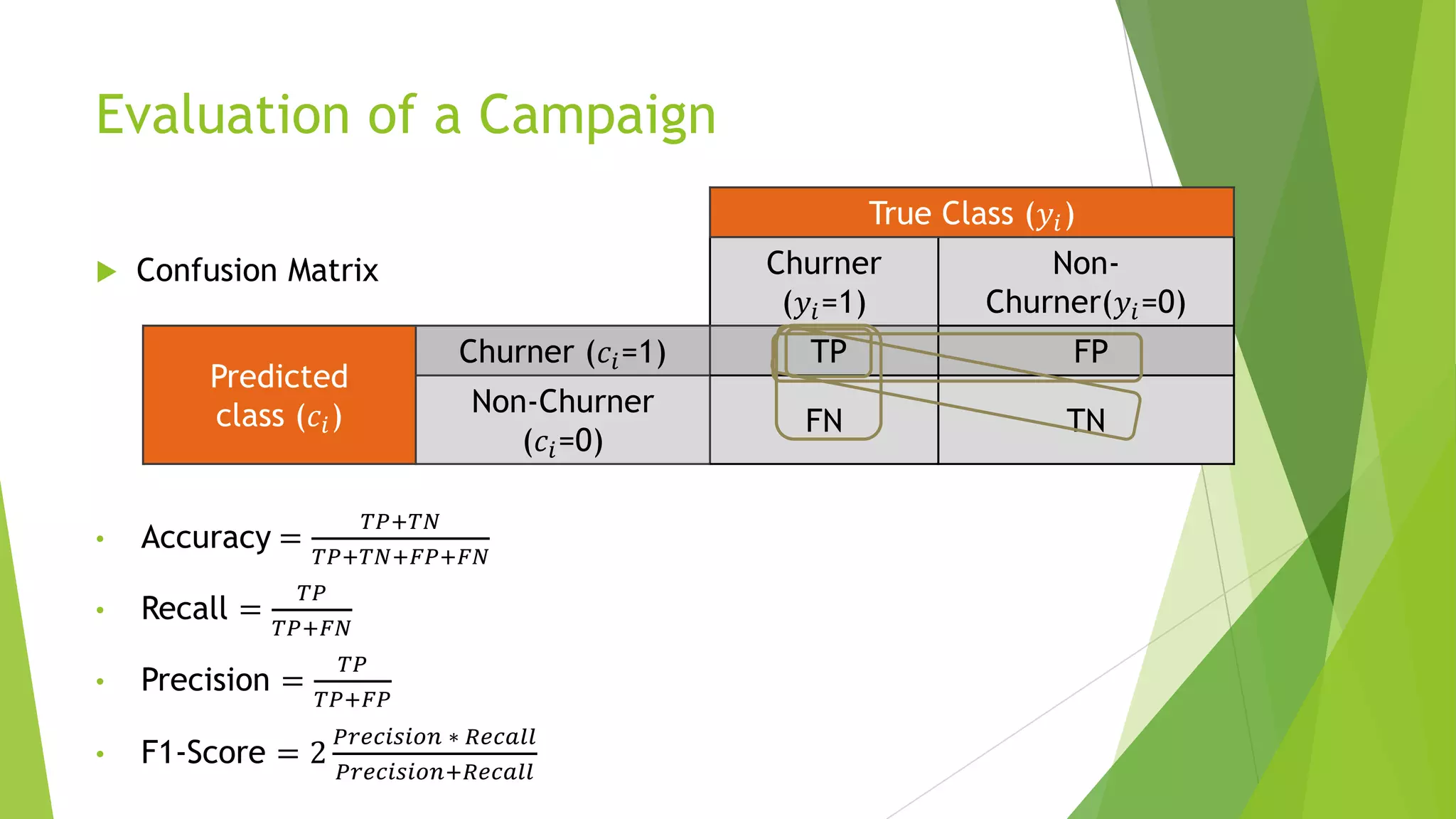

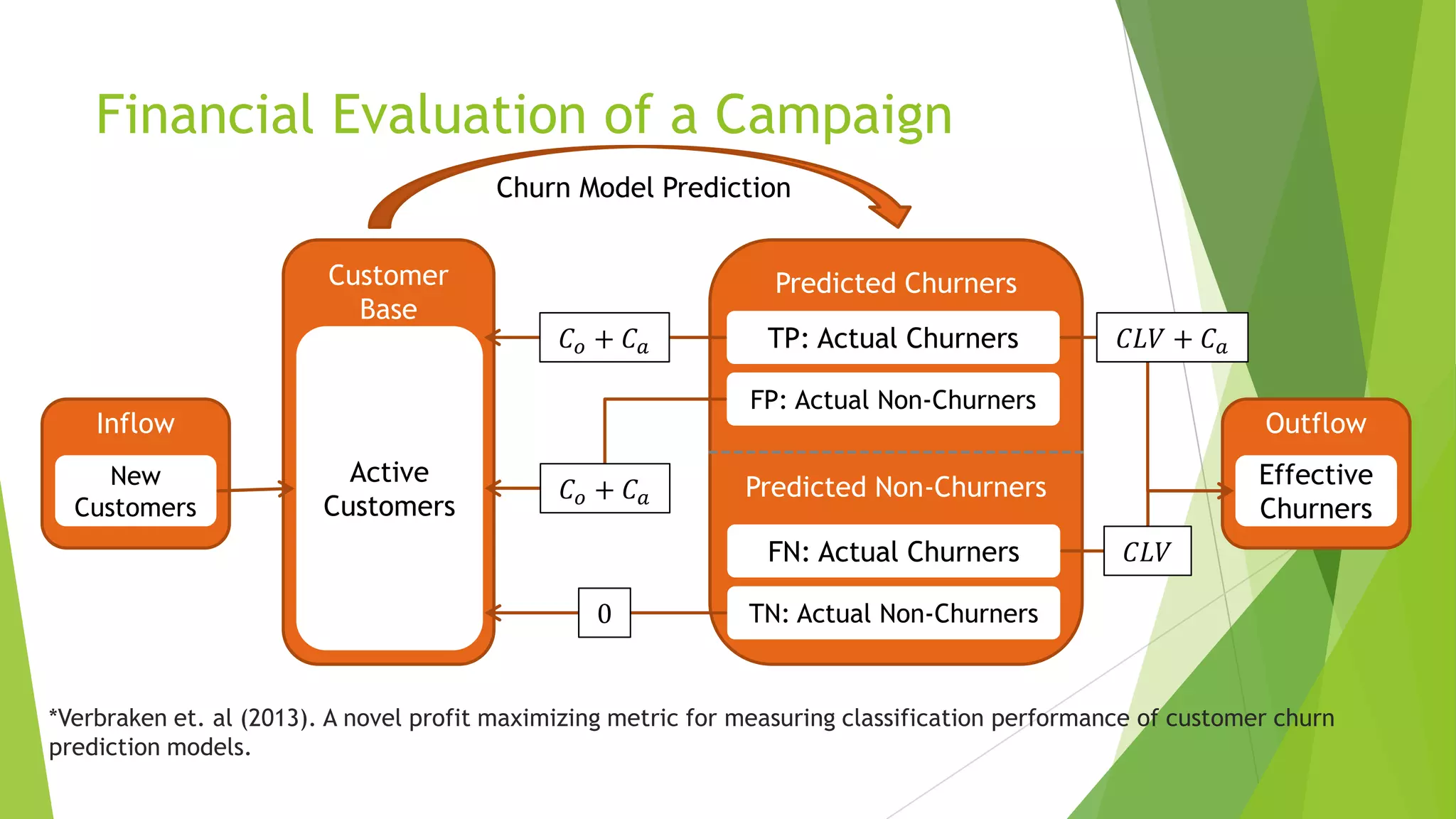

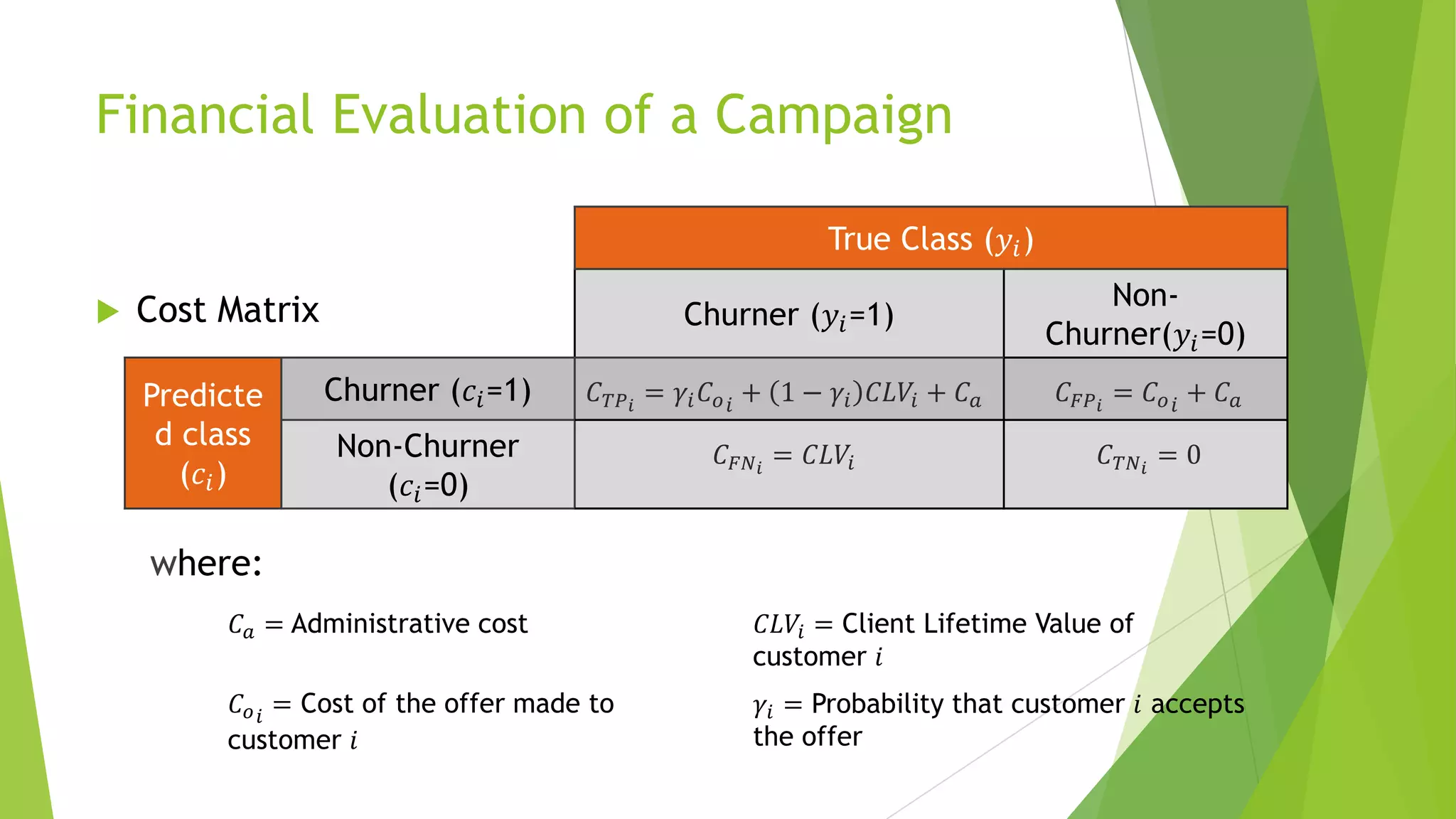

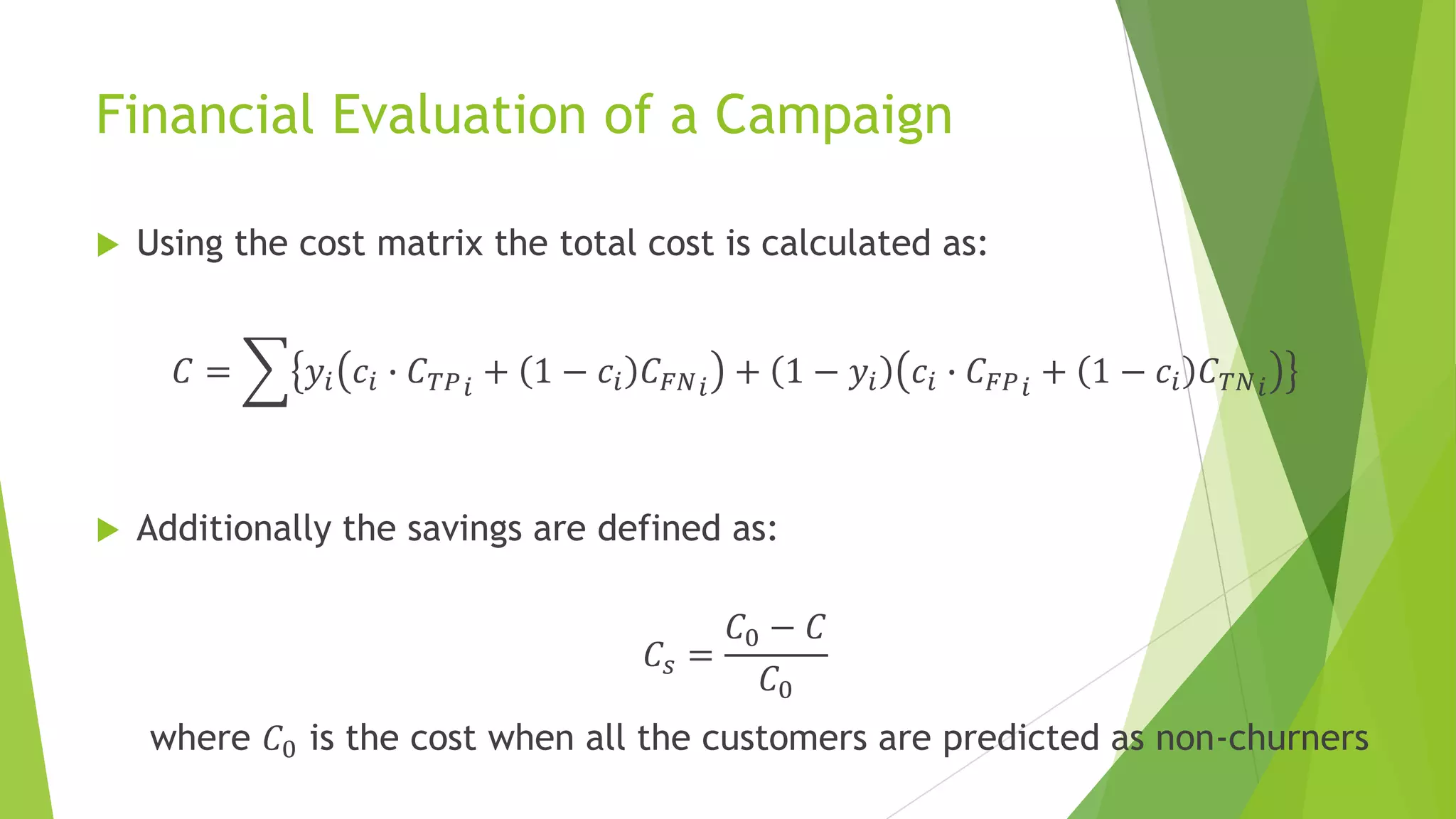

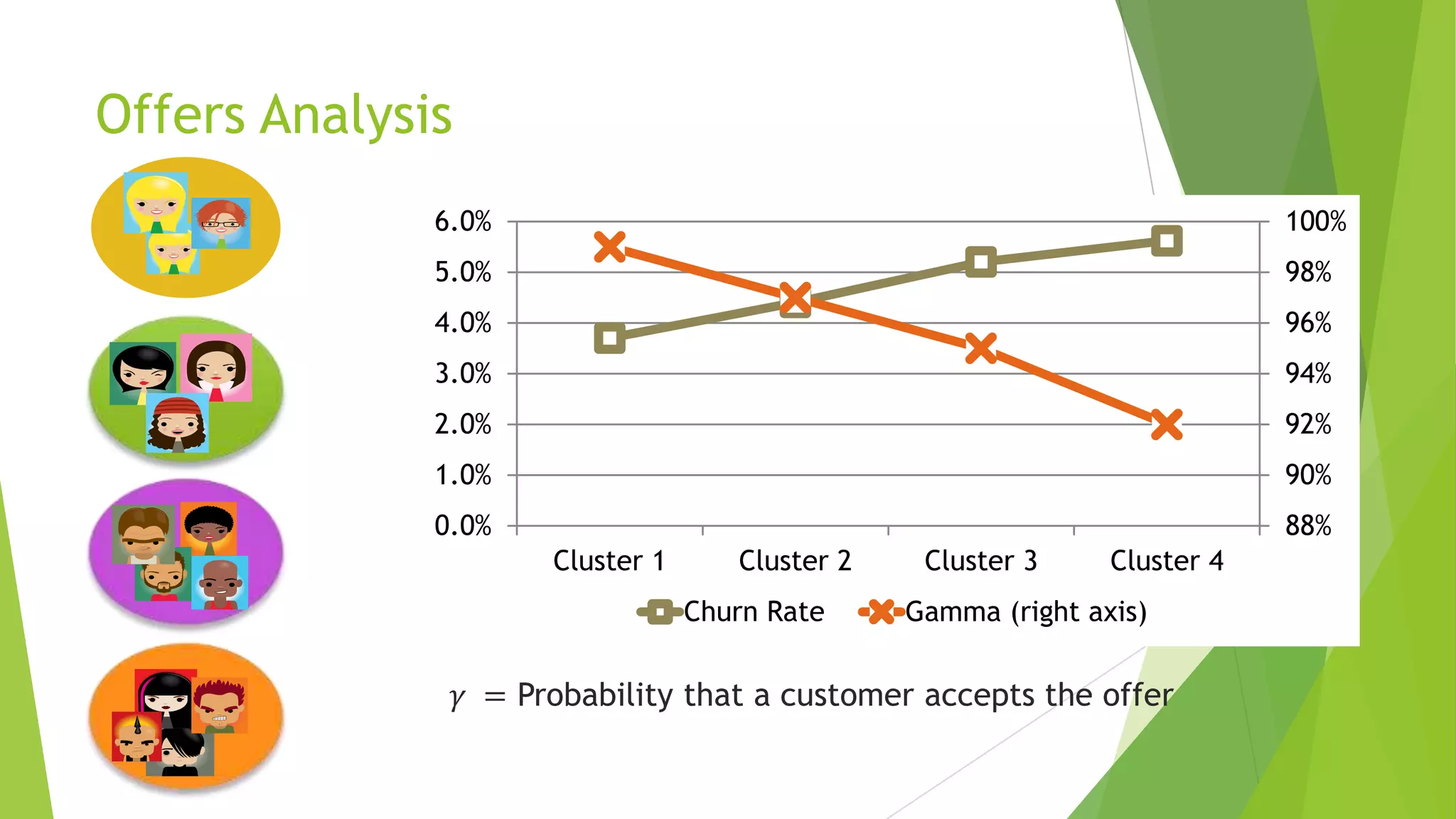

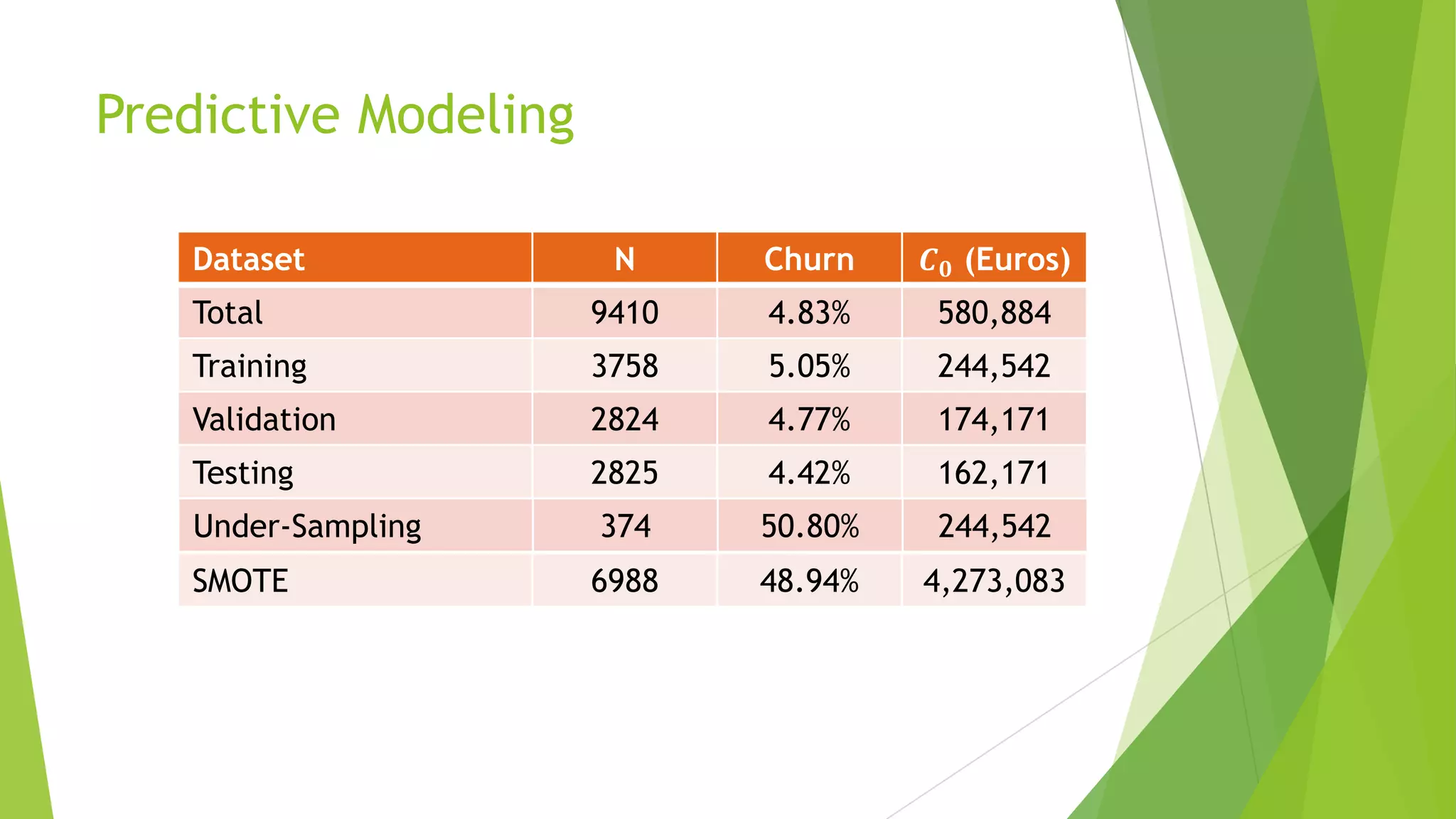

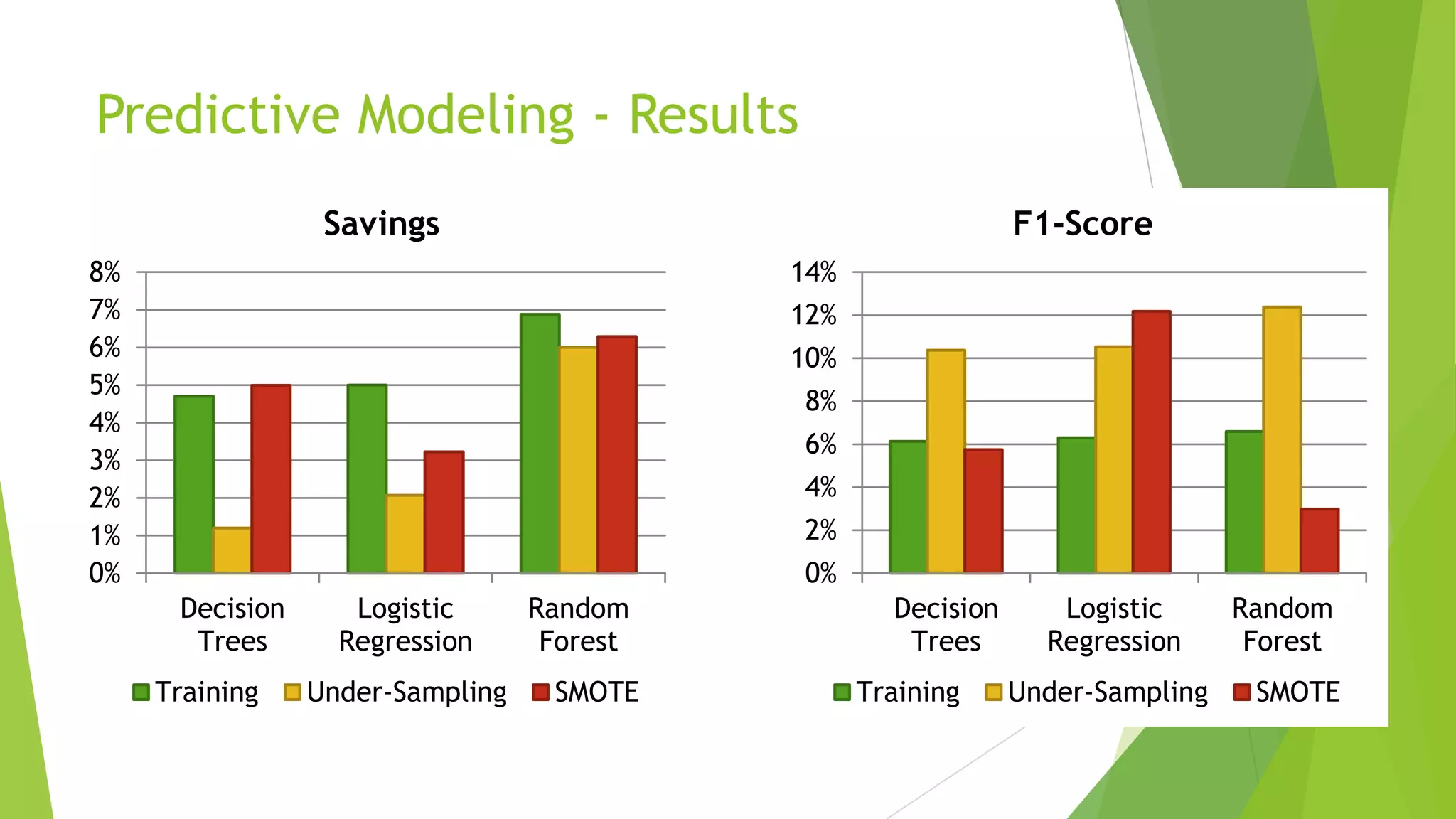

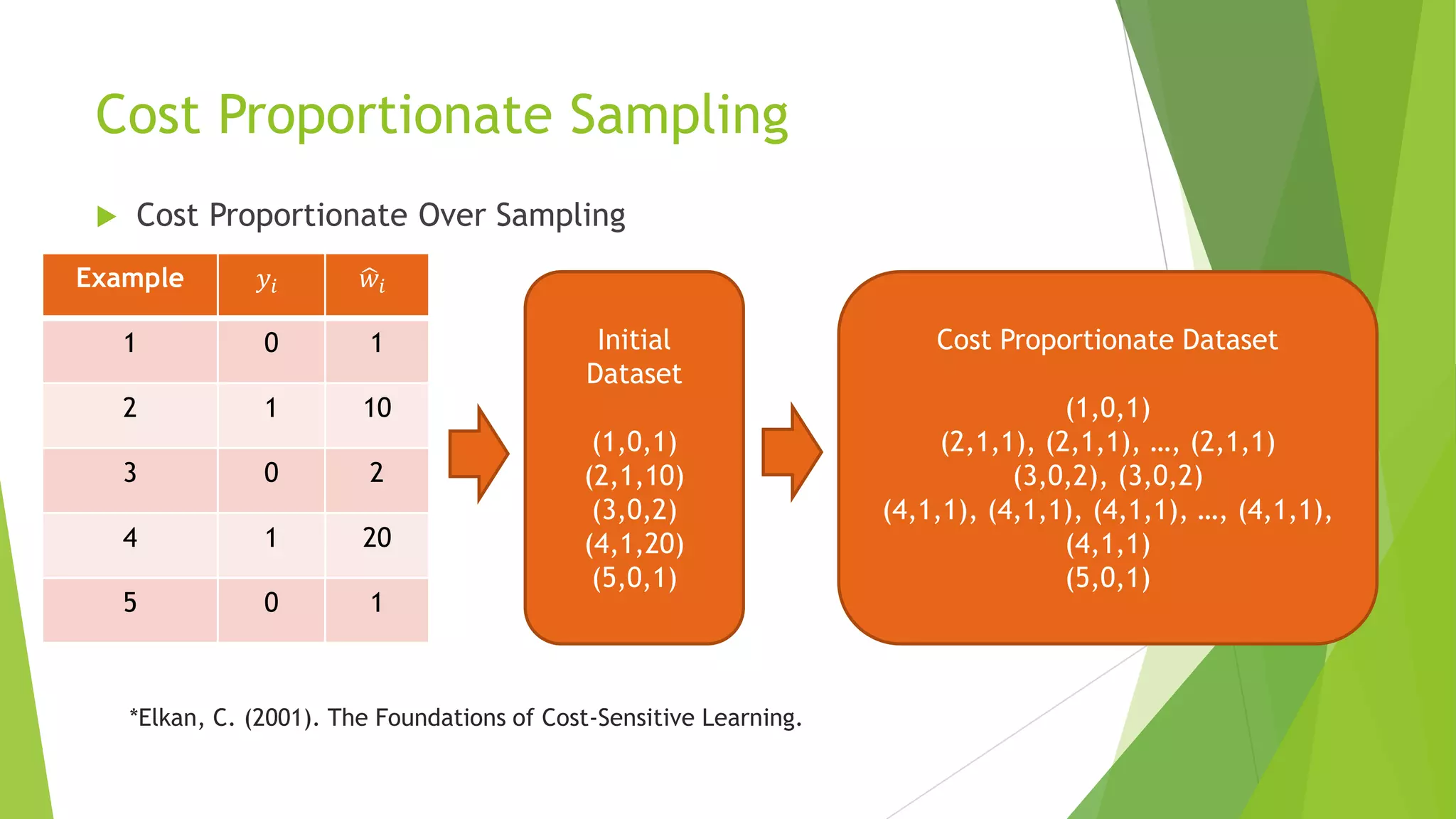

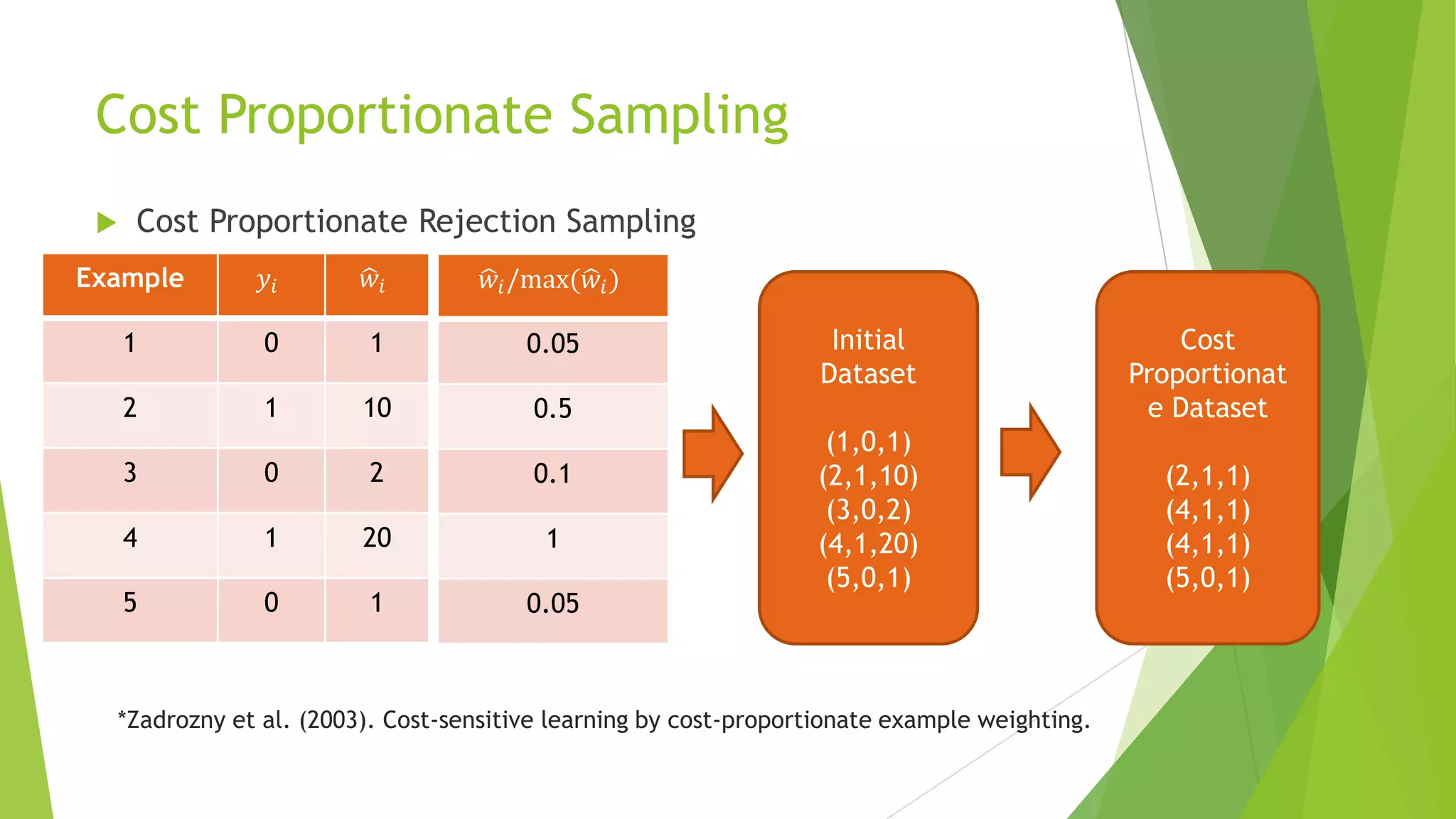

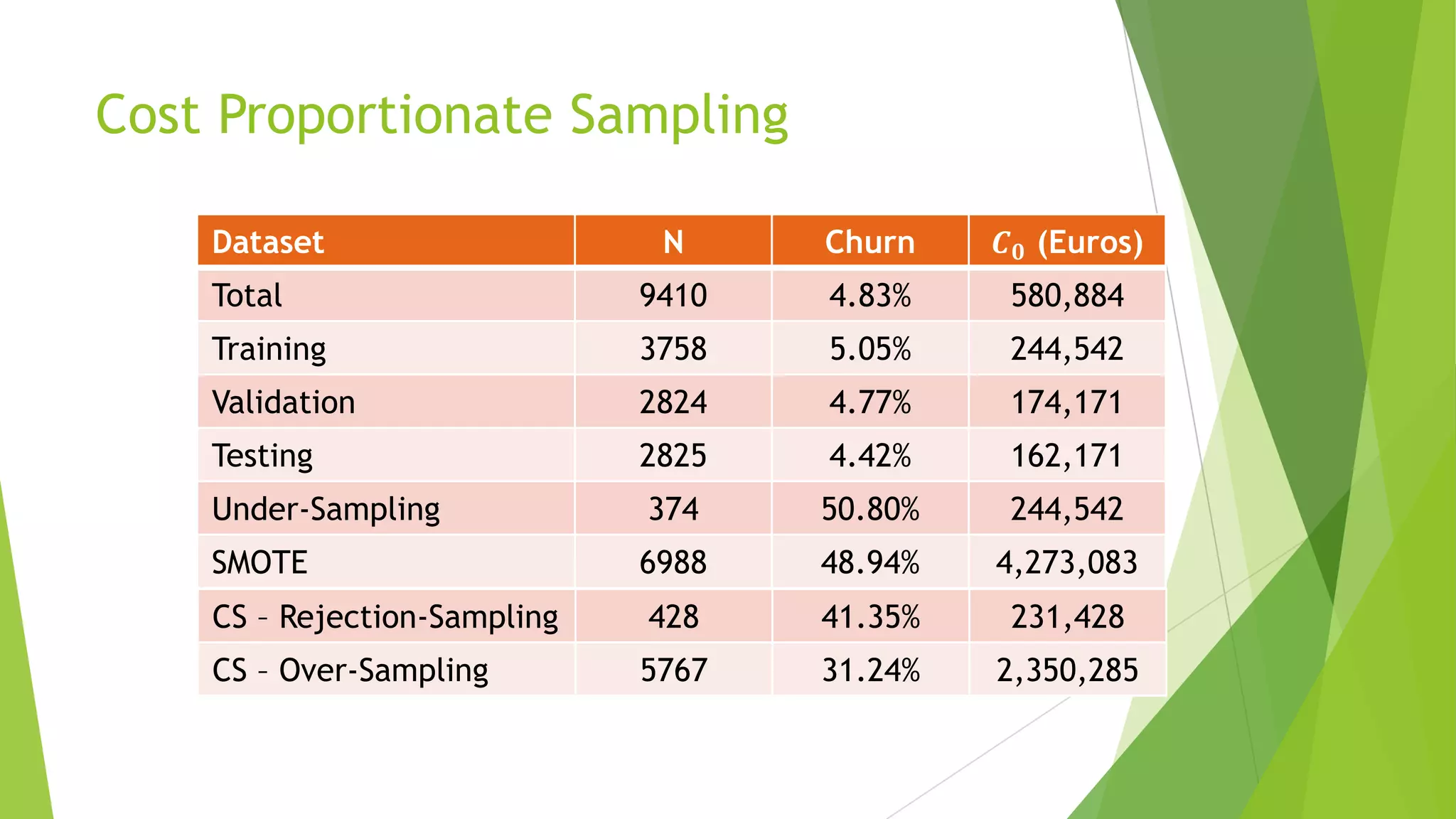

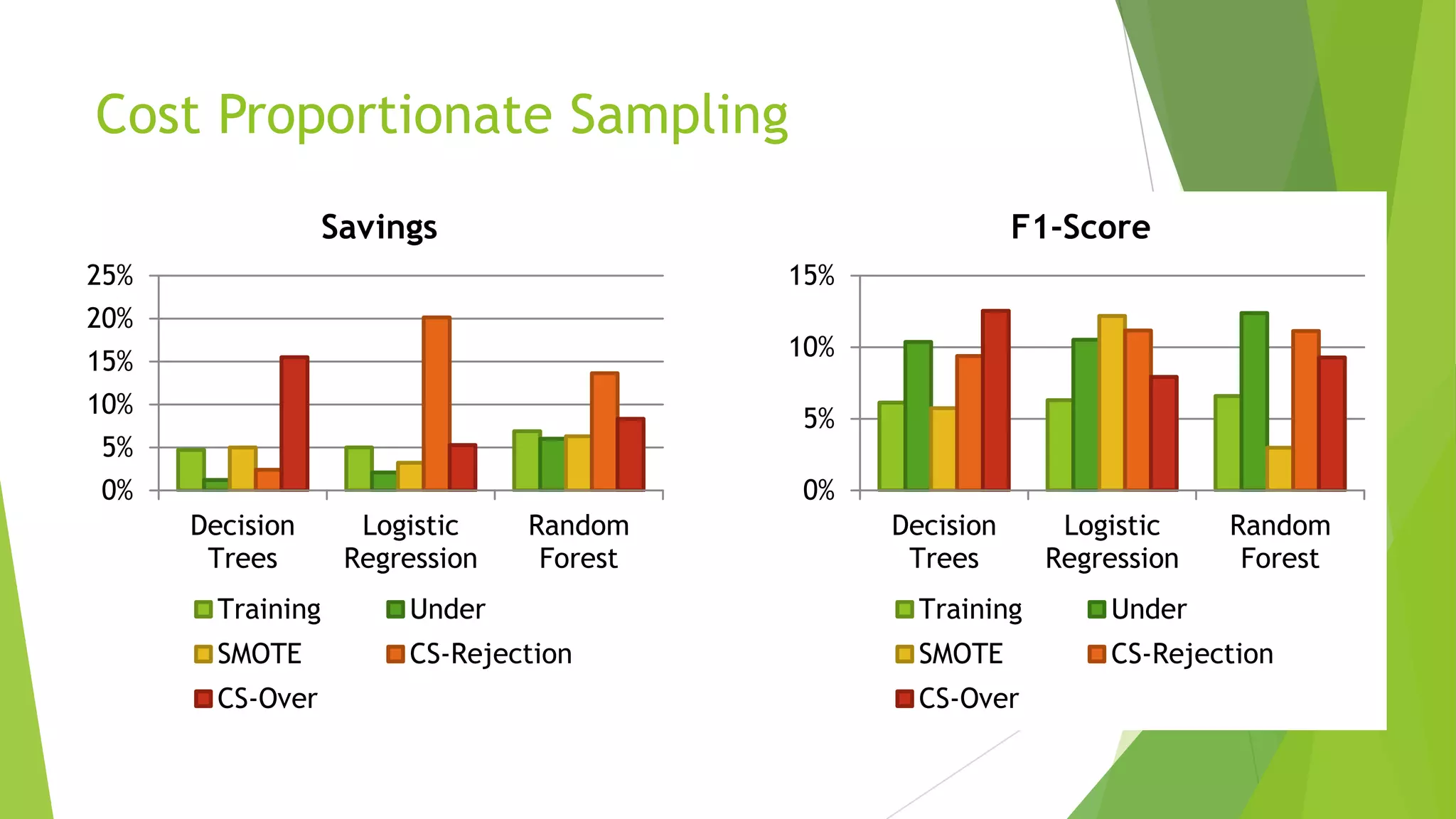

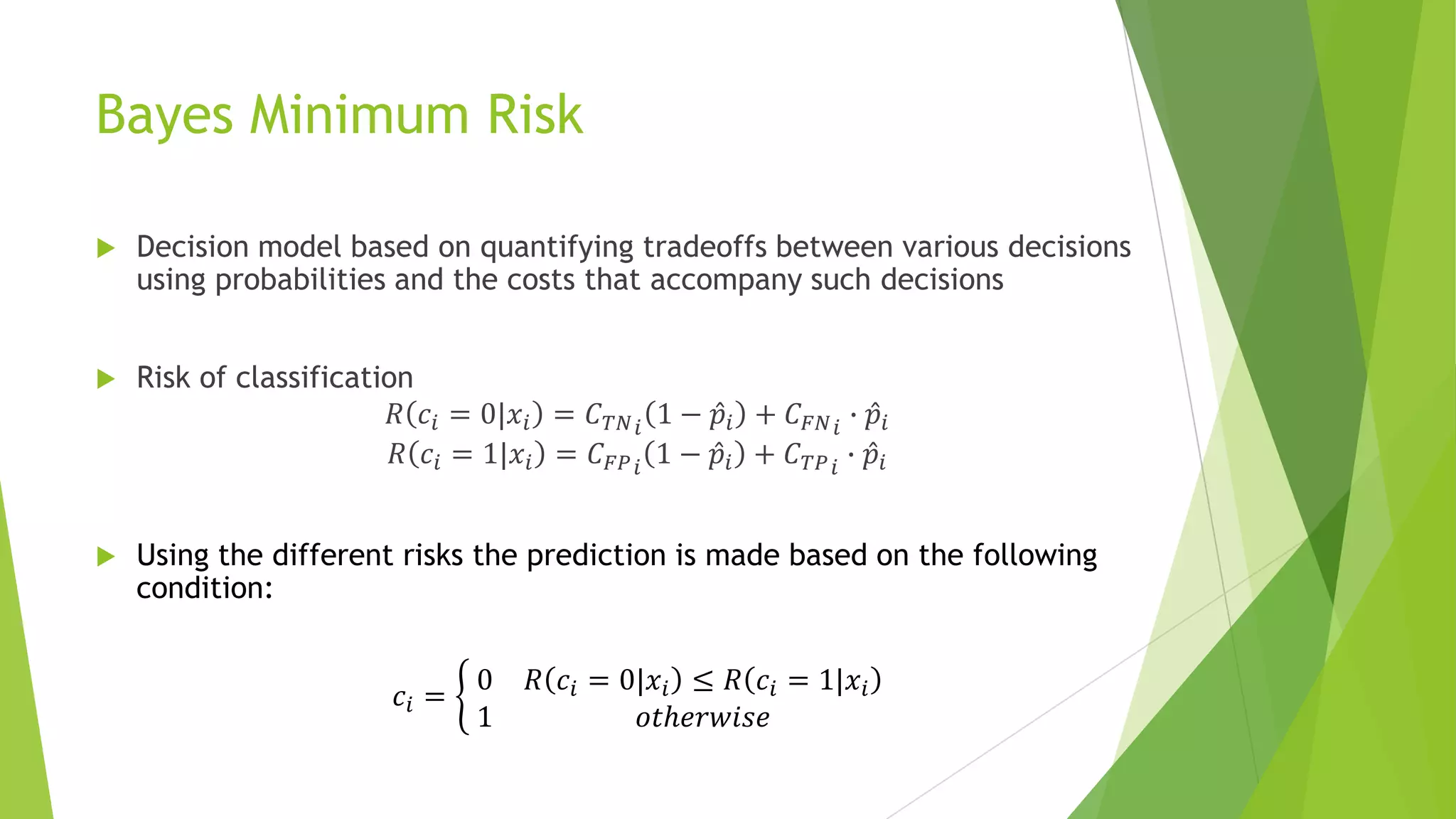

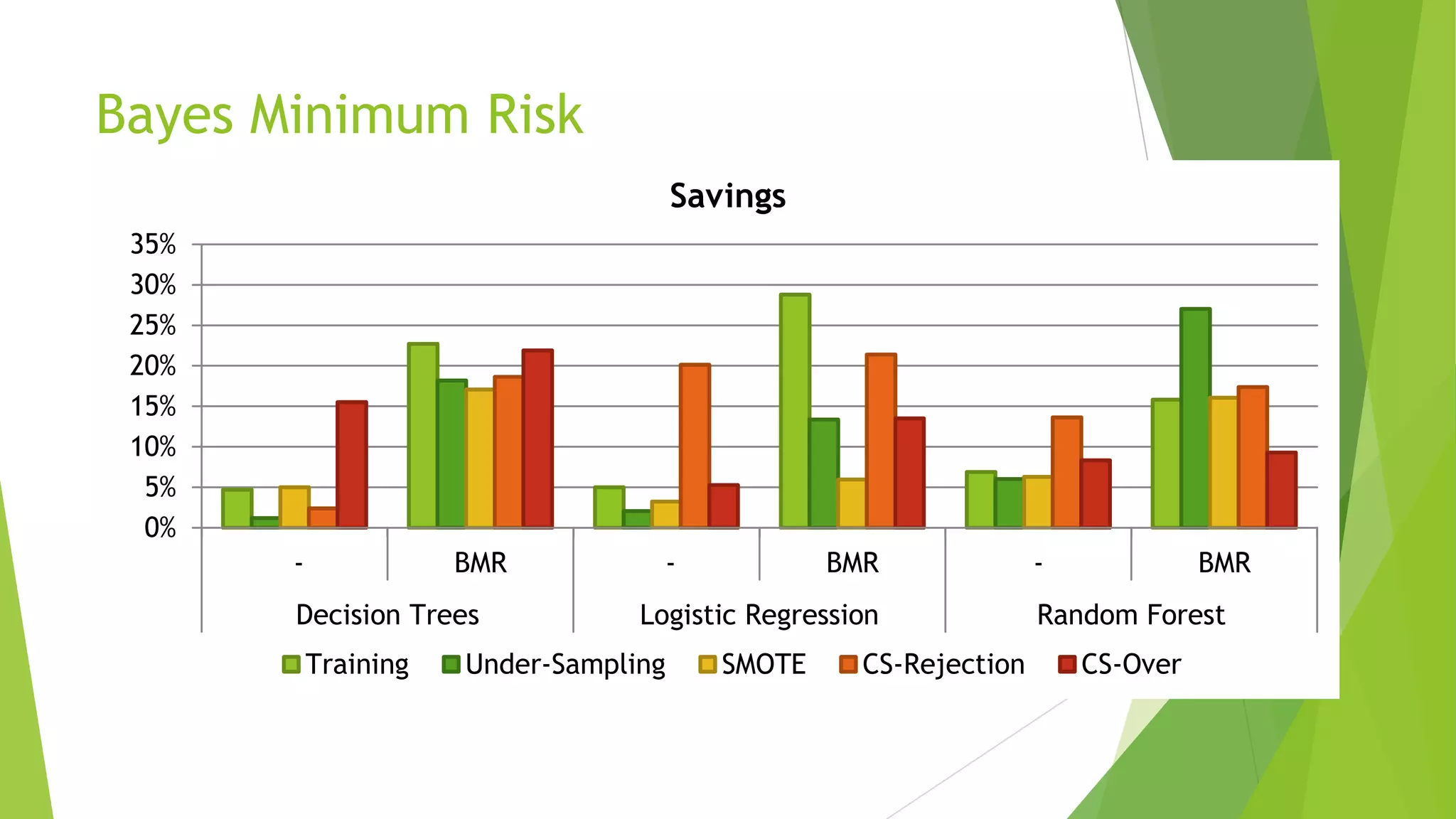

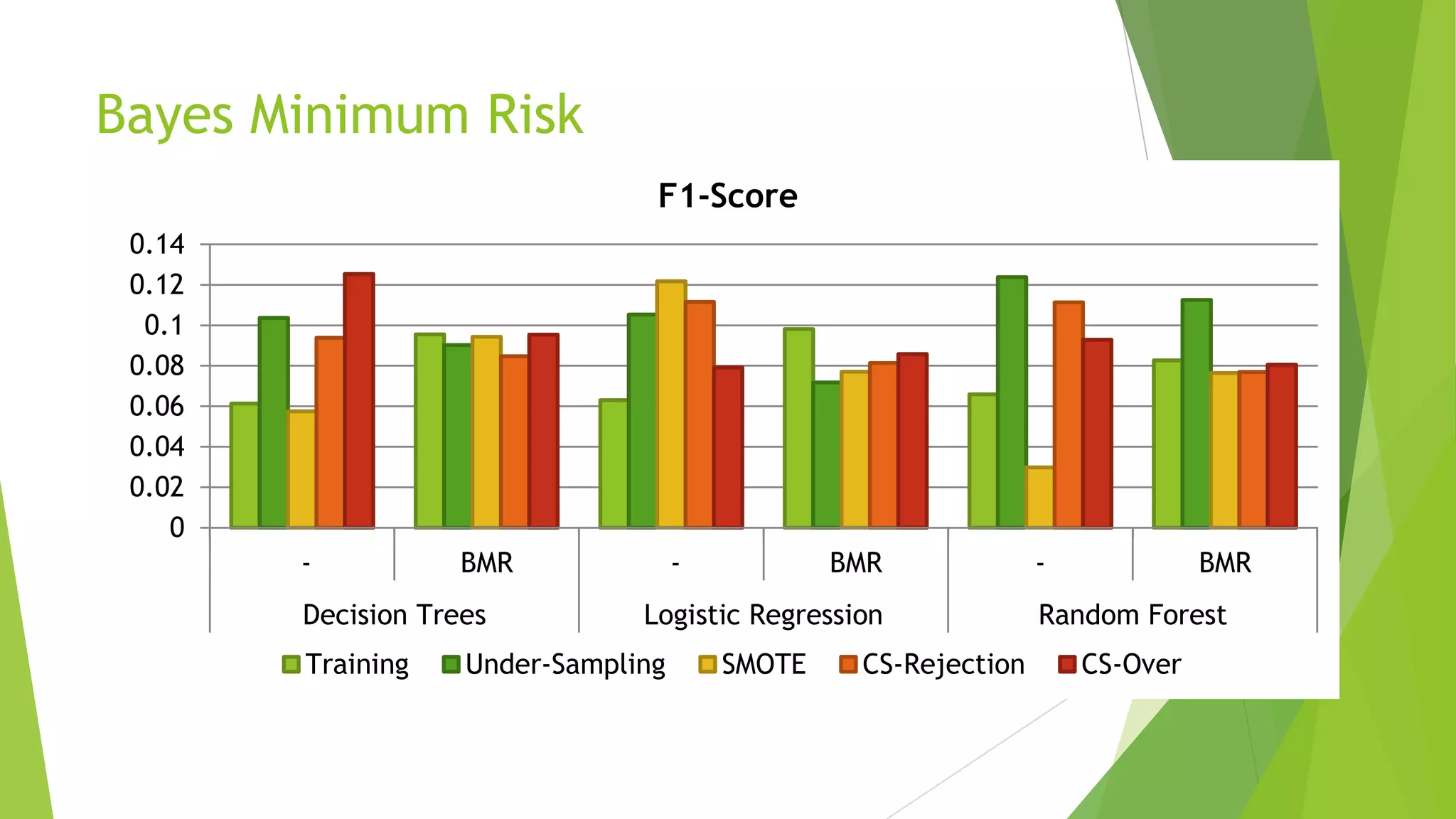

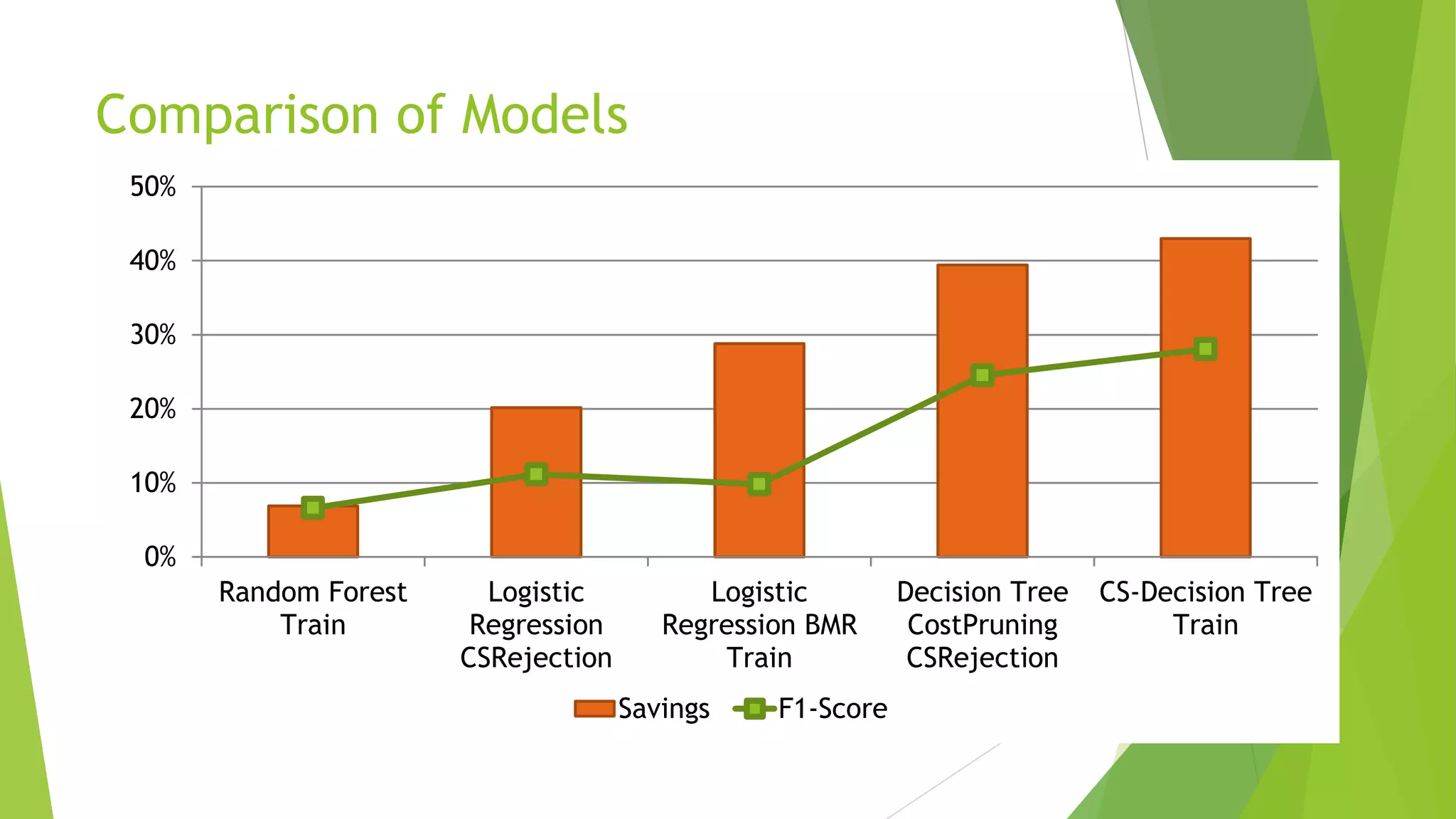

This document summarizes a presentation on maximizing the profit of customer churn prediction models with cost-sensitive machine learning. It explains that traditional evaluation measures such as accuracy treat every prediction error alike and therefore ignore the different business costs of those errors. It then covers cost-sensitive approaches such as cost-proportionate sampling, Bayes minimum risk, and cost-sensitive decision trees. The reported results show that, once business costs are incorporated into the predictive modeling, these cost-sensitive methods yield higher savings than traditional models and sampling approaches.
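To make the idea concrete, here is a minimal sketch of a Bayes minimum risk decision and a cost-based savings measure. It is not taken from the presentation: the function names, the churn probabilities, and the cost values (a retention offer costing 10, a lost customer costing 100) are illustrative assumptions. Savings is assumed here to be the relative cost reduction against the cheaper of the two trivial policies (contact nobody, or contact everybody).

```python
import numpy as np

# Illustrative costs (assumptions, not figures from the presentation):
# contacting a customer costs 10 whether or not they would have churned,
# and missing a churner costs 100 in lost customer value.
C_TP, C_FP, C_FN, C_TN = 10.0, 10.0, 100.0, 0.0


def total_cost(y_true, y_pred):
    """Sum the cost of each prediction according to the cost matrix."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    cost = (y_true * (y_pred * C_TP + (1 - y_pred) * C_FN)
            + (1 - y_true) * (y_pred * C_FP + (1 - y_pred) * C_TN))
    return float(cost.sum())


def bayes_minimum_risk(p_churn):
    """Predict churn when the expected cost of acting is lower than not acting."""
    p = np.asarray(p_churn, dtype=float)
    risk_pos = p * C_TP + (1 - p) * C_FP  # expected cost of predicting churn
    risk_neg = p * C_FN + (1 - p) * C_TN  # expected cost of predicting no churn
    return (risk_pos < risk_neg).astype(int)


def savings(y_true, y_pred):
    """Relative cost reduction versus the cheaper trivial policy."""
    y_true = np.asarray(y_true)
    base = min(total_cost(y_true, np.zeros_like(y_true)),
               total_cost(y_true, np.ones_like(y_true)))
    return (base - total_cost(y_true, y_pred)) / base


# Hypothetical data: true churn labels and a model's estimated probabilities.
y_true = np.array([1, 0, 0, 1, 0])
p_churn = np.array([0.30, 0.08, 0.40, 0.80, 0.05])

pred_threshold = (p_churn >= 0.5).astype(int)  # conventional 0.5 cutoff
pred_bmr = bayes_minimum_risk(p_churn)         # cost-aware decision

print("0.5 threshold:", pred_threshold, "savings =", savings(y_true, pred_threshold))
print("Bayes min risk:", pred_bmr, "savings =", savings(y_true, pred_bmr))
```

With these assumed costs, the cost-aware rule flags any customer whose churn probability exceeds 0.1, because a missed churner is ten times as expensive as an unnecessary offer. On this toy data the 0.5 cutoff scores higher on accuracy yet costs more than contacting everyone, which illustrates why accuracy alone can mislead.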