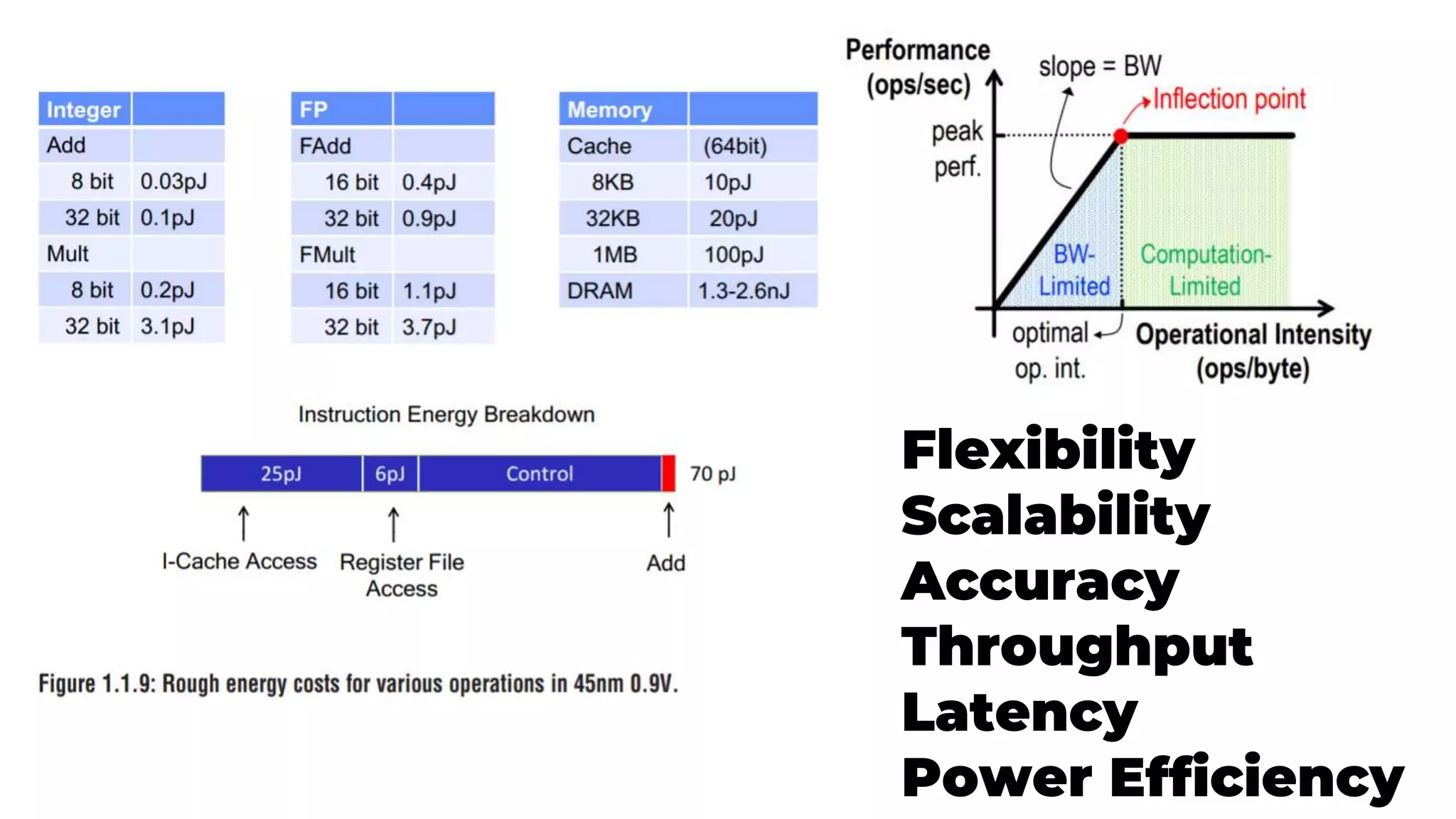

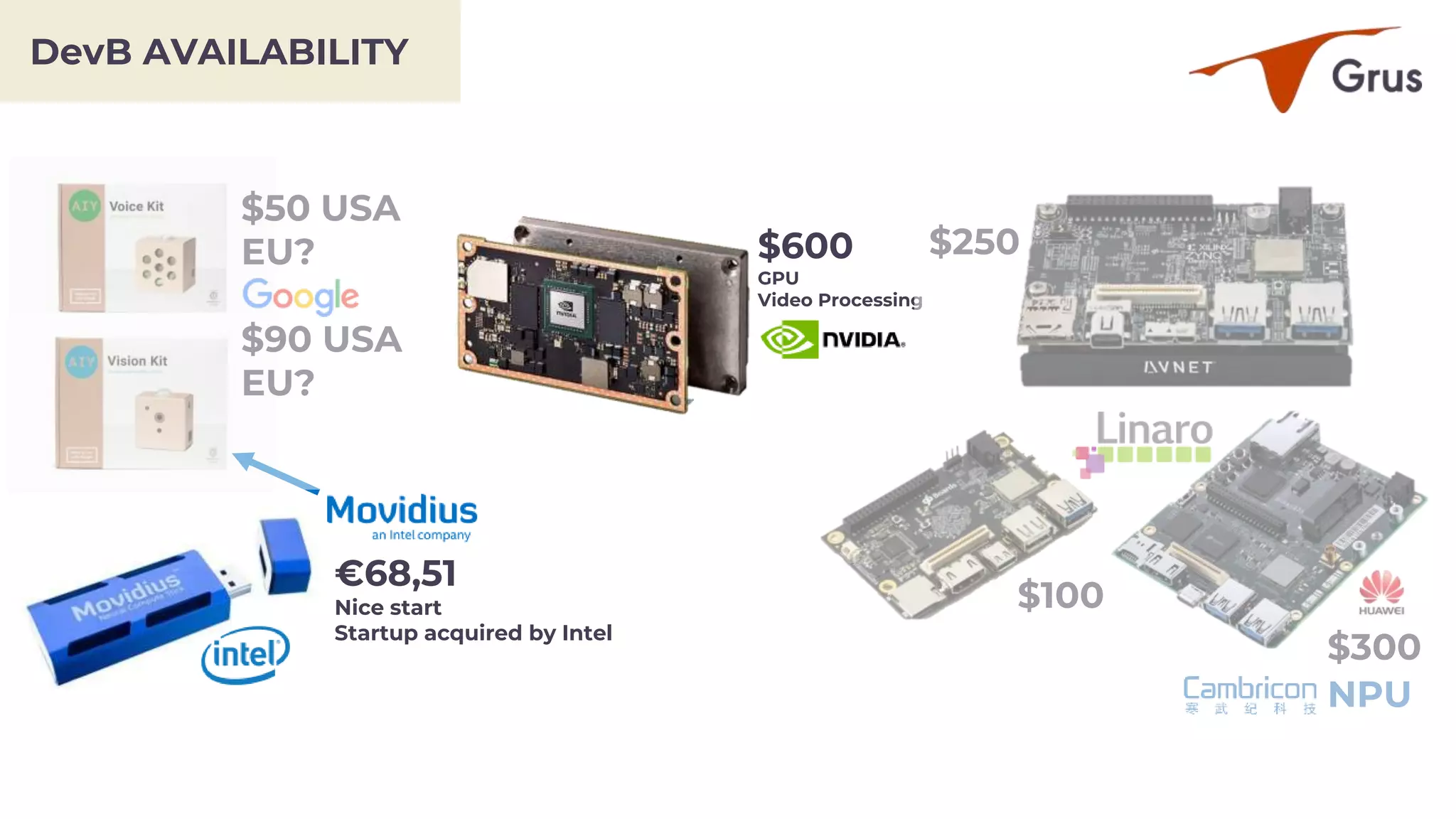

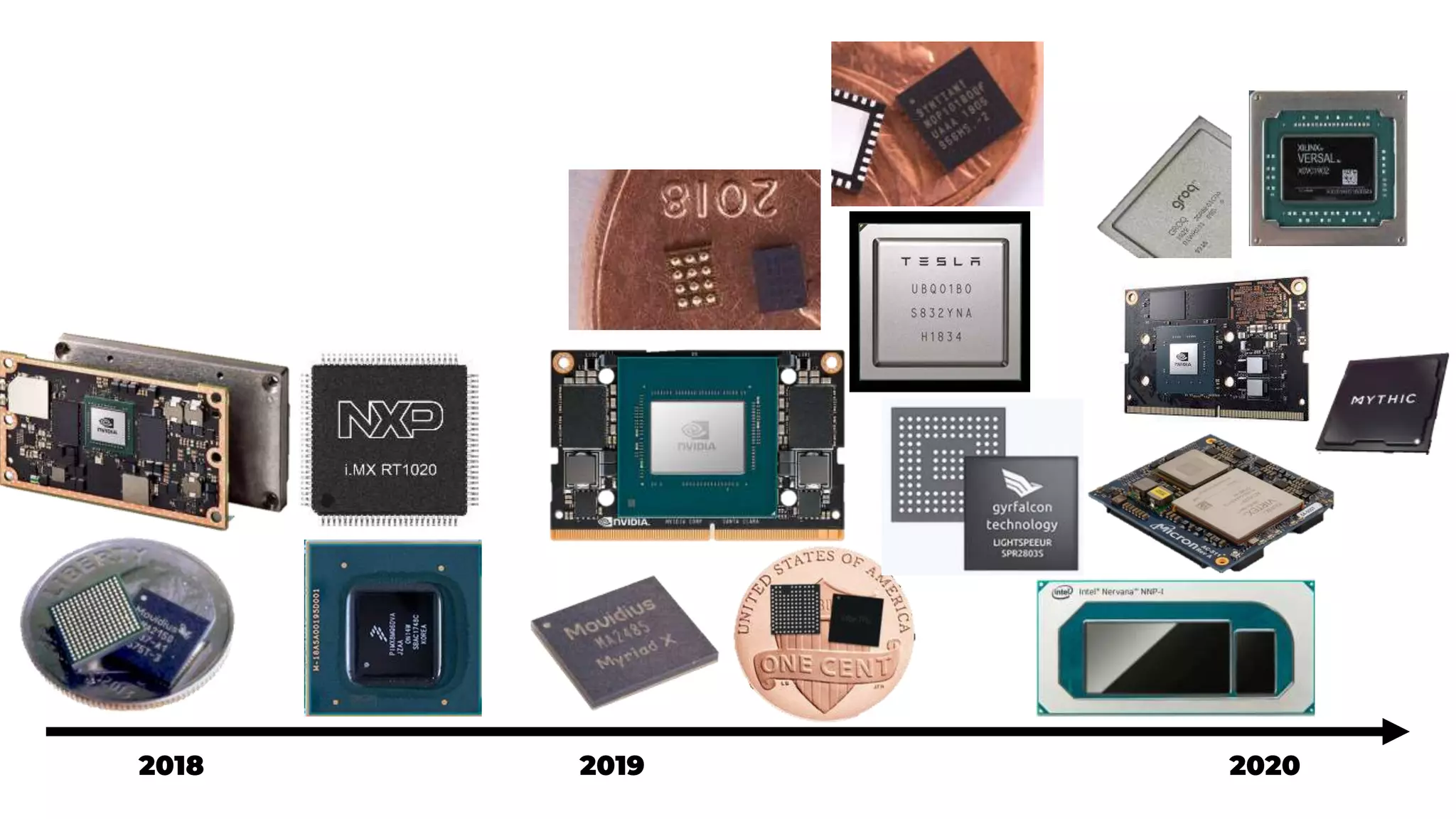

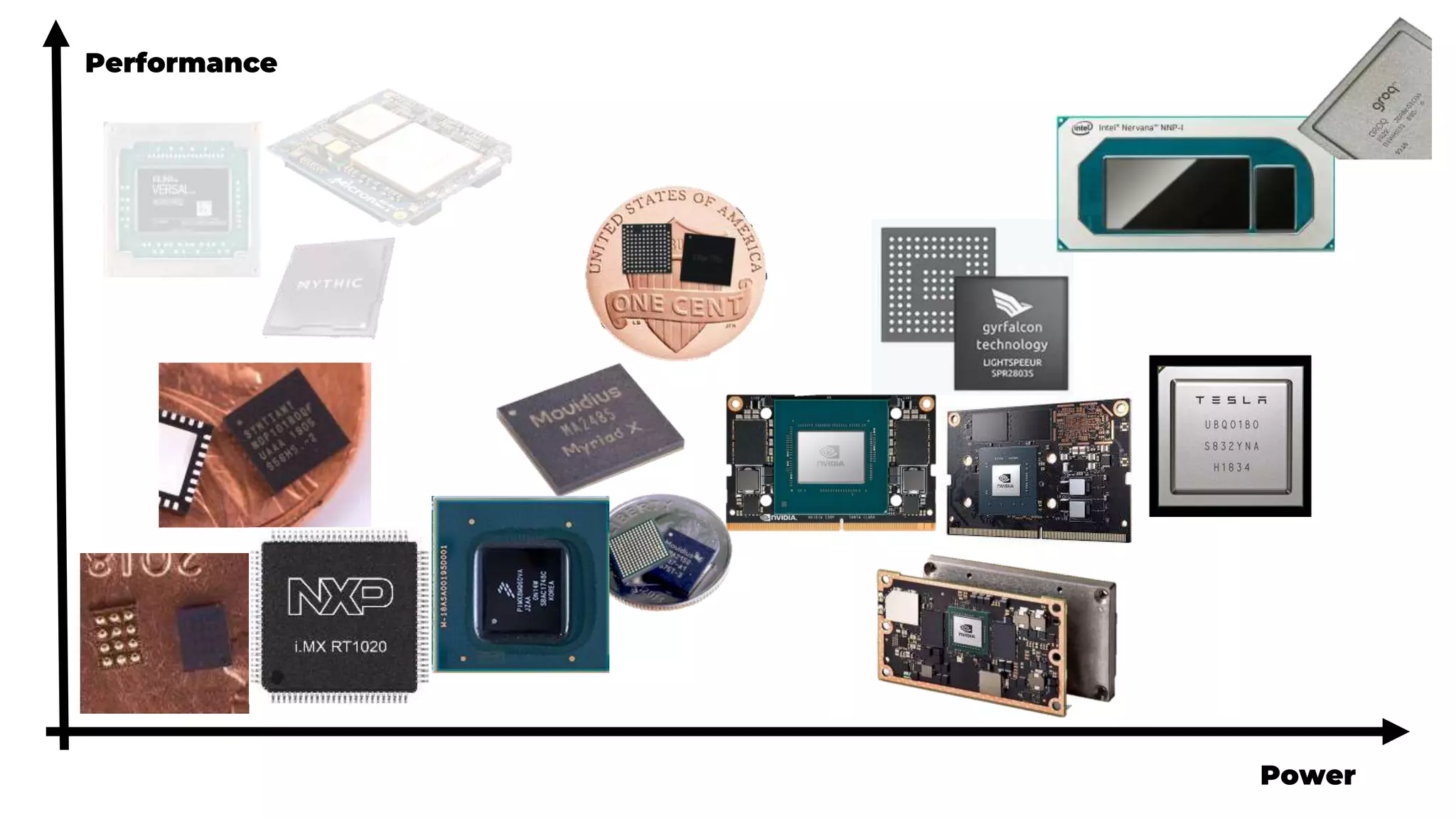

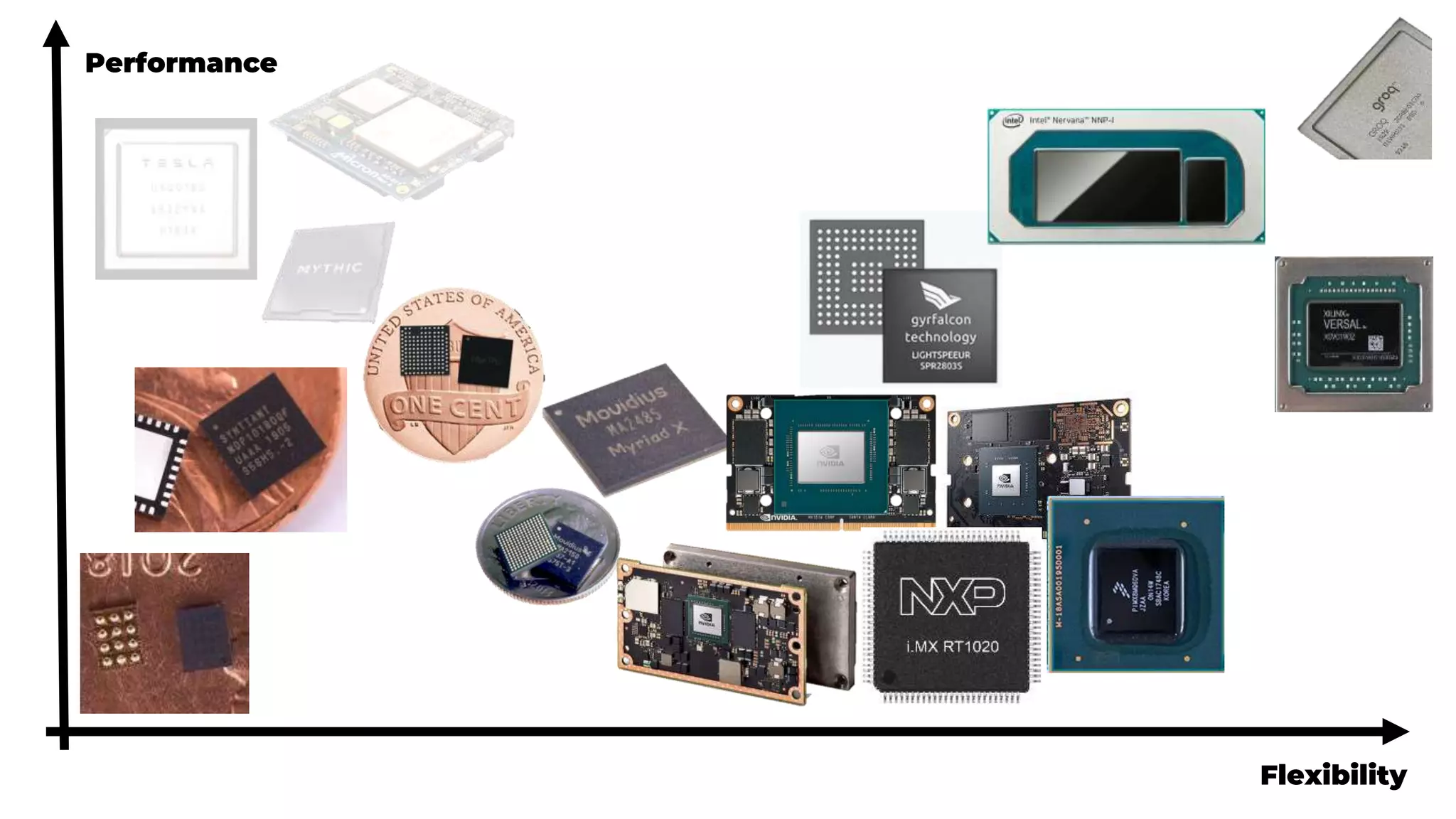

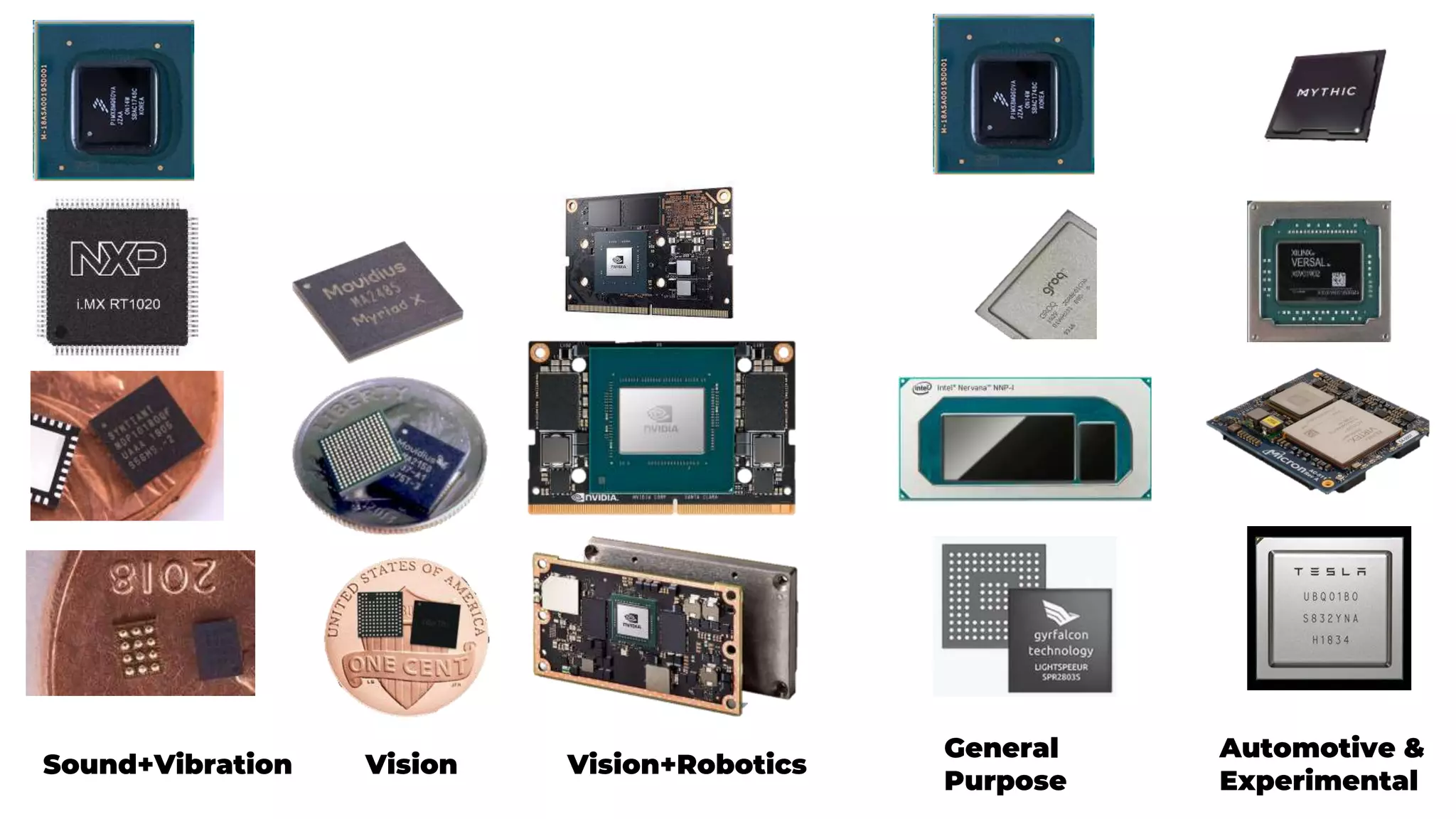

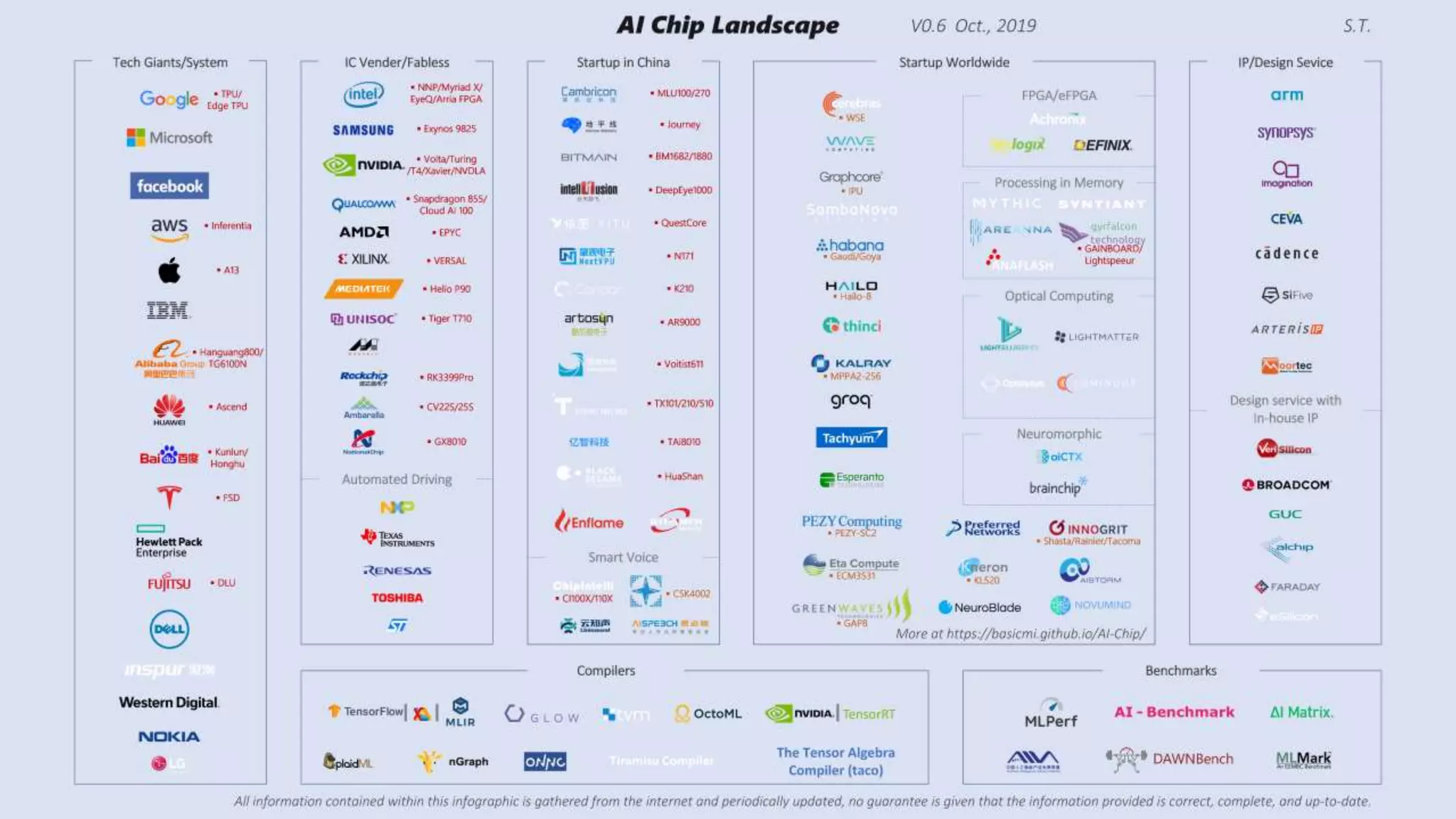

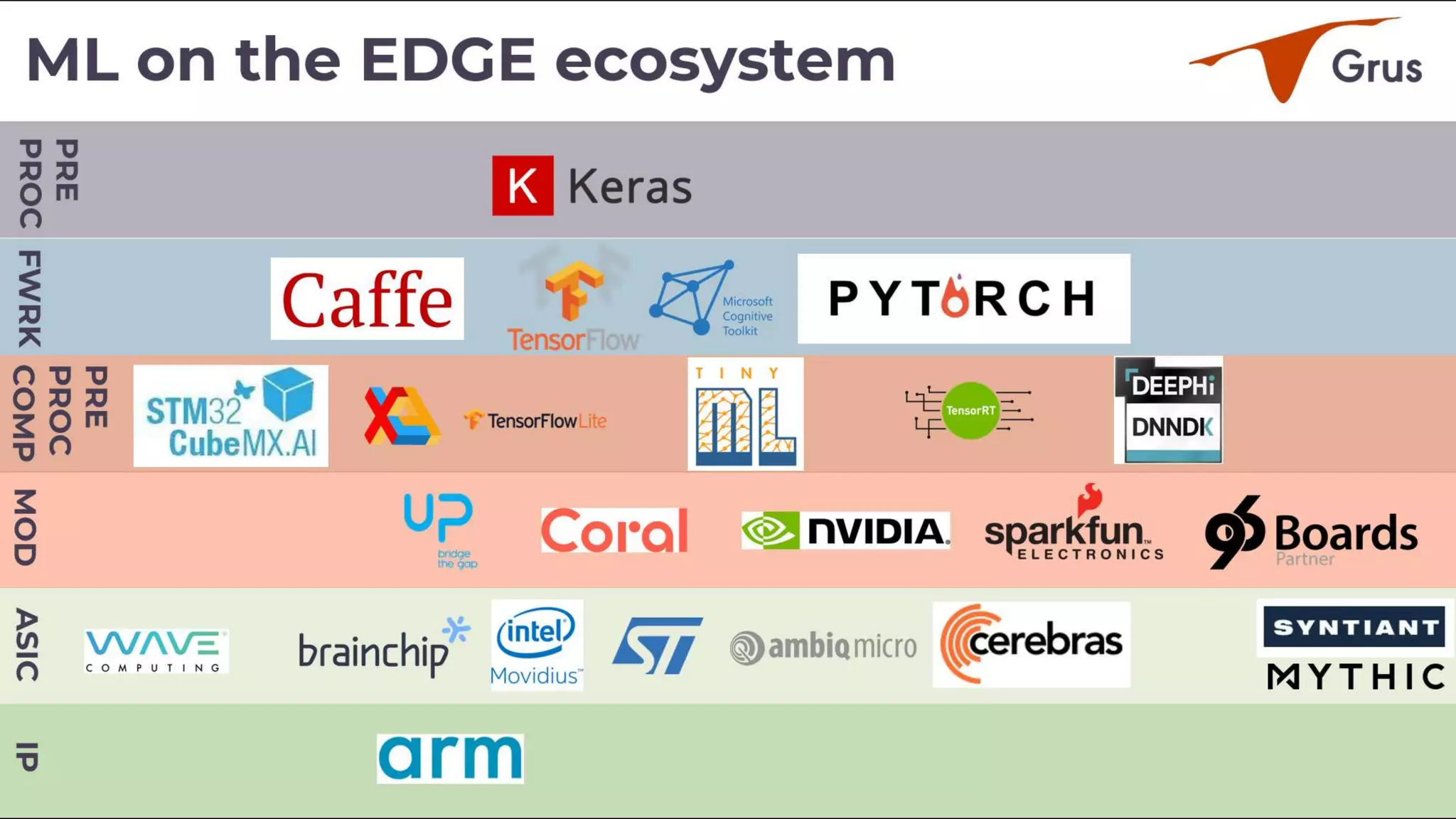

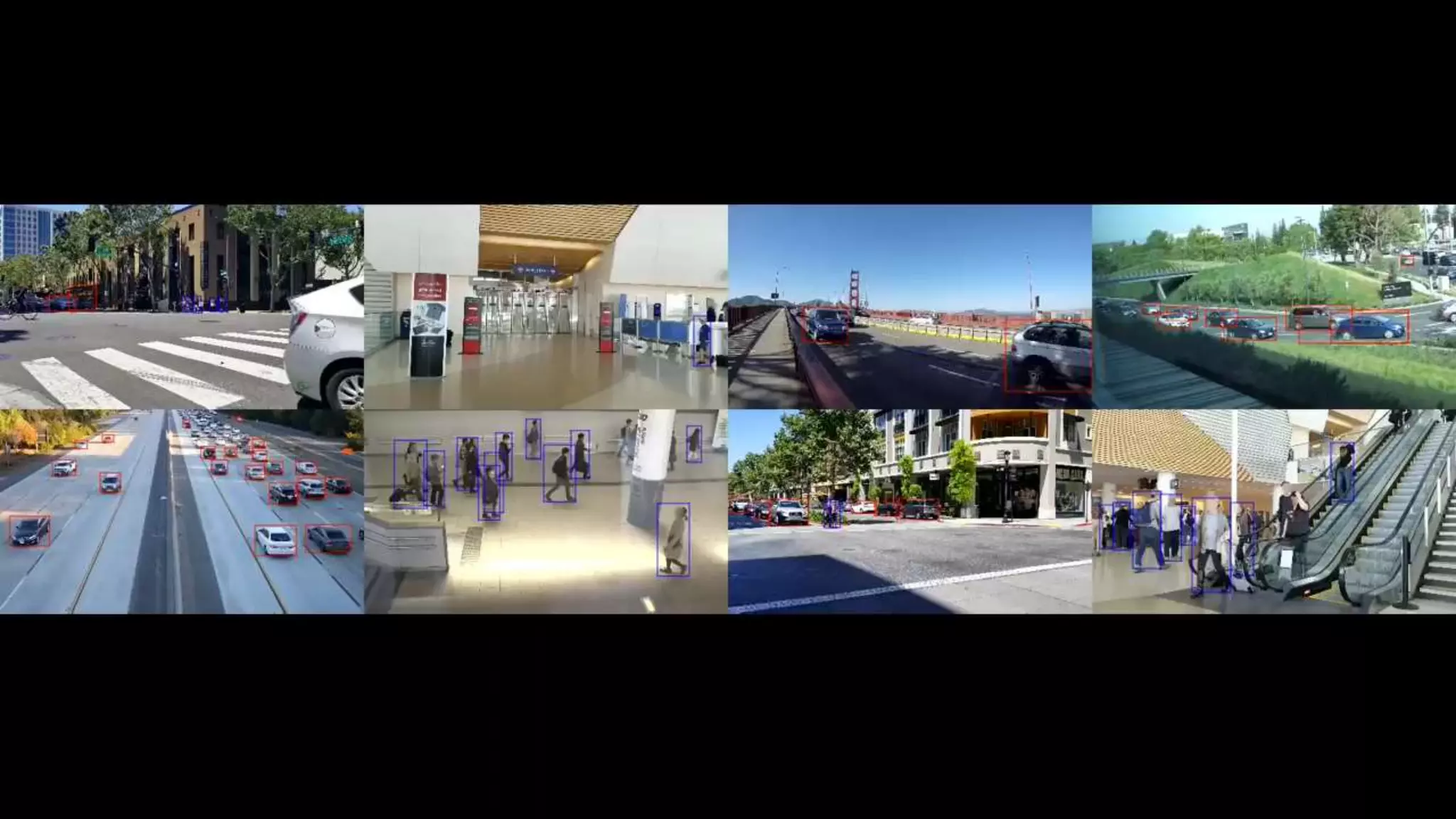

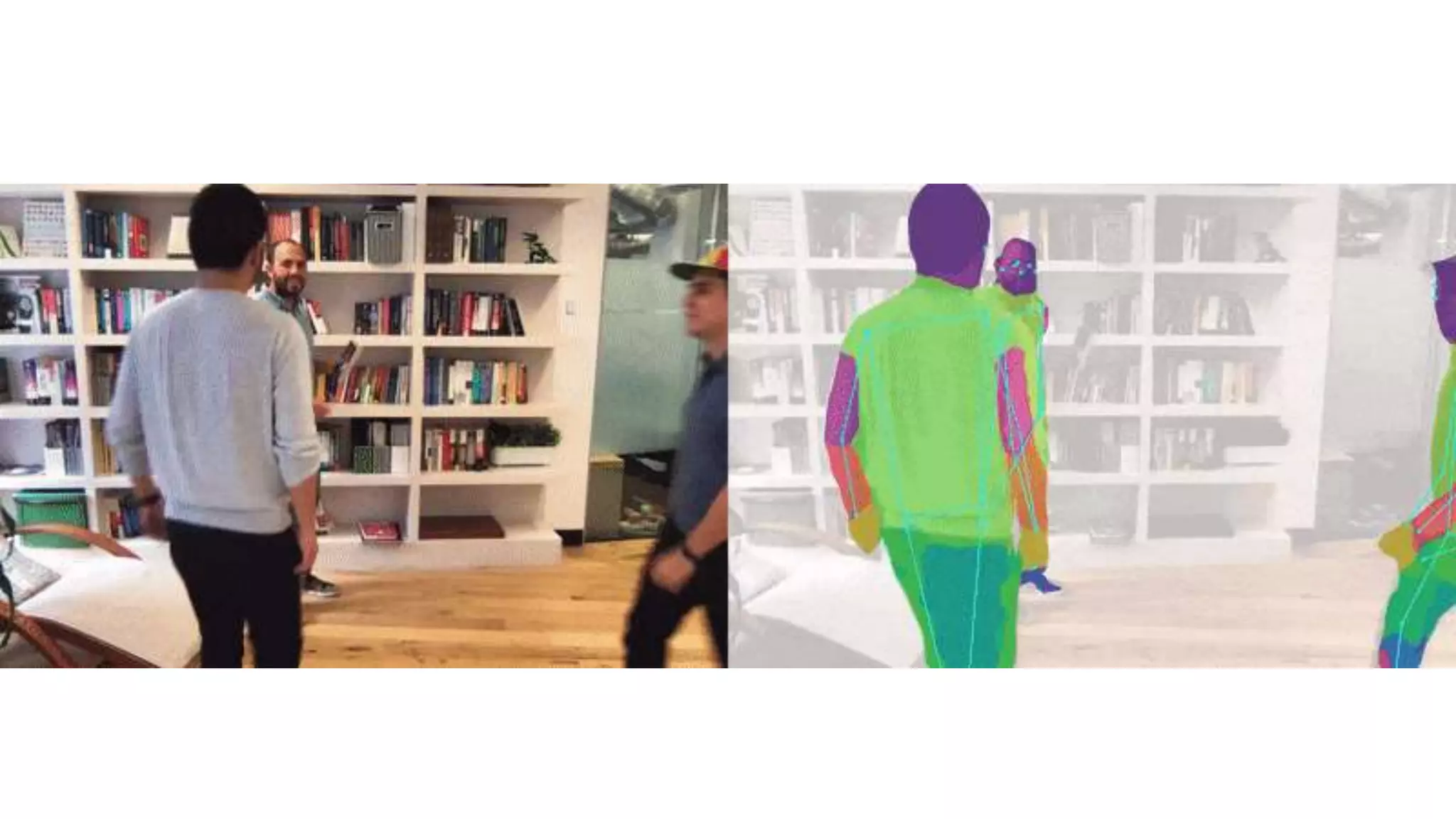

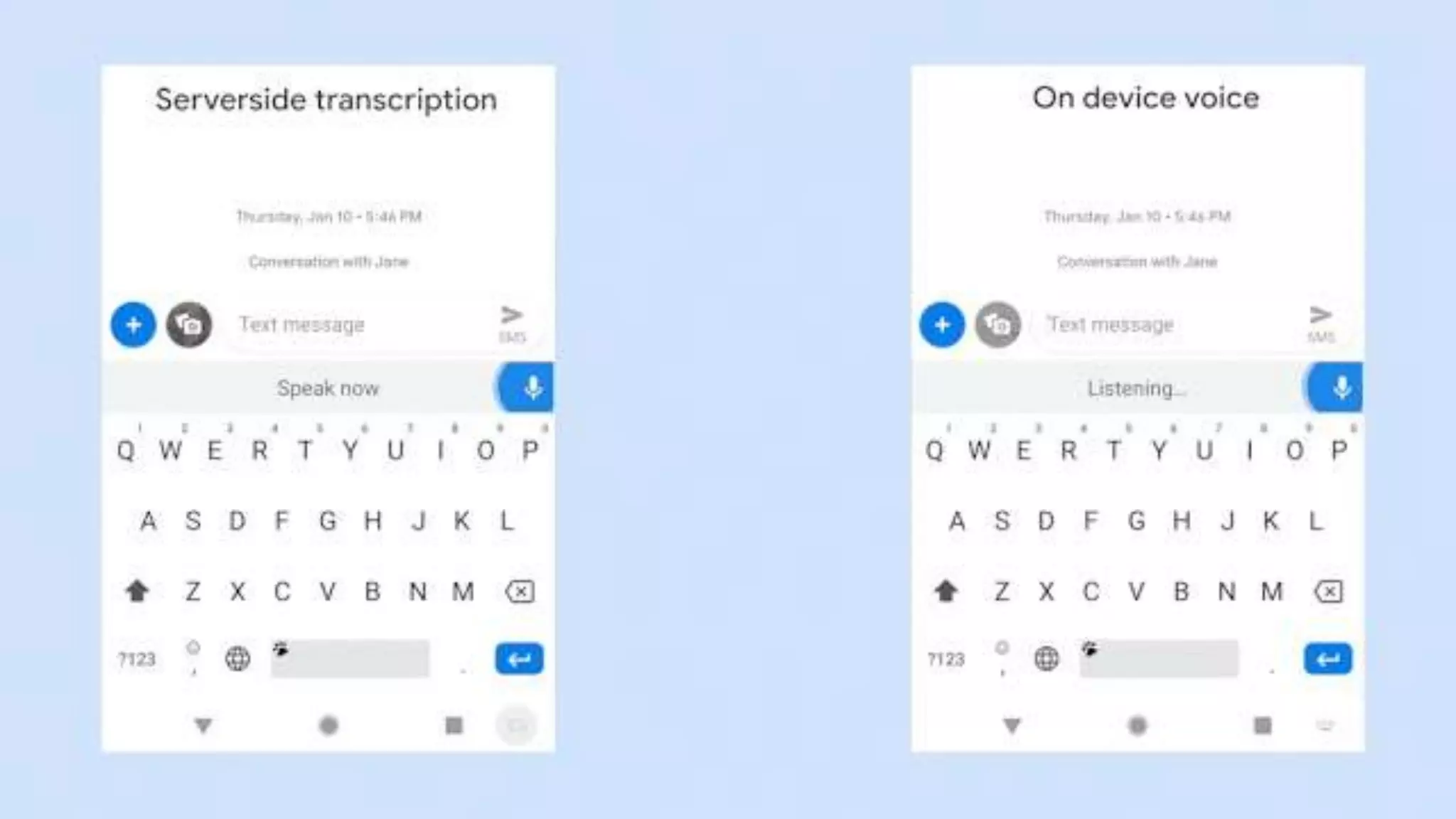

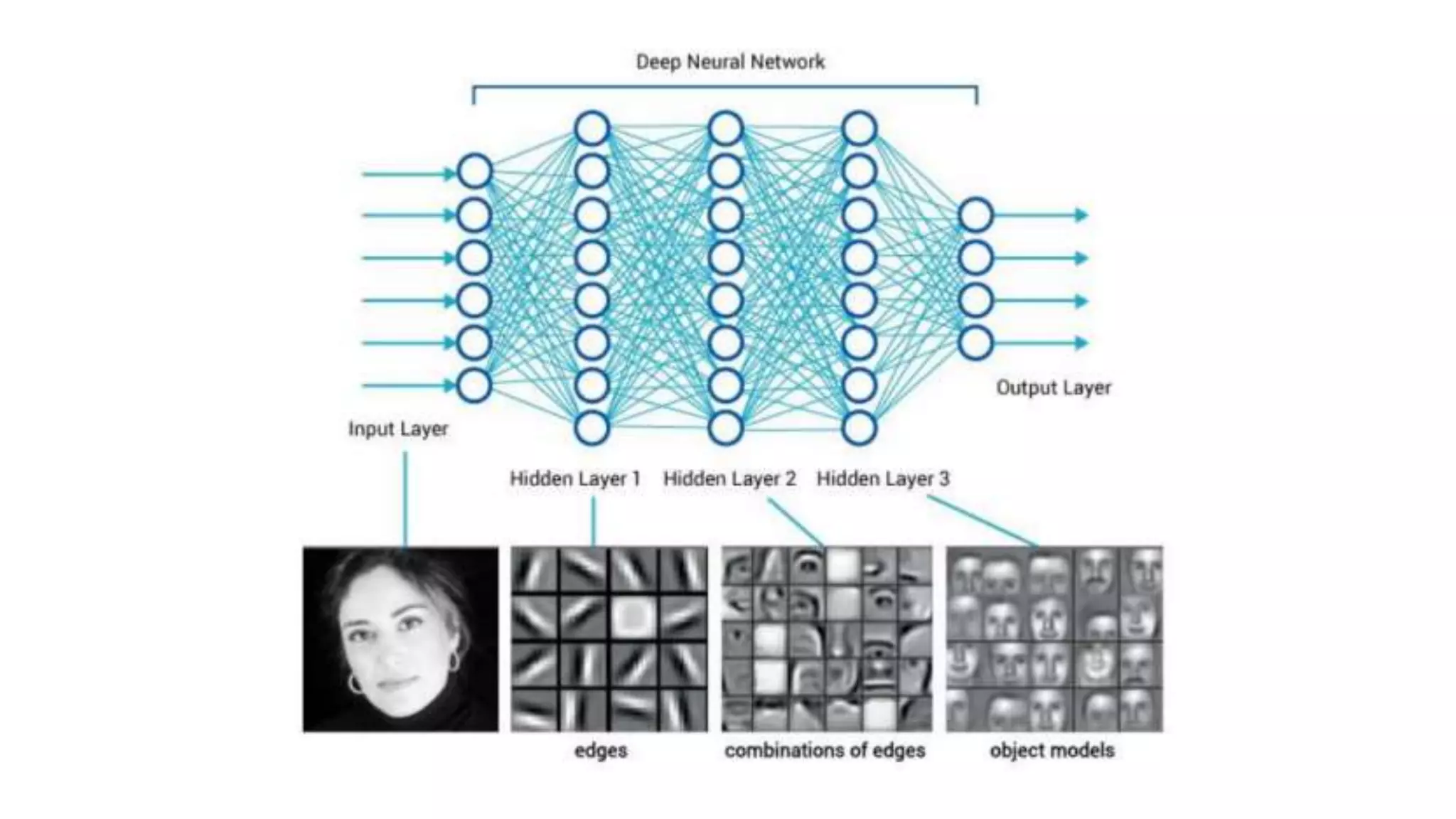

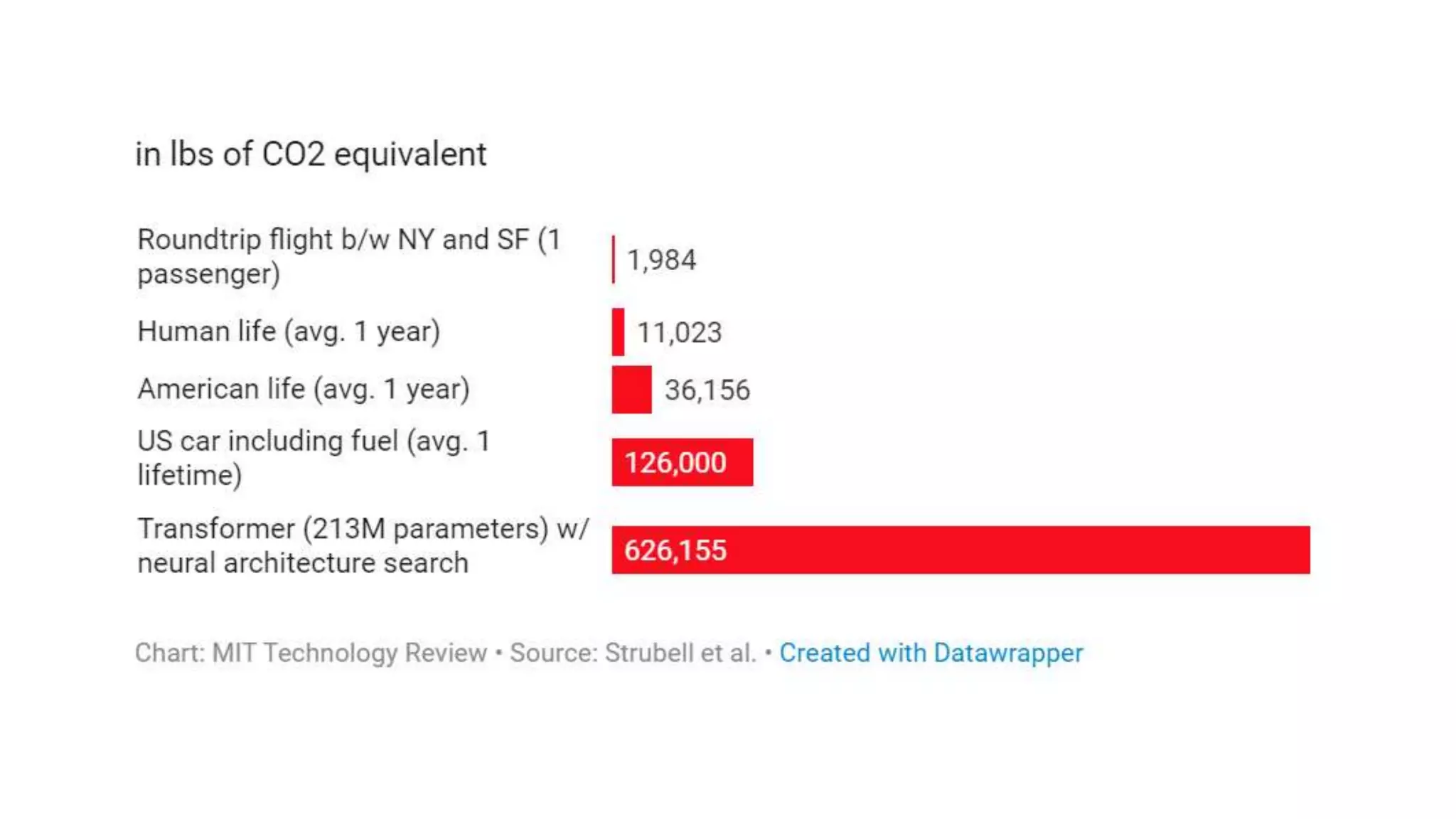

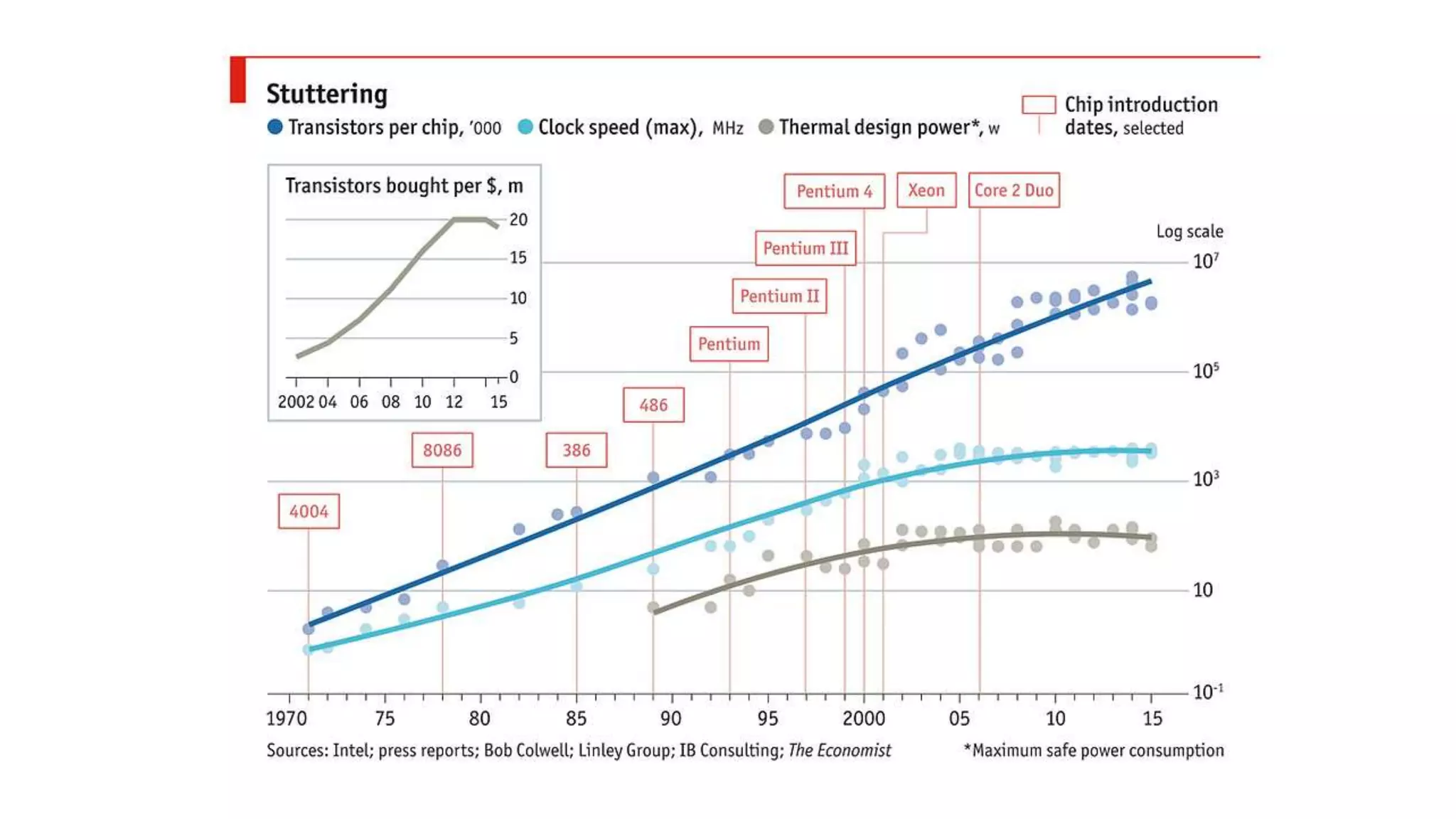

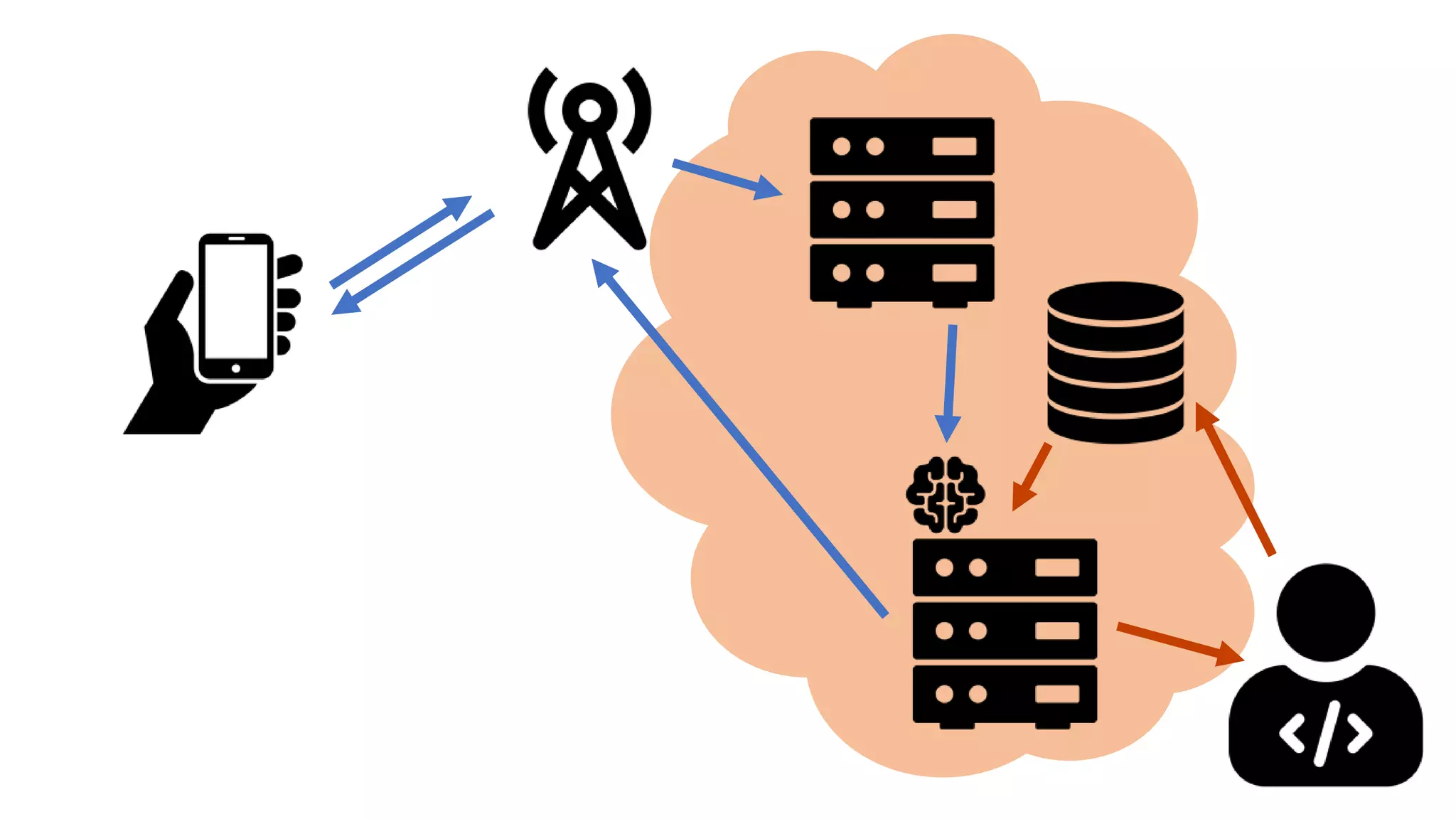

The document covers a machine learning event on 'machine learning at the edge'. It highlights potential applications and challenges, and it describes the shift away from cloud-based products driven by latency and data-privacy concerns. Key success factors include computational power and innovative hardware design for edge applications. The future of machine learning is expected to move toward smaller, more efficient models that are adapted to edge environments.

![“HW design was getting a little bit boring. […] The rise of machine learning (deep learning) has changed all that.” Pete Warden - https://petewarden.com/2019/04/14/what-machine-learning-needs-from-hardware/](https://image.slidesharecdn.com/mlontheedgecompressed-191212144027/75/Machine-learning-at-the-edge-33-2048.jpg)