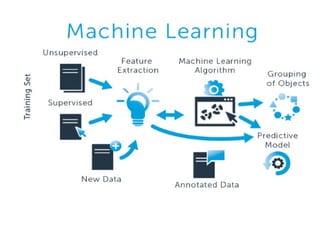

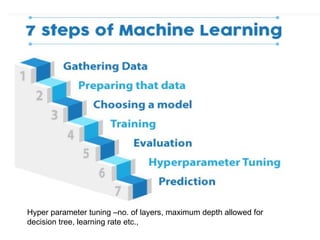

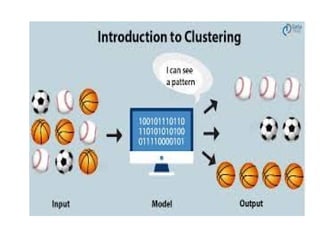

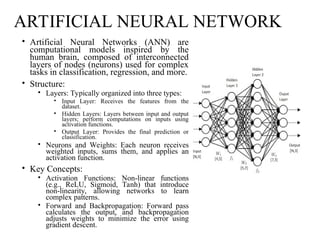

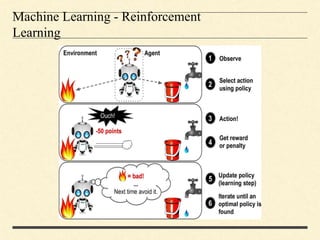

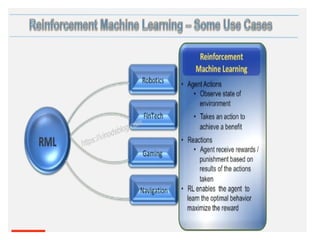

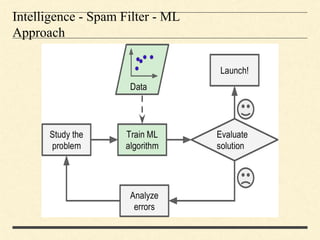

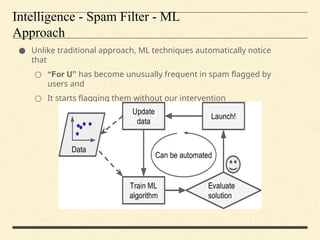

The document provides an introduction to machine learning, detailing its fundamental concepts, types (supervised, unsupervised, reinforcement learning), and real-world applications. It covers algorithms such as naive Bayes, random forest, and artificial neural networks, discussing their workings and challenges in performance. Additionally, it highlights the advantages and disadvantages of various machine learning approaches and their impact on areas like spam filtering, self-driving cars, and personalized recommendations.

![What is Machine Learning?](https://image.slidesharecdn.com/mlppt-241124153955-d03a55bb/85/Machine-Learning-and-its-Appplications-3-320.jpg)

What is Machine Learning?

> “… said to learn from experience with respect to some class of tasks, and a performance measure P, if [the learner’s] performance at tasks in the class as measured by P, improves with experience.”
>
> Tom Mitchell, 1997

> Field of study that gives “computers the ability to learn without being explicitly programmed”
>
> Arthur Samuel, 1959
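The 1997 definition quoted above can be made concrete with a toy sketch. Everything in it is an illustrative assumption, not something taken from the slides: the task is classifying integers against a hidden threshold, the experience is a short list of labeled examples, and the performance measure P is accuracy on a fixed test set. The names `HIDDEN_THRESHOLD`, `learn_threshold`, and `accuracy` are hypothetical.

```python
# Task: classify integers 0..99 as 1 if x >= HIDDEN_THRESHOLD, else 0.
# Experience: labeled examples (x, label).
# Performance measure P: accuracy on a fixed test set.

HIDDEN_THRESHOLD = 70  # hypothetical ground truth, never shown to the learner


def true_label(x):
    """Label assigned by the (hidden) rule the learner is trying to recover."""
    return 1 if x >= HIDDEN_THRESHOLD else 0


def learn_threshold(examples):
    """Estimate the threshold as the midpoint between the largest
    negative example and the smallest positive example seen so far."""
    max_neg, min_pos = 0, 100  # widest possible bounds before any experience
    for x, label in examples:
        if label == 0:
            max_neg = max(max_neg, x)
        else:
            min_pos = min(min_pos, x)
    return (max_neg + min_pos) / 2


def accuracy(threshold, test_points):
    """Performance measure P: fraction of test points classified correctly."""
    correct = sum(
        (1 if x >= threshold else 0) == true_label(x) for x in test_points
    )
    return correct / len(test_points)


# A small, hand-picked stream of labeled examples (the "experience").
experience = [(x, true_label(x)) for x in (40, 90, 60, 78, 68, 72)]
test_points = range(100)

# Measure P after the learner has seen 0, 2, 4, and 6 examples.
accuracies = [
    accuracy(learn_threshold(experience[:n]), test_points) for n in (0, 2, 4, 6)
]
print(accuracies)  # [0.8, 0.95, 0.99, 1.0]
```

The decision rule is never written into the learner; it is recovered from the examples, which is also the point of Samuel's phrase about not being explicitly programmed. The example stream is chosen so that accuracy rises at each checkpoint; with arbitrary data, a midpoint estimate like this one can temporarily get worse before it gets better.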