This document proposes a reference architecture for providing high availability across multiple data centers using MariaDB and related open source tools. It summarizes:

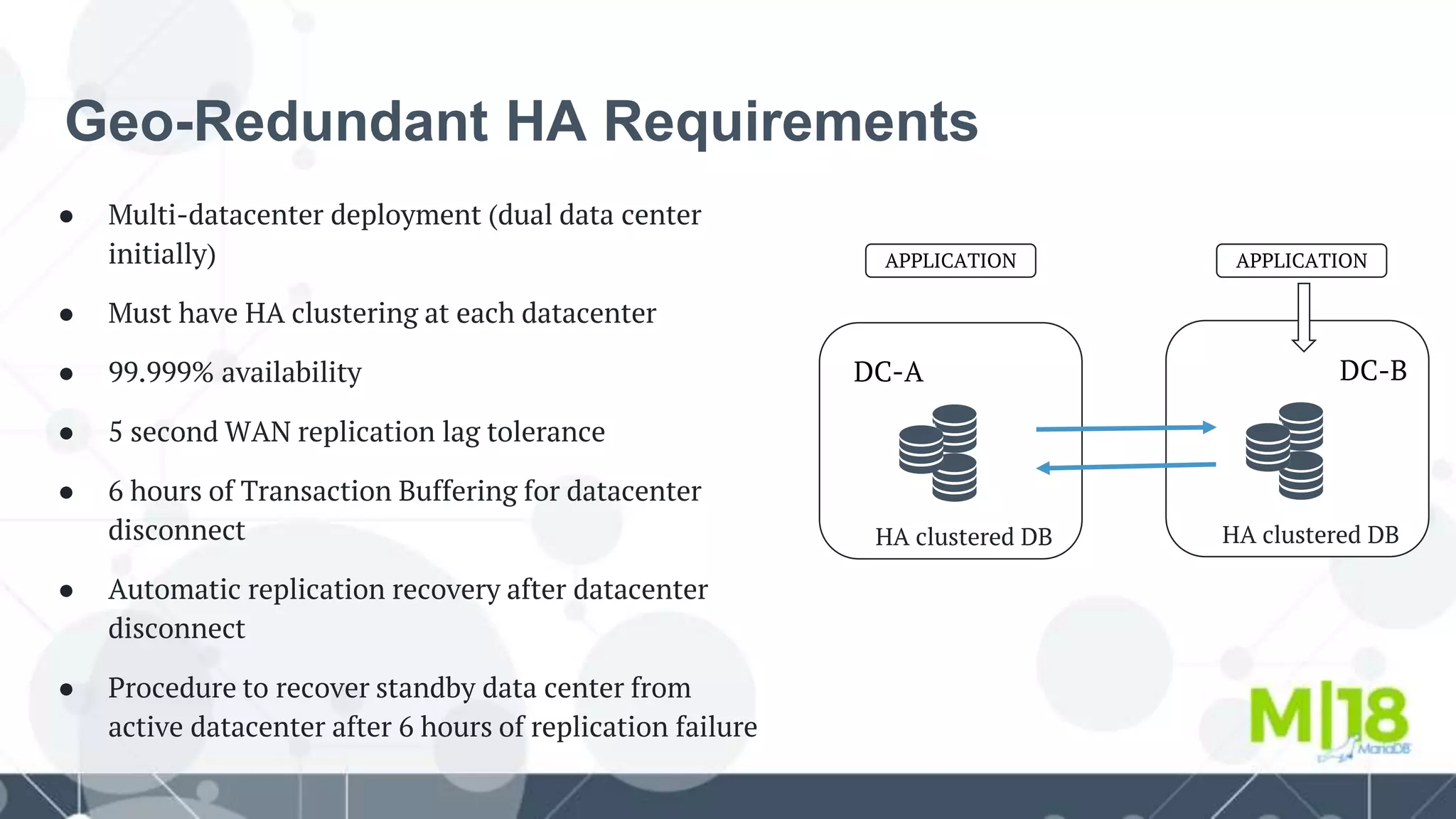

- The need for a geo-redundant, highly available database architecture at Nokia that can support multiple product units.

- An evaluation of alternatives, including Galera clusters and master-master replication between data centers.

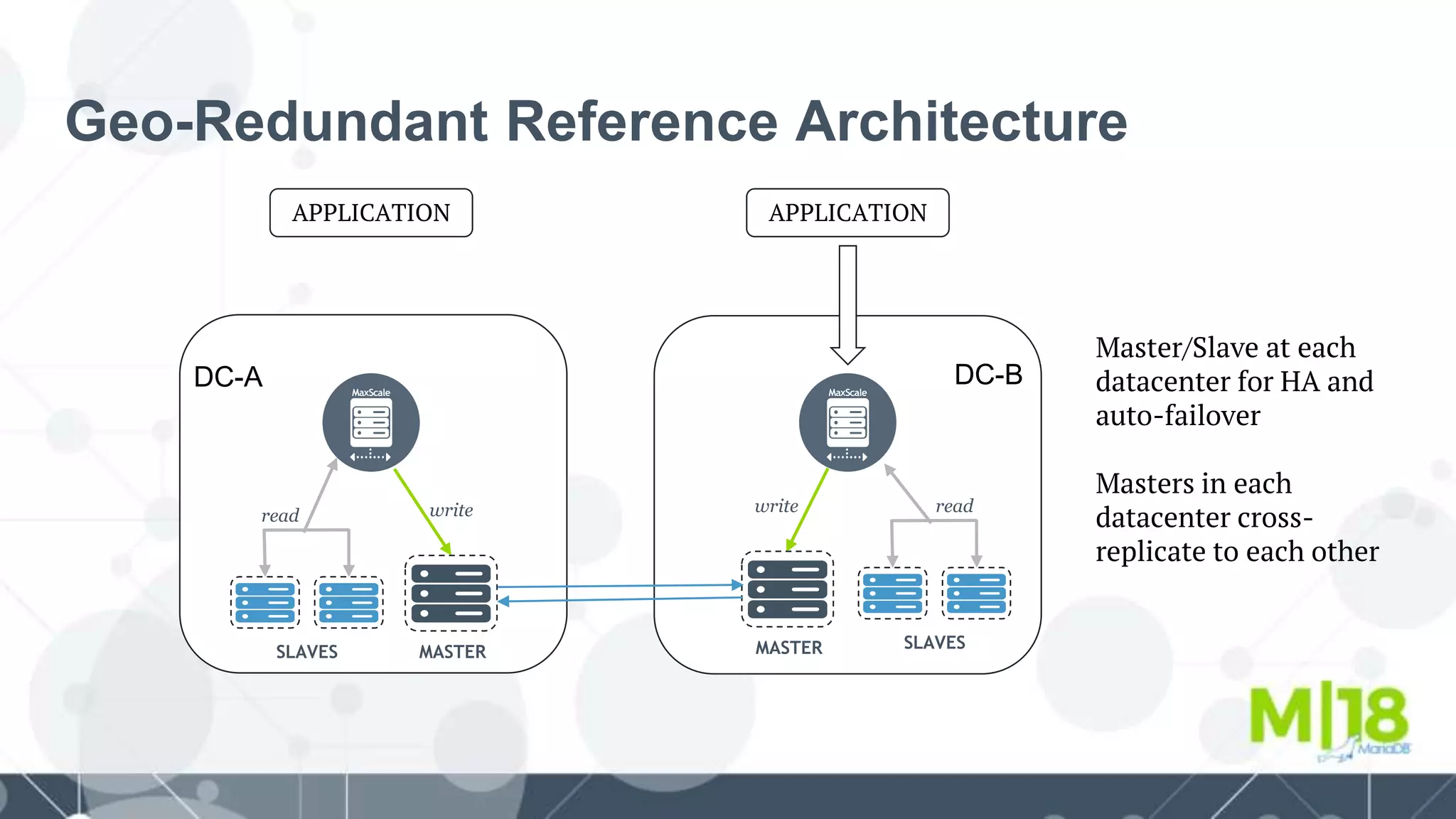

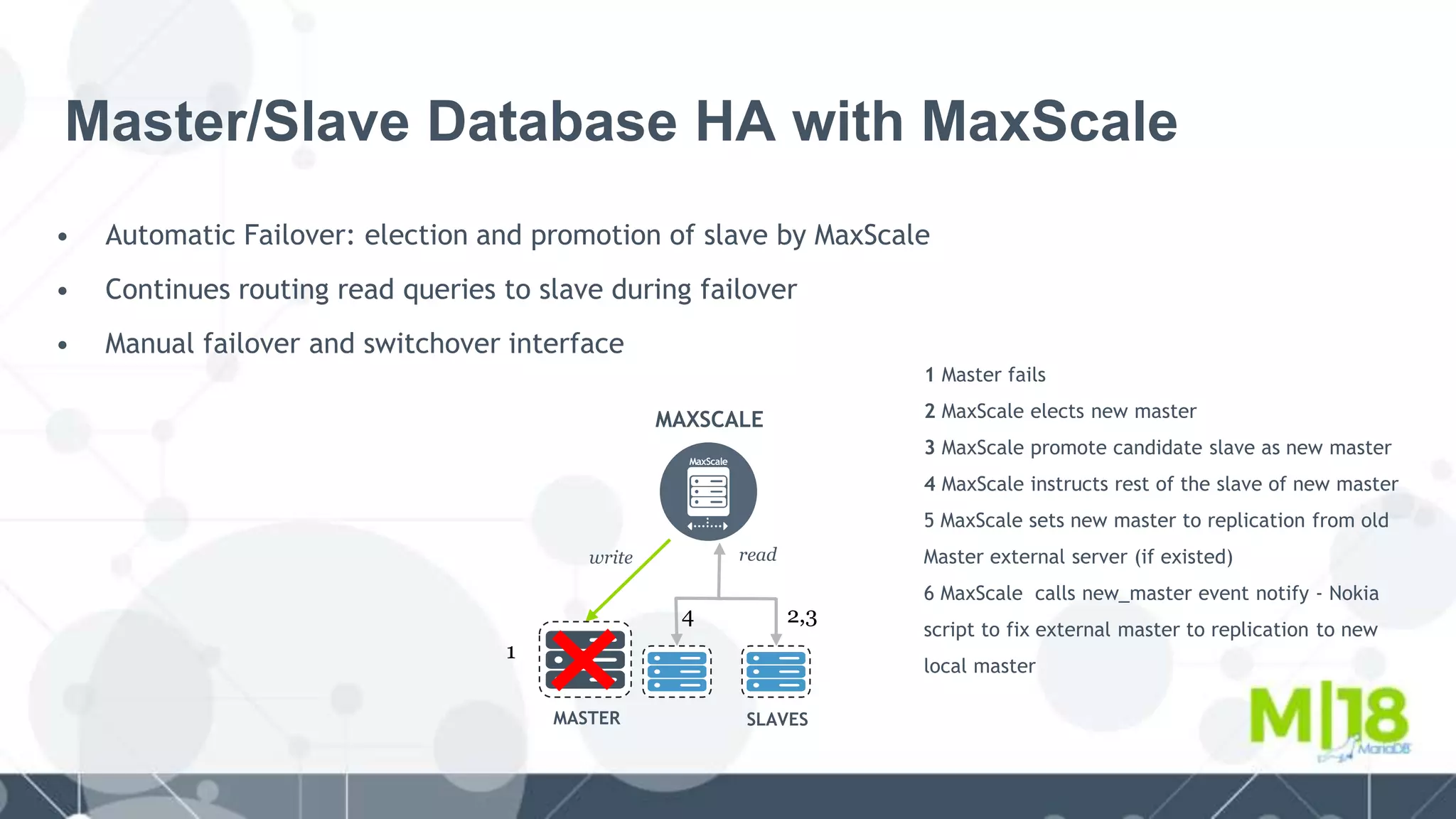

- A proposed architecture that uses MaxScale with local master-slave replication within each data center, plus cross-data-center replication between the masters for redundancy.

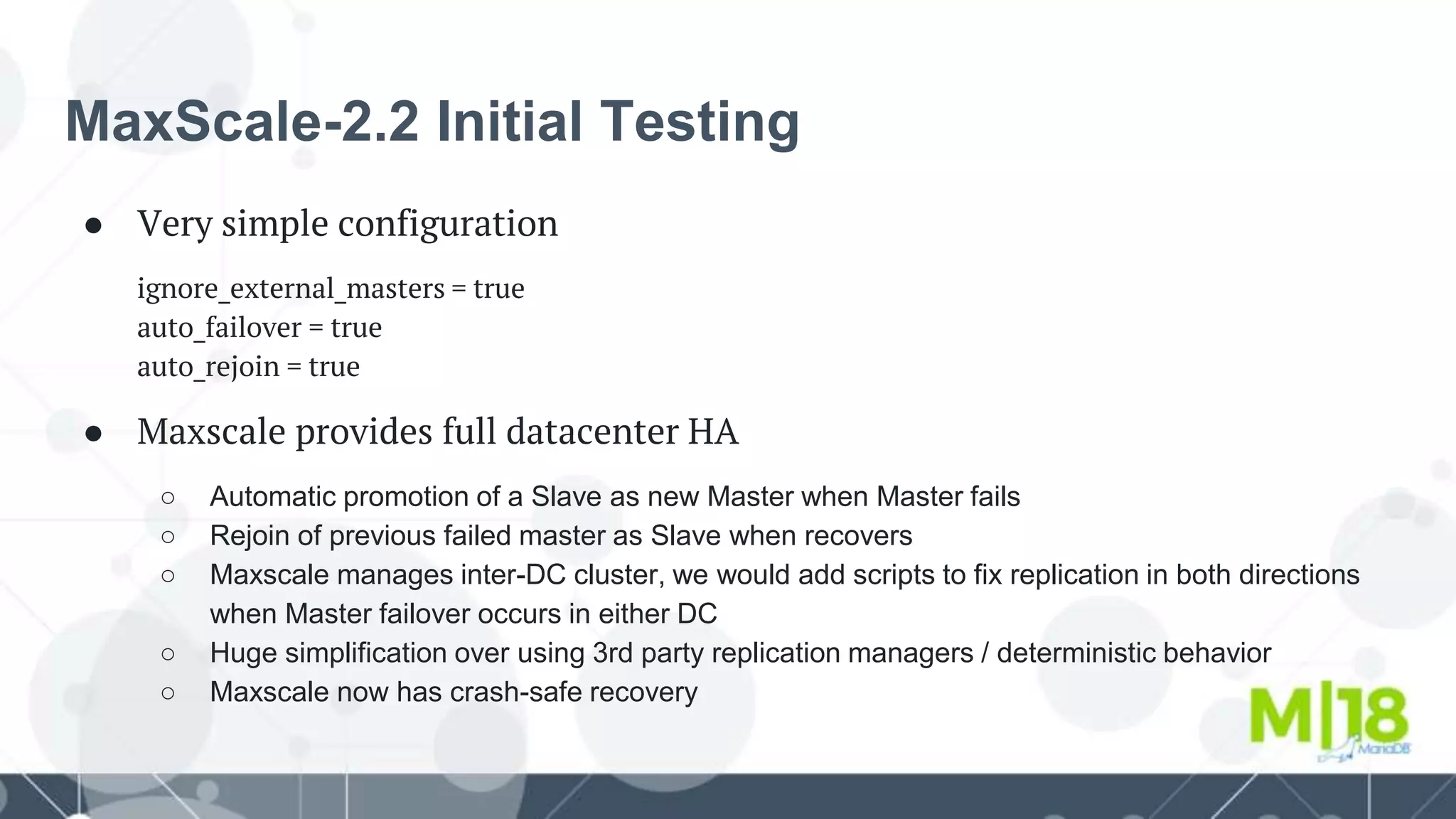

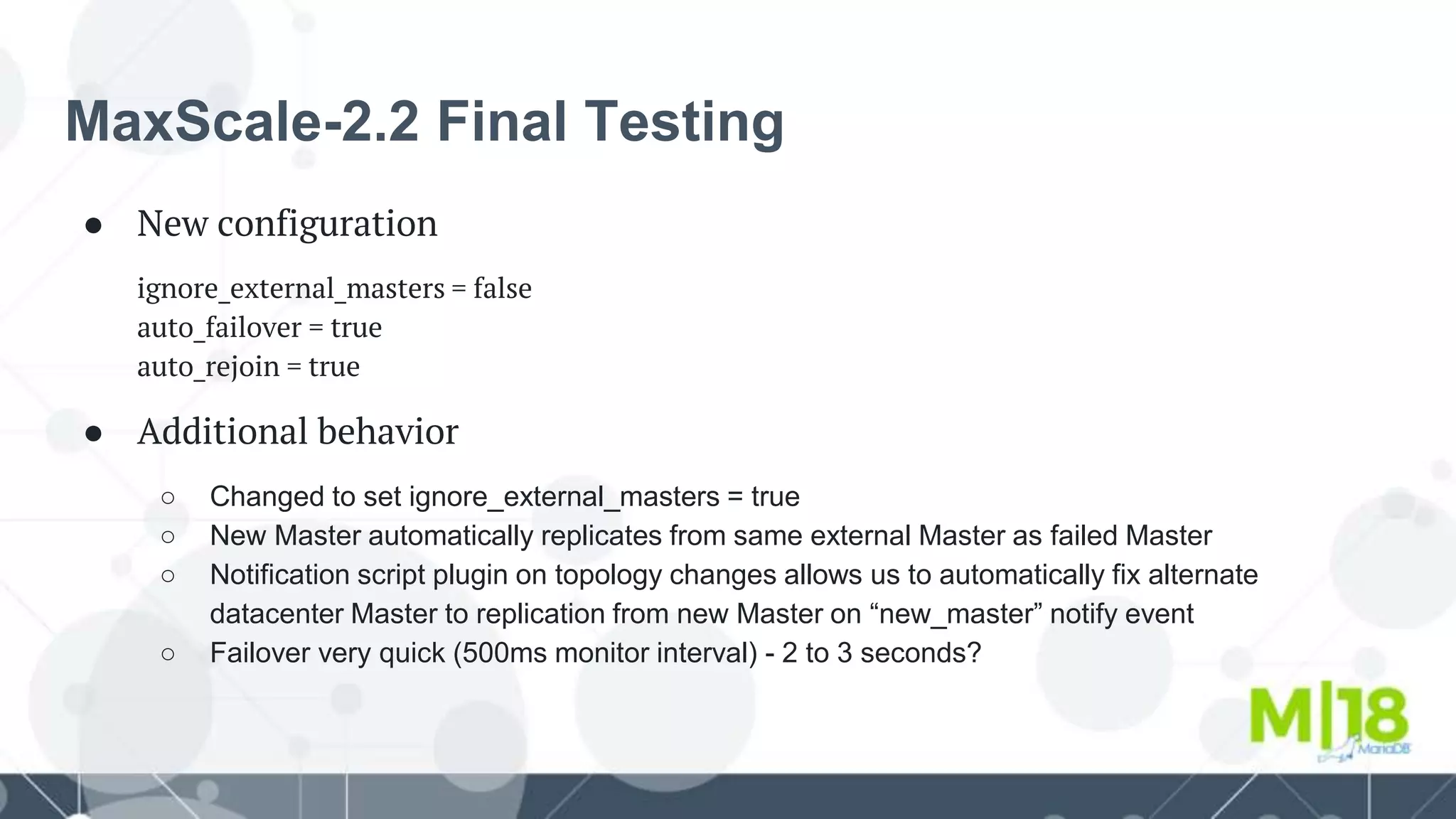

- Testing and development of MaxScale plugins and scripts to support automatic failover and recovery after failures within or between data centers.

- Plans for containerized deployment of the database clusters and MaxScale using Kubernetes with additional