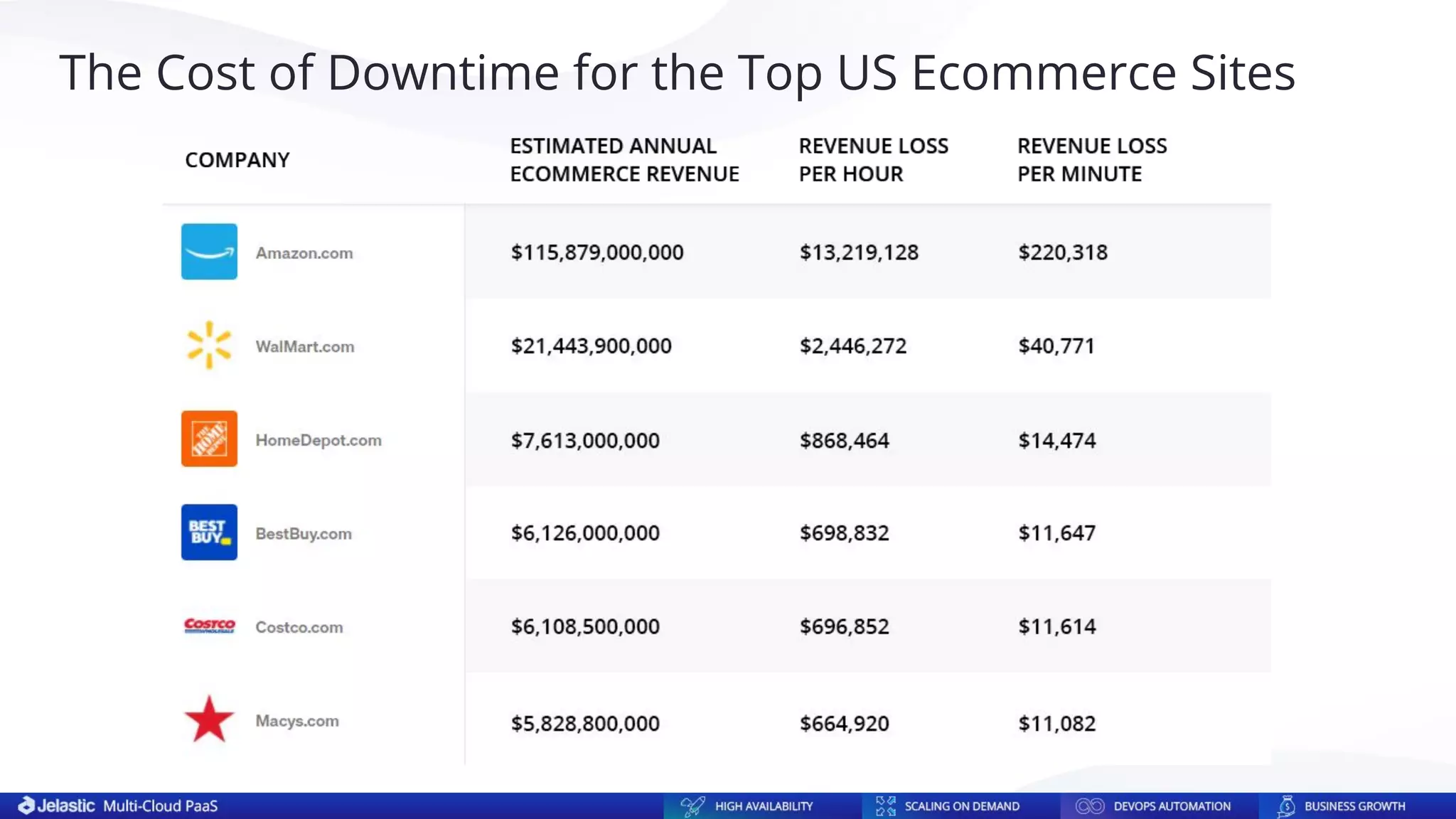

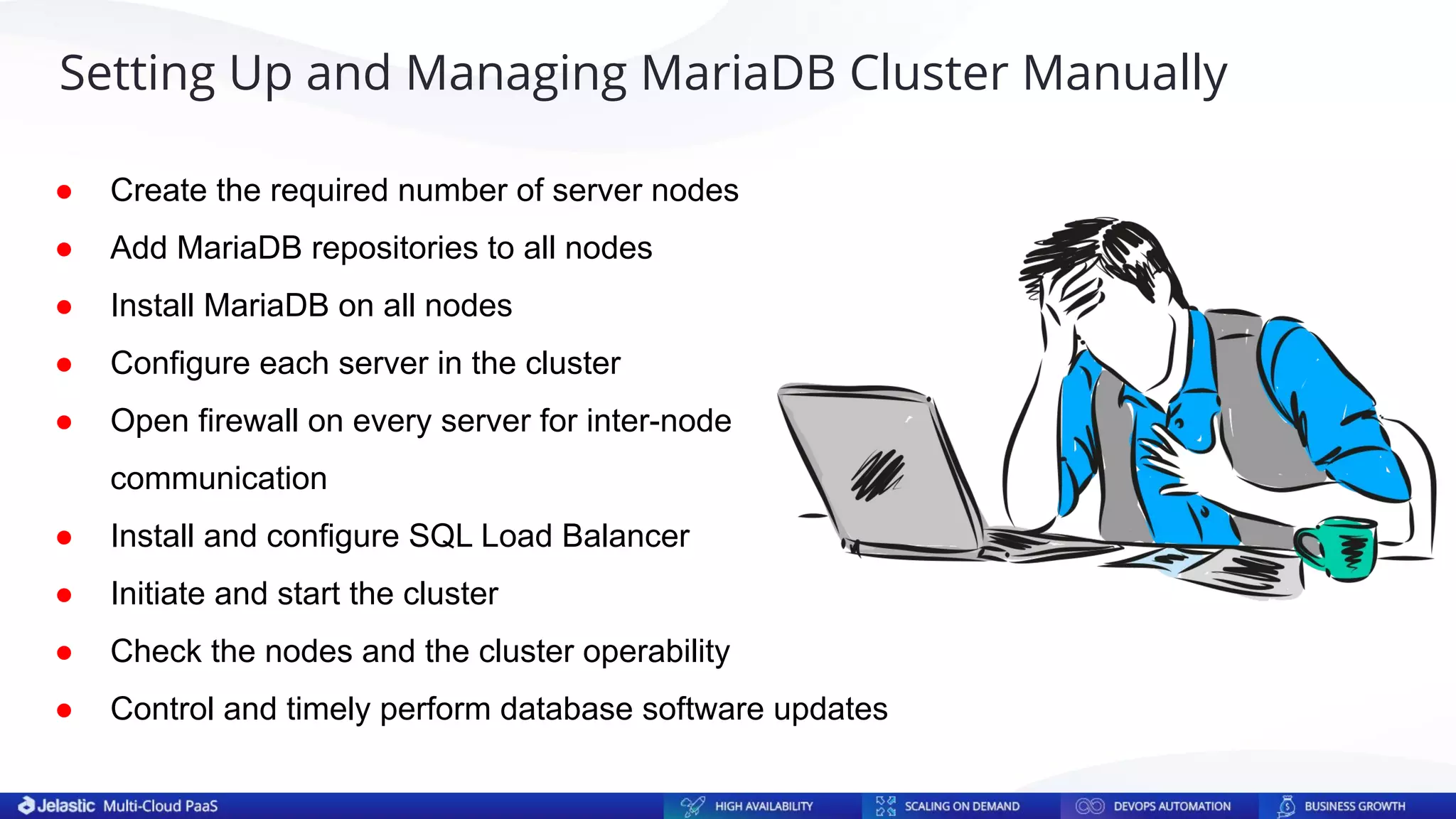

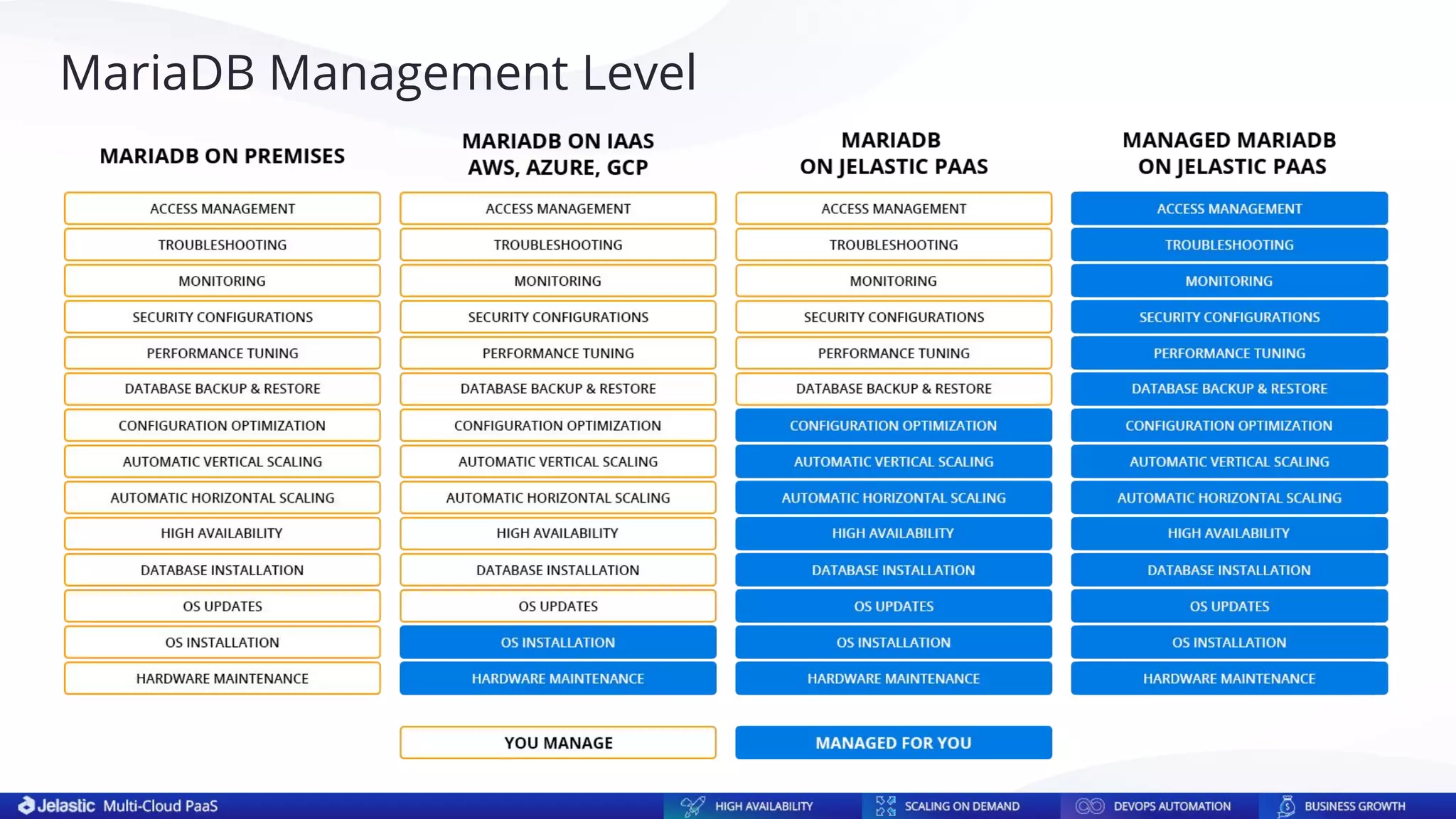

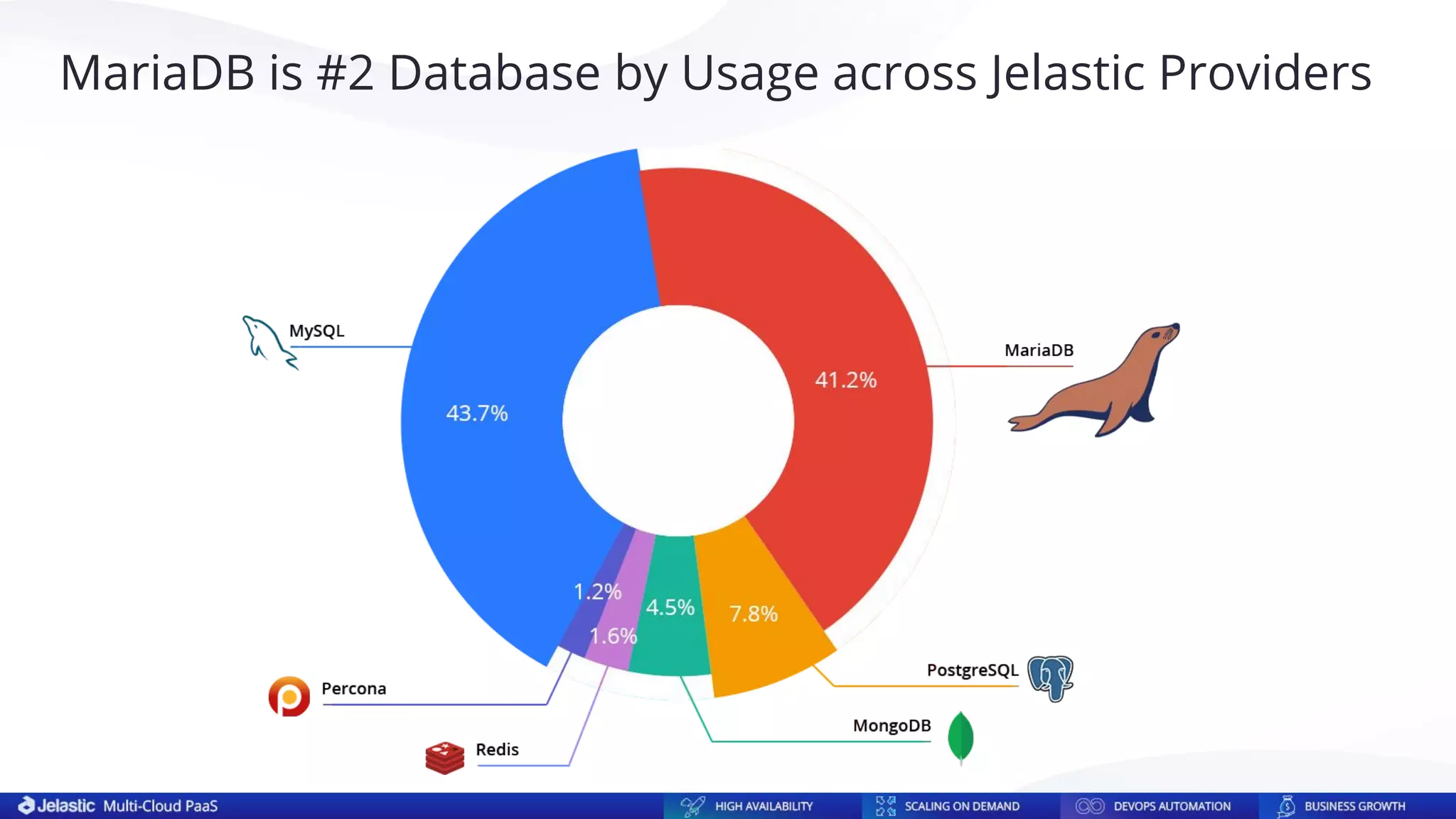

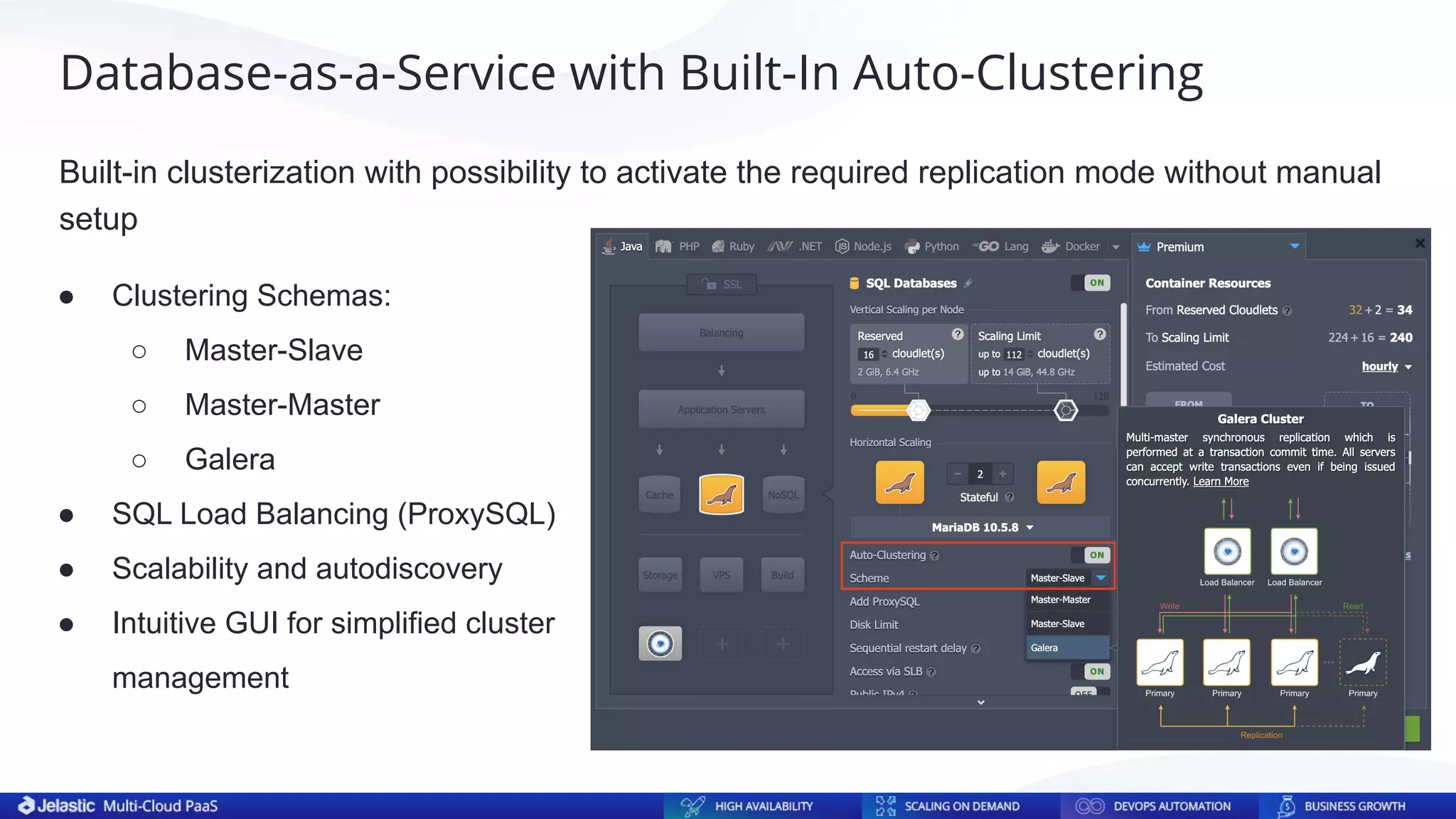

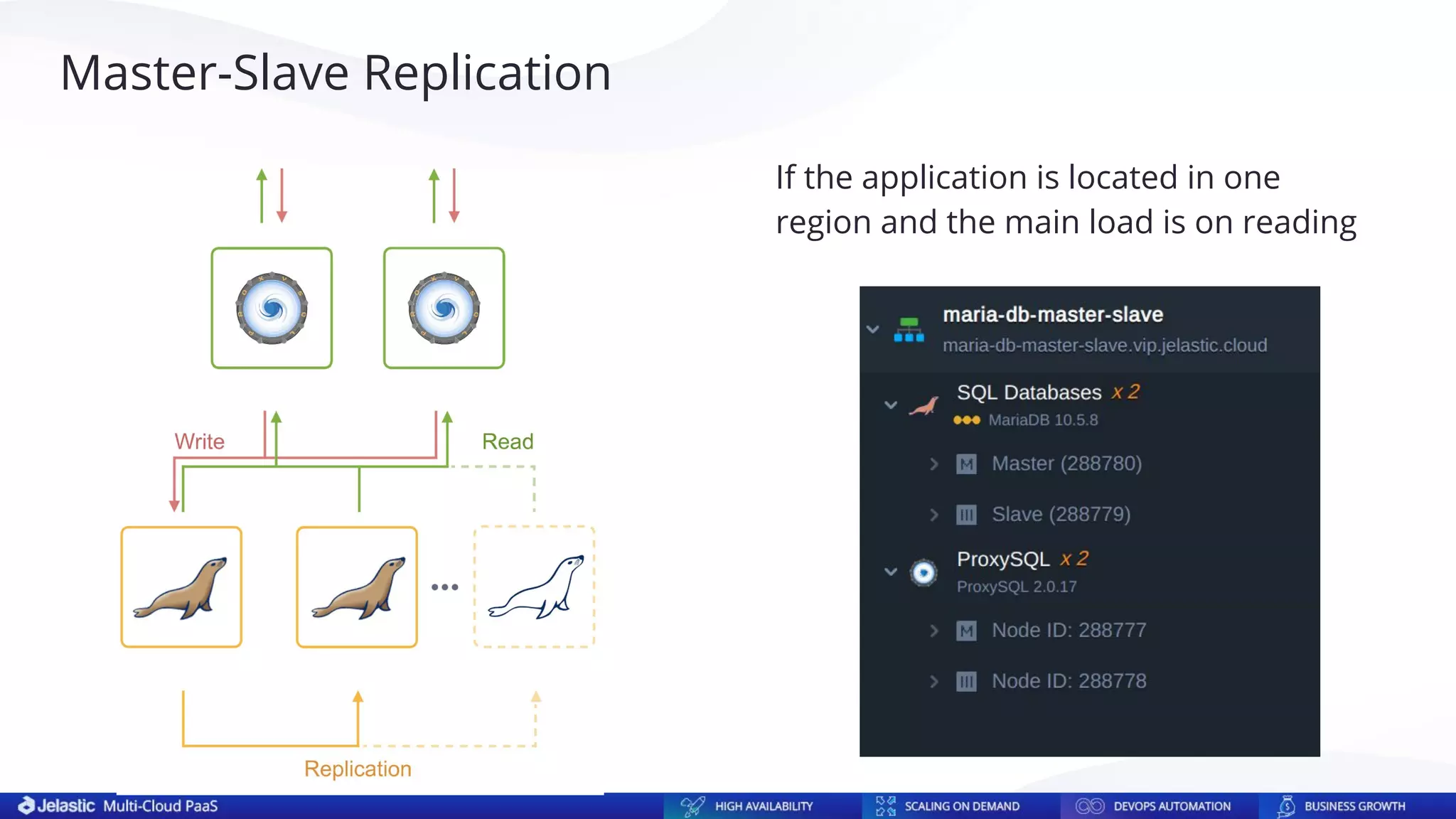

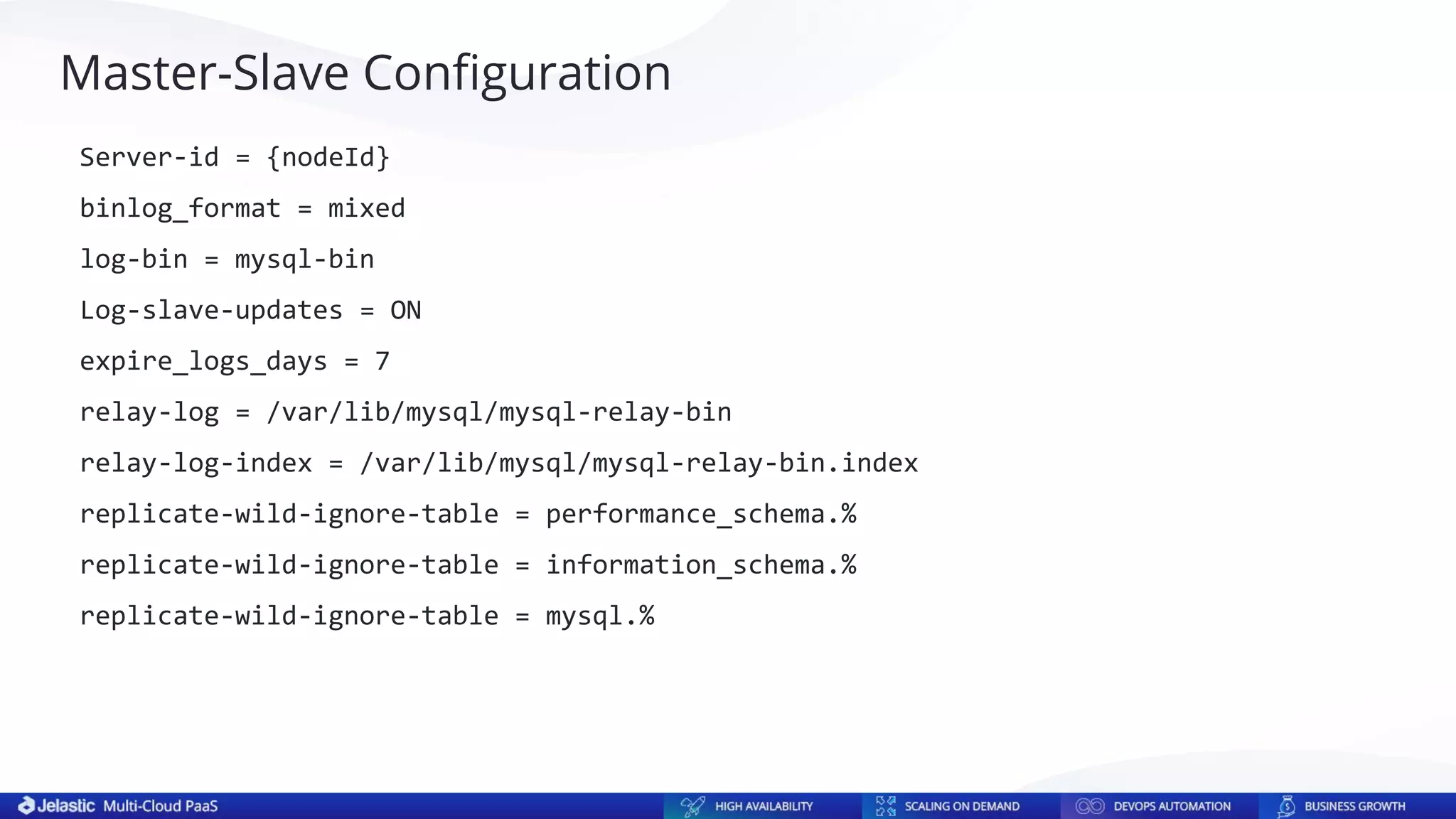

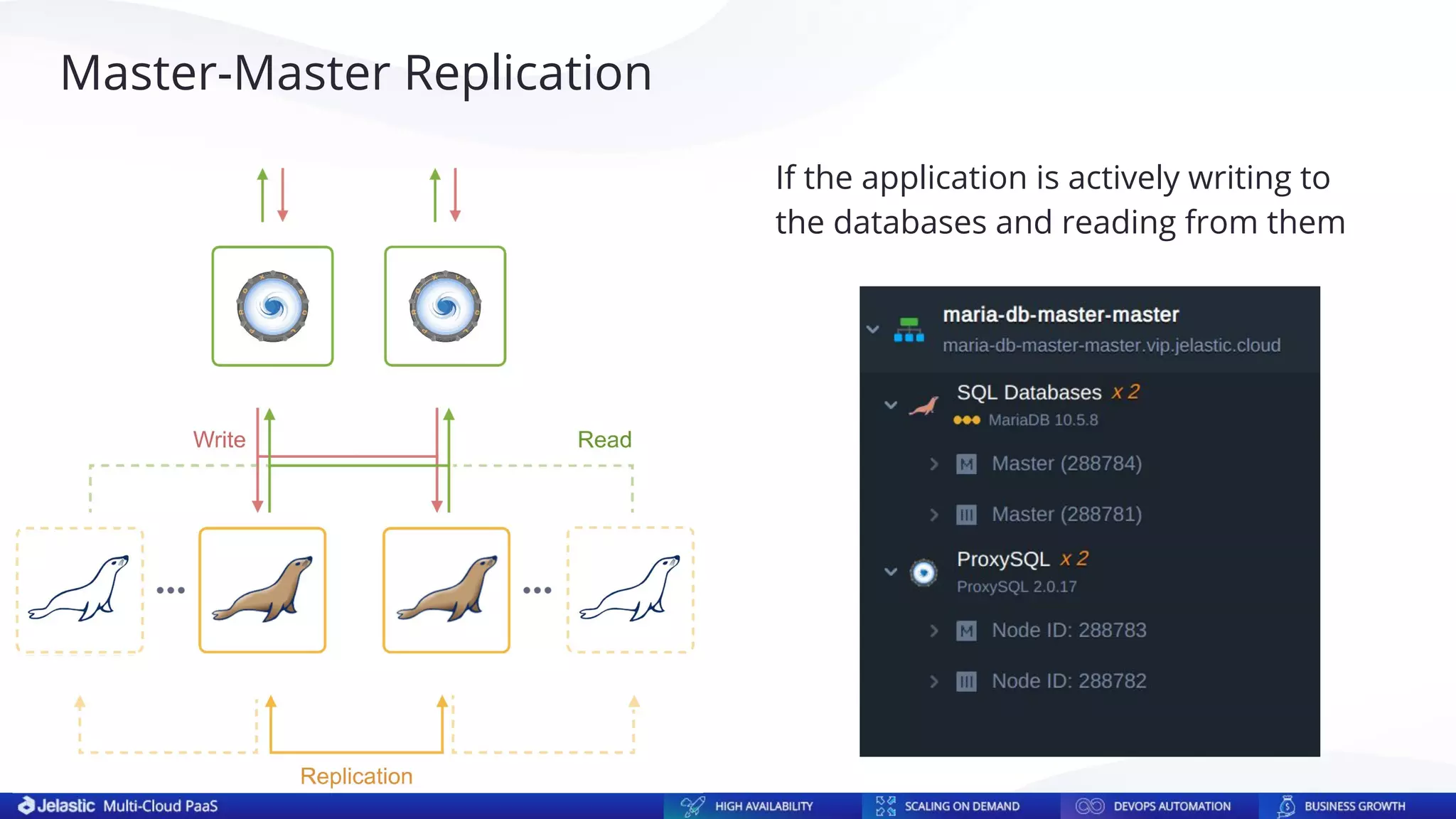

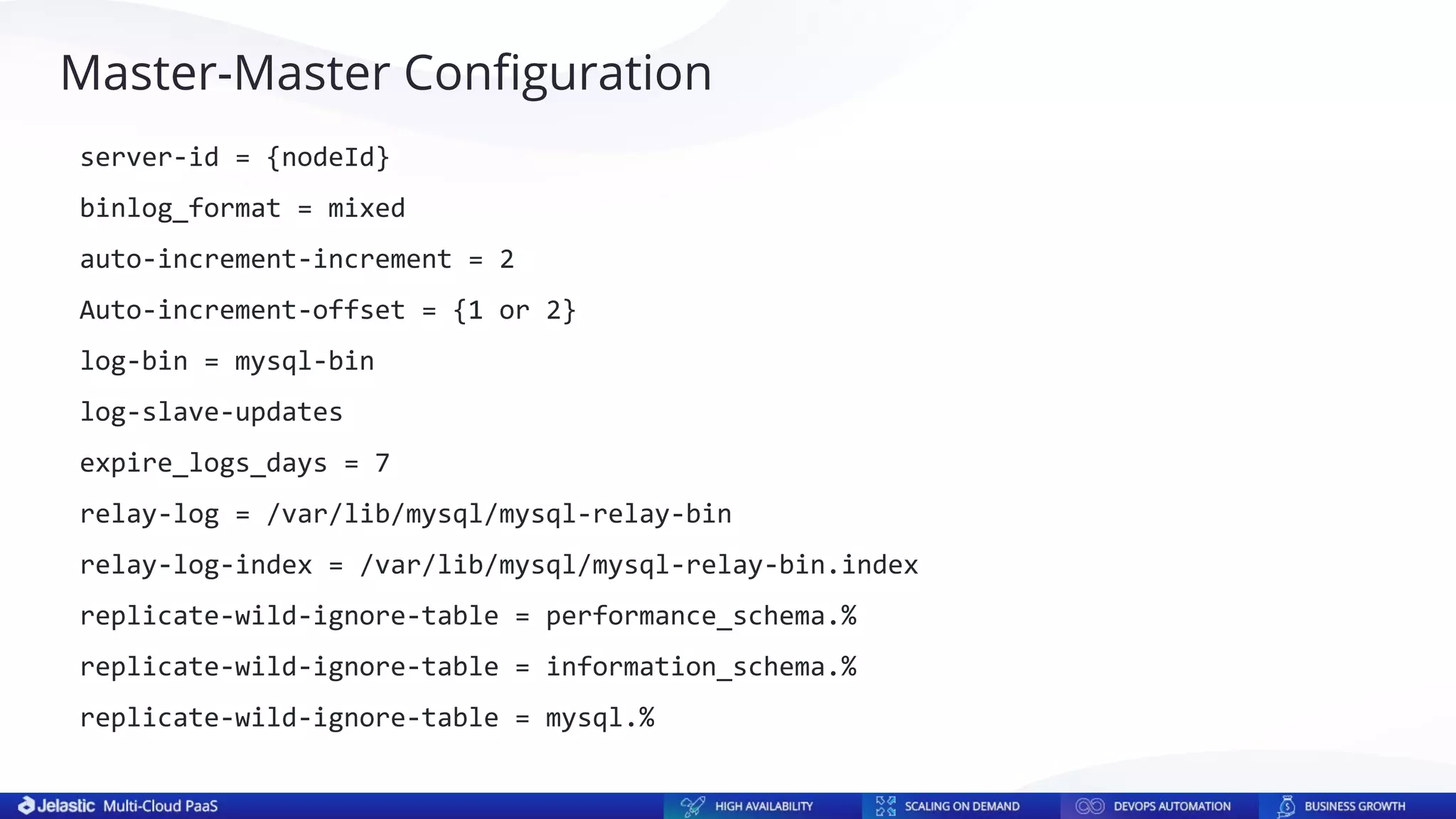

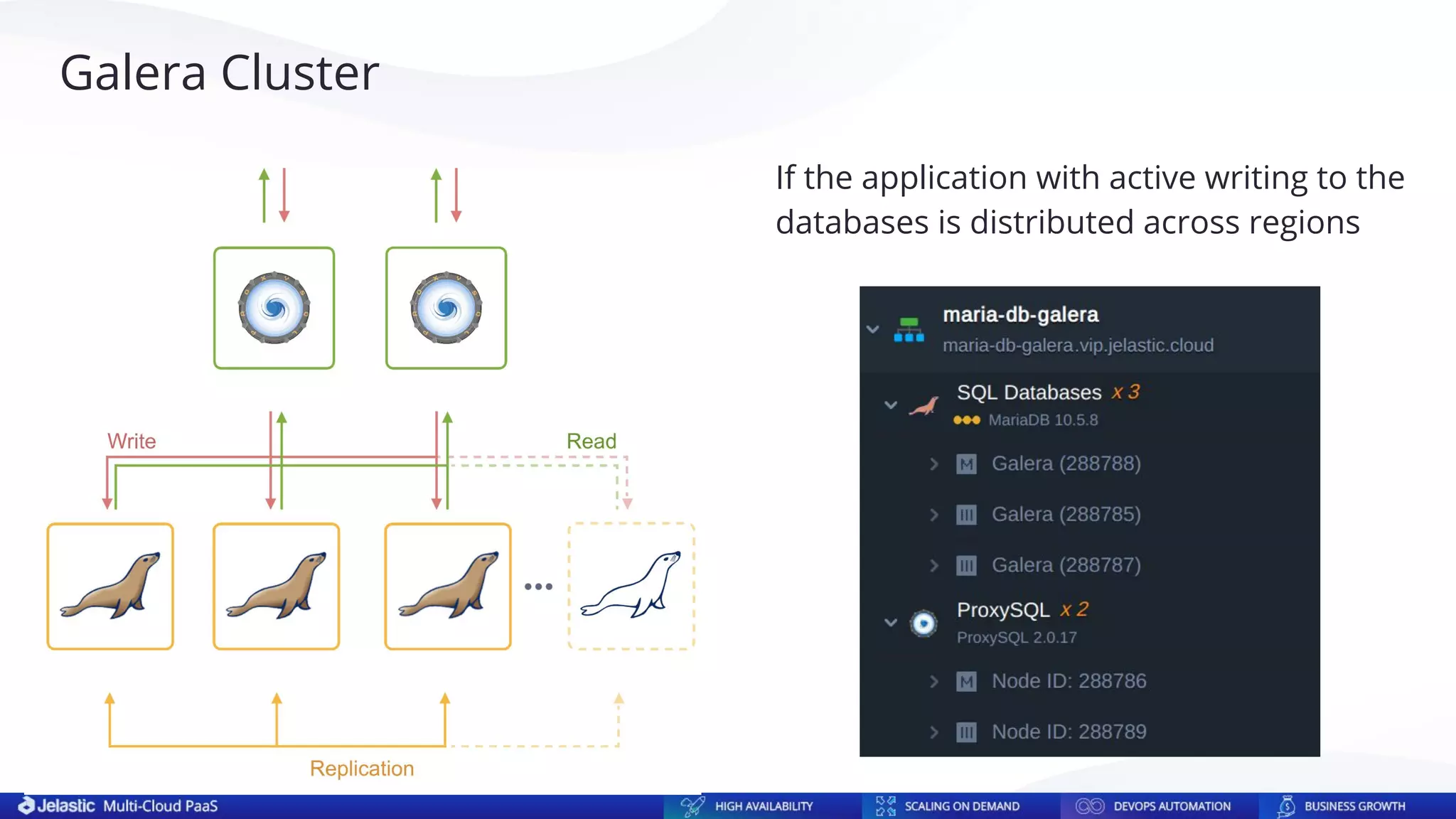

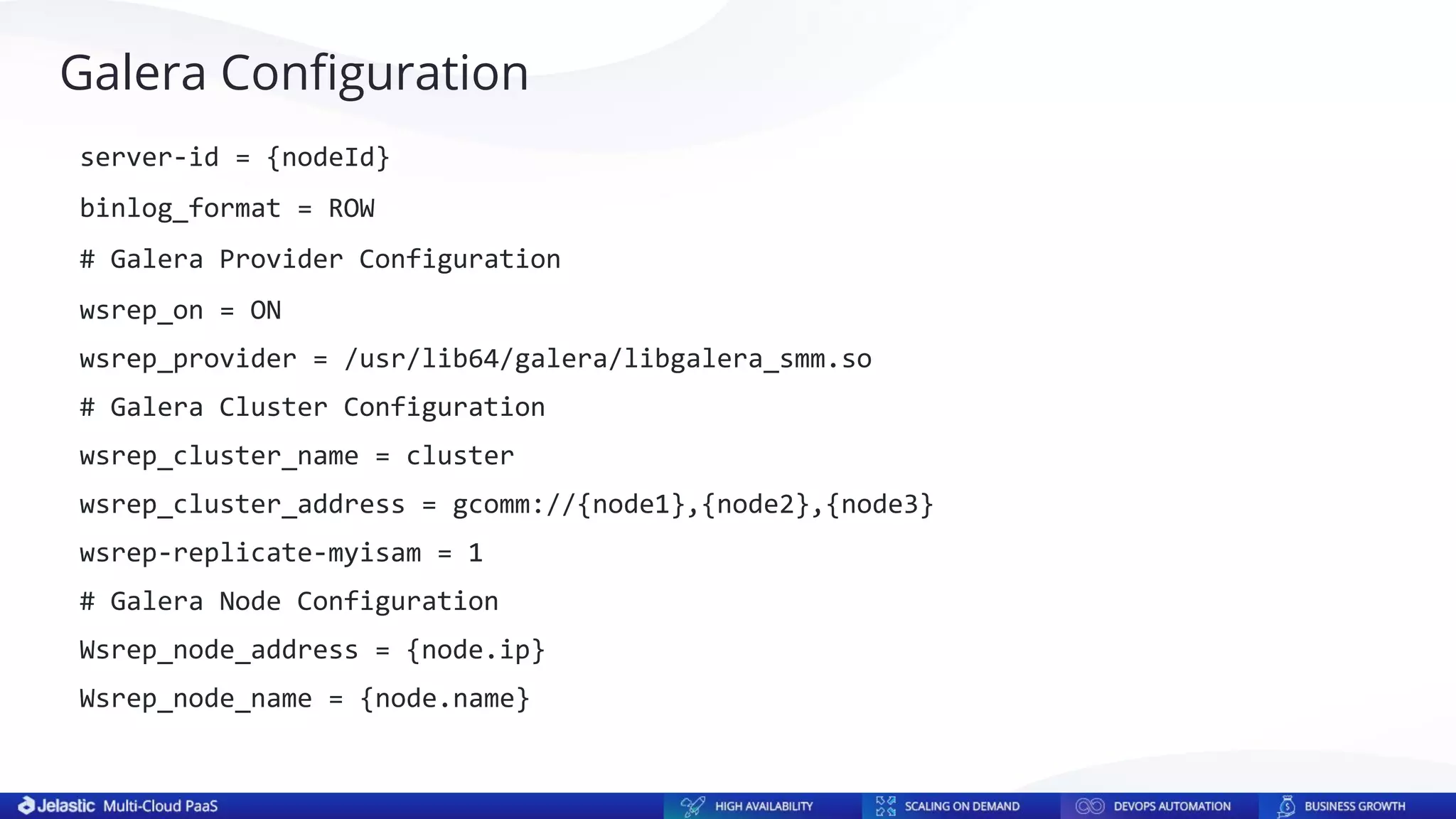

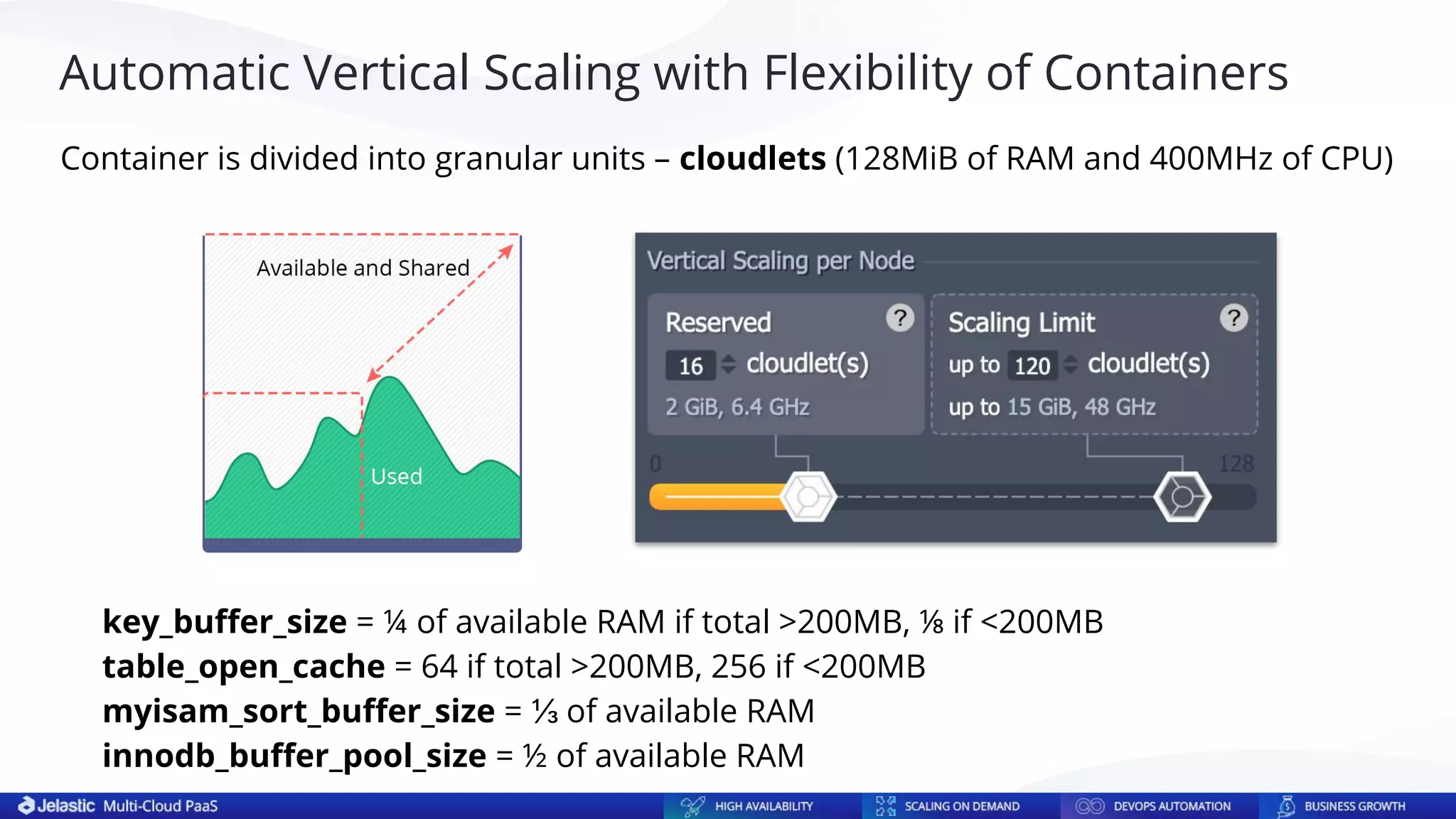

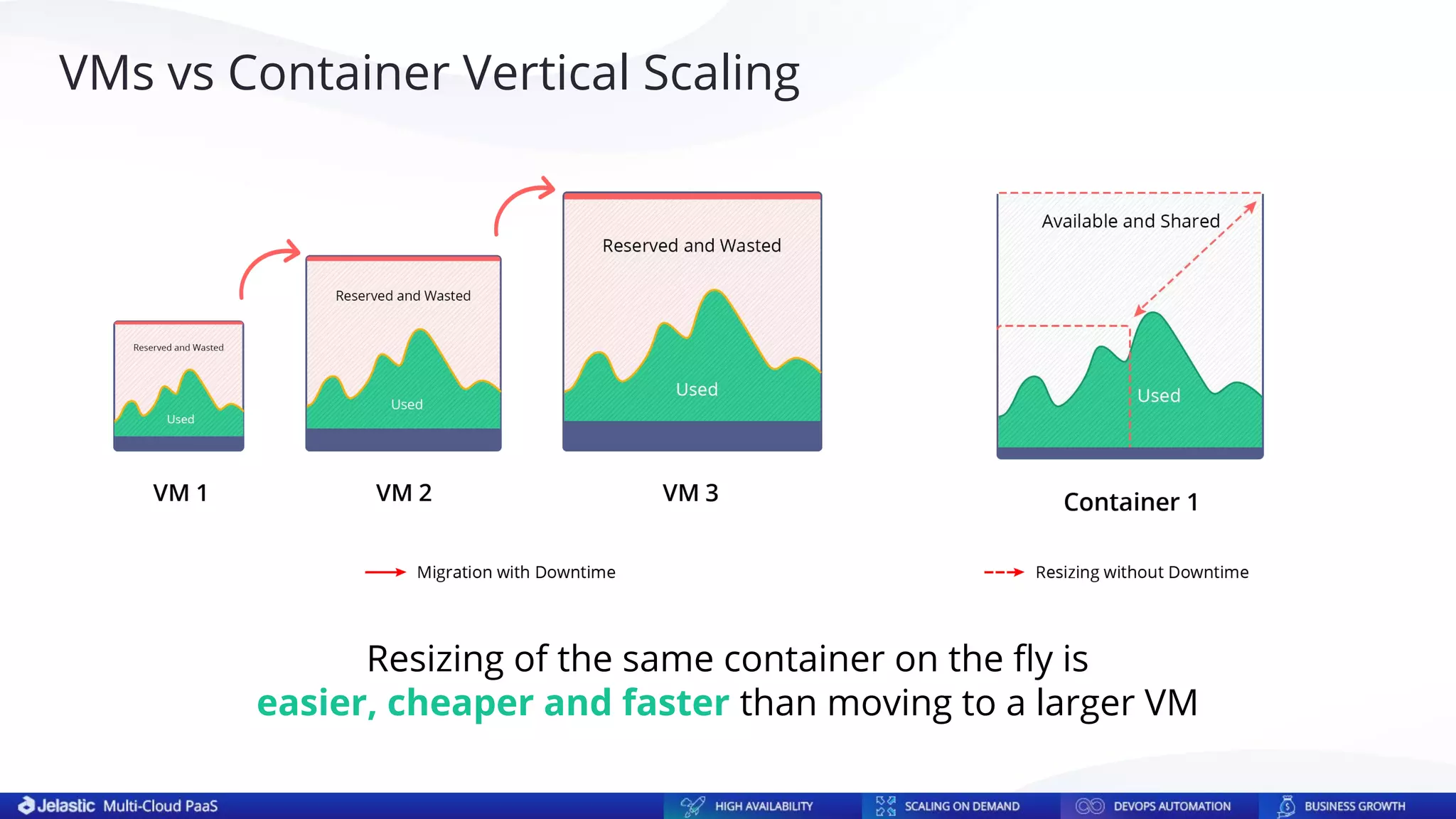

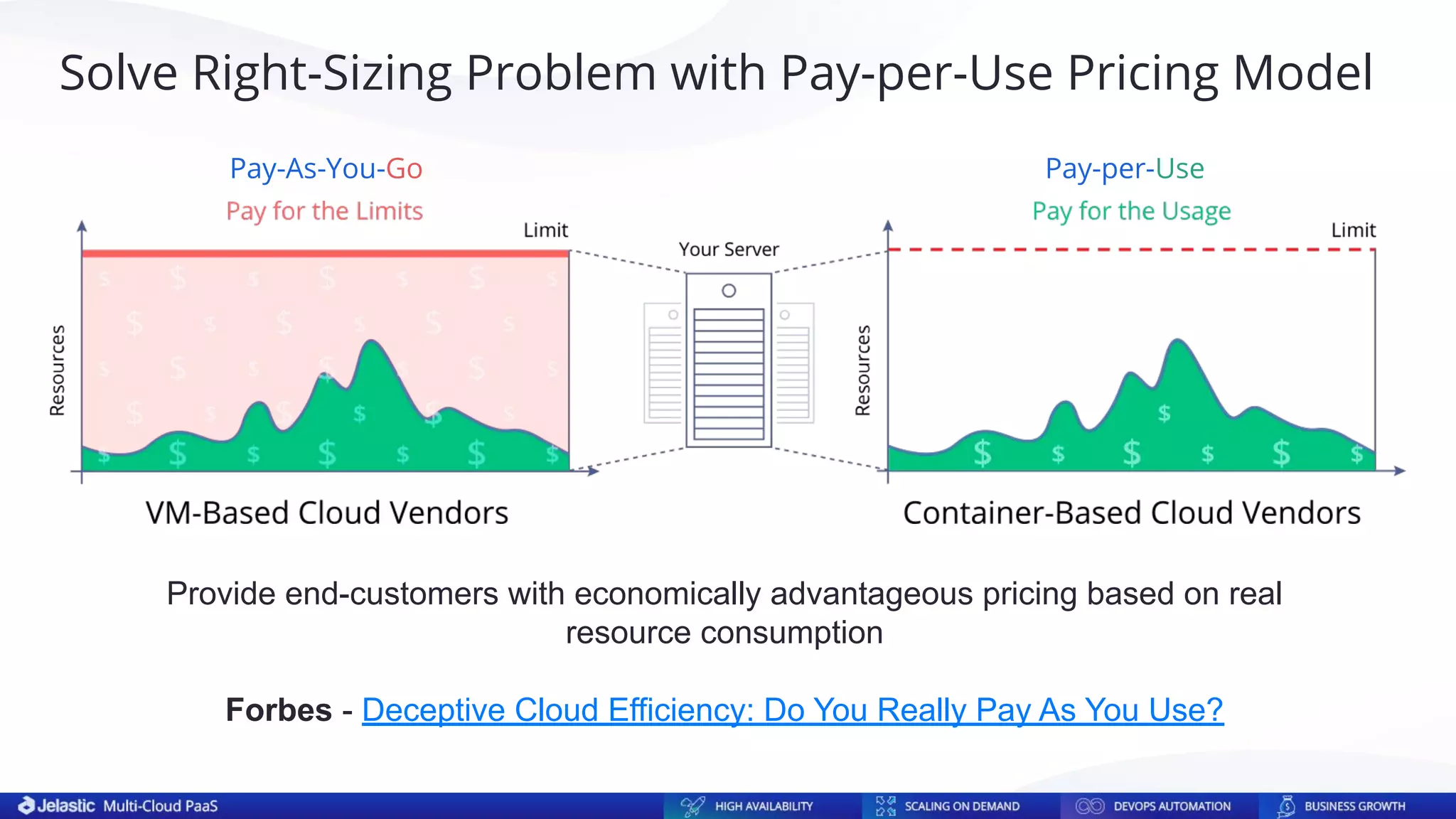

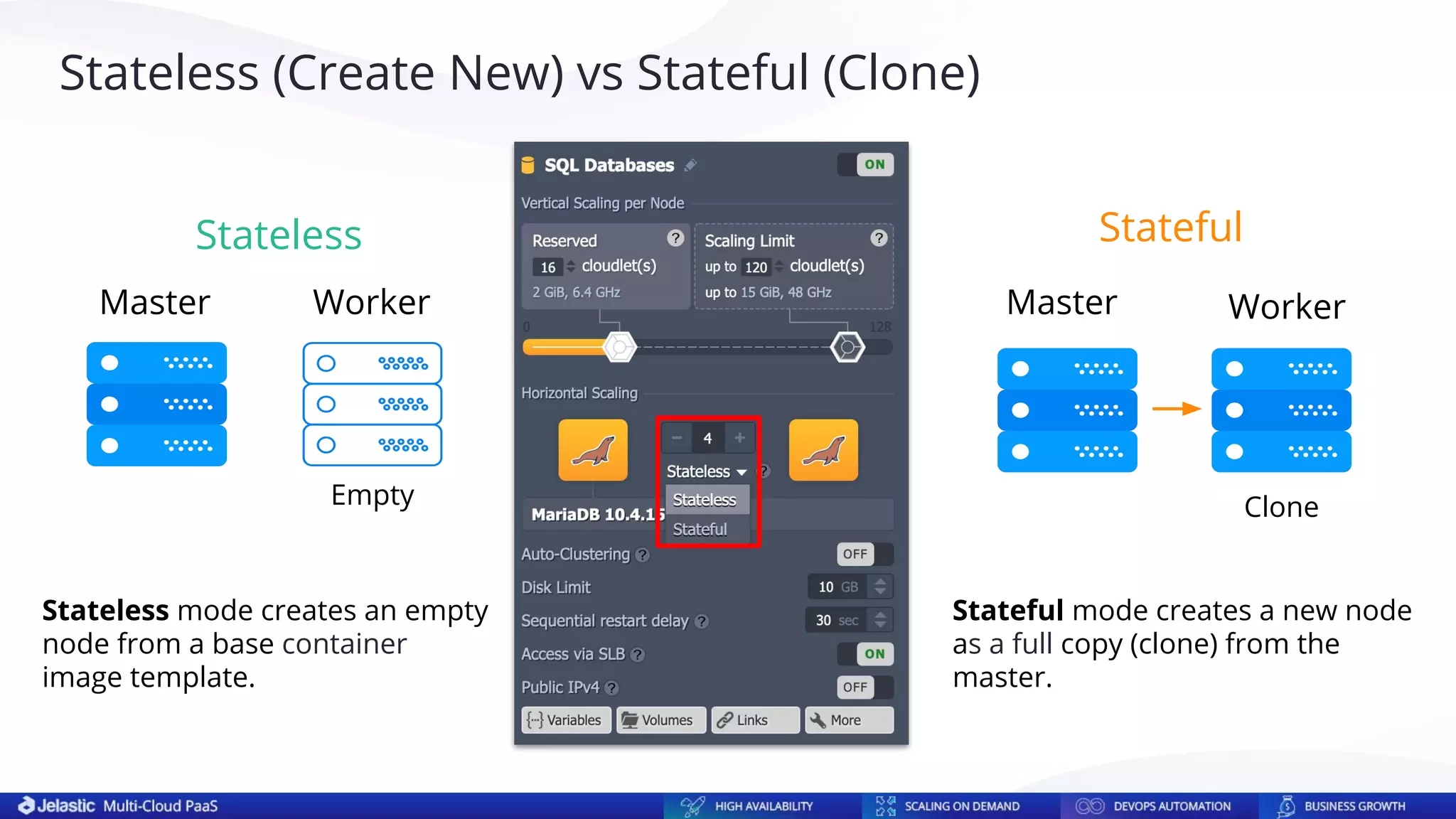

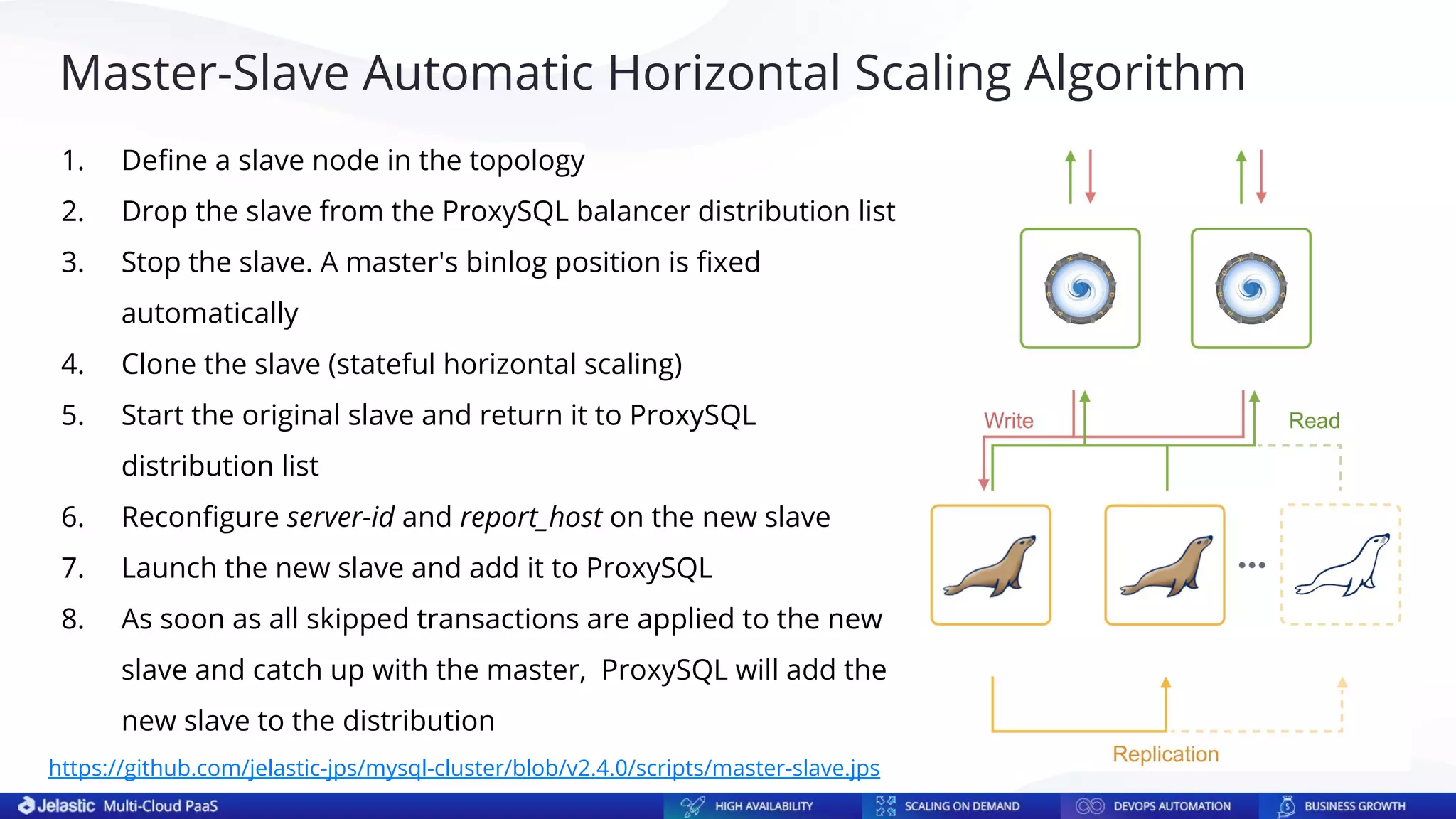

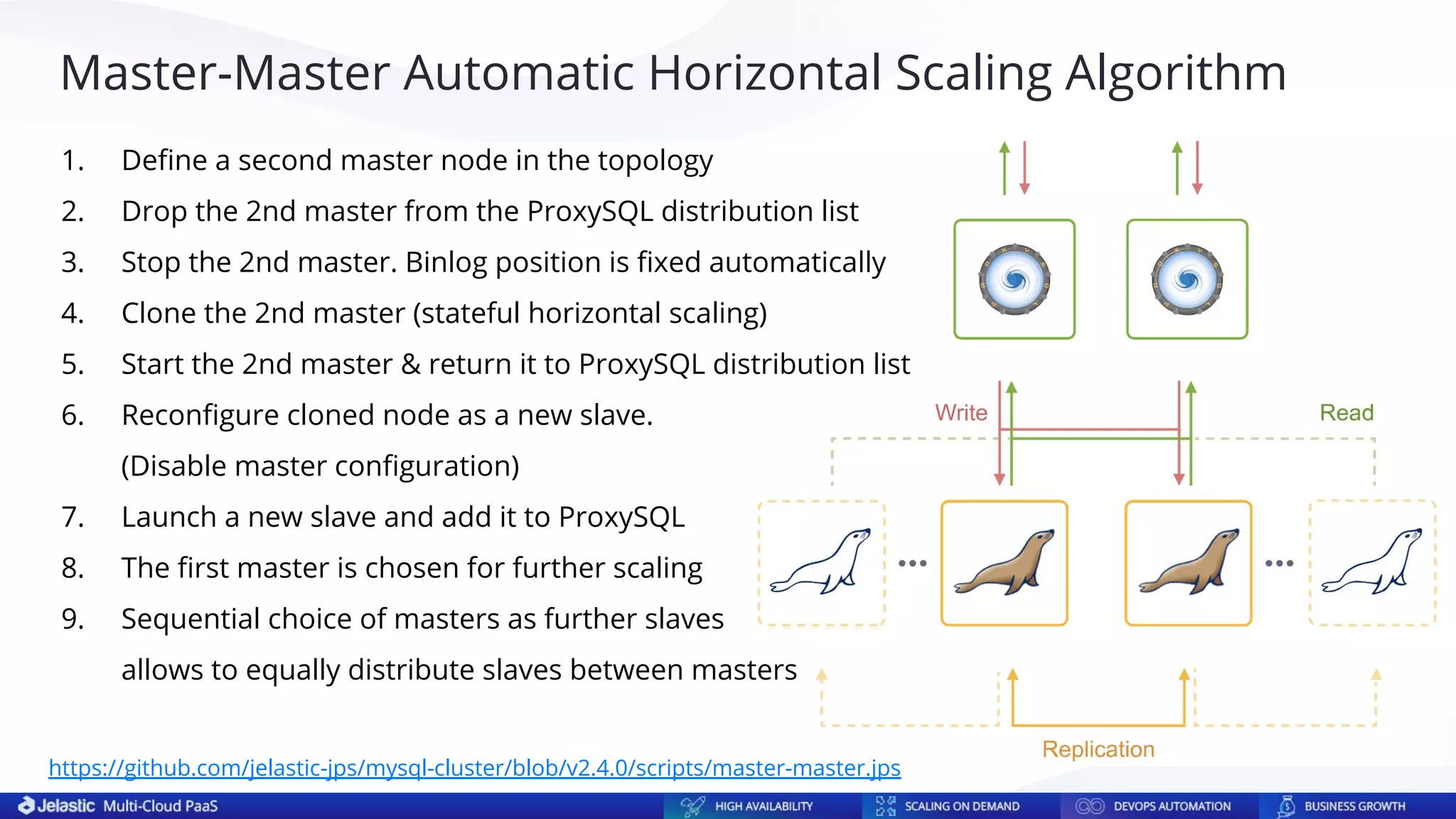

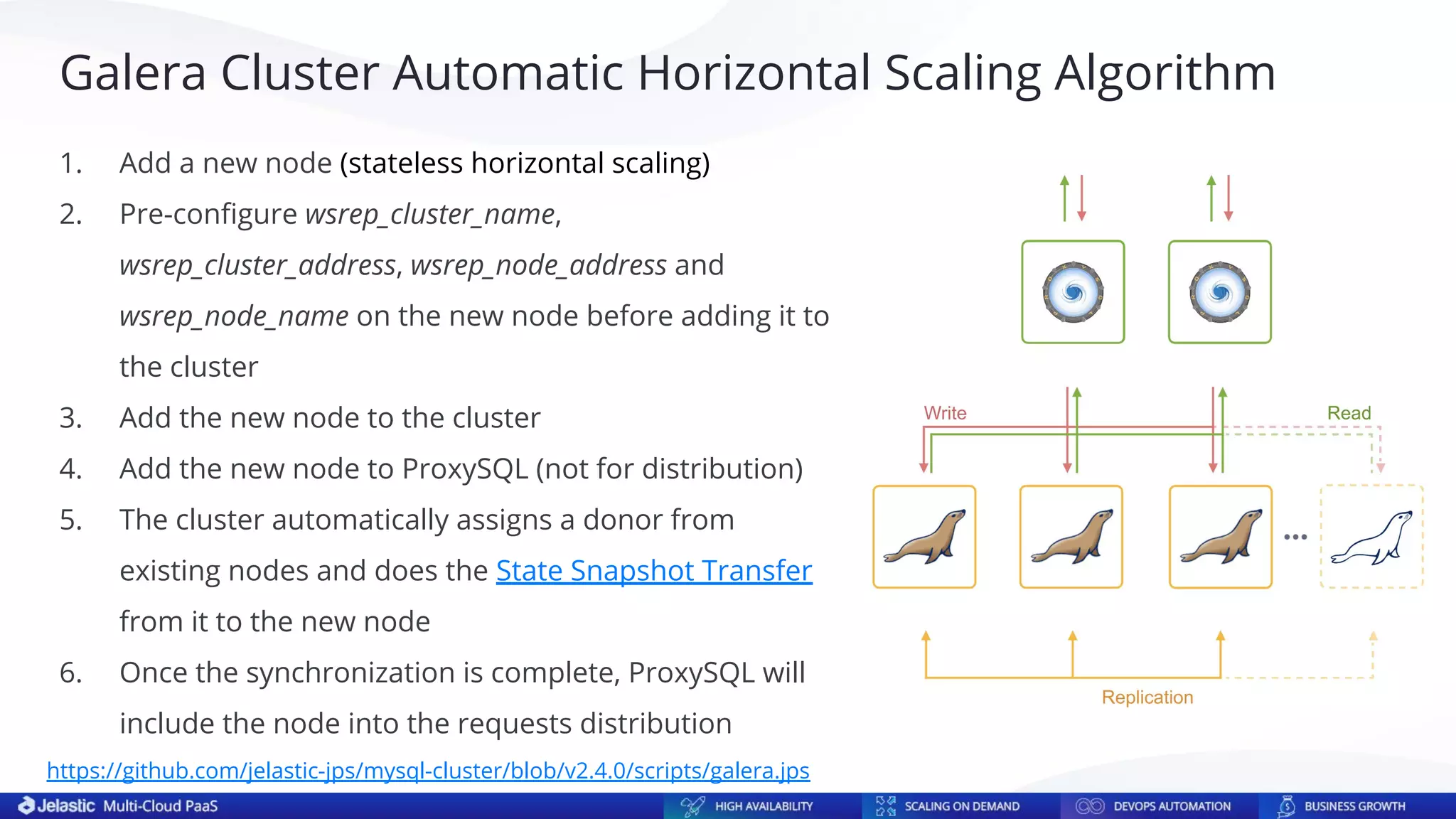

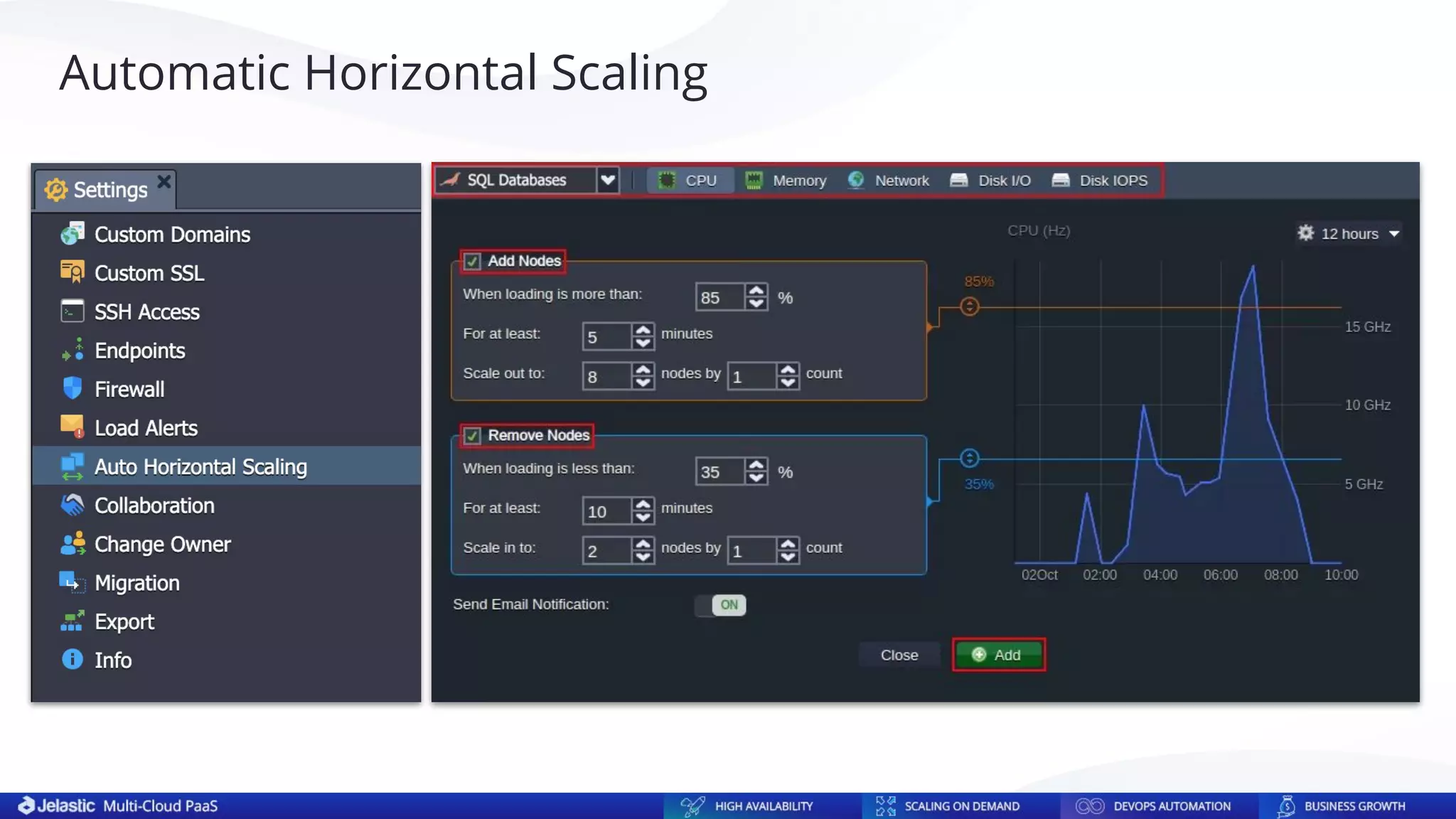

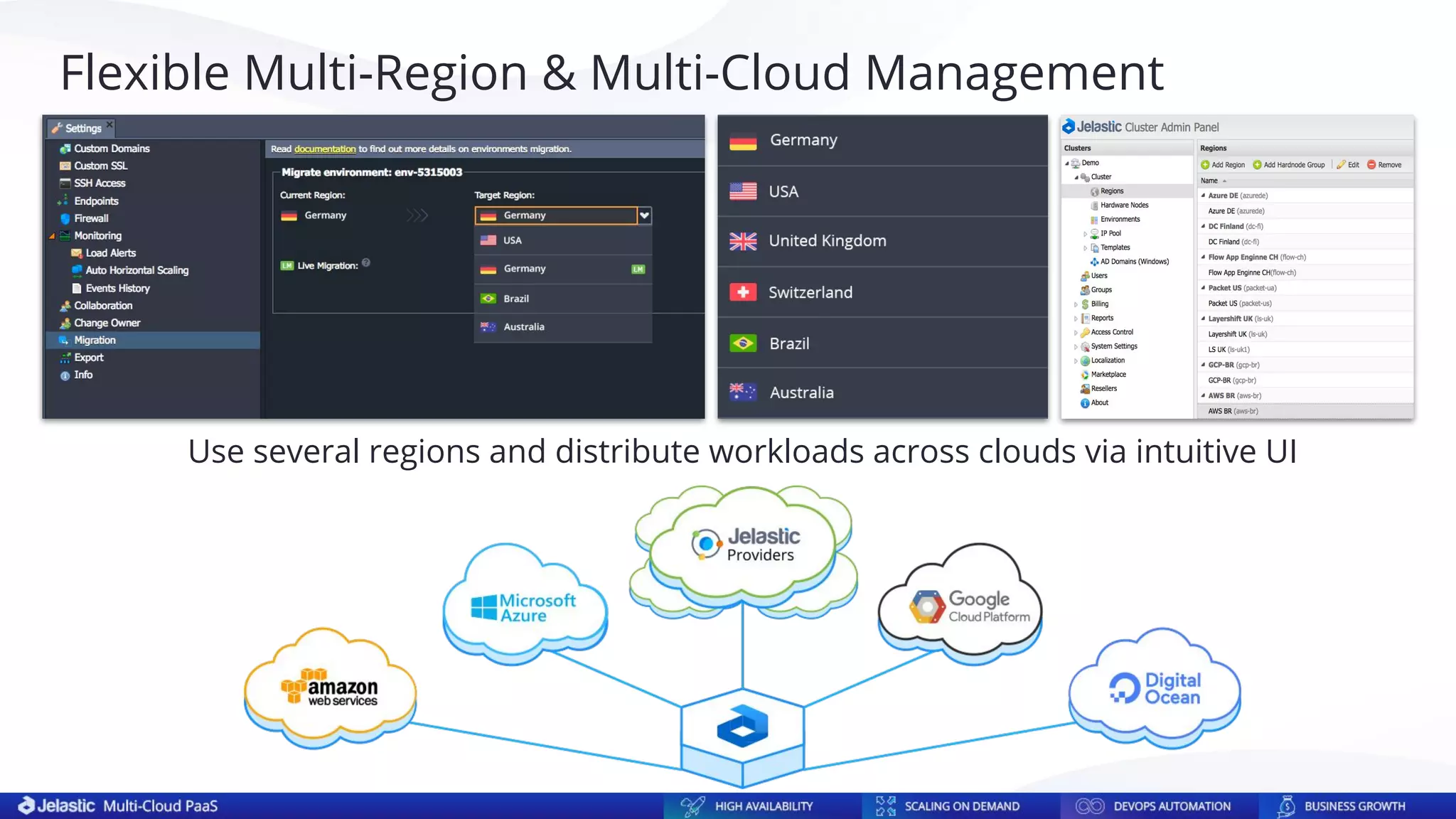

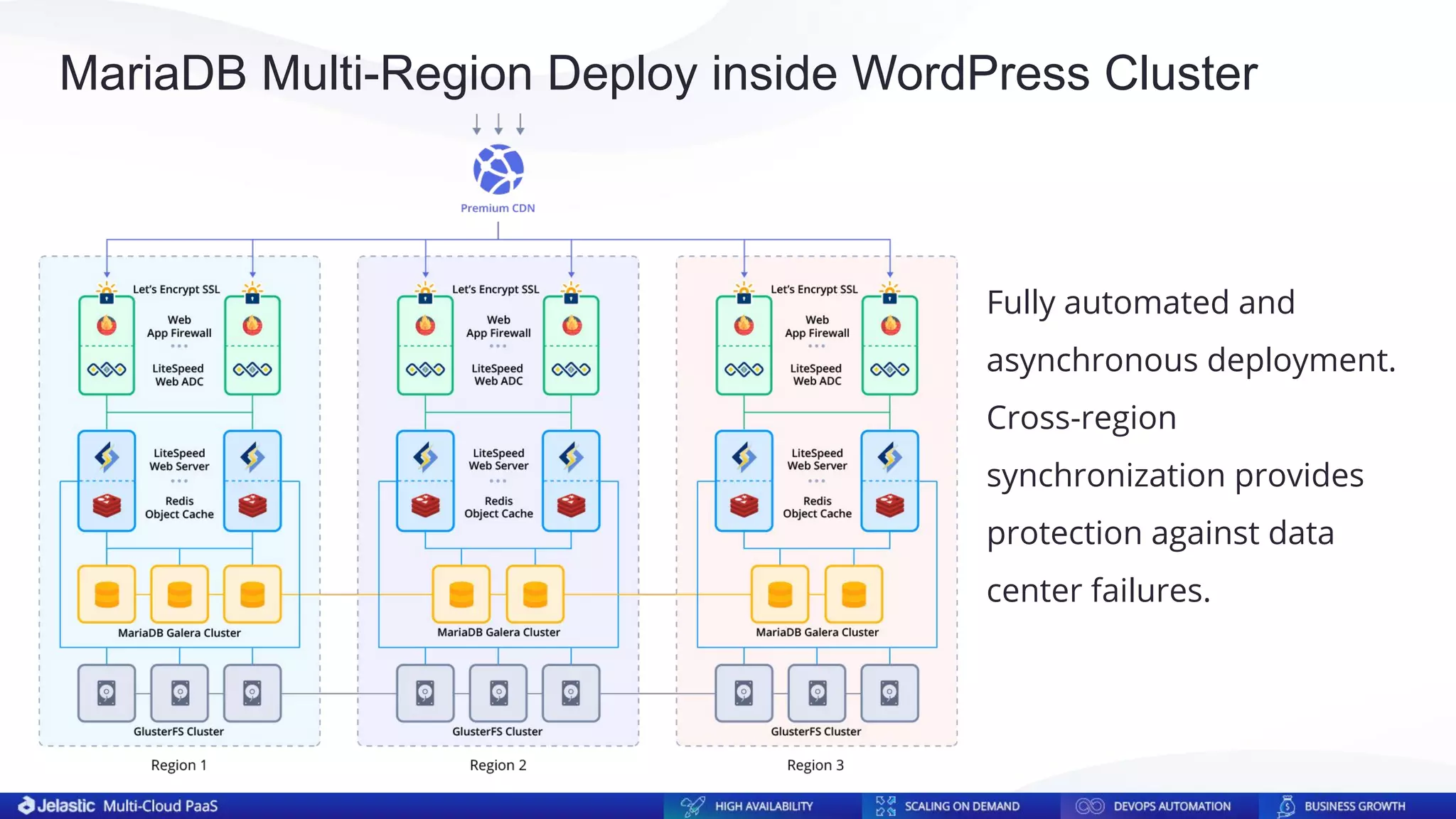

The document covers the features and configuration of MariaDB-as-a-Service in the Jelastic PaaS environment, focusing on auto-clustering, scalability, and efficient management. It contrasts the complexity of manual cluster setup with the convenience of built-in clustering schemes such as master-slave and Galera, and describes automatic horizontal scaling algorithms. It also stresses protecting data against downtime through multi-region and multi-cloud management.