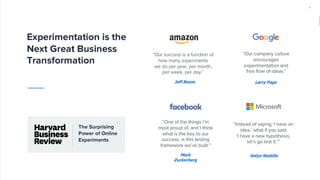

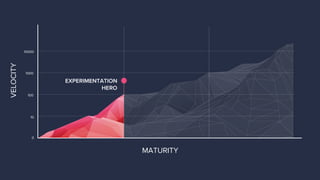

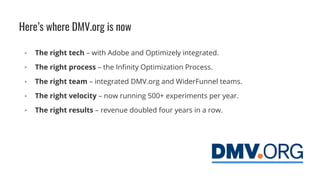

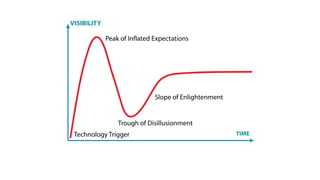

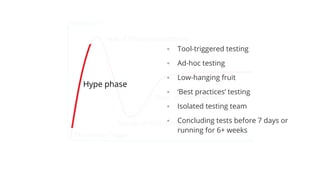

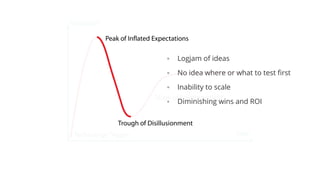

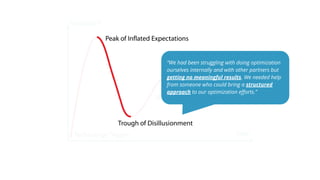

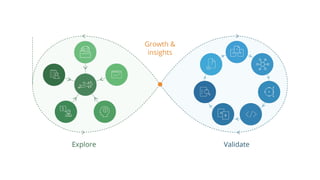

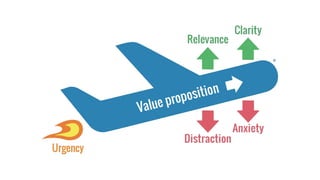

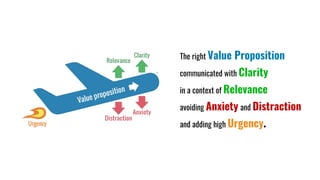

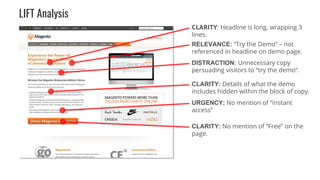

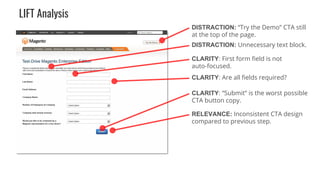

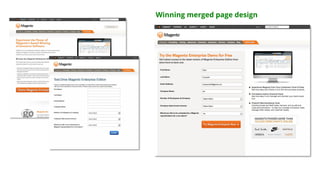

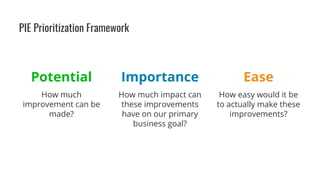

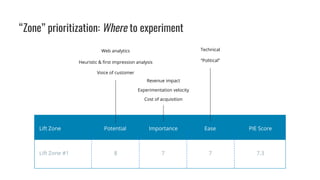

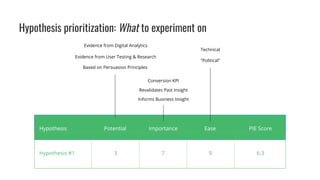

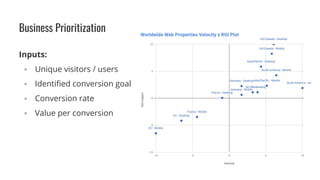

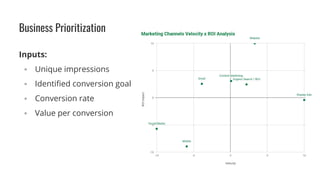

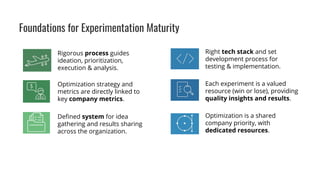

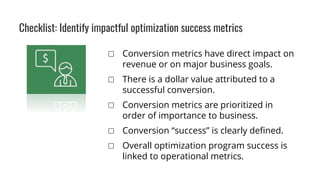

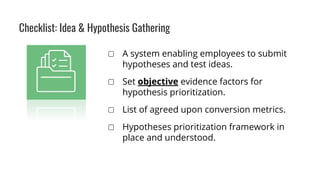

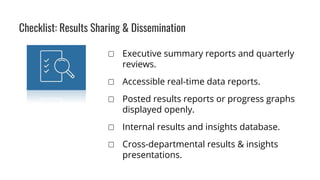

The document discusses IBM's evolution toward a culture of experimentation, highlighting a case study of a client's journey from minimal optimization to a robust experimentation program that ran over 500 experiments a year and doubled revenue for four consecutive years. It outlines the importance of structured approaches, the right technology, and prioritization frameworks in reaching experimentation maturity. Key elements include integrating teams, defining metrics, and keeping testing practices clear and relevant.