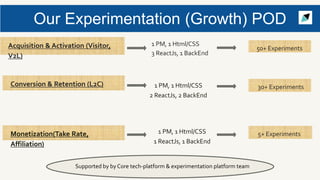

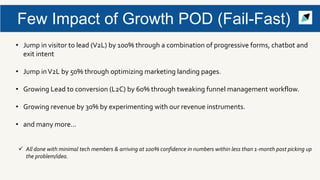

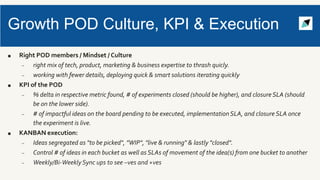

The document draws on Prabhat Gupta's experience leading growth at TravelTriangle through hypothesis-driven development and experimentation. It covers why an experimentation culture matters, the process for running experiments, and case studies showing significant improvements in key metrics such as visitor conversion and revenue. It also stresses prioritizing ideas effectively, building robust data infrastructure, and learning from both successful and failed experiments.
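The summary mentions prioritizing ideas but does not say which scheme TravelTriangle used. One widely used scheme is ICE scoring (Impact, Confidence, Ease), shown below as a minimal sketch under that assumption. The `Idea` class, the 1 to 10 scales, the use of the mean rather than the product, and the example backlog entries are all hypothetical illustrations, not the deck's method.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    """Hypothetical experiment idea, each dimension scored 1-10 (assumed scale)."""
    name: str
    impact: int      # expected effect on the target metric
    confidence: int  # how strongly data or intuition supports the hypothesis
    ease: int        # how cheap the experiment is to build and run

    @property
    def ice_score(self) -> float:
        # Assumption: ICE as the mean of the three scores (some teams multiply them).
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas: list[Idea]) -> list[Idea]:
    """Return ideas sorted from highest to lowest ICE score."""
    return sorted(ideas, key=lambda idea: idea.ice_score, reverse=True)


if __name__ == "__main__":
    # Hypothetical backlog for illustration only.
    backlog = [
        Idea("Change form CTA color", impact=4, confidence=6, ease=9),
        Idea("Redesign lead form flow", impact=8, confidence=5, ease=3),
        Idea("Add social proof near form", impact=6, confidence=7, ease=7),
    ]
    for idea in prioritize(backlog):
        print(f"{idea.name}: {idea.ice_score:.2f}")
```

Averaging keeps scores on the same 1 to 10 scale as the inputs; multiplying instead penalizes any idea that scores poorly on a single dimension more heavily.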

![Initial hypothesis needs work. Based on data: past learnings, user behavior, global standards. Based on intuition: personal learnings, user understanding.](https://image.slidesharecdn.com/hddhowtofail-fastpublic-200907093655/85/Hypothesis-Driven-Development-How-to-Fail-Fast-Hacking-Growth-4-320.jpg)

![Experiment 5 (Kerala): testing variations of form color and CTA color on the form, Original](https://image.slidesharecdn.com/hddhowtofail-fastpublic-200907093655/85/Hypothesis-Driven-Development-How-to-Fail-Fast-Hacking-Growth-17-320.jpg)

![Experiment 5 (Kerala): testing variations of form color and CTA color on the form, Variation 2](https://image.slidesharecdn.com/hddhowtofail-fastpublic-200907093655/85/Hypothesis-Driven-Development-How-to-Fail-Fast-Hacking-Growth-18-320.jpg)

![Experiment 5 (Kerala): testing variations of form color and CTA color on the form, Variation 4](https://image.slidesharecdn.com/hddhowtofail-fastpublic-200907093655/85/Hypothesis-Driven-Development-How-to-Fail-Fast-Hacking-Growth-19-320.jpg)

![Experiment 5 (Kerala): testing variations of form color and CTA color on the form, Variation 3](https://image.slidesharecdn.com/hddhowtofail-fastpublic-200907093655/85/Hypothesis-Driven-Development-How-to-Fail-Fast-Hacking-Growth-20-320.jpg)
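Experiment 5 compares an original form against several color variations. These slides do not include the result numbers, so the following is only a sketch of how such an A/B/n test could be read. It assumes a two-sided pooled two-proportion z-test of each variation against the original, with a Bonferroni-adjusted threshold because all variations share one control. The function names and the counts in the usage block are hypothetical placeholders, not TravelTriangle data.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided pooled z-test for a difference between two conversion rates.

    Returns (absolute_lift, z, p_value), where absolute_lift = rate_b - rate_a.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both arms converted at 0% or 100%: no evidence of a difference.
        return rate_b - rate_a, 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_b - rate_a, z, p_value


def compare_to_control(control, variations, alpha=0.05):
    """Test each variation against the control (original form).

    control and each value in variations are (conversions, visitors) tuples.
    alpha is Bonferroni-adjusted because every variation shares one control.
    """
    adjusted_alpha = alpha / len(variations)
    results = {}
    for name, (conv, n) in variations.items():
        lift, z, p = two_proportion_z_test(control[0], control[1], conv, n)
        results[name] = {
            "lift": lift,
            "z": z,
            "p_value": p,
            "significant": p < adjusted_alpha,
        }
    return results


if __name__ == "__main__":
    # Hypothetical (conversions, visitors) counts for illustration only;
    # they are NOT results from the Kerala experiment.
    original = (100, 2000)
    variations = {
        "Variation 2": (140, 2000),
        "Variation 3": (105, 2000),
        "Variation 4": (90, 2000),
    }
    for name, r in compare_to_control(original, variations).items():
        print(f"{name}: lift={r['lift']:+.4f} z={r['z']:.2f} "
              f"p={r['p_value']:.4f} significant={r['significant']}")
```

Bonferroni is a conservative choice. With three variations, each one must reach p < 0.05 / 3 before it counts as a win. Failing to clear that bar is still a learning, in the fail-fast spirit of the deck.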