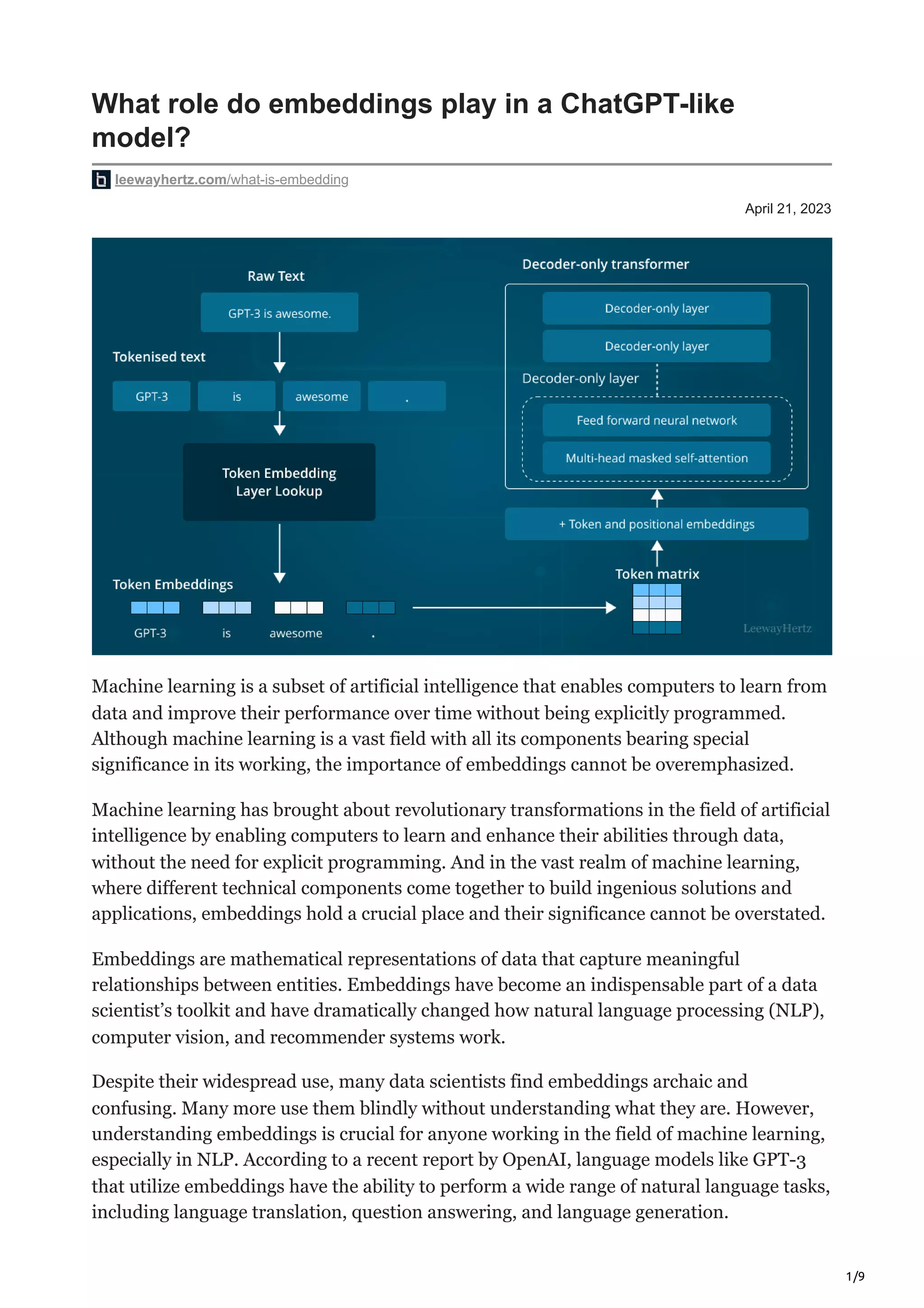

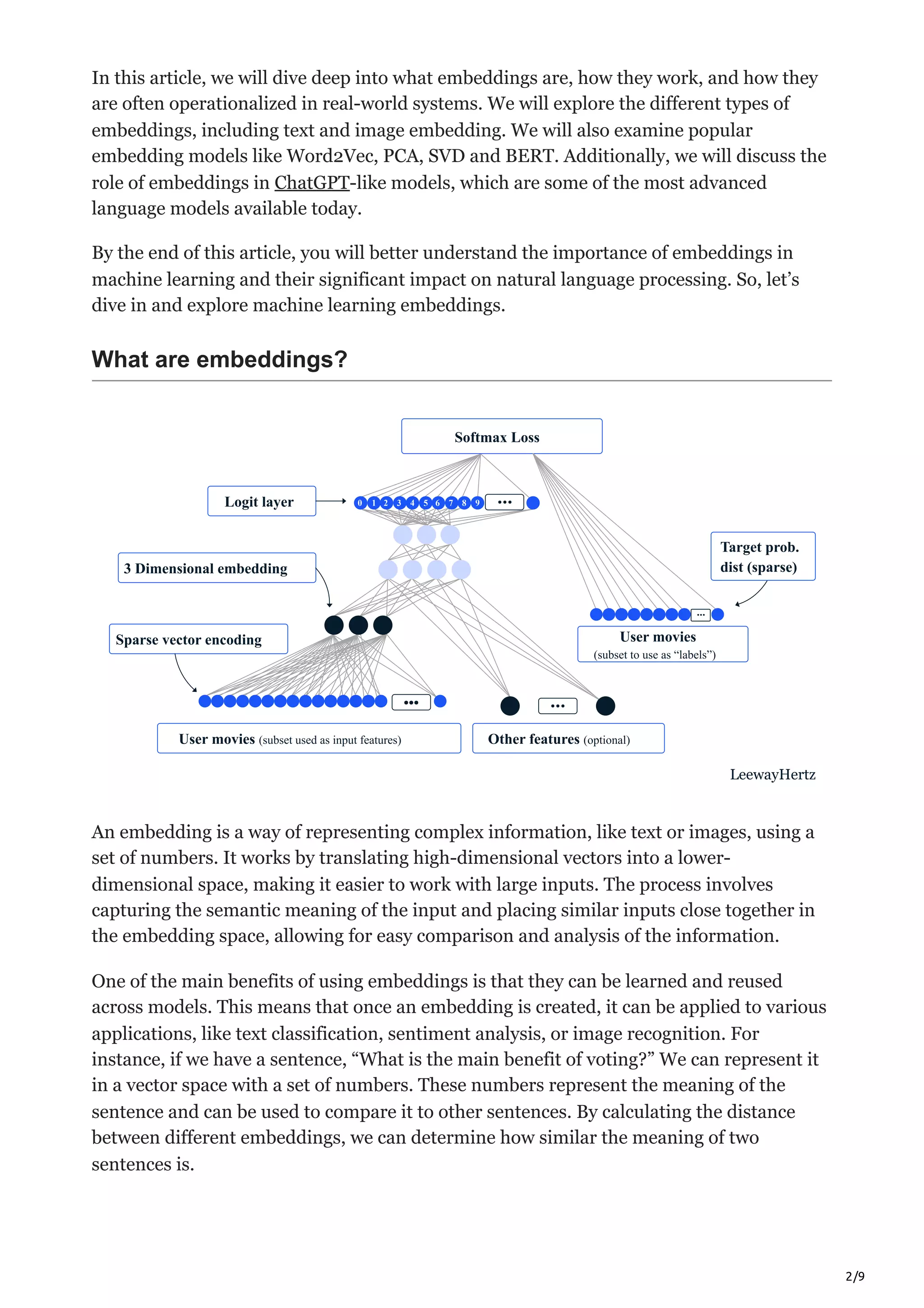

Embeddings play a central role in language models such as ChatGPT: they map complex inputs like text to dense, lower-dimensional vectors that are easier to analyze. Because these vectors capture relationships between entities, similar inputs end up close together and can be grouped. Embeddings are widely used in natural language processing, computer vision, and recommender systems. Popular techniques include Word2Vec, SVD, and BERT, which produce embeddings in different ways: Word2Vec learns from the contexts in which words co-occur, SVD factorizes a matrix such as word co-occurrence counts or user-item ratings, and BERT is trained bidirectionally, using the context on both sides of each word.
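
To make the idea of "similar inputs grouped together" concrete, here is a minimal sketch using cosine similarity, a common way to compare embedding vectors. The three-dimensional vectors and the helper names `cosine_similarity` and `nearest` are illustrative assumptions, not output from Word2Vec, BERT, or any other real model, which typically learn hundreds of dimensions from data.

```python
import math

# Hand-picked toy embeddings (an assumption for illustration only);
# real models learn these vectors from large amounts of data.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, embeddings=EMBEDDINGS):
    """Return the other word whose embedding is most similar to `word`."""
    query = embeddings[word]
    candidates = (w for w in embeddings if w != word)
    return max(candidates, key=lambda w: cosine_similarity(query, embeddings[w]))


if __name__ == "__main__":
    for word in EMBEDDINGS:
        print(word, "->", nearest(word))
```

With these toy vectors, "cat" and "dog" point in nearly the same direction while "car" points elsewhere, so a similarity search pairs the two animals together. The same comparison applies to vectors produced by a trained model.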