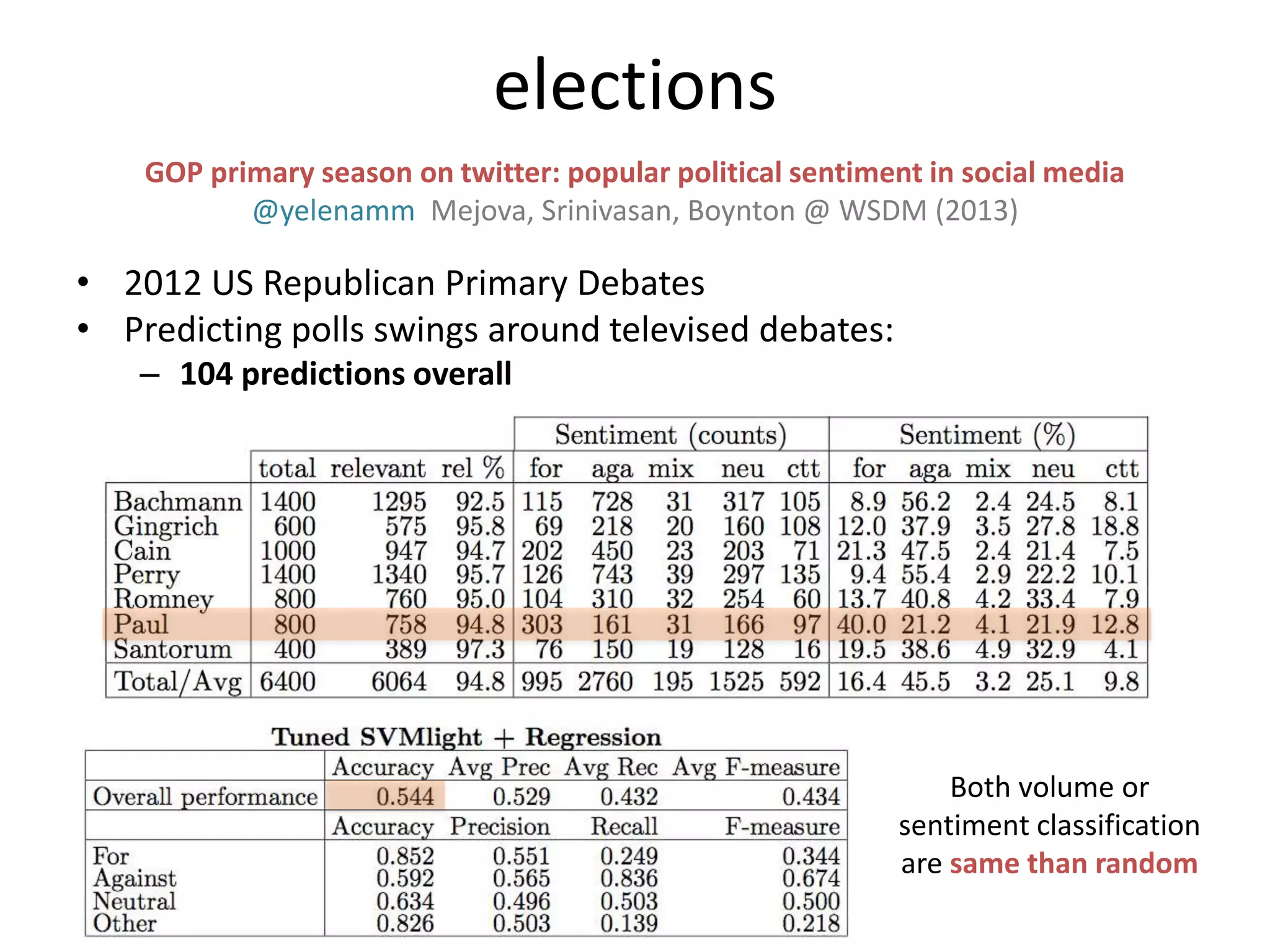

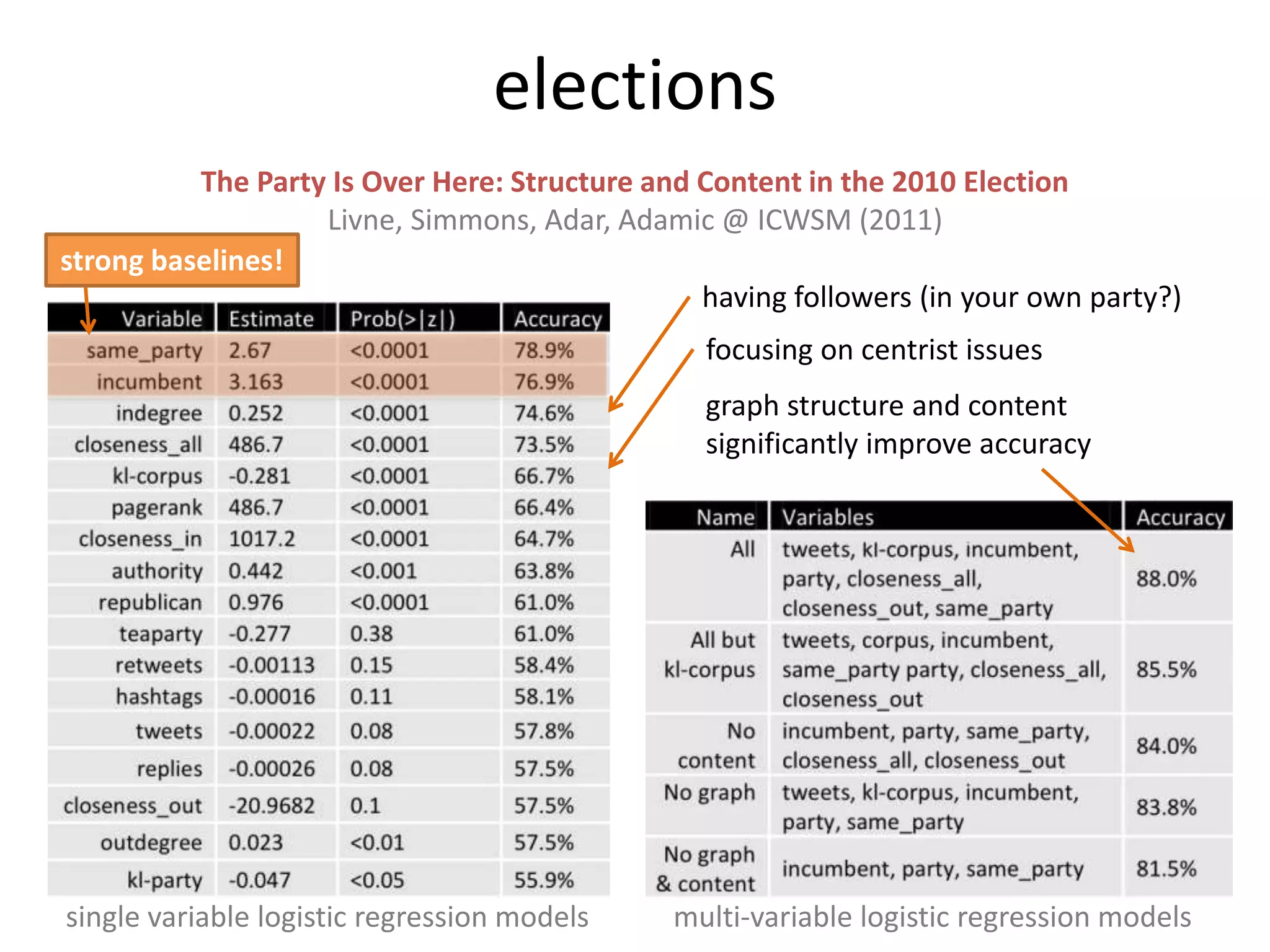

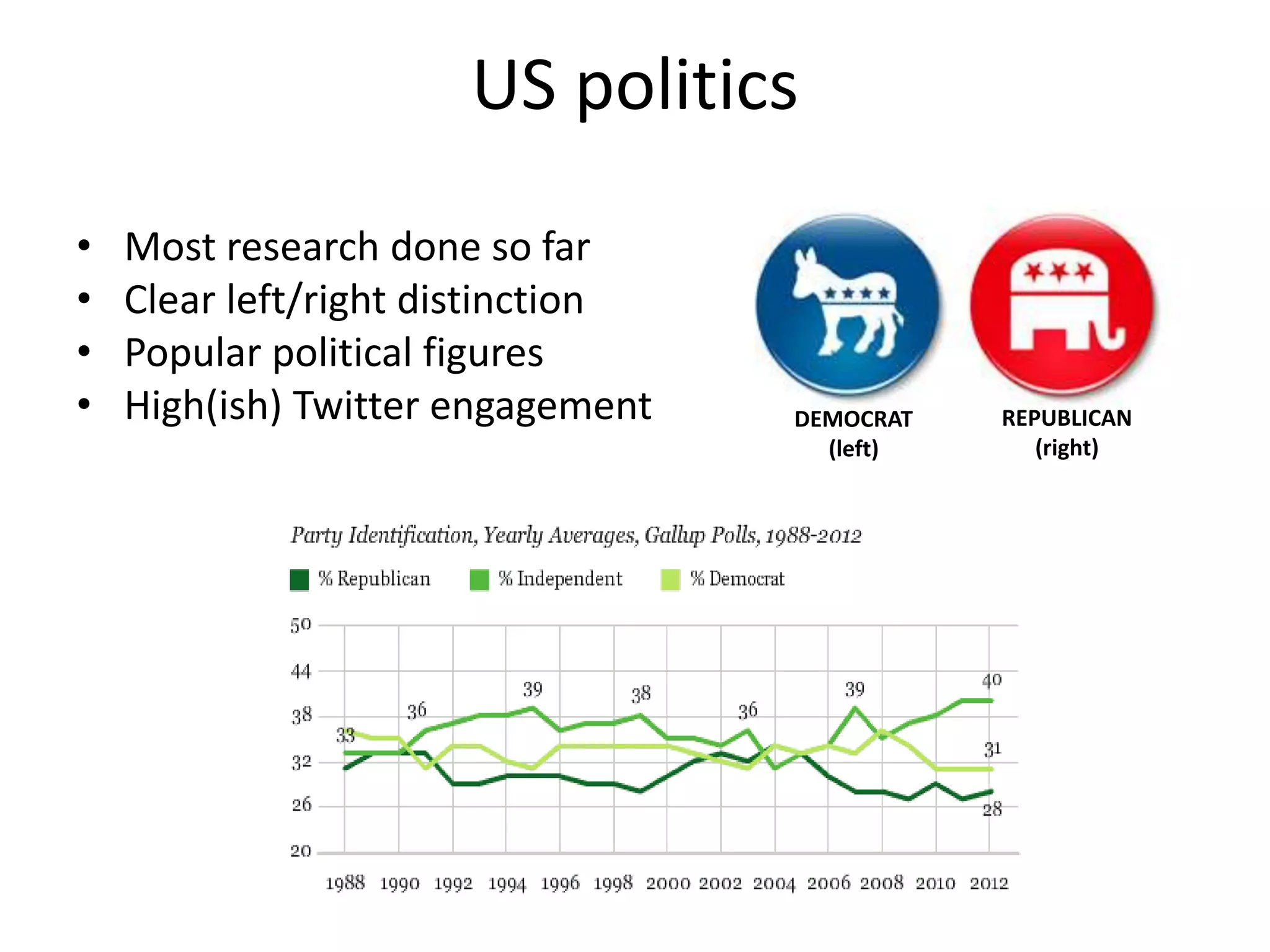

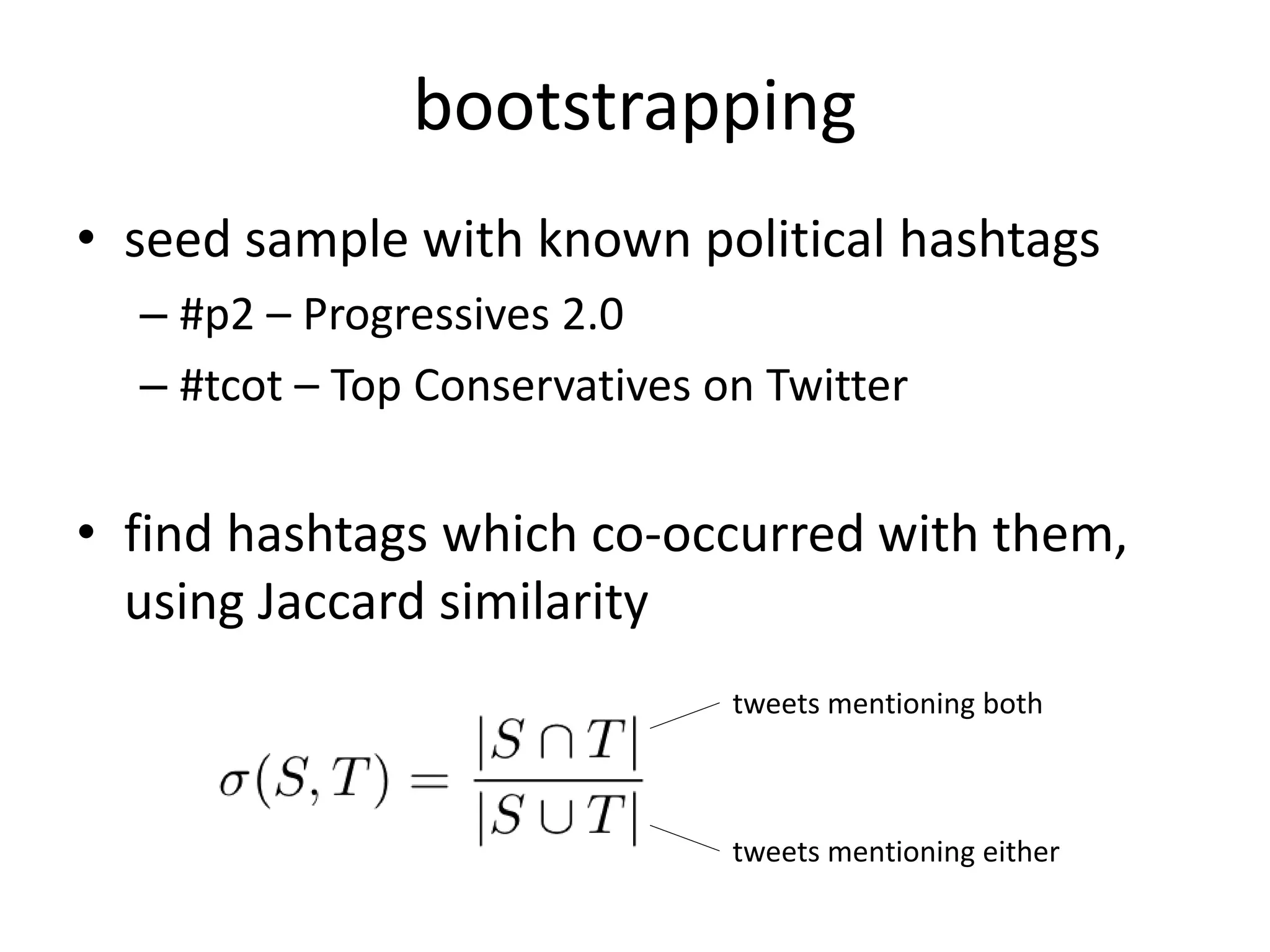

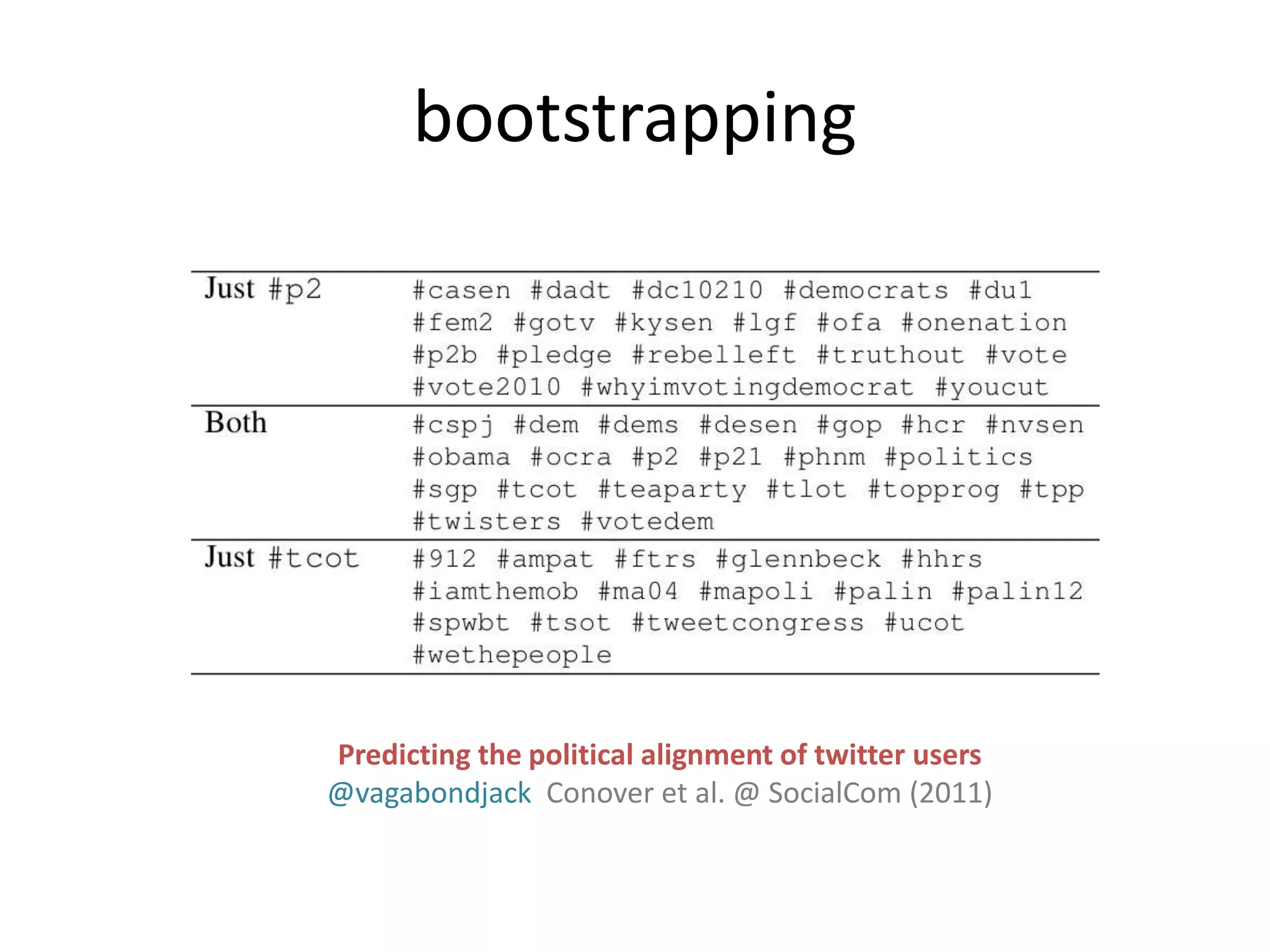

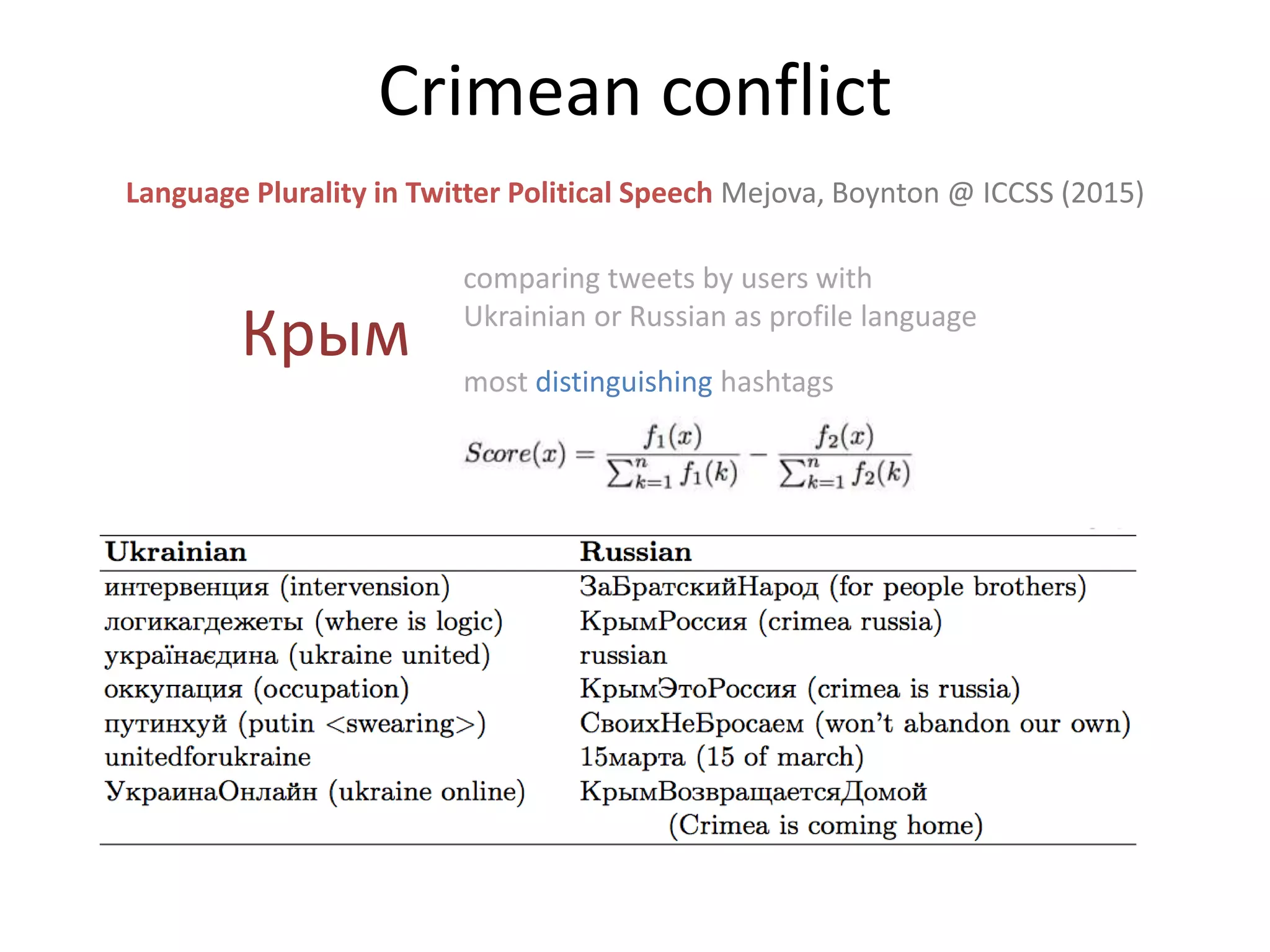

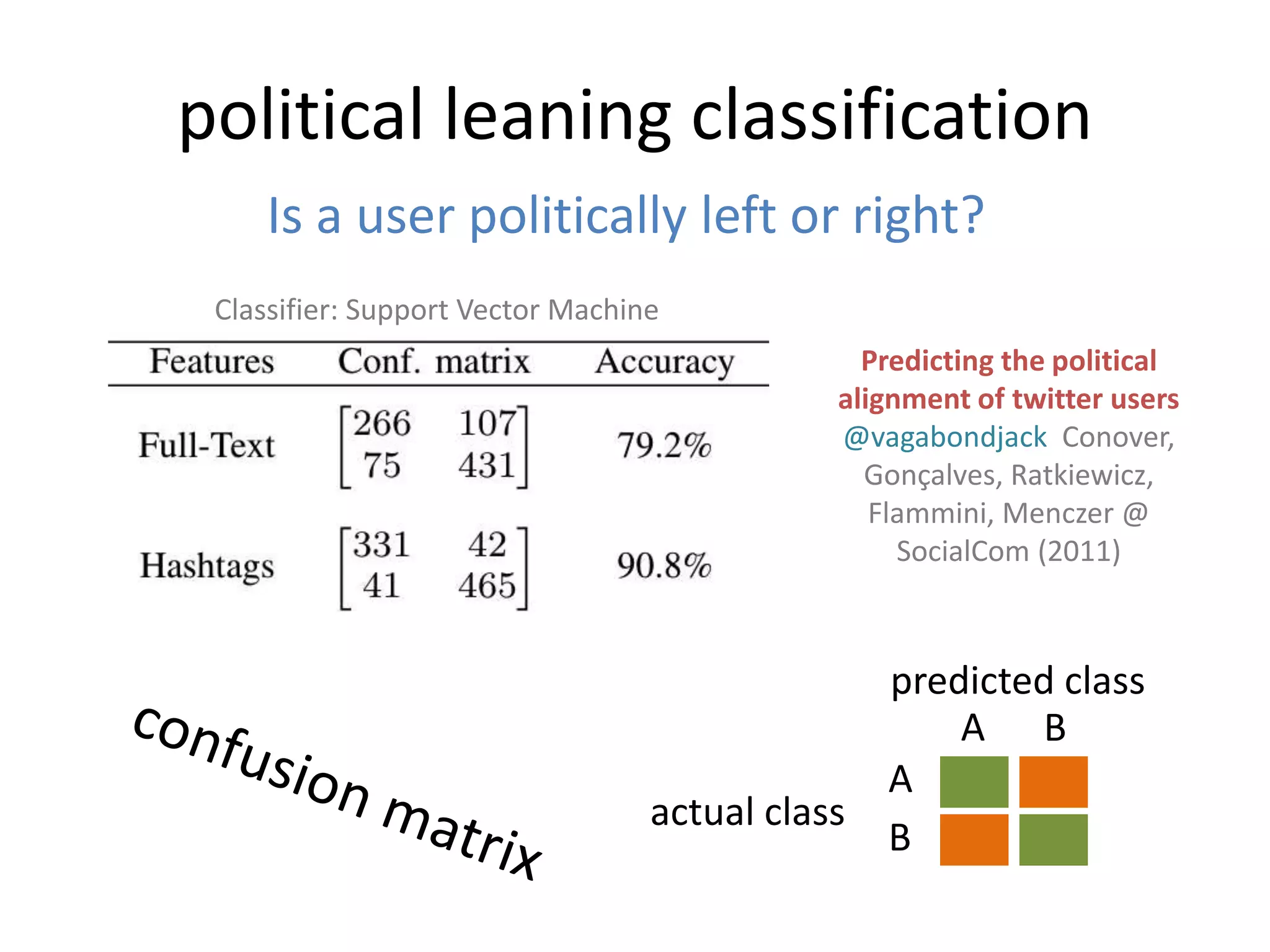

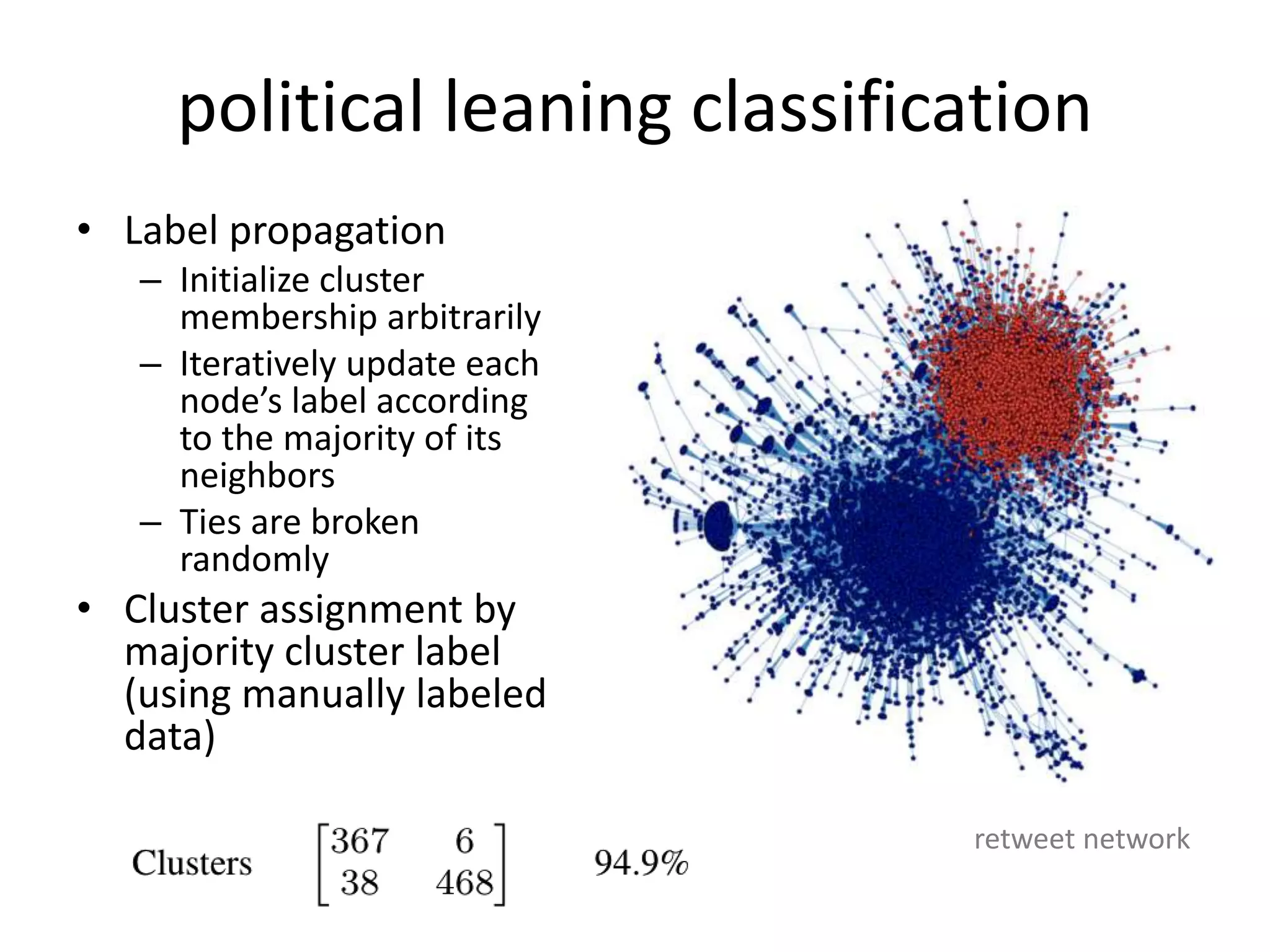

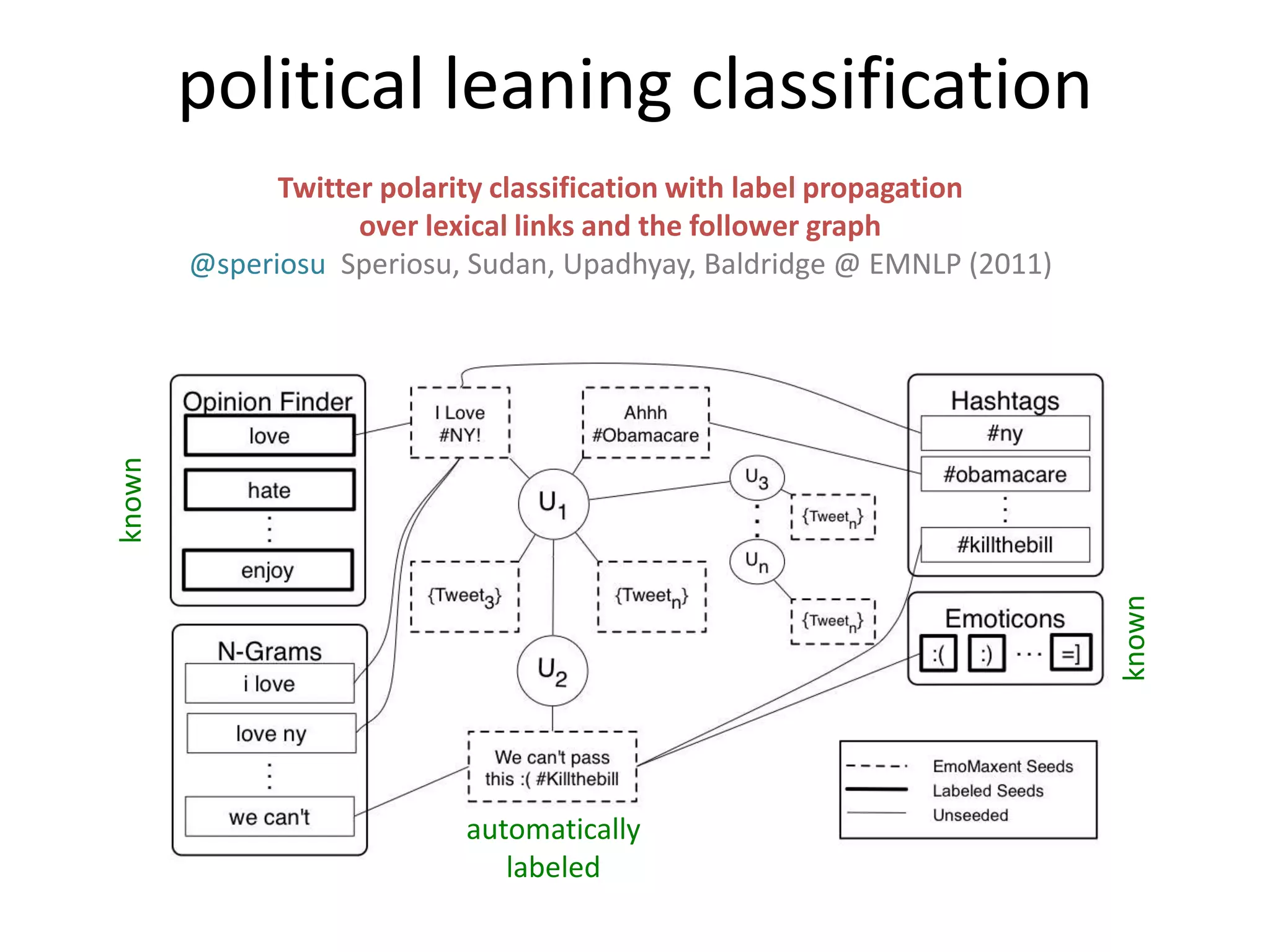

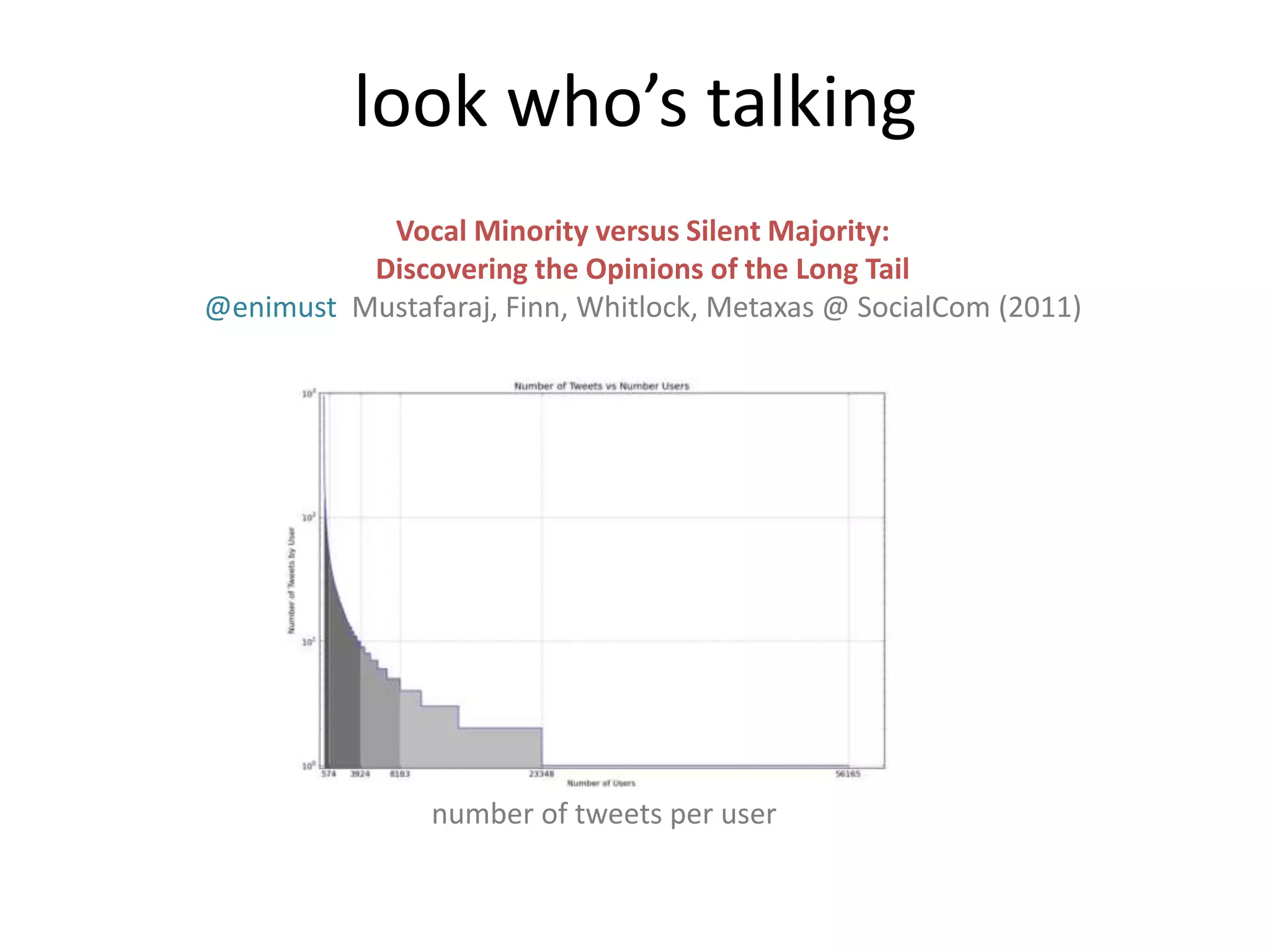

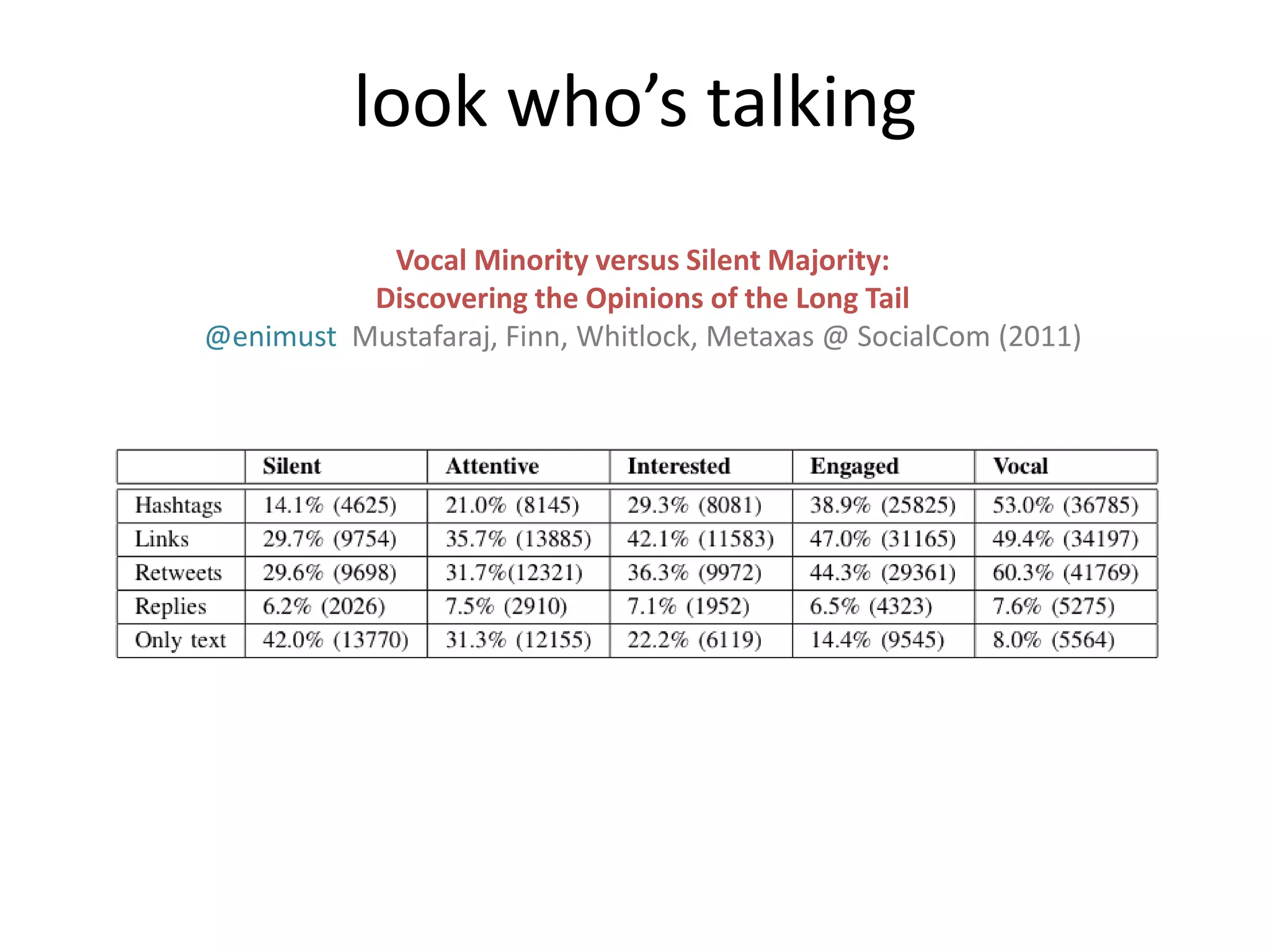

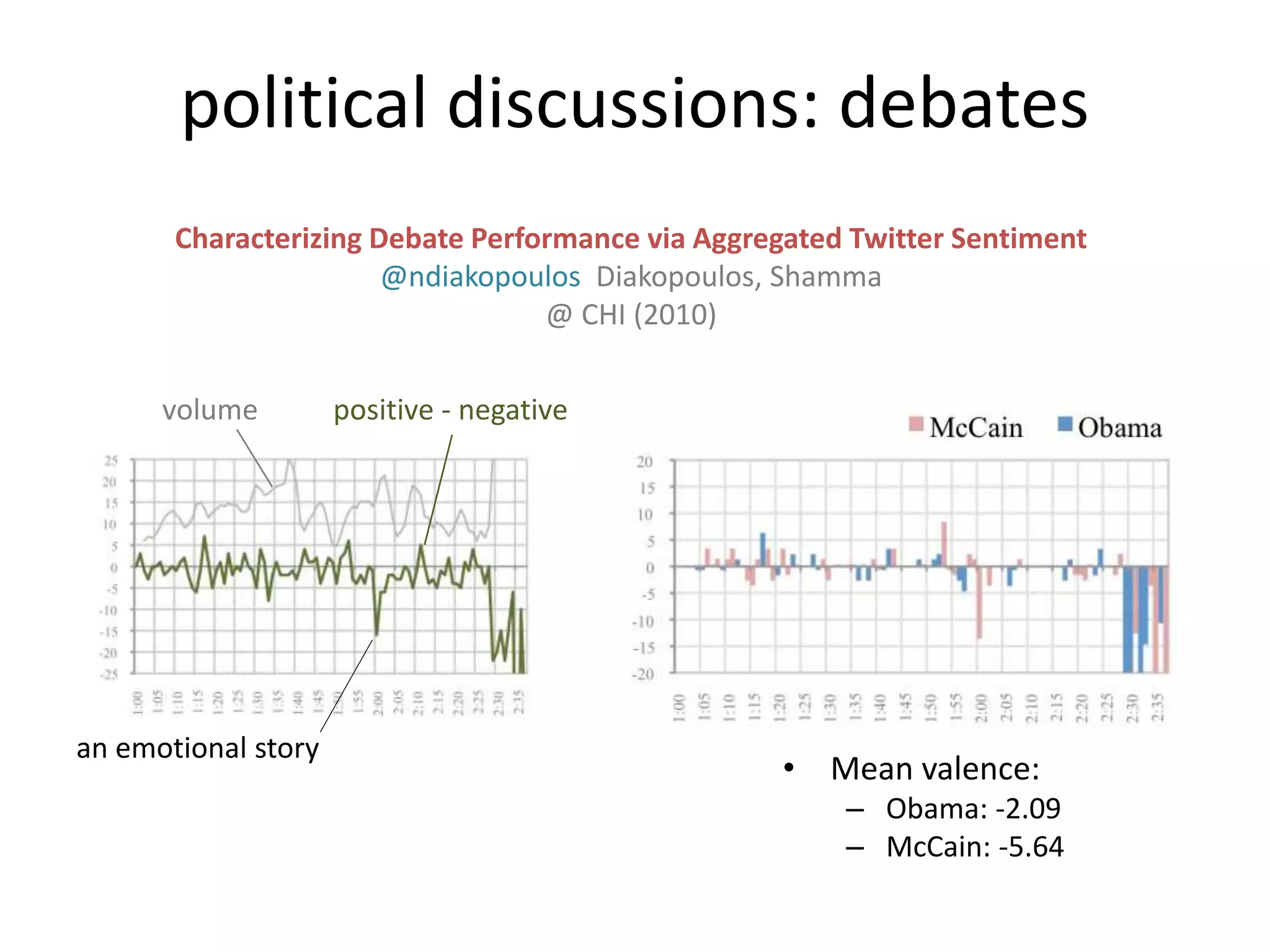

This document provides an overview of research on analyzing political language on Twitter. It discusses sampling political tweets, classifying users' political leanings through text analysis, networks, and crowdsourcing, and predicting election outcomes. It also covers analyzing sentiment around debates, distinguishing vocal from silent users, detecting misinformation, and predicting primary results. The document aims to introduce key studies in this area and highlight challenges in using Twitter for political prediction.

![Slide: got your #tag! Political hashtag hijacking in the US](https://image.slidesharecdn.com/03analysis-150621072448-lva1-app6891/75/Language-of-Politics-on-Twitter-03-Analysis-9-2048.jpg)

got your #tag!

Political hashtag hijacking in the US. Hadgu, Garimella, Weber @ WWW (2013)

hashtag, week, party

aggregated user volume for (h,w)

aggregated user volume for (*,w)

• Given set of users with known leaning:

[some figures from authors’ original slides]

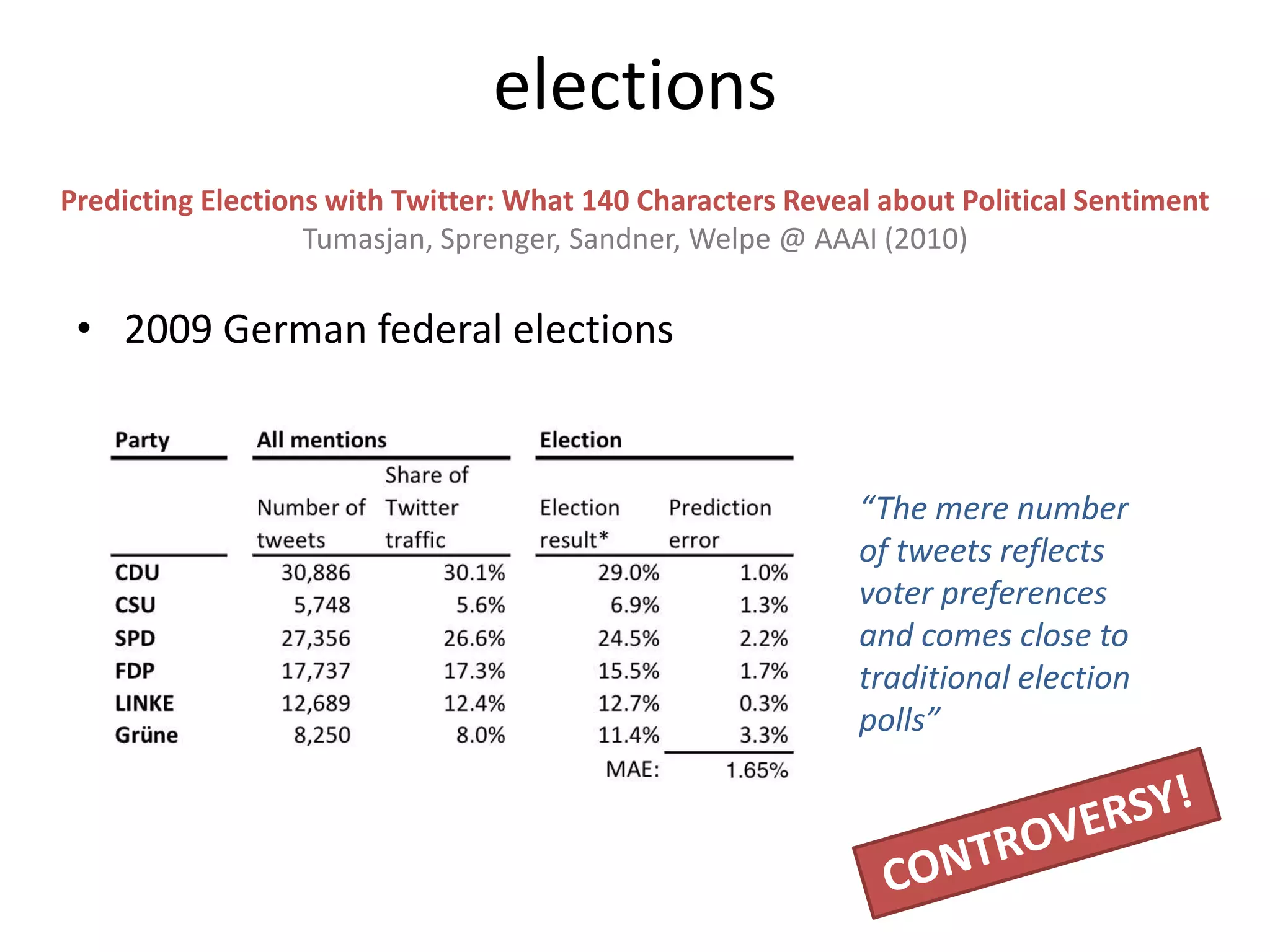

![Slide: elections. Why the Pirate Party won the German election of 2009](https://image.slidesharecdn.com/03analysis-150621072448-lva1-app6891/75/Language-of-Politics-on-Twitter-03-Analysis-53-2048.jpg)

elections

Why the Pirate Party won the German election of 2009 or the trouble with predictions: A response to Tumasjan, Sprenger, Sander, & Welpe, "Predicting elections with twitter: What 140 characters reveal about political sentiment"

@ajungherr Jungherr, Jürgens, Schoen @ SSCR V30/N2 (2012)

“arbitrary choices”

• Choice of Parties: If results of polls played a role in deciding upon the inclusion of particular parties, the TSSW method is dependent on public opinion surveys

• Choice of Dates: prediction analysis […] between [13.9] and [27.9], the day of the election, produces a MAE of 2.13, significantly higher than the MAE for TSSW
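The MAE quoted on this slide is a mean absolute error between predicted and actual vote shares. The following is a minimal sketch of that calculation, assuming each party's share of Twitter mentions is used as its predicted vote share. The function name, party labels, and all numbers are hypothetical placeholders, not data from either paper.

```python
def mean_absolute_error(predicted, actual):
    """Average absolute gap, in percentage points, across all parties."""
    if predicted.keys() != actual.keys():
        raise ValueError("predicted and actual must cover the same parties")
    return sum(abs(predicted[p] - actual[p]) for p in actual) / len(actual)


# Hypothetical shares in percent (illustrative only, not real election data).
mention_share = {"party_a": 40.0, "party_b": 35.0, "party_c": 25.0}
vote_share = {"party_a": 42.0, "party_b": 31.0, "party_c": 27.0}

mae = mean_absolute_error(mention_share, vote_share)
print(f"MAE: {mae:.2f} percentage points")
```

Because the average runs only over the parties and the time window that the analyst chooses to include, changing either one changes the resulting MAE, which is the sensitivity the slide labels “arbitrary choices”.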