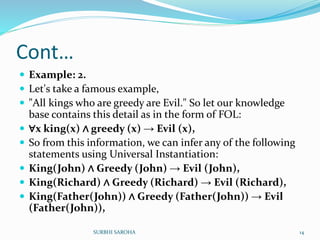

The document outlines key concepts in artificial intelligence, focusing on propositional logic, first-order logic, and various inference methods such as forward and backward chaining. It also discusses probabilistic reasoning and Bayesian networks, providing a framework for knowledge representation and uncertainty handling in AI. Additionally, it covers fundamental inference rules and resolution techniques used to prove logical statements.

![Inference in First order logic

Inference in First-Order Logic is used to deduce new facts

or sentences from existing sentences.

Before understanding the FOL inference rule, let's

understand some basic terminologies used in FOL.

Substitution:

Substitution is a fundamental operation performed on

terms and formulas.

It occurs in all inference systems in first-order logic.

The substitution is complex in the presence of quantifiers

in FOL.

If we write F[a/x], so it refers to substitute a constant "a" in

place of variable "x".

9SURBHI SAROHA](https://image.slidesharecdn.com/knowledgerepresentationreasoningai3-201022053041/85/Knowledge-Representation-Reasoning-AI-UNIT-3-9-320.jpg)