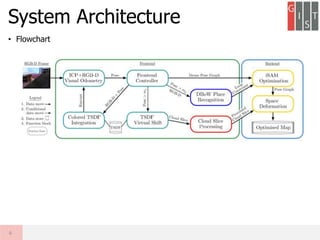

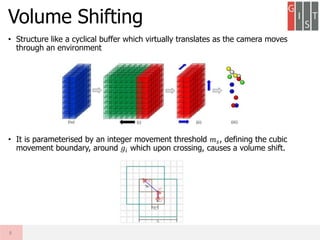

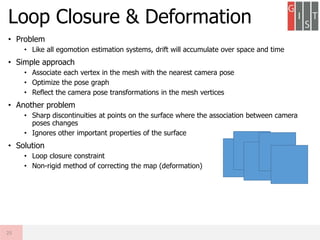

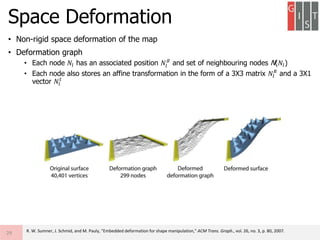

Kintinuous ("Real-time large-scale dense RGB-D SLAM with volumetric fusion") extends KinectFusion from a single fixed volume to large-scale environments. It represents the volumetric reconstruction as a rolling buffer that translates as the camera moves. Camera pose is estimated from combined geometric and photometric constraints. Loops are closed by non-rigidly deforming the map using constraints from loop closures, with the camera poses and the map optimised jointly. The evaluation shows that the system produces large, globally consistent dense reconstructions in real time.
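To make the rolling-buffer idea concrete, here is a minimal Python sketch of when, and by how much, the volume might be translated. It assumes a cubic volume that moves in whole-voxel steps once the camera is more than a fixed distance from the volume centre. `RollingVolume`, `maybe_shift`, `voxel_size`, `dims` and `shift_threshold` are hypothetical names. The sketch leaves out the TSDF data itself and the extraction of the surface that leaves the volume.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class RollingVolume:
    """Hypothetical rolling TSDF buffer; only the volume's placement is modelled."""
    voxel_size: float       # metres per voxel (assumed parameter)
    dims: int               # voxels per side of a cubic volume (assumed parameter)
    shift_threshold: float  # metres the camera may drift from the centre before a shift (assumed)
    origin: List[int] = field(default_factory=lambda: [0, 0, 0])  # world offset in whole voxels

    def centre(self) -> List[float]:
        """World-space centre of the volume."""
        half = self.dims * self.voxel_size / 2.0
        return [o * self.voxel_size + half for o in self.origin]

    def maybe_shift(self, camera_pos: List[float]) -> List[int]:
        """Translate the volume by whole voxels if the camera strays too far from its centre.

        Returns the per-axis voxel shift applied ([0, 0, 0] if none). Flooring means that
        after a shift the camera lies within one voxel of the new centre along each axis.
        A full system would also extract and clear the slab of voxels that leaves the
        volume; that step is omitted here.
        """
        c = self.centre()
        if math.dist(camera_pos, c) <= self.shift_threshold:
            return [0, 0, 0]
        shift = [math.floor((p - cc) / self.voxel_size) for p, cc in zip(camera_pos, c)]
        self.origin = [o + s for o, s in zip(self.origin, shift)]
        return shift


if __name__ == "__main__":
    vol = RollingVolume(voxel_size=0.5, dims=8, shift_threshold=1.0)
    print(vol.maybe_shift([3.75, 2.0, 2.0]))  # camera 1.75 m along x from the centre -> [3, 0, 0]
    print(vol.centre())                       # -> [3.5, 2.0, 2.0]
```

Shifting in whole voxels keeps the voxel grid aligned with world coordinates. The buffer can therefore be reindexed instead of resampled.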

![• Pose graph optimisation

• Carried out by the iSAM framework

• Map deformation

• cost functions over the deformation graph

• 1) maximising rigidity in the deformation

• 2) Regularisation term

• 3) Constraint term that minimises the error on a set of user specified vertex position constraints

Q

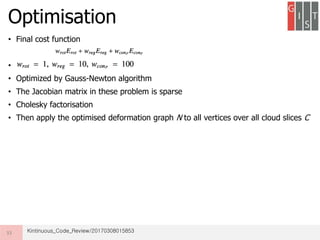

Optimisation

32

Graph node

neighbors

[𝑁𝑙

𝑅

, 𝑁𝑙

𝑡

, 𝑁𝑙

𝑔

]

[𝑁 𝑛

𝑡

, 𝑁 𝑛

𝑔

]](https://image.slidesharecdn.com/kintinuousreview-170521105711/85/Kintinuous-review-26-320.jpg)
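The three deformation-graph cost terms can be written down concretely using the node variables from the slide: $N_l^R, N_l^t, N_l^g$ for a node and $N_n^t, N_n^g$ for a neighbour. Below is a minimal pure-Python sketch that assumes the standard embedded-deformation form of these terms:

- Each node applies the local map $R(v - g) + g + t$.
- The rigidity term is $\|R^\top R - I\|_F^2$.
- The regularisation term penalises disagreement between where node $l$ and neighbour $n$ each send $g_n$.
- The constraint term measures how far deformed vertices land from their target positions.

The helper names, the term weights (1, 10, 100) and the per-vertex influence weights are hypothetical. Only the energy is evaluated here, not the nonlinear least-squares solver that would minimise it.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Vec = Tuple[float, float, float]
Mat = Tuple[Vec, Vec, Vec]  # row-major 3x3 matrix

IDENTITY: Mat = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def sq_norm(a: Vec) -> float:
    return a[0] * a[0] + a[1] * a[1] + a[2] * a[2]


def mat_vec(m: Mat, v: Vec) -> Vec:
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


@dataclass
class Node:
    g: Vec  # N^g: node position
    R: Mat  # N^R: node rotation (a free 3x3 matrix, pushed towards a rotation by the rigidity term)
    t: Vec  # N^t: node translation

    def apply(self, v: Vec) -> Vec:
        """Node-local affine map: R (v - g) + g + t."""
        return add(add(mat_vec(self.R, sub(v, self.g)), self.g), self.t)


def rigidity_term(R: Mat) -> float:
    """||R^T R - I||_F^2, which is zero exactly when R is orthonormal."""
    total = 0.0
    for i in range(3):
        for j in range(3):
            rtr = sum(R[k][i] * R[k][j] for k in range(3))
            total += (rtr - (1.0 if i == j else 0.0)) ** 2
    return total


def regularisation_term(nodes: Sequence[Node], neighbours: Dict[int, List[int]]) -> float:
    """Sum over nodes l and neighbours n of ||R_l (g_n - g_l) + g_l + t_l - (g_n + t_n)||^2."""
    total = 0.0
    for l, nbrs in neighbours.items():
        for n in nbrs:
            predicted = nodes[l].apply(nodes[n].g)  # where node l's transform sends g_n
            actual = add(nodes[n].g, nodes[n].t)    # where node n's own transform sends g_n
            total += sq_norm(sub(predicted, actual))
    return total


def deform(v: Vec, nodes: Sequence[Node], influences: List[Tuple[int, float]]) -> Vec:
    """Blend node-local maps: phi(v) = sum_j w_j (R_j (v - g_j) + g_j + t_j)."""
    out = (0.0, 0.0, 0.0)
    for j, w in influences:
        p = nodes[j].apply(v)
        out = (out[0] + w * p[0], out[1] + w * p[1], out[2] + w * p[2])
    return out


def constraint_term(nodes: Sequence[Node], constraints) -> float:
    """Each constraint is (source vertex v, target q, [(node index, weight), ...])."""
    return sum(sq_norm(sub(deform(v, nodes, infl), q)) for v, q, infl in constraints)


def total_energy(nodes, neighbours, constraints, w_rot=1.0, w_reg=10.0, w_con=100.0) -> float:
    """Weighted sum of the three terms; the default weights are illustrative assumptions."""
    e_rot = sum(rigidity_term(node.R) for node in nodes)
    return (w_rot * e_rot
            + w_reg * regularisation_term(nodes, neighbours)
            + w_con * constraint_term(nodes, constraints))
```

Translating every node by the same $t$ leaves the regularisation term at zero. A vertex constraint that asks the surface to stay put then costs $\|t\|^2$. That is how the constraint term pulls the map towards loop-closure targets while the other two terms resist distortion.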