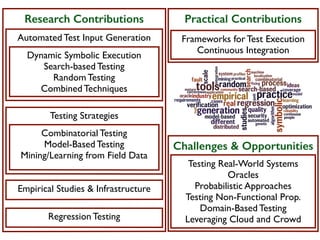

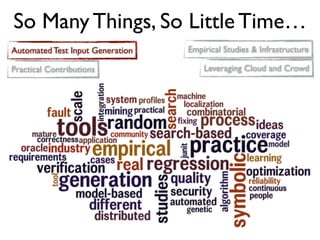

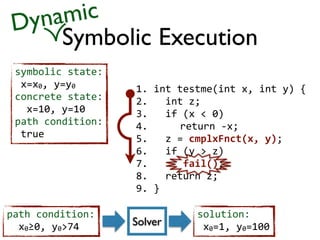

The document surveys the major developments and contributions in software testing research over the 14 years from 2000 to 2014. It identifies automated test input generation techniques, such as symbolic execution, search-based testing, and random testing, as the most significant research contributions. It also covers important testing strategies, empirical studies and supporting infrastructure, and practical contributions to software testing. The open challenges and opportunities it discusses include testing real-world systems, handling test oracles, testing non-functional properties, and leveraging cloud- and crowd-based approaches.