This document summarizes a research paper that proposes a key frame extraction methodology to facilitate video annotation. The methodology uses edge difference between consecutive video frames to determine if the content has significantly changed. Frames where the edge difference exceeds a threshold are selected as key frames. The algorithm calculates edge differences for all frame pairs in a video. It then computes statistics like mean and standard deviation to determine a threshold. Frames with differences above this threshold are extracted as key frames. The key frames extracted represent important content changes in the video. Extracting key frames reduces processing requirements for video annotation compared to analyzing all frames. The methodology was tested on videos from domains like transportation and performed well at selecting representative frames.

![International Journal of Computer Engineering and Technology (IJCET), ISSN 0976-

6367(Print), ISSN 0976 – 6375(Online) Volume 4, Issue 2, March – April (2013), © IAEME

Video annotation is a promising and essential step for content-based video search and

retrieval. It refers to attaching a metadata to the video for its faster and easier access.

Extraction of key frames from the video and to analyze only these frames instead of all the

frames present in the video can greatly improve the performance of the systems. Analysis of

these key frames can help in forming the annotations for the video.

Key frame is the frame which can represent the salient content and information of the

video. The key frames extracted must summarize the characteristics of the video, and the image

characteristics of a video can be tracked by all the key frames in time sequence. A basic rule of

key frame extraction is that key frame extraction would rather be wrong than not enough [1].

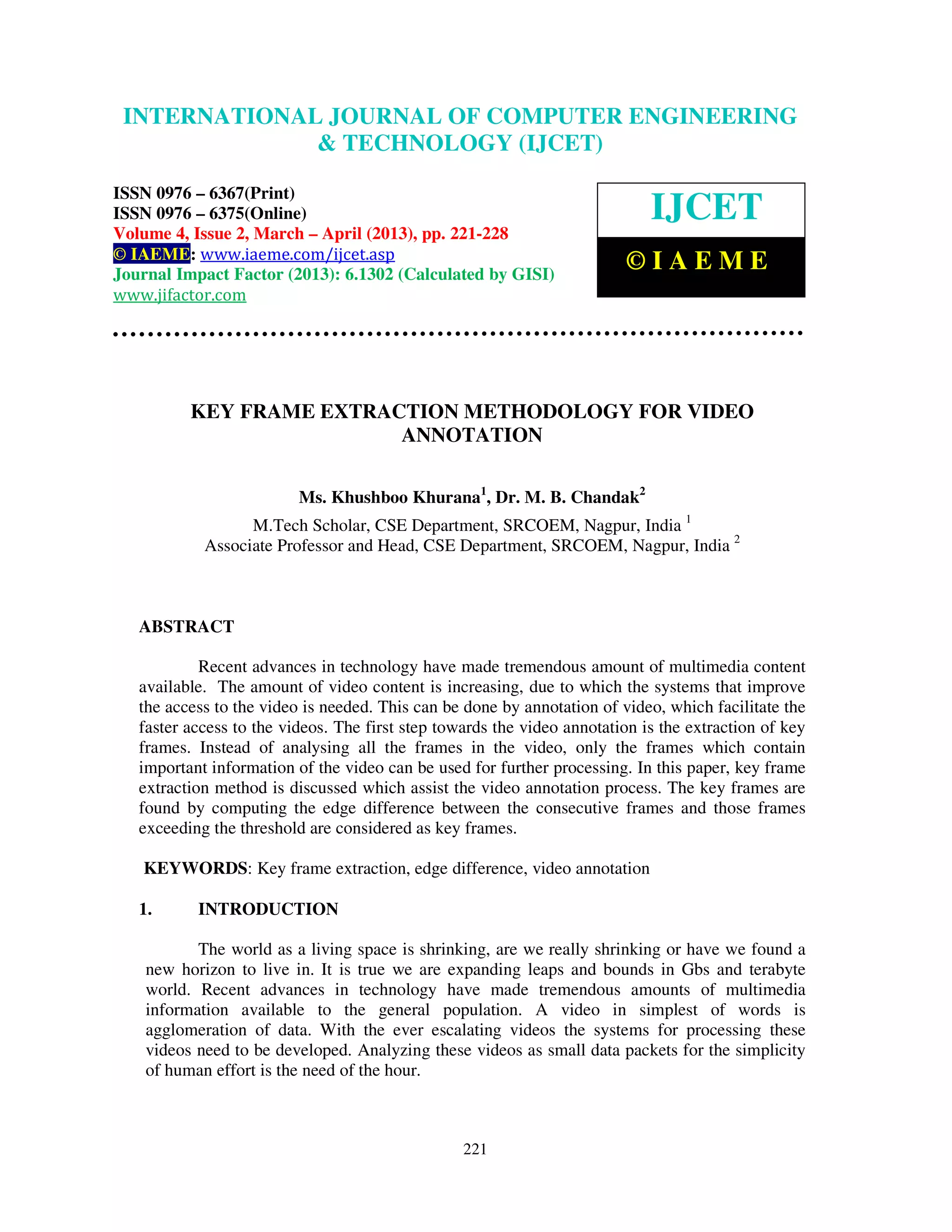

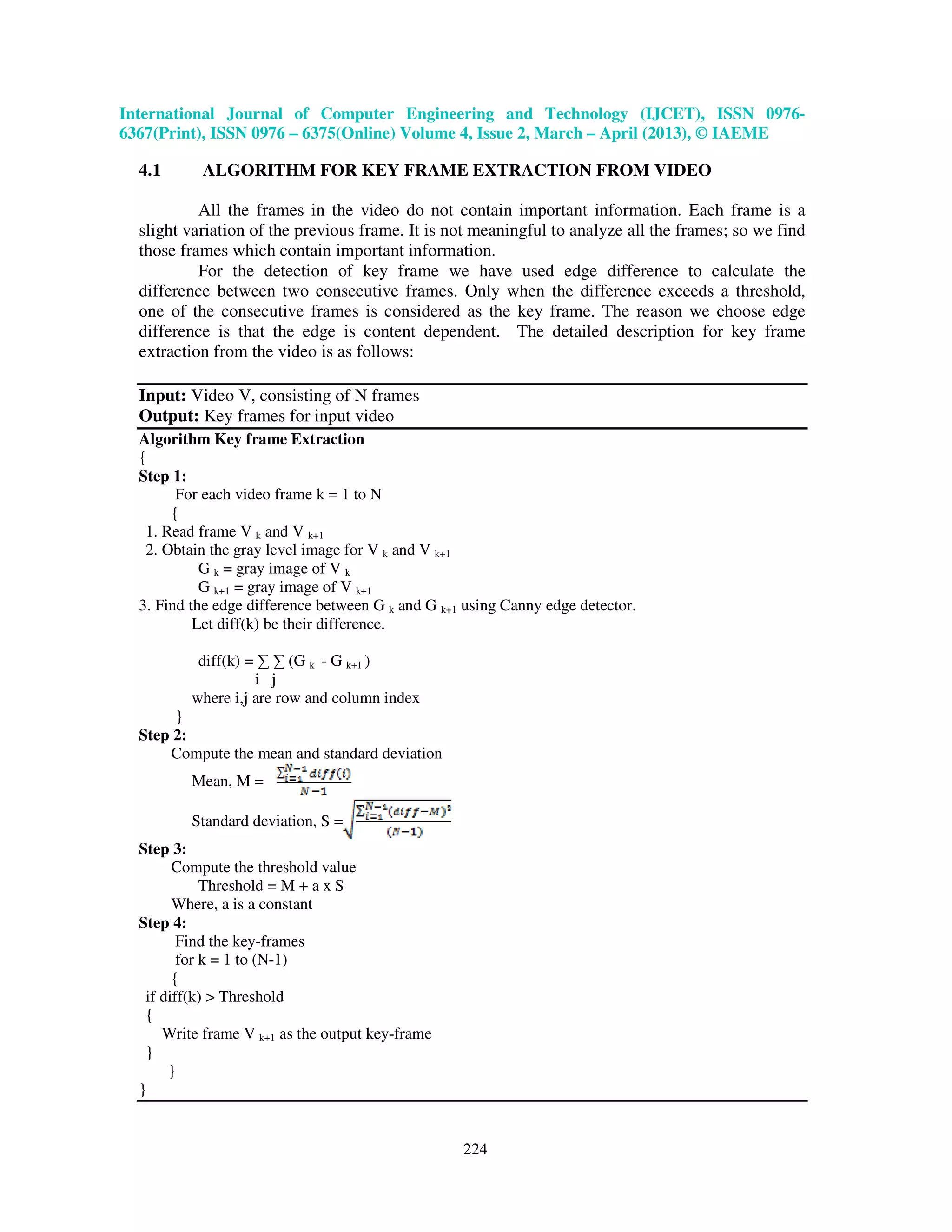

In this paper, we have proposed an algorithm for key frame extraction to facilitate the

video annotation process. The algorithm uses edge difference between the two consecutive

frames to find the difference between their contents. Our approach is shot-based. In shot based

method shots of the original video are first detected, and then one or more key frames are

extracted from each shot.

Methods of shot transition detection are: pixel-based comparison, template matching

and histogram-based method [2-3]. The pixel-based methods are susceptible to motion of

objects. So it is suitable to detect segmentation transition of the camera and object movement.

But in this method as each pixel is compared the time required is more. Template matching is

apt to result in error detection if only this method is used. The Histogram-based methods

entirely lose the location information. For example, two images with similar histograms may

have completely different content. So we have used the edge- based method. This method

considers the content of the frames.

The rest of this paper is organized as follows. Section 2 describes the uses of key frame

extraction. Section 3 presents the related work in the field of key frame extraction. In Section 4,

the proposed approach is described with the help of algorithm and flowchart. In section 5 the

results are specified and finally, we conclude in Section 6.

2. USES OF KEY-FRAME EXTRACTION

• Video transmission: In order to reduce the transfer stress in network and invalid information

transmission, the transmission, storage and management techniques of video information

become more and more important [1].

When a video is being transmitted, the use of key frames reduces the amount of data

required in video indexing and provides the framework for dealing with the video content [4].

In [5], a key frame based on-line coding video transmission is proposed. Key-frames

are fixed in advance. Each frame can only choose the latest coded and reconstructed key frame

as its reference frame. After coding and packetisation, compressed video packets are

transmitted with differentiated service classes. Key frame along with difference values are sent

from the source, using the key frame picture and the difference values the picture is

reconstructed at the destination.

• Video summarization: Video summarization is a compact representation of a video sequence.

It is useful for various video applications such as video browsing and retrieval systems. A

video summarization can be a preview sequence which can be a collection of key frames

which is a set of chosen frames of a video. Key-frame-based video summarization may lose

the spatio-temporal properties and audio content in the original video sequence; it is the

simplest and the most common method. When temporal order is maintained in selecting the

key frames, users can locate specific video segments of interest by choosing a particular key

222](https://image.slidesharecdn.com/keyframeextractionmethodologyforvideoannotation-130410094812-phpapp02/75/Key-frame-extraction-methodology-for-video-annotation-2-2048.jpg)

![International Journal of Computer Engineering and Technology (IJCET), ISSN 0976-

6367(Print), ISSN 0976 – 6375(Online) Volume 4, Issue 2, March – April (2013), © IAEME

frame using a browsing tool. Key frames are also effective in representing visual content of a

video sequence for retrieval purposes. Video indexes may be constructed based on visual

features of key frames, and queries may be directed at key frames using image retrieval

techniques [6].

• Video annotation: Video annotation is the extraction of the information about video, adding

this information to the video which can help in browsing, searching, analysis, retrieval,

comparison, and categorization. Annotation is to attach data to some other piece of data (i.e.

add metadata to data) [7].

To fasten the access of video, it is annotated. It is not momentous to analyze each video frame

for this, so key frames are found and only these are analyzed for annotation purpose.

• Video indexing: Key frames reduce the amount of data required in video indexing and

provides framework for dealing with the video content.

• Before downloading any video over the internet, if key frames are shown besides it, users can

predict the content of the video and decide whether it is pertinent to his search.

• Other applications such as creating chapter titles in DVDs and prints from video.

3. RELATED WORK

The work in the area of key frame extraction is either in the spatial domain or in the

compressed domain. In [8] key frames are extracted using histogram difference between two

consecutive frames.

Jin-Woo Jeong, Hyun-Ki Hong, and Dong-Ho Lee have proposed an approach for the

detection of a video shot and its corresponding key frame can be performed based on the

visual similarity between adjacent video frames.They used Euclidean distance measure to

visual similarity between video frames. First frame of each shot is selected as a key frame [9].

Janko Calic and Ebroul Izquierdo proposed an algorithm for scene change detection

and key frame extraction [10]. It generates the frame difference metrics by analyzing statistics

of the macro-block feature extracted from MPEG videos. Temporal segmentation is used to

detect the scene change.

A more elaborate method is employed by [11] that propose an approach which uses

shot boundary detection to segment the video into shots and the k-means algorithm to

determine cluster representatives for each shot that are used as key frames. MPEG-7 Color

Layout Descriptor (CLD) is used as a feature to compute differences between consecutive

frames. As k-means is employed after finding shot boundary its complexity increases.

4. THE PROPOSED APPROACH

The first step towards video annotation is the extraction of key frames. The key

frames must contain the important frames so as to describe the contents of the video in the

later processing stages. After the extraction of important frames, instead of analyzing the

contents of all video frames, only the key frame images are analyzed to give the annotation.

The number of frames should not be reduced to an extent that important information is not

covered by the key frames. As the key frames are analyzed after the key frame extraction

process, the algorithm for extraction should not be very complex or time consuming.

223](https://image.slidesharecdn.com/keyframeextractionmethodologyforvideoannotation-130410094812-phpapp02/75/Key-frame-extraction-methodology-for-video-annotation-3-2048.jpg)

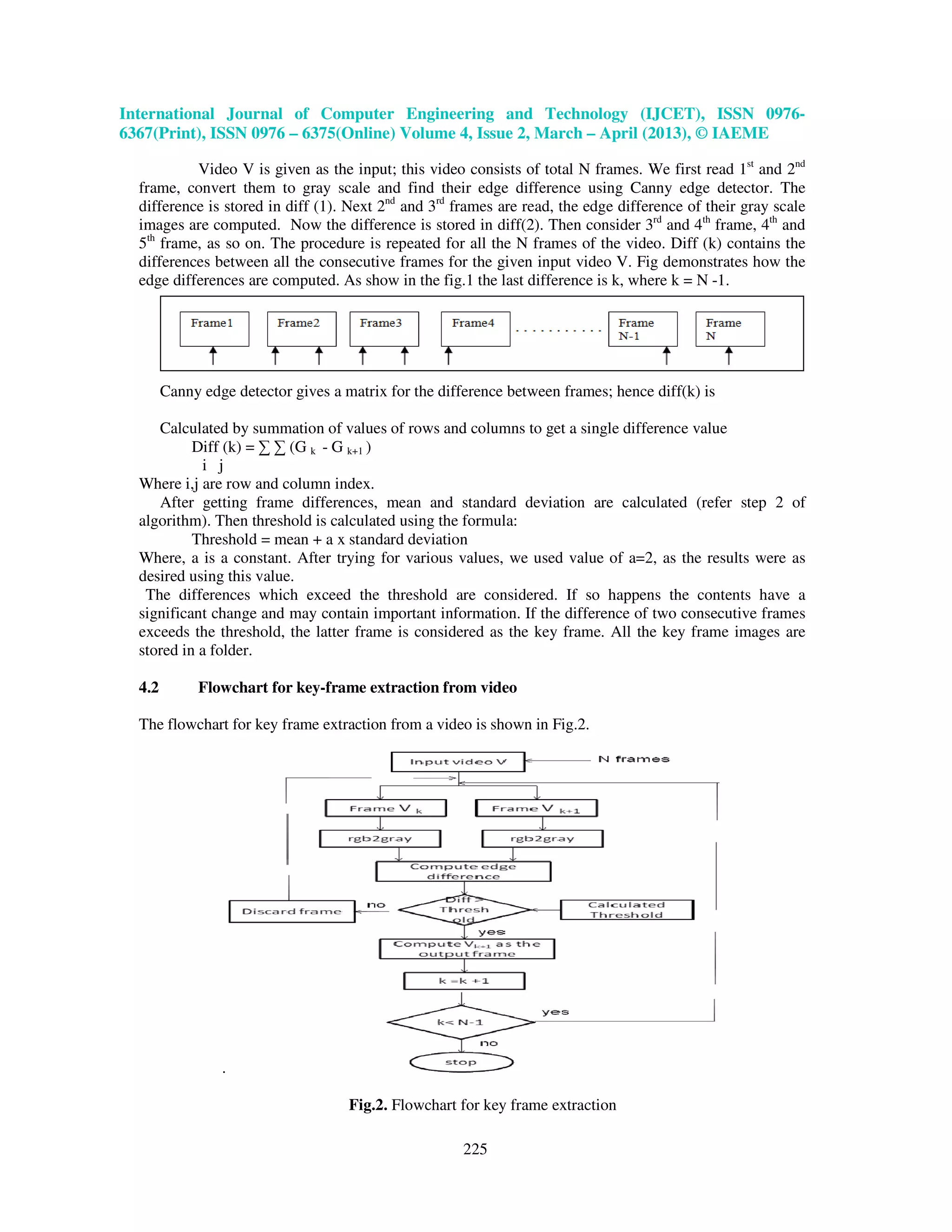

![International Journal of Computer Engineering and Technology (IJCET), ISSN 0976-

6367(Print), ISSN 0976 – 6375(Online) Volume 4, Issue 2, March – April (2013), © IAEME

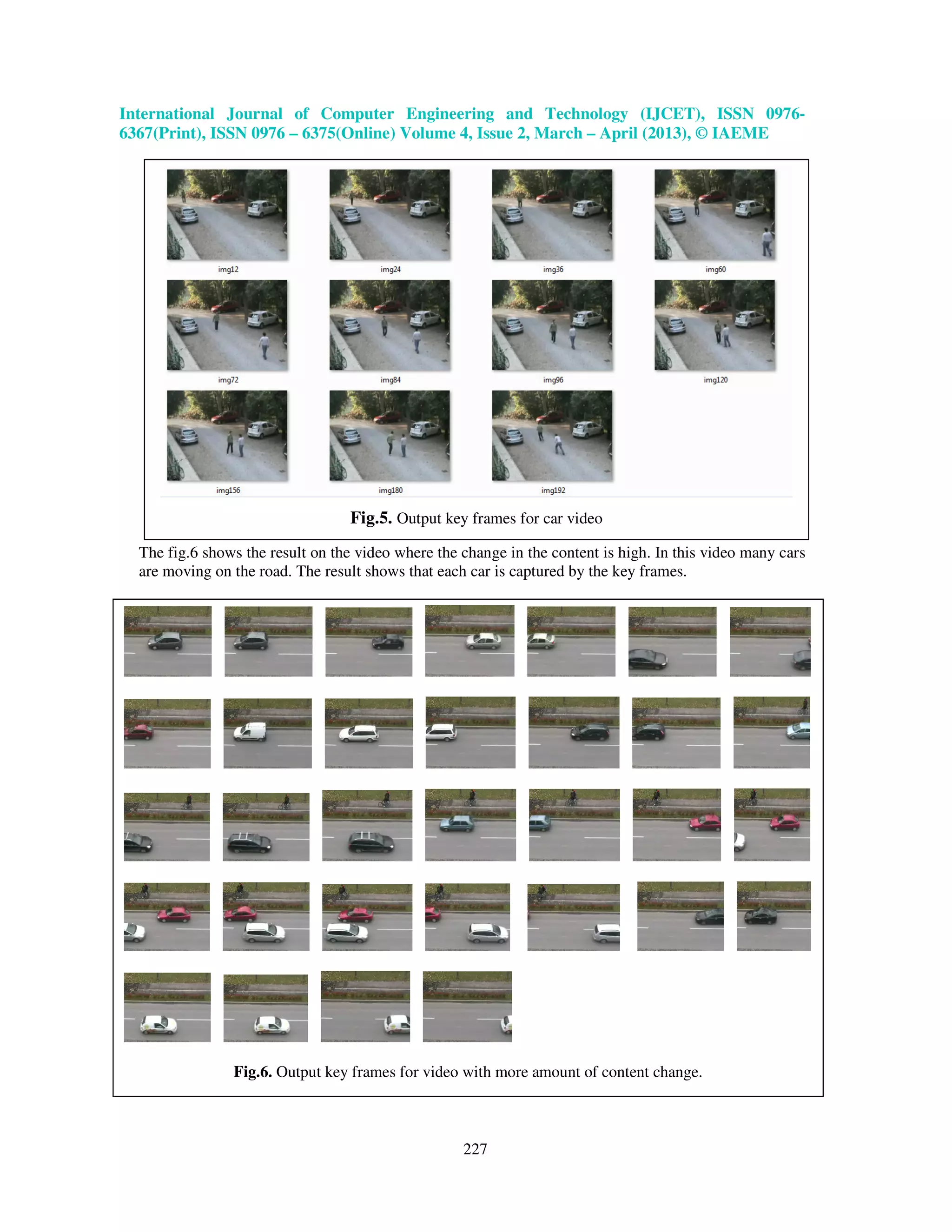

6. CONCLUSION AND FUTURE WORK

Depending upon the contents and the change in contents of the video, the key frames are

extracted. As seen in the first video the no. of key frames is less; this is because the change of content

in this video was very less. In the third video example above, the change of content or the amount of

information in the video is more so more number of frames are extracted as key frames.

As the key frames need to be processed for annotation purpose, the important information must

not be missed. Our algorithm can be improved by further reducing the number of key frames extracted.

This can be done by adding one more pass. After the phase 1 the key frames extracted can again be

given as input to the algorithm. This will reduce the redundant frames or the frames which contain

similar contents, but adding one more pass will increase the execution time. As the frames need to be

analyzed after key frame extraction for the purpose of annotation, some amount of redundancy can be

considered rather than increasing the execution time.

In future, we can design a video annotation system which will utilize the key frames obtained

from the above algorithm.

REFERENCES

[1] G. Liu, and J. Zhao, “Key Frame Extraction from MPEG Video Stream ”, Proceedings of the

Second Symposium International Computer Science and Computational Technology (ISCSCT

’09) China, 26-28, Dec. 2009, pp. 007-011.

[2] C. F. Lam, M. C. Lee, “Video segmentation using color difference histogram,” Lecture Notes in

Computer Science, New York: Springer Press, pp. 159–174., 1998.

[3] A. Hampapur, R. Jain, and T. Weymouth, “Production model based digital video segmentation,”

Multimedia Tools Application, vol. 1, no. 1, pp.9–46, 1995.

[4] T. Liu, H. Zhang, and F. Qi, “A novel video key-frame-extraction algorithm based on perceived

motion energy model,” IEEE Transactions on Circuits and Systems. For Video Technology, vol.

13, no. 10, pp. 1006-1013, 2003.

[5] Q. Zhang and G. Liu, “A key-frame-based error resilient coding scheme for video transmission

over differentiated services networks,” In proceeding of: Packet Video 2007, 12-13 Nov. 2007 ,

pp. 85 – 90.

[6] P. Mundur, Y. Rao, Y. Yesha, “Keyframe-based Video Summarization using Delaunay

Clustering,” International Journal on Digital Libraries , Volume 6 Issue 2, April 2006

pp 219 - 232.

[7] K. Khurana, M. B. Chandak, “Study of Various Video Annotation Techniques,” International

Journal of Advanced Research in Computer and Communication Engineering Vol. 2, Issue 1,

January 2013.

[8] S. Thakare, “Intelligent Processing and Analysis of Image for shot Boundary Detection”,

International Journal of Engineering Research and Applications, Vol. 2, Issue 2, Mar-Apr 2012,

pp.366-369.

[9] J. Jeong, H. Hong, and D. Lee, “Ontology-based Automatic Video Annotation Technique In

Smart TV Environment”, IEEE Transaction on consumer Electronics, Vol. 57, No. 4, November

2011

[10] J. Calic and E. Izquierdo, “Efficient Key-frame Extraction And Video Analysis”, International

Symposium On Information Technology, April 2002,IEEE.

[11] D. Borth, A. Ulges, C. Schulze, T. M. Breuel, “Key frame Extraction for Video Tagging &

Summarization”, 2008.

[12] Reeja S R and Dr. N. P Kavya, “Motion Detection for Video Denoising – The State of Art And

The Challenges” International journal of Computer Engineering & Technology (IJCET), Volume

3, Issue 2, 2012, pp. 518 - 525, ISSN Print: 0976 – 6367, ISSN Online: 0976 – 6375.

228](https://image.slidesharecdn.com/keyframeextractionmethodologyforvideoannotation-130410094812-phpapp02/75/Key-frame-extraction-methodology-for-video-annotation-8-2048.jpg)