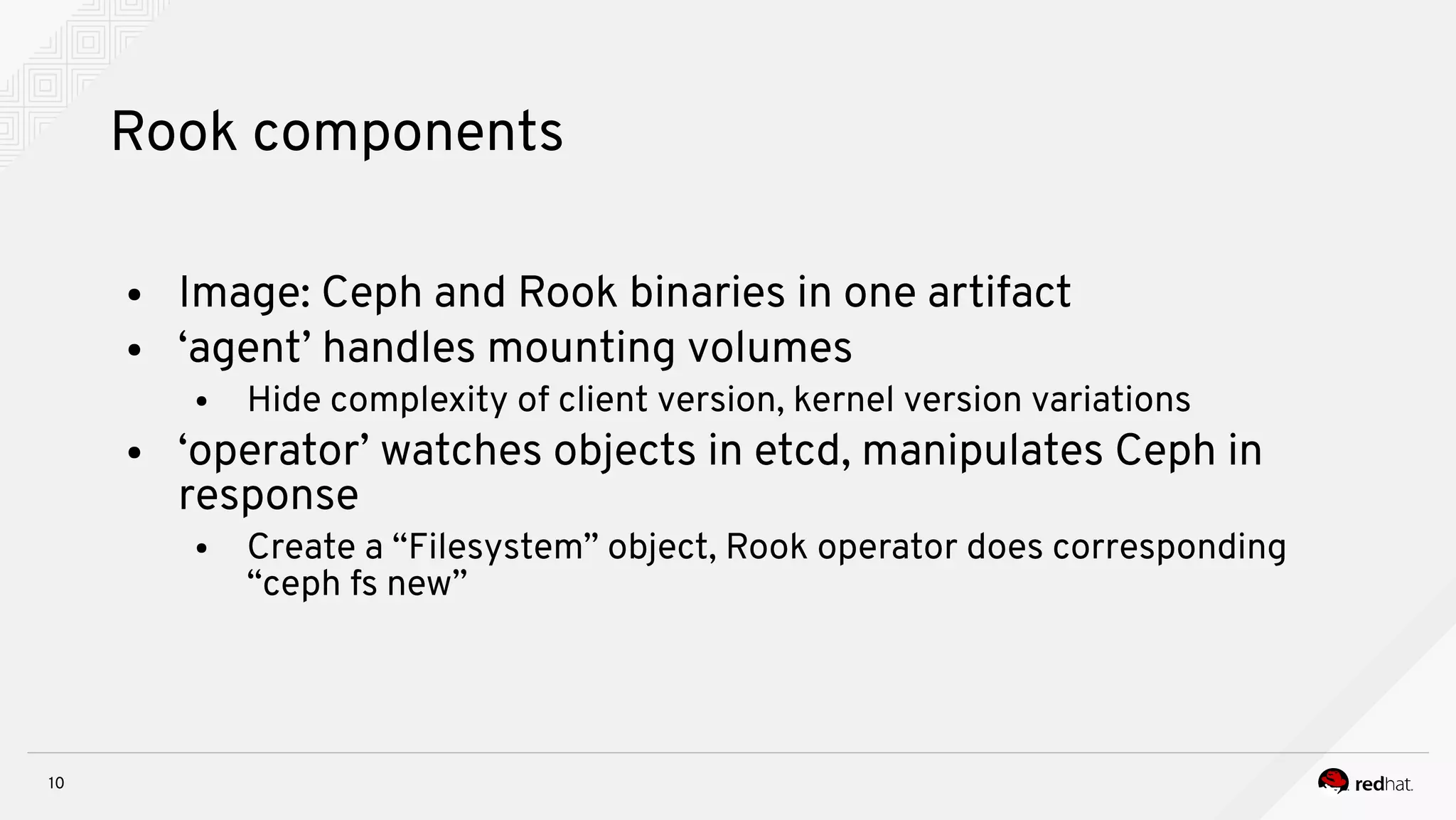

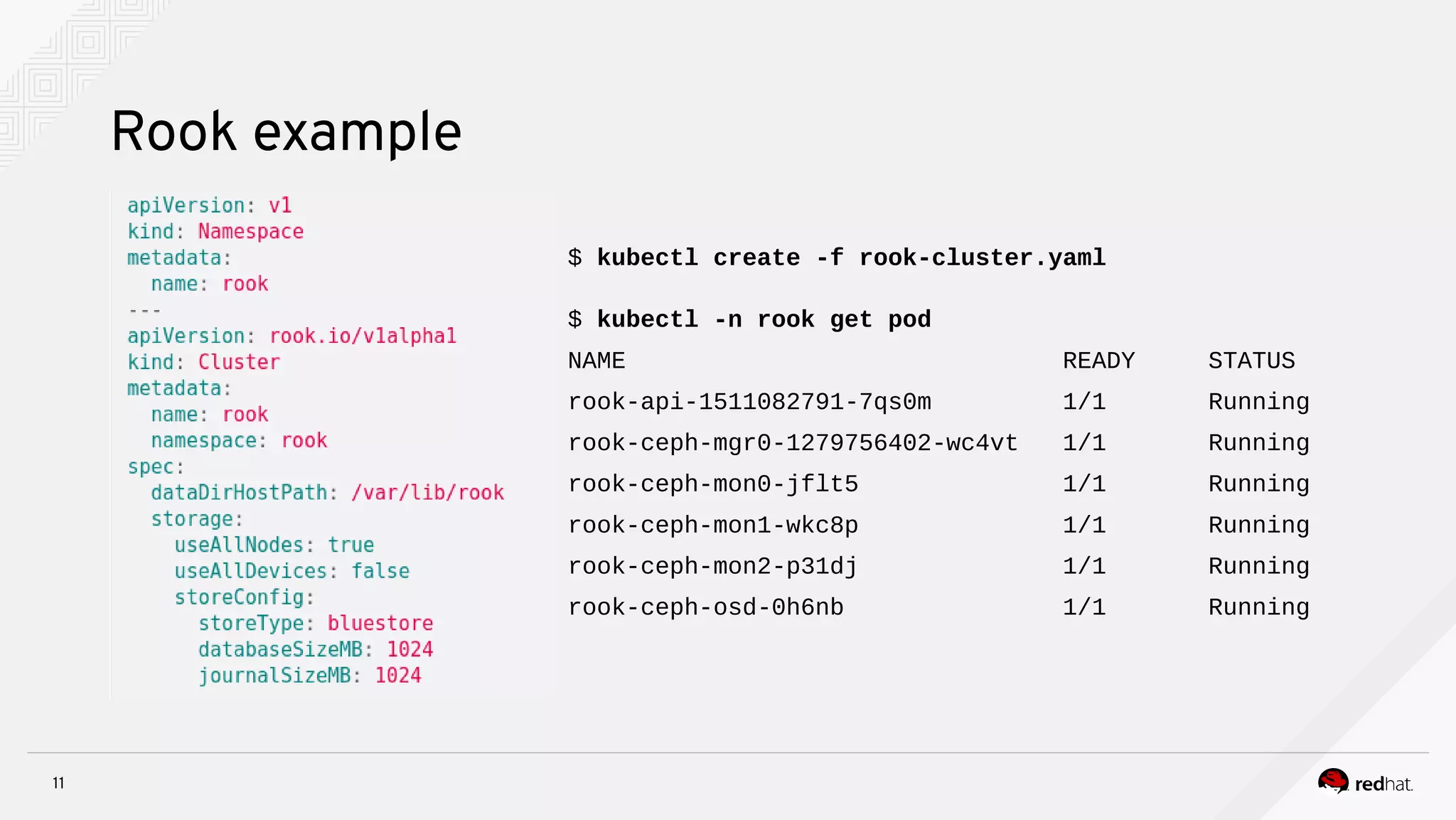

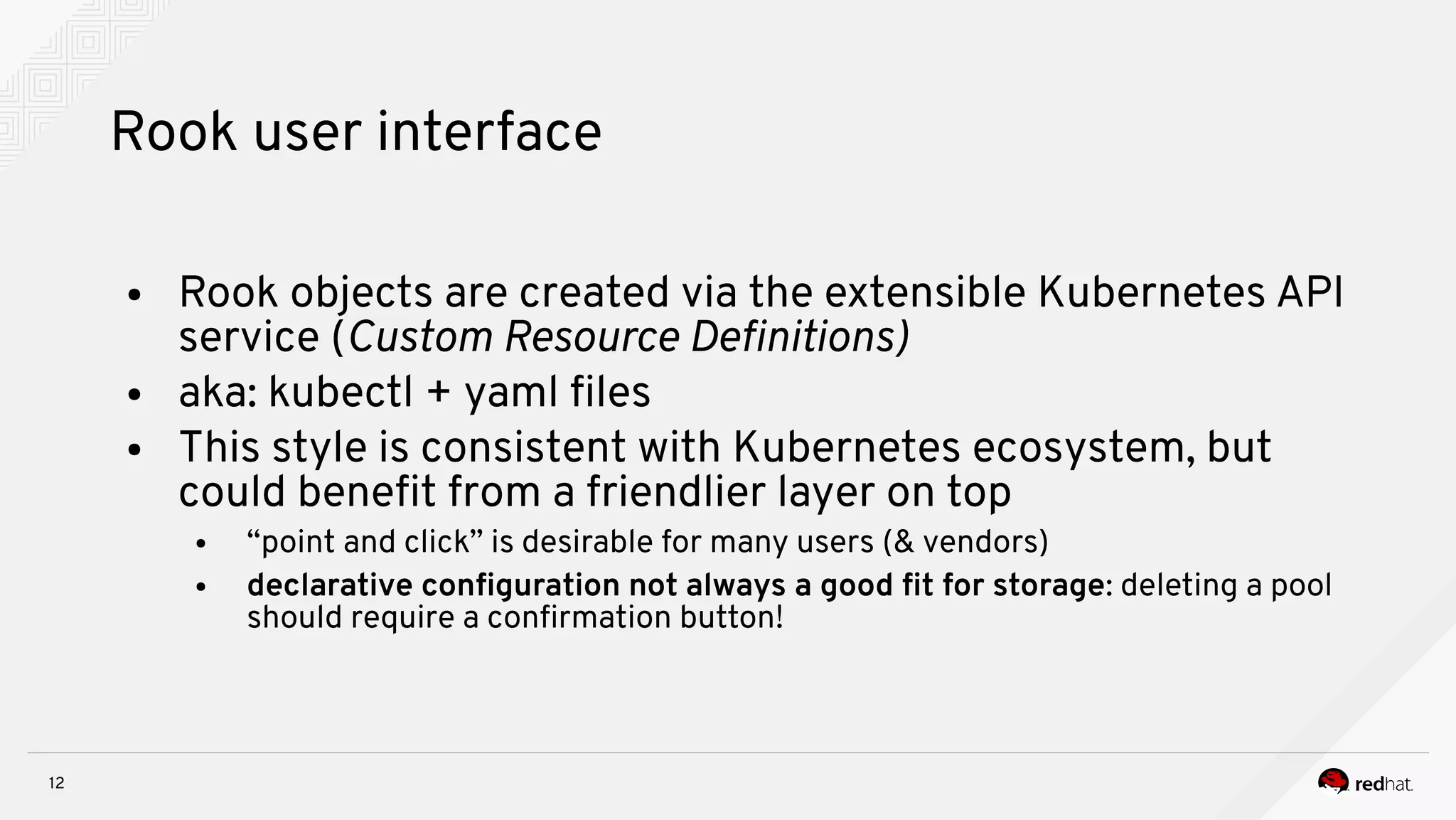

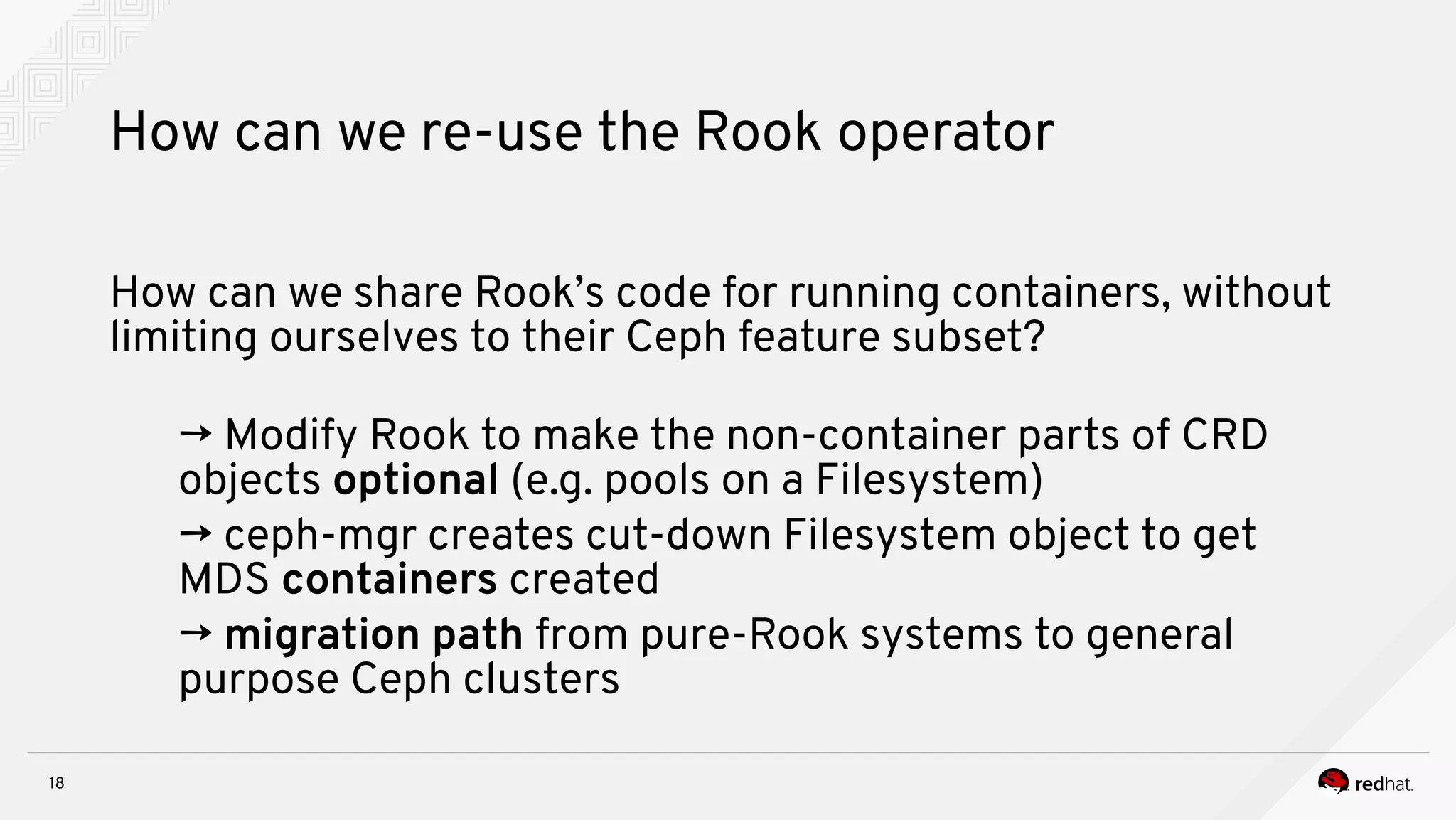

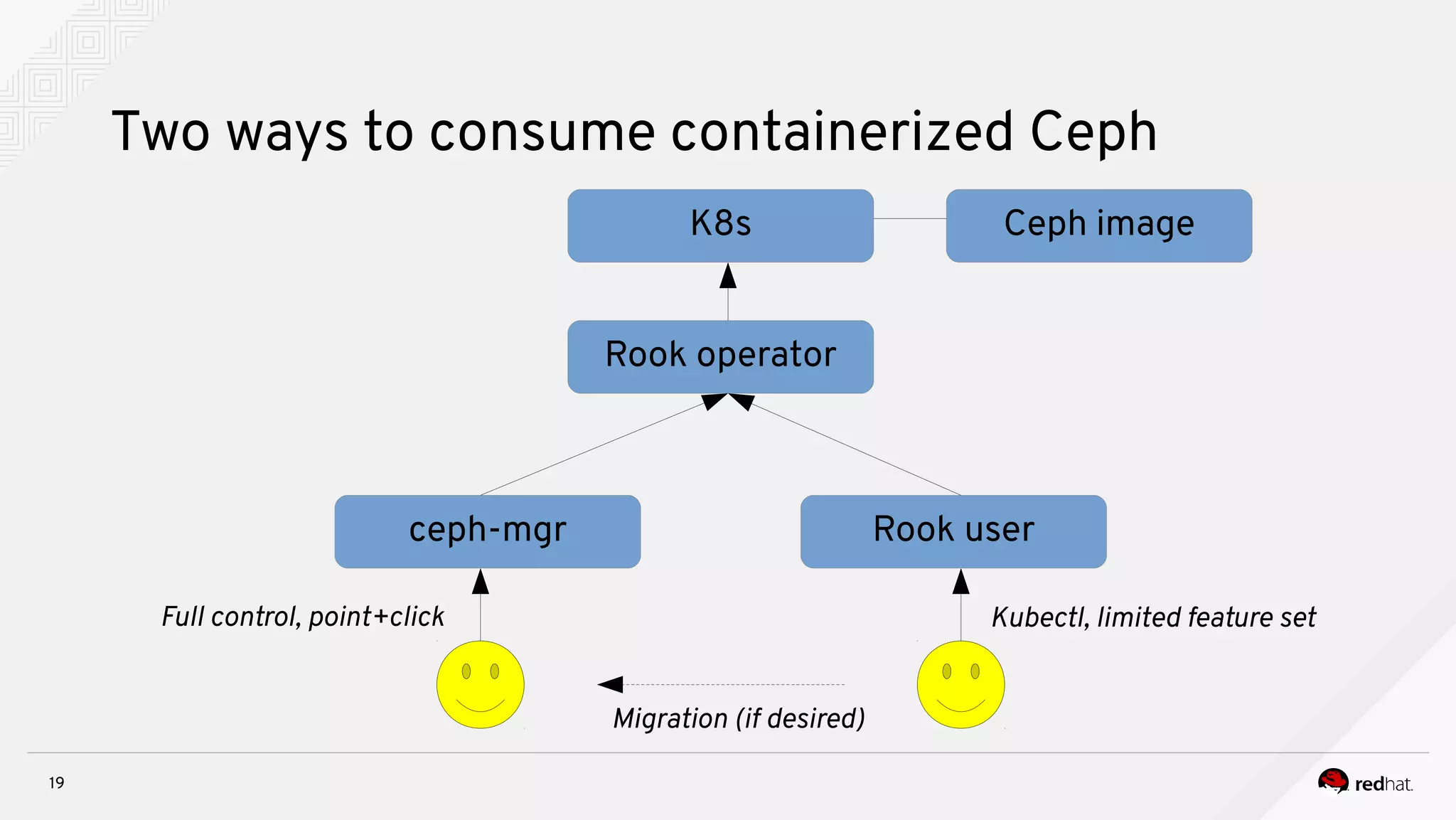

This document discusses combining Ceph with Kubernetes for container-based storage management. It describes how Rook simplifies consuming Ceph storage by exposing it through Kubernetes concepts and APIs. The Ceph dashboard and the Ceph Manager daemon (ceph-mgr) could also be integrated with Rook to provide a unified management experience, so that Ceph storage can be configured through Kubernetes and the dashboard without deep Ceph expertise. The document also outlines several ongoing projects that aim to simplify Ceph management further, such as automatic placement group configuration and progress monitoring. The overall goal is to reduce the cognitive load on users and enable more automated workflows.
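
To make "consuming Ceph storage using Kubernetes concepts and APIs" more concrete, here is a minimal sketch of how a replicated Ceph pool might be declared as a Rook custom resource. It is illustrative only and not taken from the slides: the helper `block_pool_manifest`, the pool name `replicapool`, the `rook-ceph` namespace, and the default of three replicas are all assumptions. The script only builds and prints the manifest; it does not contact a cluster.

```python
import json


def block_pool_manifest(name, namespace="rook-ceph", replicas=3, failure_domain="host"):
    """Build a Rook CephBlockPool manifest as a plain dict.

    Hypothetical helper for illustration; the default values are assumptions.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return {
        "apiVersion": "ceph.rook.io/v1",
        "kind": "CephBlockPool",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Spread replicas across distinct hosts (assumed failure domain).
            "failureDomain": failure_domain,
            "replicated": {"size": replicas},
        },
    }


if __name__ == "__main__":
    # Emit the manifest as JSON, which Kubernetes tooling accepts like YAML.
    print(json.dumps(block_pool_manifest("replicapool"), indent=2))
```

The printed JSON could then be saved to a file and submitted with `kubectl apply -f`, at which point the Rook operator, if installed, would be expected to create the pool in Ceph. The point of the sketch is that the user describes the desired state in Kubernetes terms rather than running Ceph commands directly.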