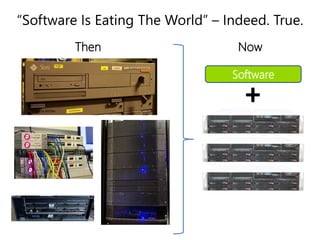

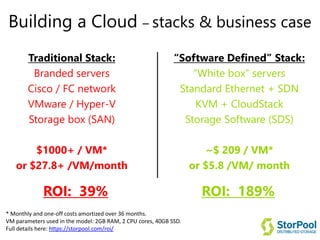

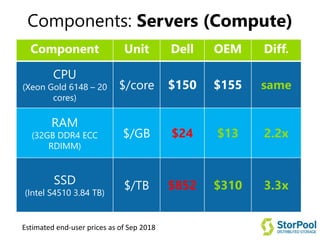

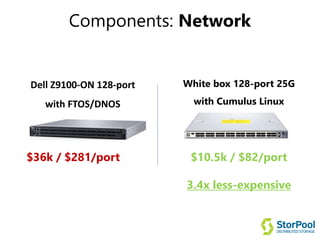

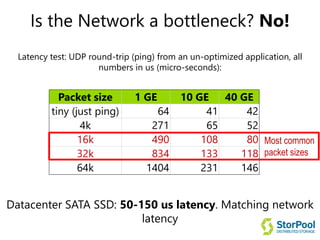

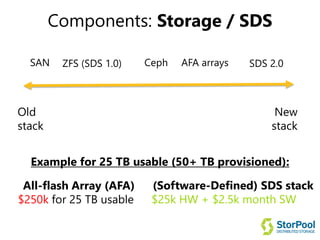

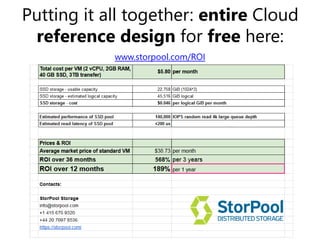

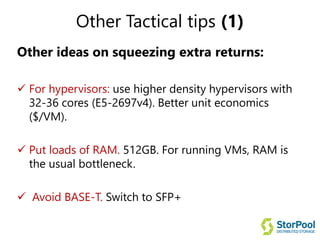

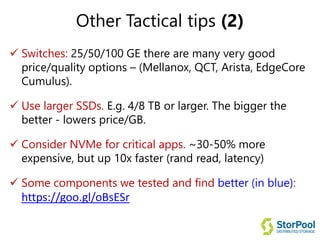

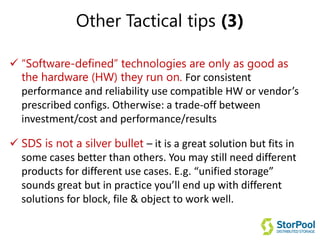

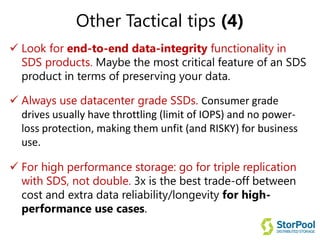

This document gives tactical advice on building software-defined clouds, with a focus on cutting costs. It argues for standard hardware and software-defined technologies in place of proprietary solutions. Specific recommendations include open-source hypervisors and storage software, whitebox servers, Ethernet networking, and larger SSDs. A cloud built from these software-defined components can have a significantly lower total cost of ownership (TCO) than one built with traditional hardware-centric approaches.
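To make the TCO comparison concrete, here is a minimal sketch of a simple, undiscounted TCO model: up-front hardware spend plus recurring costs (software licences, vendor support, operations) multiplied by the number of years in service. The `CloudOption` fields and function names are hypothetical, chosen for illustration. The model is an assumption rather than the method the slides use, and the usage figures are placeholders, not measurements or vendor prices.

```python
from dataclasses import dataclass


@dataclass
class CloudOption:
    """Hypothetical cost inputs for one cloud build-out (placeholder model)."""
    name: str
    hardware_capex: float       # servers, switches, drives bought up front
    annual_license_fees: float  # e.g. proprietary hypervisor/storage licences per year
    annual_support: float       # vendor support contracts per year
    annual_operations: float    # power, cooling, staff time per year


def total_cost_of_ownership(option: CloudOption, years: int) -> float:
    """Undiscounted TCO: capex plus recurring yearly costs over `years`."""
    if years < 0:
        raise ValueError("years must be non-negative")
    recurring = (option.annual_license_fees
                 + option.annual_support
                 + option.annual_operations)
    return option.hardware_capex + recurring * years


def savings(baseline: CloudOption, candidate: CloudOption, years: int) -> float:
    """TCO difference over `years`; positive means `candidate` is cheaper."""
    return (total_cost_of_ownership(baseline, years)
            - total_cost_of_ownership(candidate, years))


if __name__ == "__main__":
    # Placeholder figures for illustration only; substitute real quotes.
    proprietary = CloudOption("hardware-centric", 1000.0, 100.0, 50.0, 50.0)
    software_defined = CloudOption("software-defined", 800.0, 0.0, 20.0, 50.0)
    print(savings(proprietary, software_defined, years=5))
```

Because licence fees recur every year while hardware is a one-off cost, this kind of model shows why dropping proprietary licences tends to matter more the longer the cloud runs. A fuller model would add discounting, hardware refresh cycles, and per-TB or per-VM normalization.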