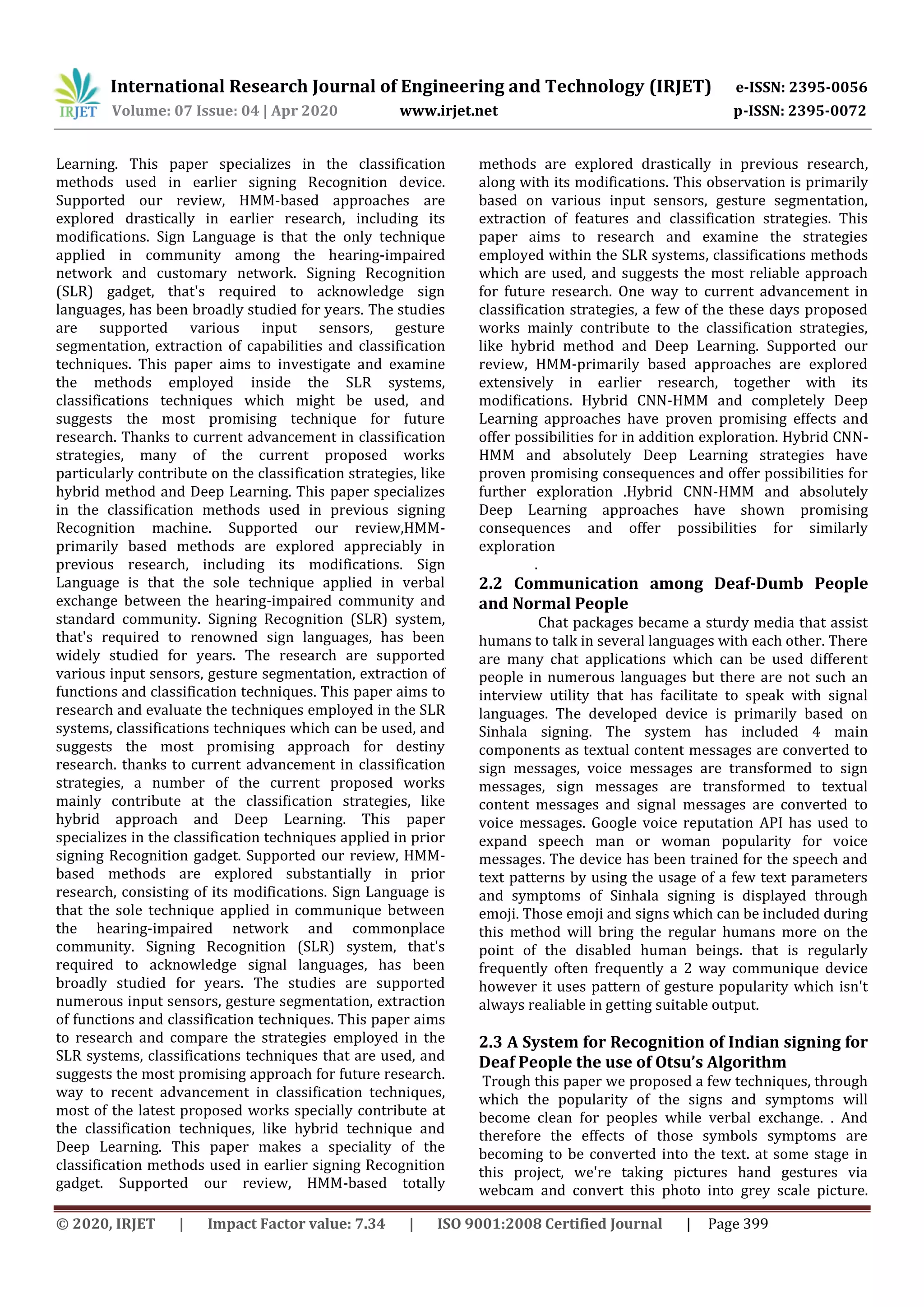

This document presents a robust sign language and hand gesture recognition system using convolutional neural networks. The system captures image frames and processes them through various neural network layers to classify hand gestures into letters, numbers, or other symbols. It segments the hand from images using color thresholds and edge detection. The images then undergo preprocessing like resizing before being classified by the CNN. The CNN is trained on a large dataset to accurately recognize gestures in sign language and provide text output to bridge communication between deaf and non-signing individuals. The system achieved good results classifying several alphabet letters but could be expanded to recognize word combinations.
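The colour-threshold segmentation and resizing steps described above can be illustrated with a minimal sketch. The RGB bounds, the function names `color_mask` and `resize_nearest`, and the nearest-neighbour resizing strategy are assumptions made for illustration only; the paper does not state which thresholds or resizing method were used.

```python
# Hypothetical RGB bounds for "hand-coloured" pixels; not taken from the paper.
LOWER = (95, 40, 20)
UPPER = (255, 220, 180)


def color_mask(image, lower=LOWER, upper=UPPER):
    """Return a binary mask: 1 where every channel lies within [lower, upper]."""
    return [
        [int(all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper)))
         for pixel in row]
        for row in image
    ]


def resize_nearest(grid, new_h, new_w):
    """Resize a 2-D grid to new_h x new_w using nearest-neighbour sampling."""
    old_h, old_w = len(grid), len(grid[0])
    return [
        [grid[r * old_h // new_h][c * old_w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]


# A 2x2 toy image: two skin-like pixels on the diagonal, two background pixels.
image = [
    [(200, 150, 120), (10, 10, 10)],
    [(0, 0, 255), (180, 120, 90)],
]

mask = color_mask(image)              # segment the hand-coloured pixels
resized = resize_nearest(mask, 4, 4)  # bring the mask to a fixed input size
```

In a real pipeline the thresholds would typically be tuned per camera and lighting condition, and the fixed output size would match the input size expected by the CNN.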

![International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 07 Issue: 04 | Apr 2020 www.irjet.net p-ISSN: 2395-0072

© 2020, IRJET | Impact Factor value: 7.34 | ISO 9001:2008 Certified Journal | Page 401

these numbers. This value describes the intensity of the pixel at each point.

To solve this problem, the computer looks for base-level characteristics. For a human, such characteristics are, for example, a trunk or large ears. For the computer, these characteristics are boundaries or curvatures. By passing the image through groups of convolutional layers, the computer then constructs more abstract concepts. In more detail: the image is passed through a series of convolutional, nonlinear, pooling, and fully connected layers.
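The layer sequence described above can be sketched in plain Python. This is a minimal illustration, not the network used in this work: the kernel is a hand-picked vertical-edge filter rather than a learned one, and the helper names (`conv2d`, `relu`, `max_pool`, `fully_connected`) and the weights are assumptions chosen for the example.

```python
def conv2d(image, kernel):
    """Valid 2-D cross-correlation of a grayscale image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j]
             for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]


def relu(fmap):
    """Nonlinear layer: replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling over size x size windows."""
    return [
        [max(fmap[r + i][c + j] for i in range(size) for j in range(size))
         for c in range(0, len(fmap[0]) - size + 1, size)]
        for r in range(0, len(fmap) - size + 1, size)
    ]


def fully_connected(fmap, weights, biases):
    """Flatten the feature map and compute one score per output unit."""
    flat = [v for row in fmap for v in row]
    return [sum(w * x for w, x in zip(ws, flat)) + b
            for ws, b in zip(weights, biases)]


# 6x6 grayscale image: dark left half (0), bright right half (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(6)]

# Hand-picked vertical-edge kernel (a trained CNN would learn its kernels).
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = conv2d(image, kernel)   # 4x4 map, strong response at the boundary
activated = relu(features)         # nonlinear layer
pooled = max_pool(activated)       # 2x2 map after pooling
scores = fully_connected(
    pooled,
    weights=[[1, 0, 0, 1], [0, -1, -1, 0]],  # illustrative weights only
    biases=[0, 0],
)
```

The convolution responds only where the dark and bright halves meet, which is the kind of boundary feature the text refers to; later layers combine such responses into more abstract concepts.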

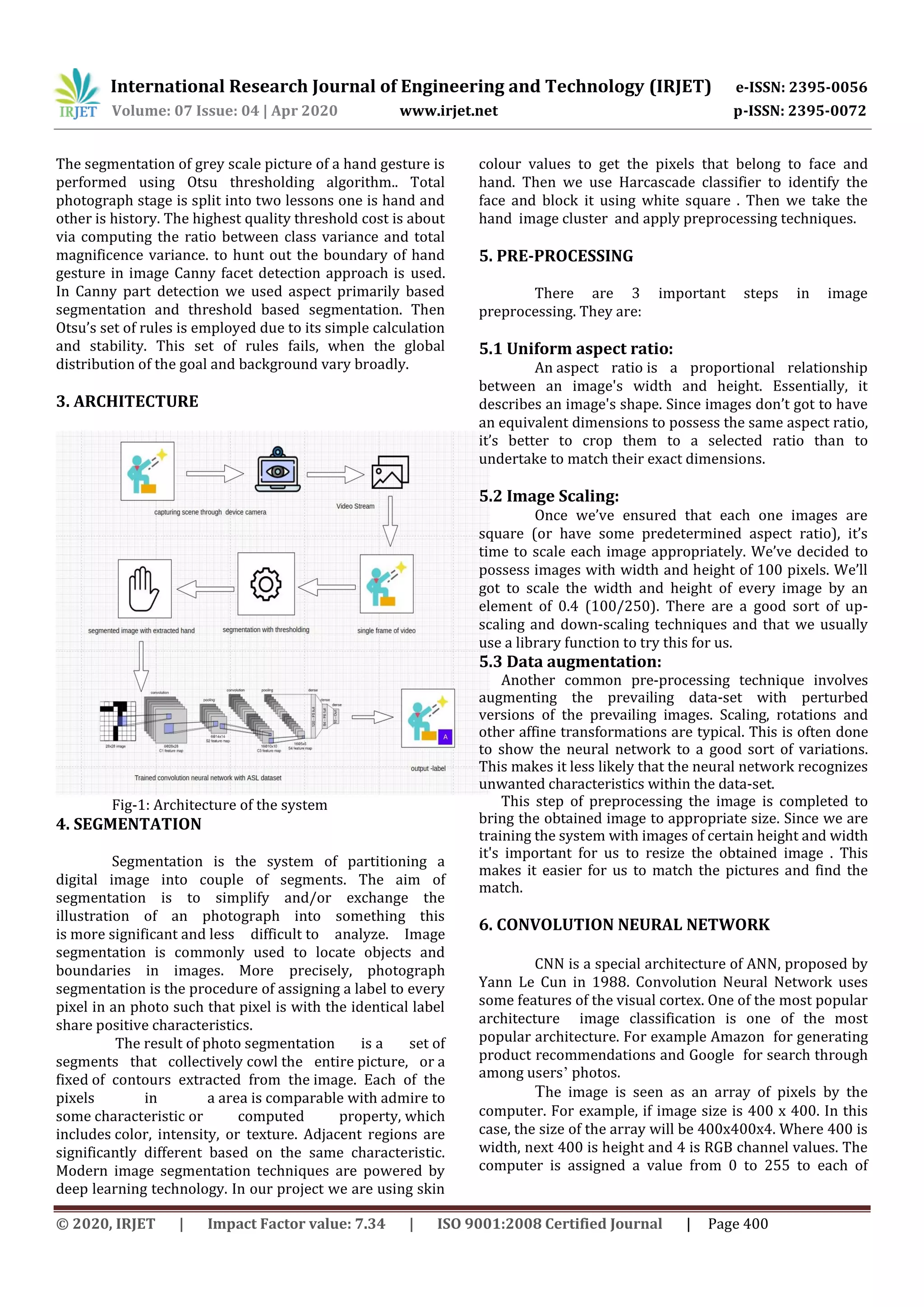

RESULTS

Our system produced appropriate results. We trained it on American Sign Language gestures. Samples of the results obtained are shown below.

Fig-2: Result of Alphabet A

Fig-3: Result of Alphabet W

Fig-4: Result of Alphabet L

CONCLUSION

We obtained letters as the output for the signs. The project can be extended further so that the recognized letters are concatenated into words and statements, making the output more readable.
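One possible way to join per-frame letters into text is sketched below. This extension is not implemented in this work; the function name `letters_to_text`, the `min_run` threshold, and the `"space"` label are hypothetical choices made for illustration.

```python
from itertools import groupby


def letters_to_text(frame_labels, min_run=3, space_label="space"):
    """Collapse per-frame predictions into text.

    A label is accepted only if it is held for at least `min_run`
    consecutive frames; shorter runs are treated as noise.
    """
    chars = []
    for label, run in groupby(frame_labels):
        if len(list(run)) < min_run:
            continue  # too short to be a deliberate sign
        chars.append(" " if label == space_label else label)
    return "".join(chars)


# Simulated classifier output, one label per captured frame.
frames = ["H"] * 4 + ["I"] * 3 + ["A"] * 1 + ["space"] * 3 + ["L"] * 5
text = letters_to_text(frames)
```

A known limitation of this simple approach is that a doubled letter signed without a pause collapses into a single character, so a real system would need an explicit separator gesture or timing rule.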

Nowadays, applications use several kinds of images as sources of information for interpretation and analysis, and several features must be extracted from them to support these applications. The aim of this project is to create a more robust offline sign language recognition system that produces the most appropriate output. In our work, we trained the system on a large data set of images. We then applied cluster segmentation with respect to colour and edges. Each image then undergoes feature extraction using various methods to make it more readable by the computer. The closest match in the database is then obtained, and its label is displayed as the output.
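The closest-match step can be illustrated with a small nearest-neighbour lookup. The feature vectors, the use of Euclidean distance, and the function name `closest_label` are assumptions for this sketch; the paper does not specify the feature representation or the distance measure.

```python
import math


def closest_label(features, database):
    """Return the label of the stored entry nearest to `features`.

    `database` is a list of (label, feature_vector) pairs; distance is
    Euclidean, which is an assumption made for this sketch.
    """
    label, _ = min(database, key=lambda entry: math.dist(entry[1], features))
    return label


# Toy database with made-up 3-dimensional feature vectors.
database = [
    ("A", [0.9, 0.1, 0.1]),
    ("L", [0.2, 0.9, 0.1]),
    ("W", [0.1, 0.2, 0.9]),
]

query = [0.25, 0.8, 0.2]  # features extracted from a new image
predicted = closest_label(query, database)
```

The predicted label is what would be displayed to the user as text output.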

A sign language recognition system is a powerful tool for building expert knowledge, detecting edges, and combining inaccurate information from different sources. The intent of the convolutional neural network is to produce appropriate classifications and accurate output.

REFERENCES

[1] https://medium.com/@RaghavPrabhu/understanding-of-convolutional-neural-network-cnn-deep-learning-99760835f148

[2] https://adeshpande3.github.io/adeshpande3.github.io/A-Beginner's-Guide-To-Understanding-Convolutional-Neural-Networks/

[3] http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.734.8389&rep=rep1&type=pdf](https://image.slidesharecdn.com/irjet-v7i483-210128050212/75/IRJET-A-Robust-Sign-Language-and-Hand-Gesture-Recognition-System-using-Convolution-Neural-Network-4-2048.jpg)