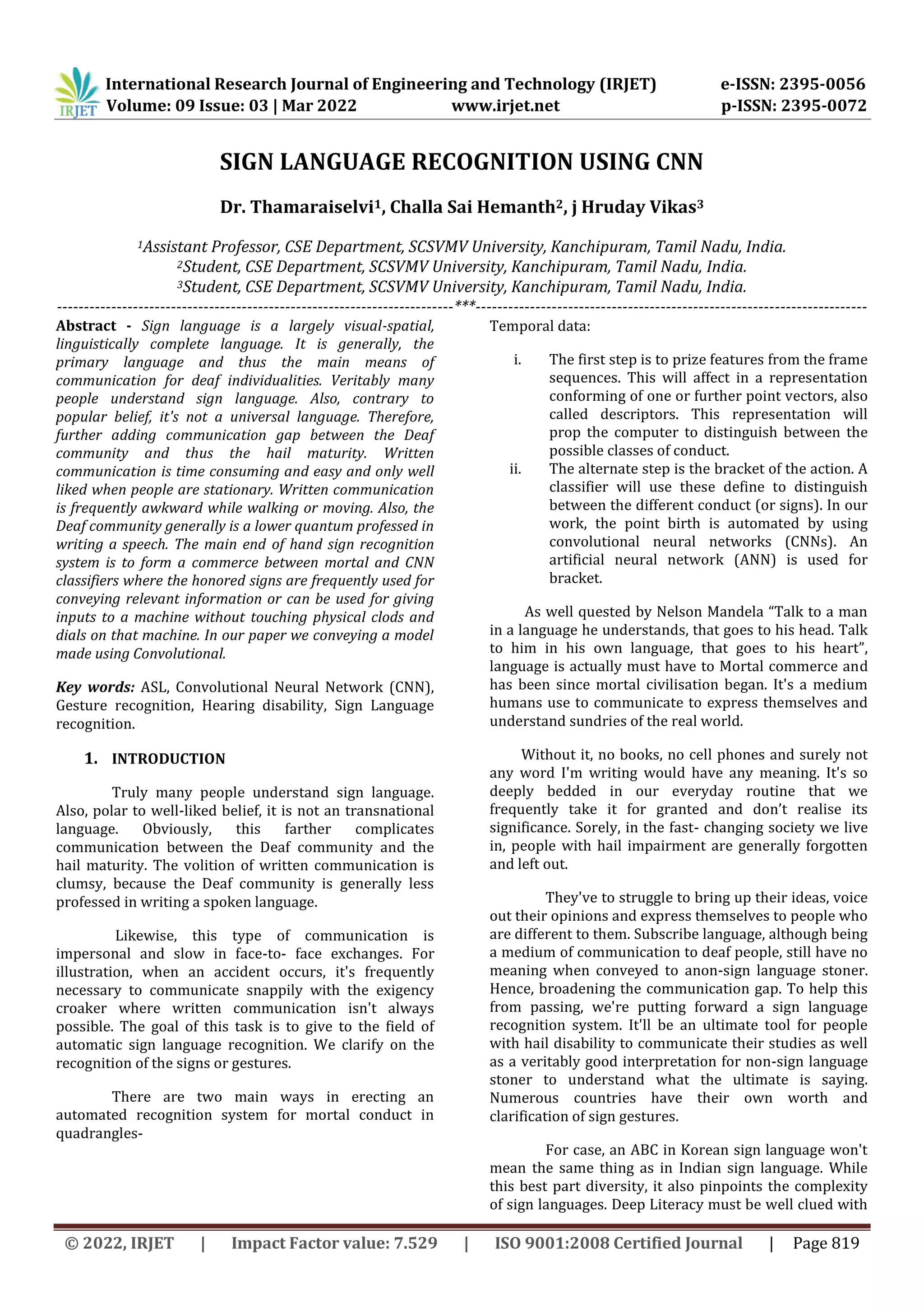

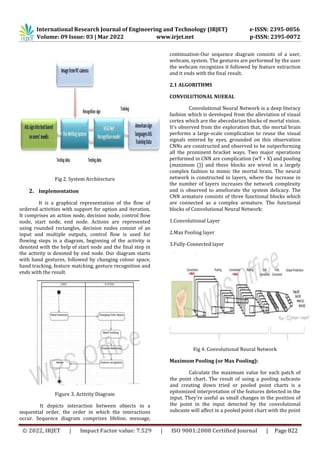

This document describes a sign language recognition system built on convolutional neural networks (CNNs). The goal is to improve communication between deaf communities and others by recognizing common sign language gestures automatically, without requiring physical devices. The proposed system uses CNNs to extract spatial features from sequences of video frames and classifies a set of 9 Egyptian sign language gestures. A pre-trained GoogLeNet model extracts general features from the input images, and a neural network classifier then assigns each image to a gesture class. Because the pre-trained features are reused, this transfer learning approach reduces the amount of training required. The system architecture comprises preprocessing steps such as image resizing and noise removal, followed by CNN layers that perform feature extraction and classification of hand gestures in real-time video streams.
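The preprocessing stage mentioned above (image resizing and noise removal) can be illustrated with a minimal sketch. The summary does not say which resizing method or noise filter the system uses, so nearest-neighbour resizing and a 3x3 median filter are assumptions made here purely for illustration. The function names `resize_nearest`, `median_filter3`, and `preprocess` are hypothetical. The default target size of 224 reflects the input resolution GoogLeNet is commonly used with, not a value stated in the source.

```python
from statistics import median_low


def resize_nearest(frame, out_h, out_w):
    """Resize a 2-D grayscale frame (list of rows) by nearest-neighbour sampling."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def median_filter3(frame):
    """Apply a 3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(frame), len(frame[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [
                frame[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            # median_low always returns an actual pixel value, never an average
            row.append(median_low(window))
        out.append(row)
    return out


def preprocess(frame, size=224):
    """Assumed order: resize first, then remove impulse noise."""
    return median_filter3(resize_nearest(frame, size, size))
```

In a real pipeline these steps would normally be done with an image library rather than nested lists. The pure-Python form is used here only so the logic of each step is visible. The output of `preprocess` would then be passed to the pre-trained feature extractor.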