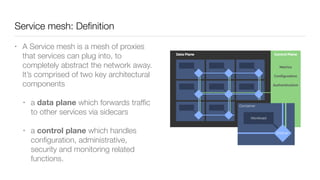

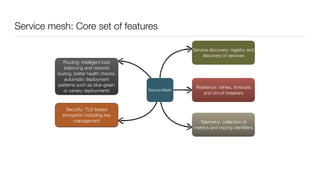

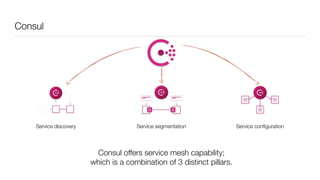

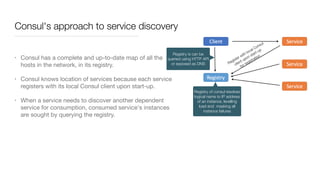

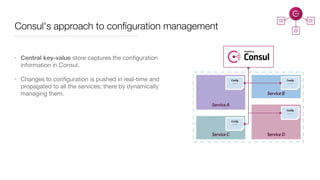

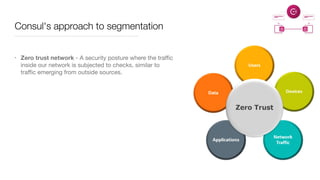

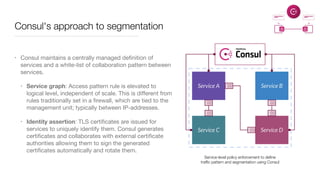

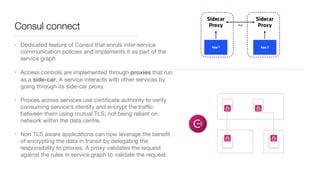

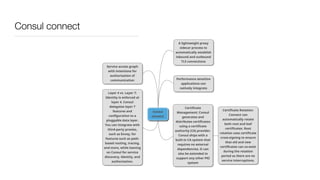

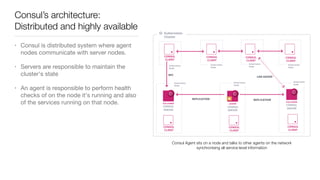

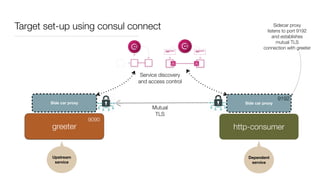

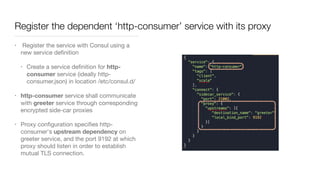

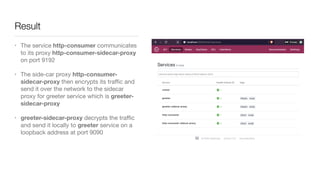

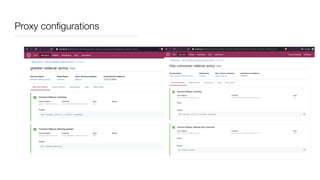

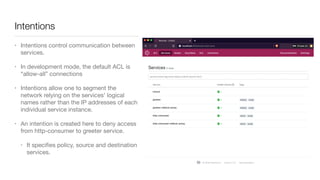

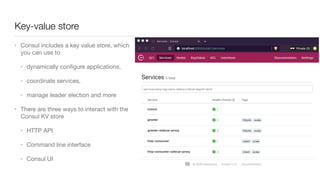

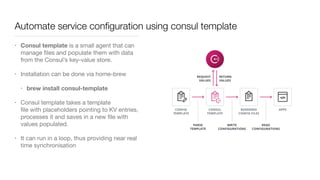

Consul is a service networking tool that provides service discovery, configuration, and segmentation for microservices. Its service mesh, Consul Connect, places a sidecar proxy next to each service. The proxies handle inter-service communication and enforce network policy, so application code does not have to. Key features include service discovery, telemetry collection, security through mutual TLS (mTLS) encryption, intelligent routing, and resilience through retries and circuit breakers. These capabilities run on a distributed architecture of client agents and servers. Within the mesh, the proxies enforce intentions (rules that allow or deny connections between services) over mutually authenticated TLS connections.
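The sketch below illustrates two of these ideas in standard-library Python. It does not need a running Consul agent. The first part parses a payload shaped like a trimmed response from Consul's `GET /v1/health/service/<name>?passing` HTTP endpoint. The sample data is made up, and only the fields used here are included. The second part is a simplified, single-namespace model of intention evaluation: the most specific matching intention wins, and an exact destination outranks an exact source. When nothing matches, a default decision applies (in Consul this follows from the ACL default policy). The names `healthy_addresses`, `Intention`, and `is_allowed` are hypothetical. This is not Consul's implementation or client API.

```python
import json
from dataclasses import dataclass
from typing import List, Tuple

# --- Service discovery --------------------------------------------------------
# Made-up sample shaped like a trimmed response from Consul's
# GET /v1/health/service/web?passing endpoint (only the fields used below).
SAMPLE_HEALTH_RESPONSE = """
[
  {"Node": {"Node": "node-1", "Address": "10.0.0.11"},
   "Service": {"ID": "web-1", "Service": "web", "Address": "10.0.1.5", "Port": 8080}},
  {"Node": {"Node": "node-2", "Address": "10.0.0.12"},
   "Service": {"ID": "web-2", "Service": "web", "Address": "", "Port": 8080}}
]
"""


def healthy_addresses(payload: str) -> List[Tuple[str, int]]:
    """Return (host, port) pairs for each service instance in the payload.

    An empty Service.Address means the service was registered without its
    own address, so the node's address is used instead.
    """
    result = []
    for entry in json.loads(payload):
        service = entry["Service"]
        host = service["Address"] or entry["Node"]["Address"]
        result.append((host, service["Port"]))
    return result


# --- Intentions ---------------------------------------------------------------
# Hypothetical, simplified model of intentions in a single namespace.
@dataclass
class Intention:
    source: str       # source service name, or "*" for any service
    destination: str  # destination service name, or "*" for any service
    allow: bool       # True = allow the connection, False = deny it


def _precedence(intention: Intention) -> int:
    # An exact destination outranks an exact source; exact beats wildcard.
    dest_score = 2 if intention.destination != "*" else 0
    src_score = 1 if intention.source != "*" else 0
    return dest_score + src_score


def is_allowed(intentions: List[Intention], source: str, destination: str,
               default_allow: bool) -> bool:
    """Decide whether `source` may open a connection to `destination`.

    The highest-precedence matching intention decides. If none matches,
    `default_allow` applies (an assumed stand-in for the ACL default policy).
    """
    matches = [
        i for i in intentions
        if i.source in (source, "*") and i.destination in (destination, "*")
    ]
    if not matches:
        return default_allow
    return max(matches, key=_precedence).allow


if __name__ == "__main__":
    print(healthy_addresses(SAMPLE_HEALTH_RESPONSE))
    # [('10.0.1.5', 8080), ('10.0.0.12', 8080)]

    rules = [Intention("*", "*", allow=False), Intention("web", "db", allow=True)]
    print(is_allowed(rules, "web", "db", default_allow=False))  # True
    print(is_allowed(rules, "api", "db", default_allow=False))  # False
```

In this example, a catch-all deny (`* -> *`) combined with one narrow allow (`web -> db`) produces a default-deny mesh. Only the explicitly permitted pair can connect, because the exact match always outranks the wildcard.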