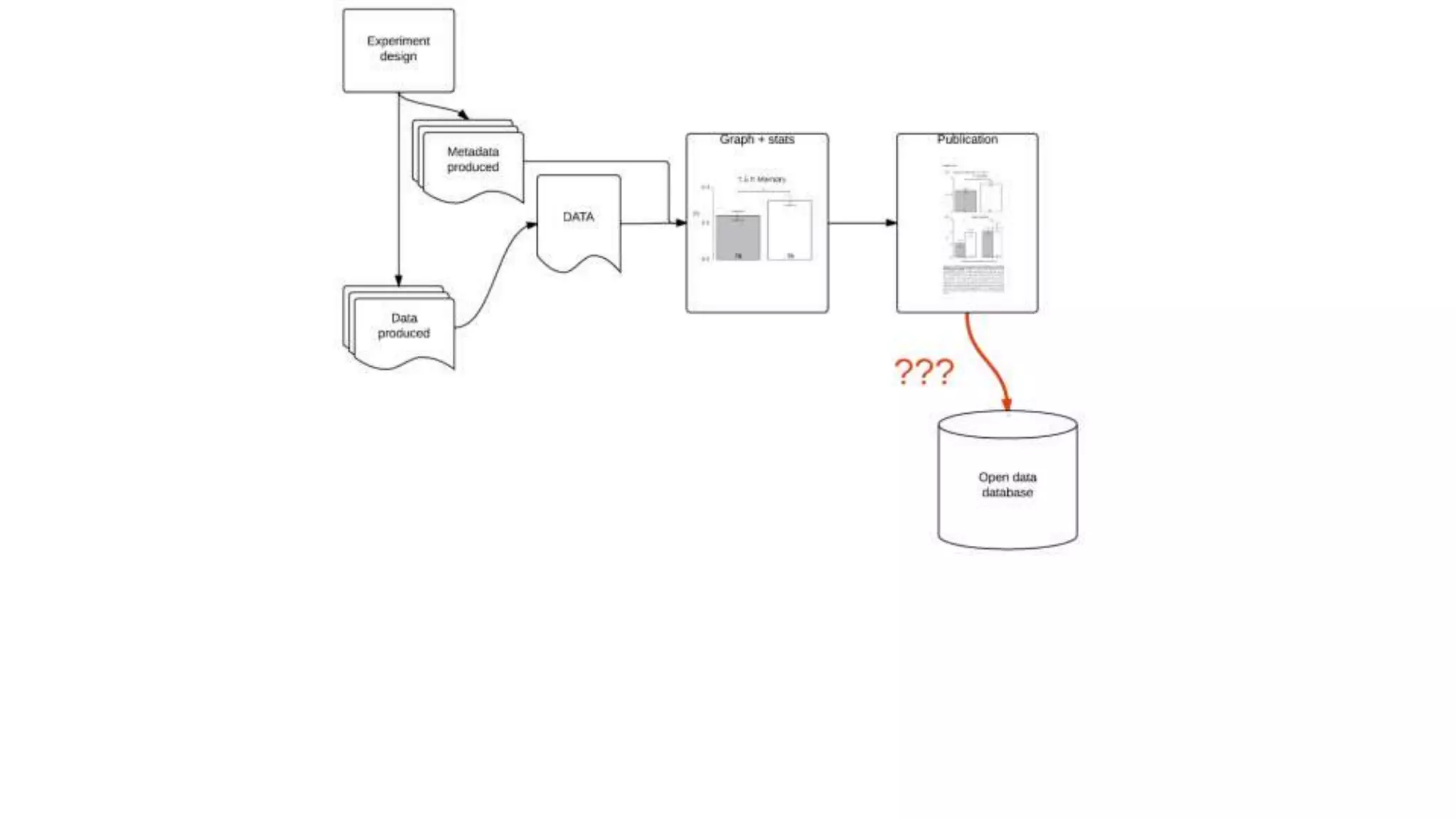

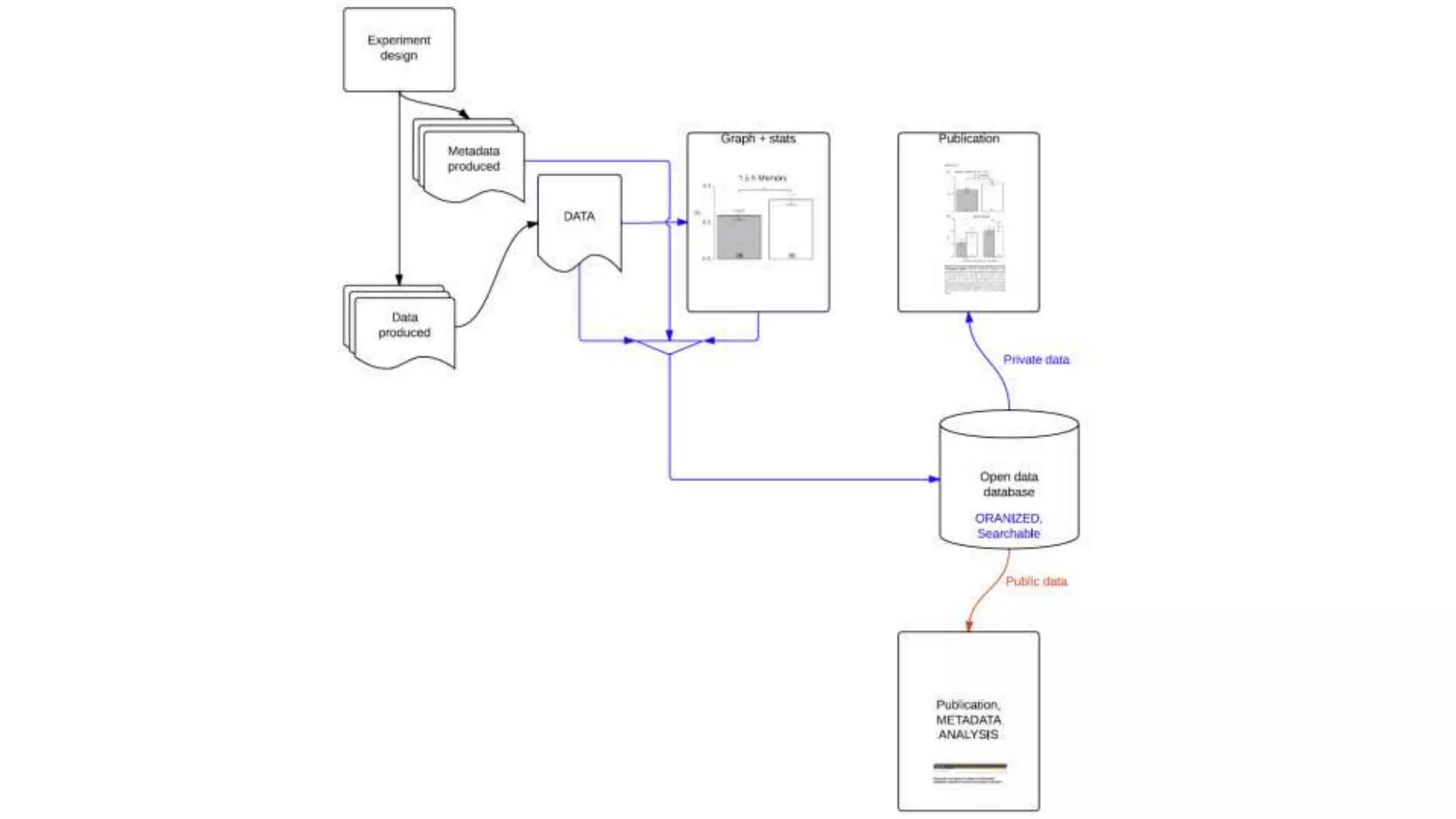

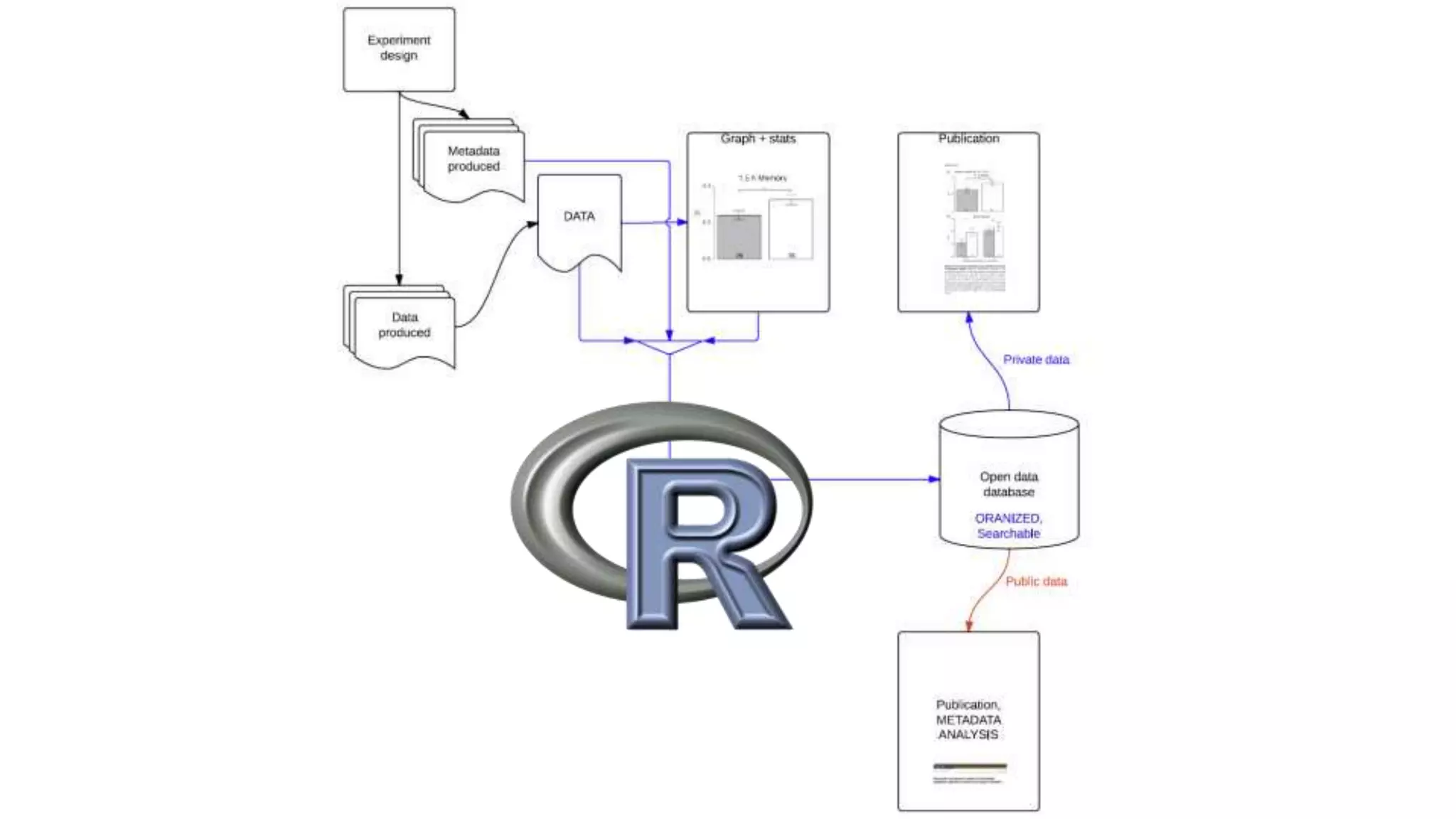

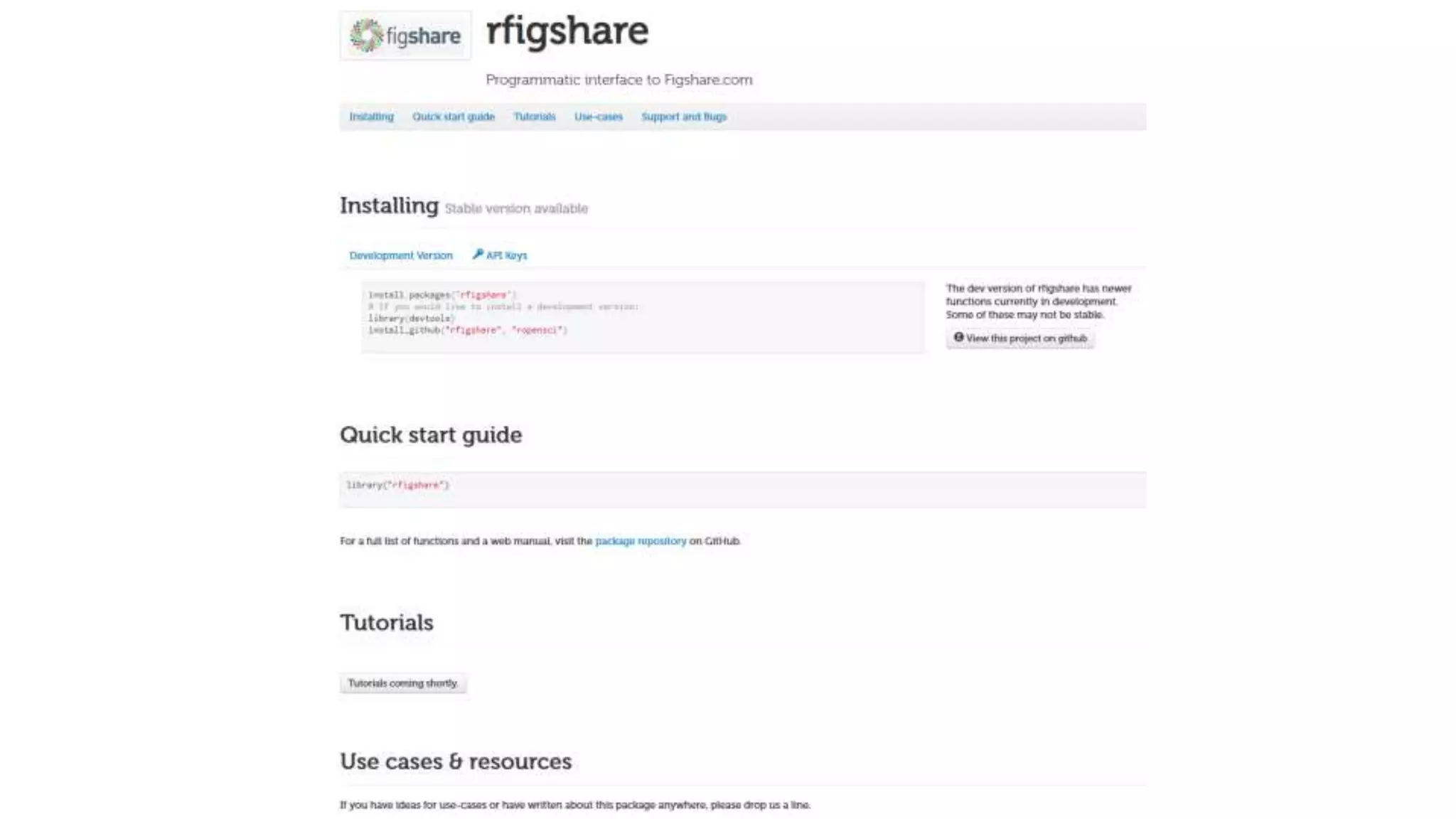

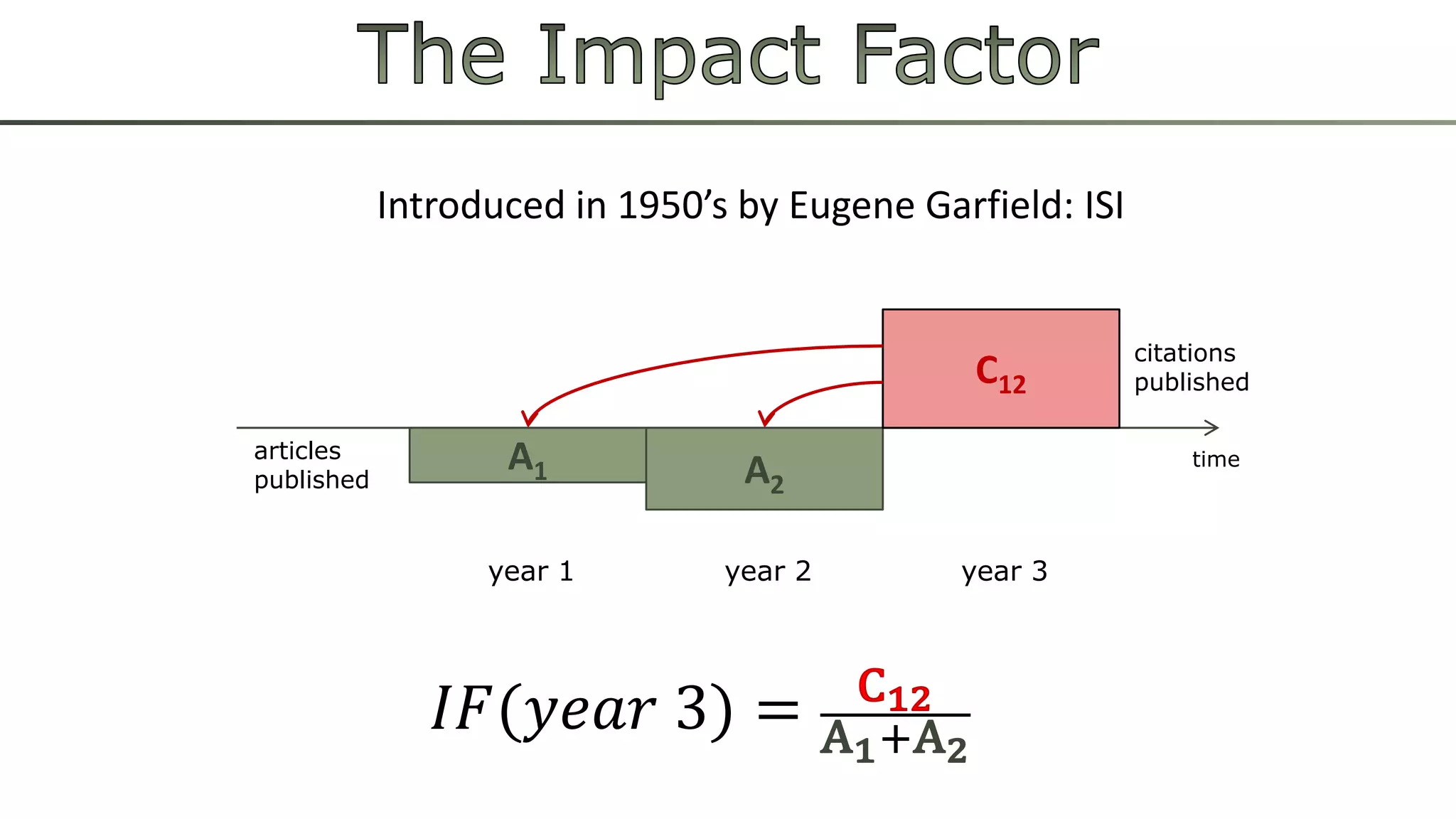

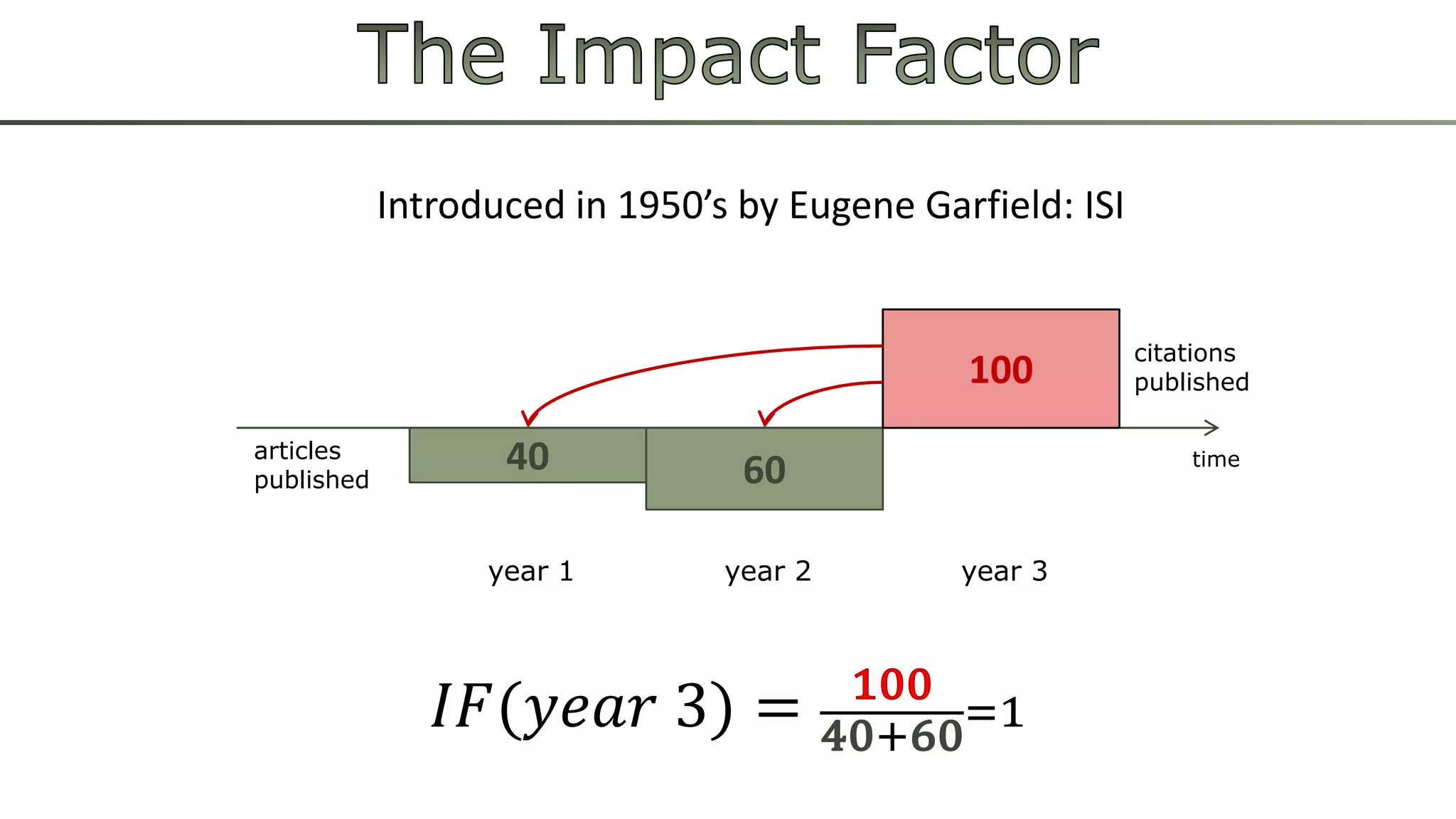

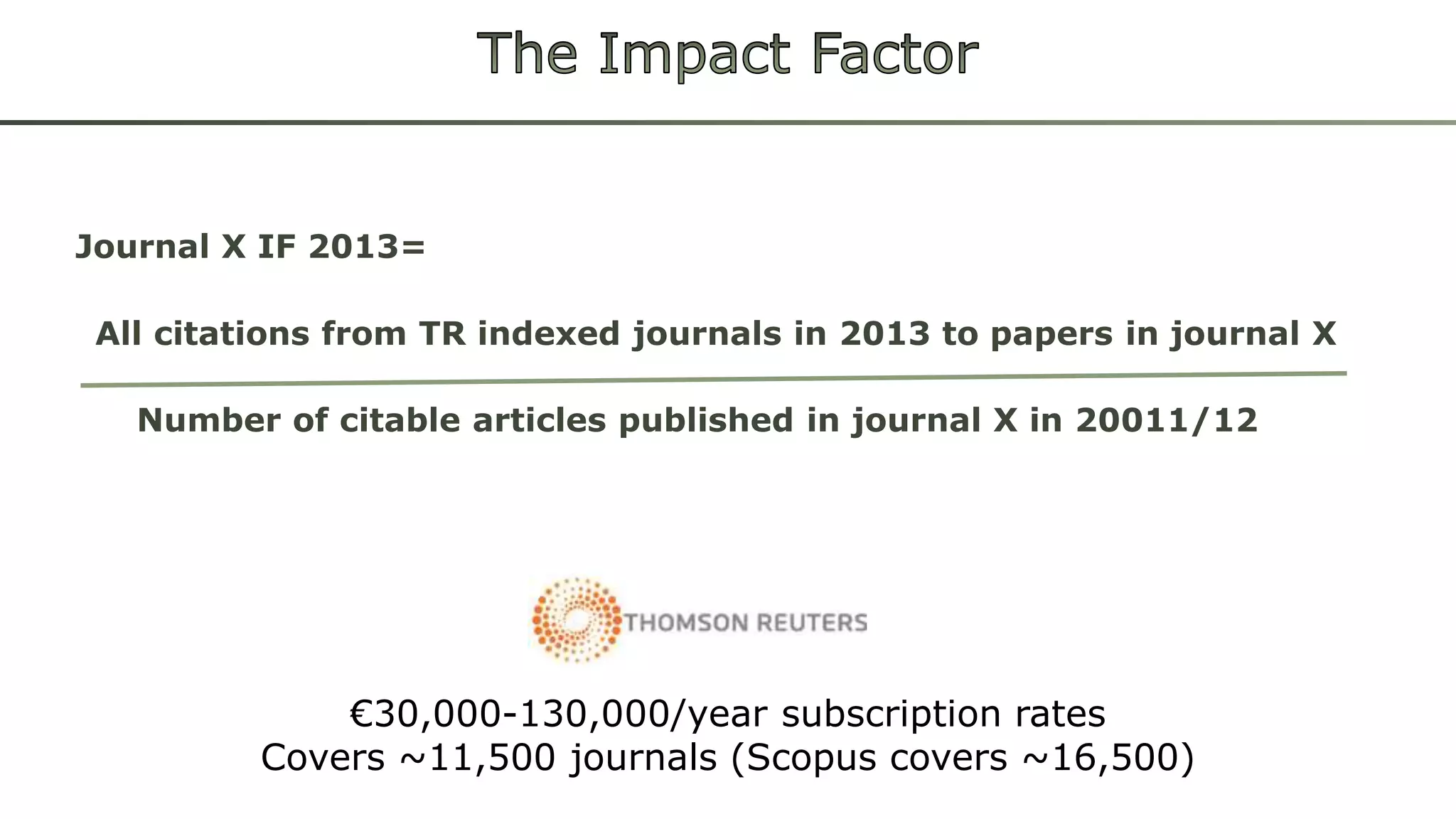

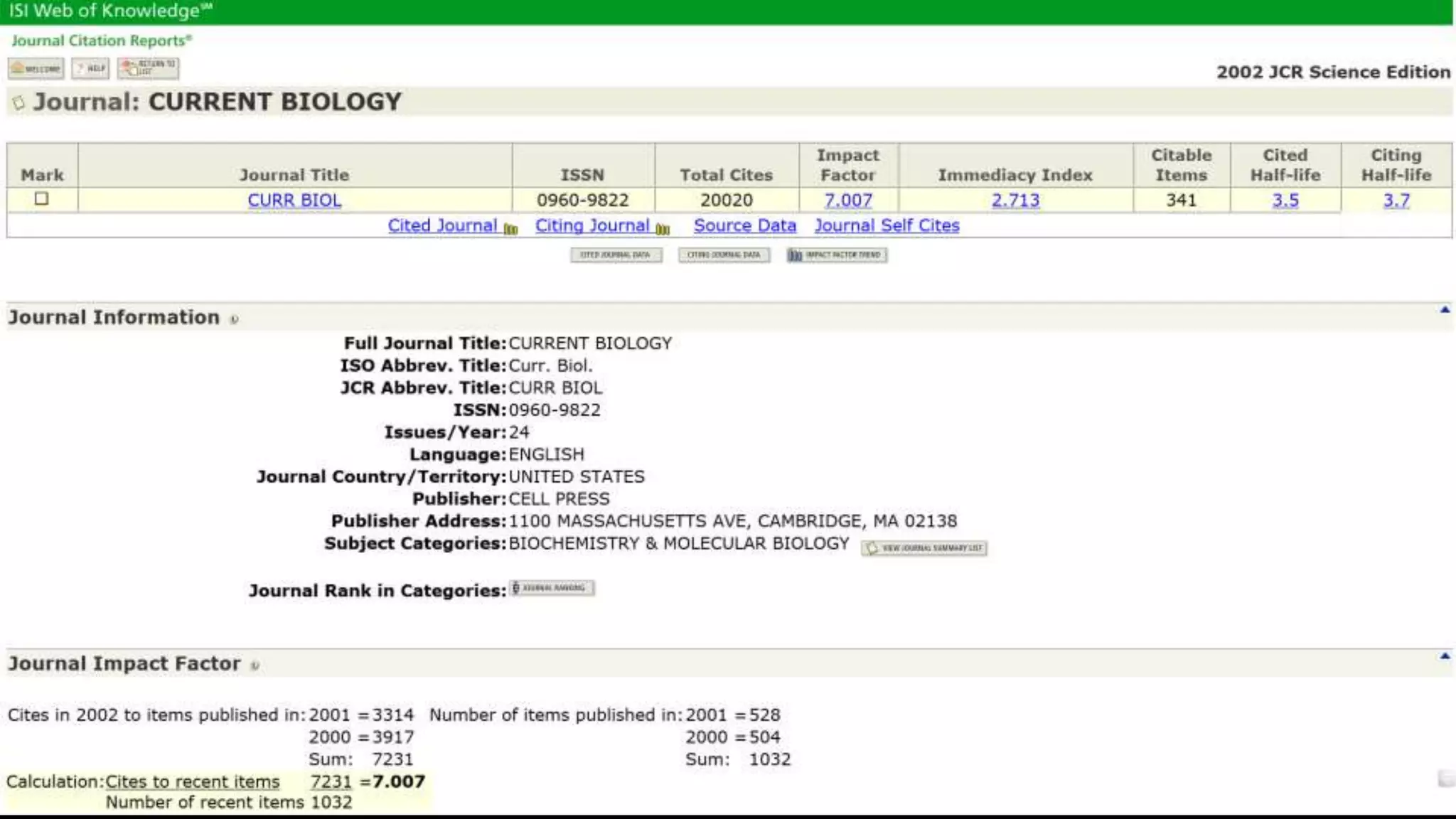

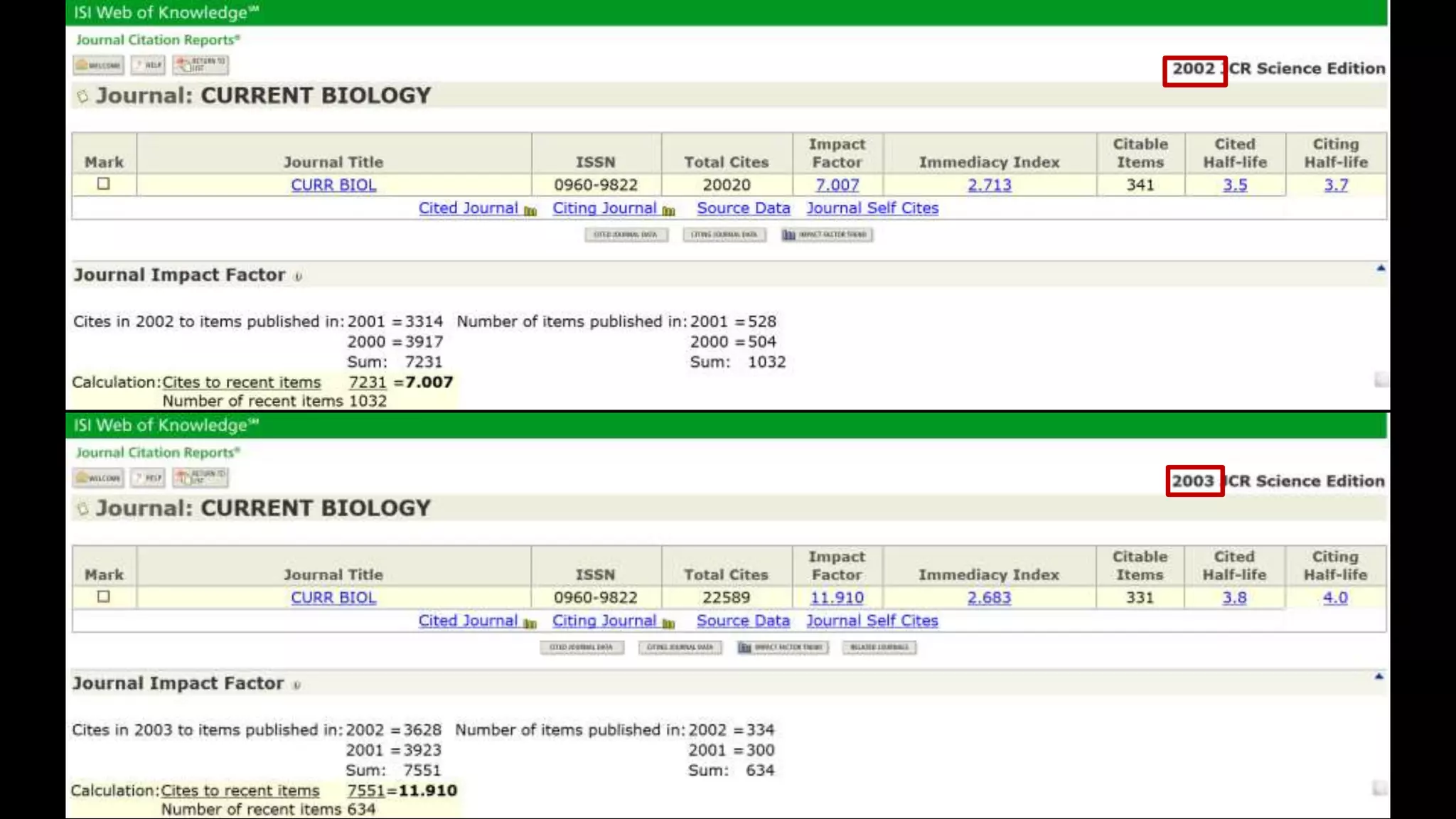

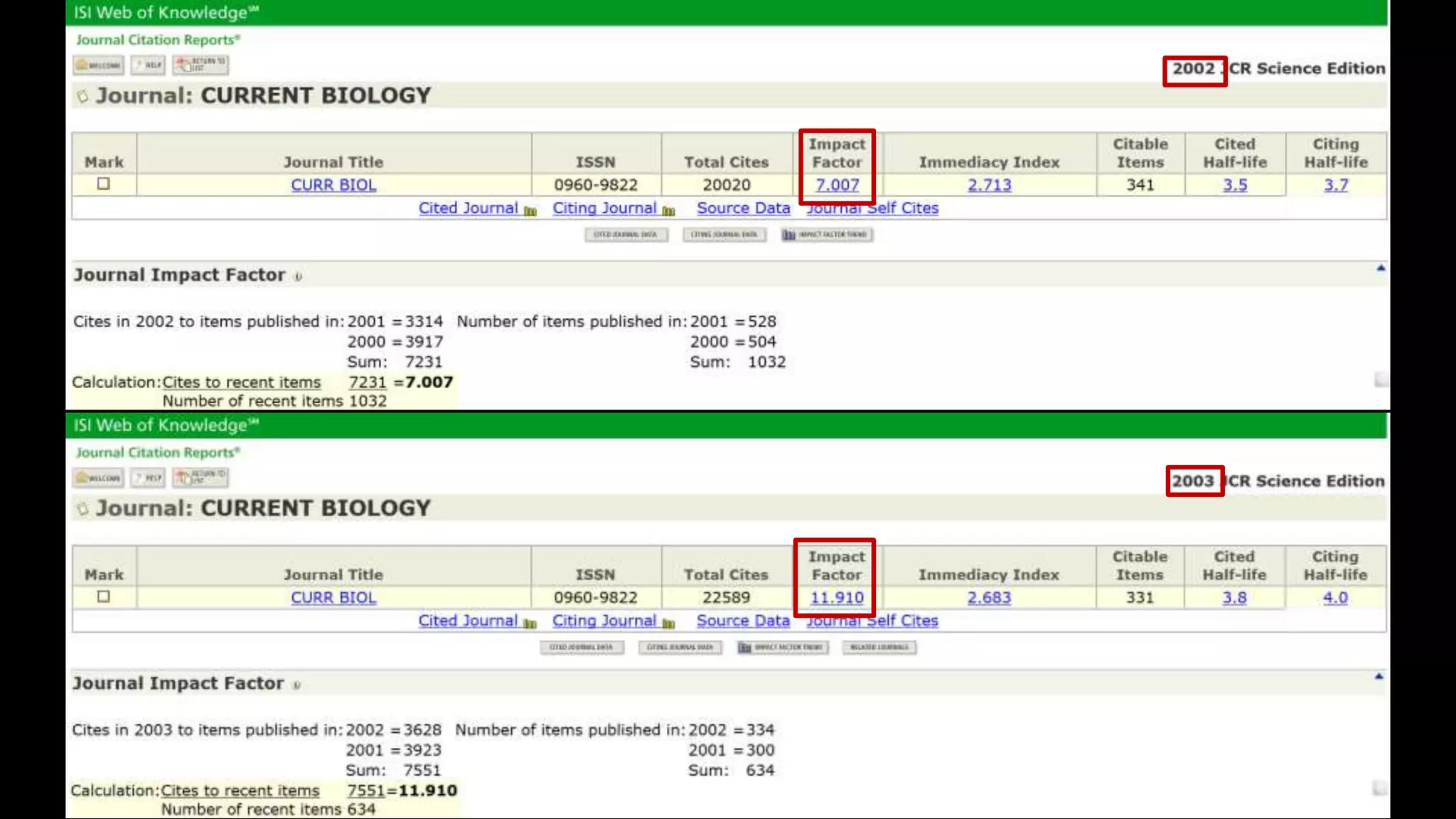

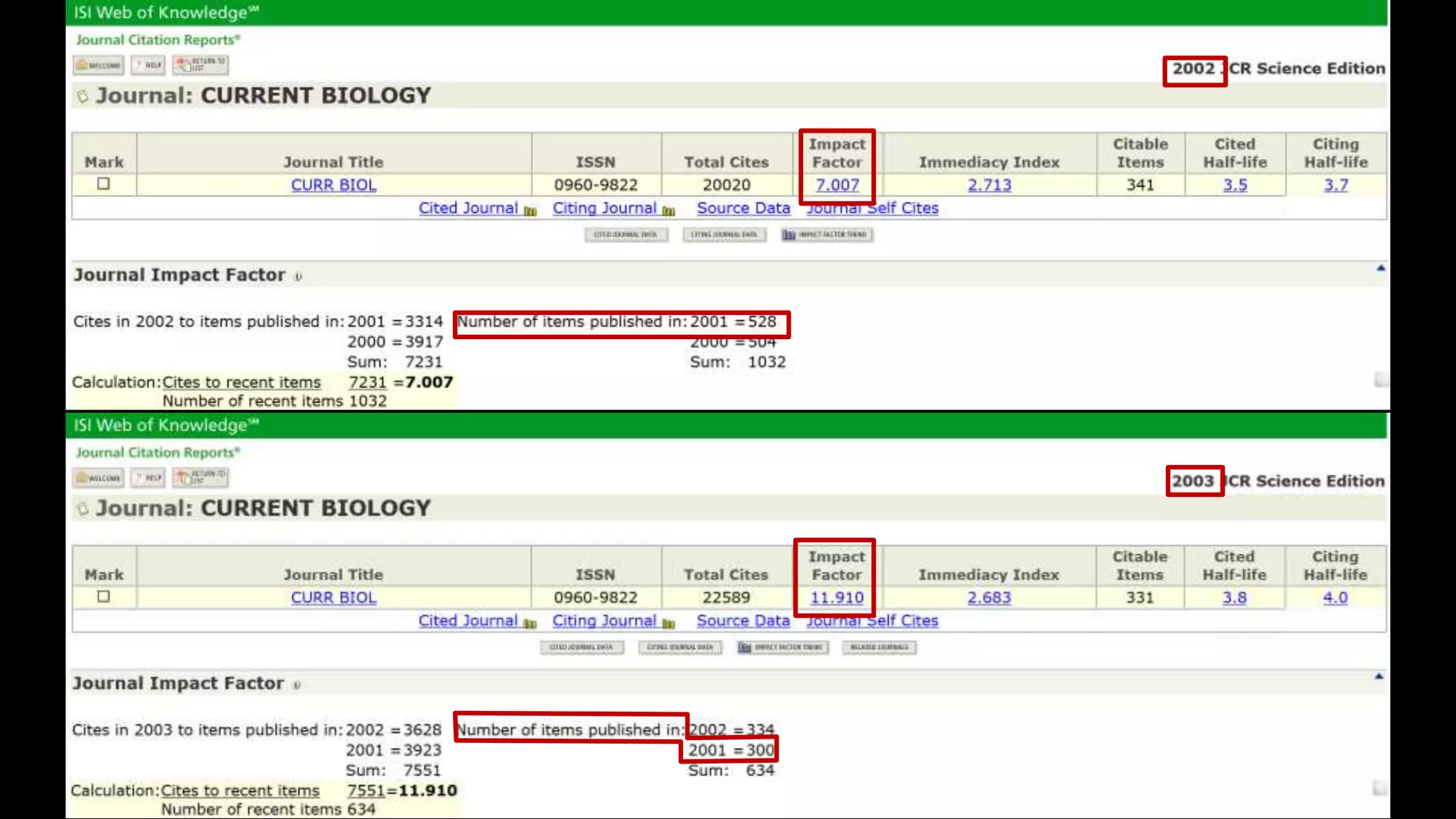

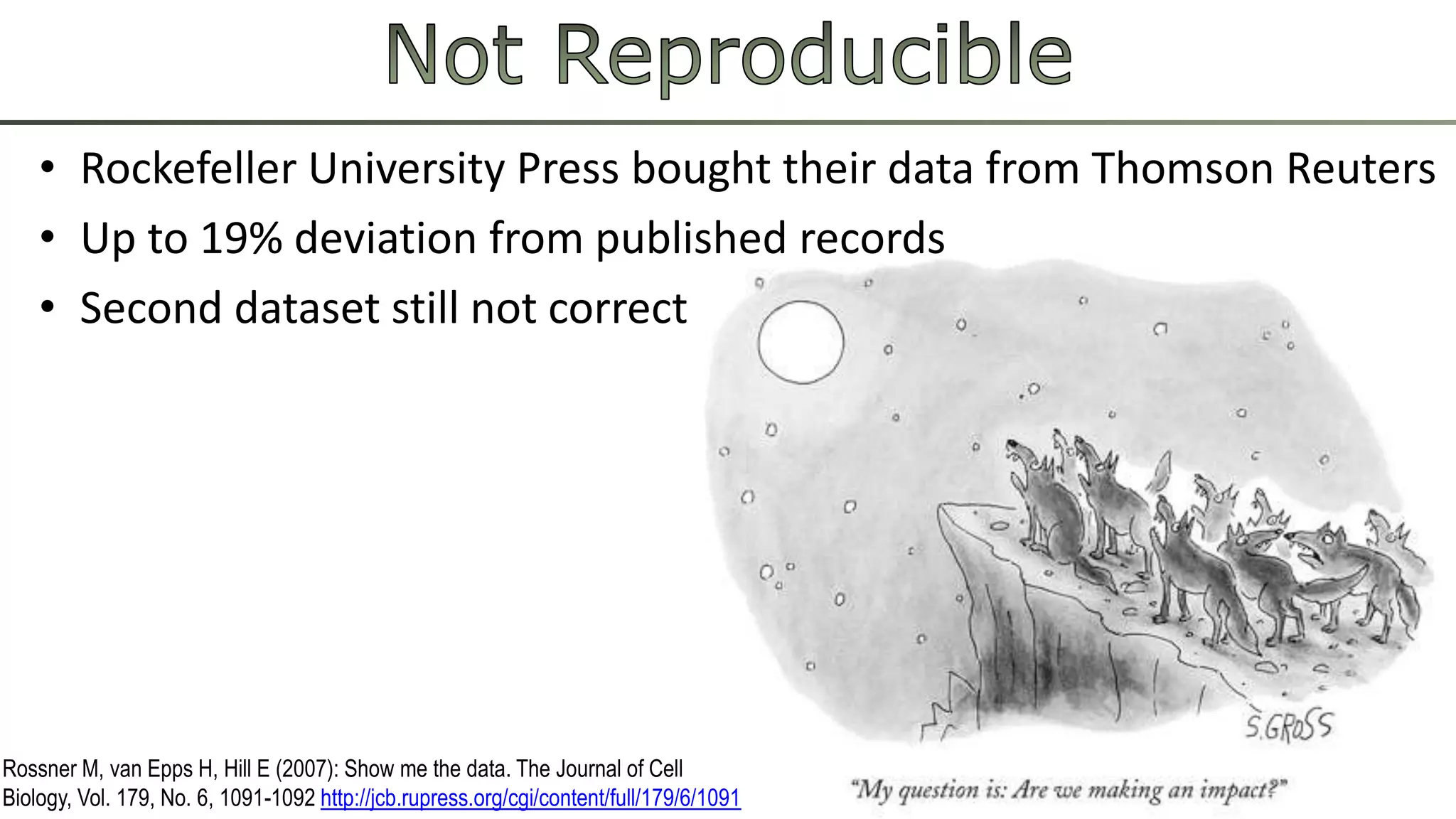

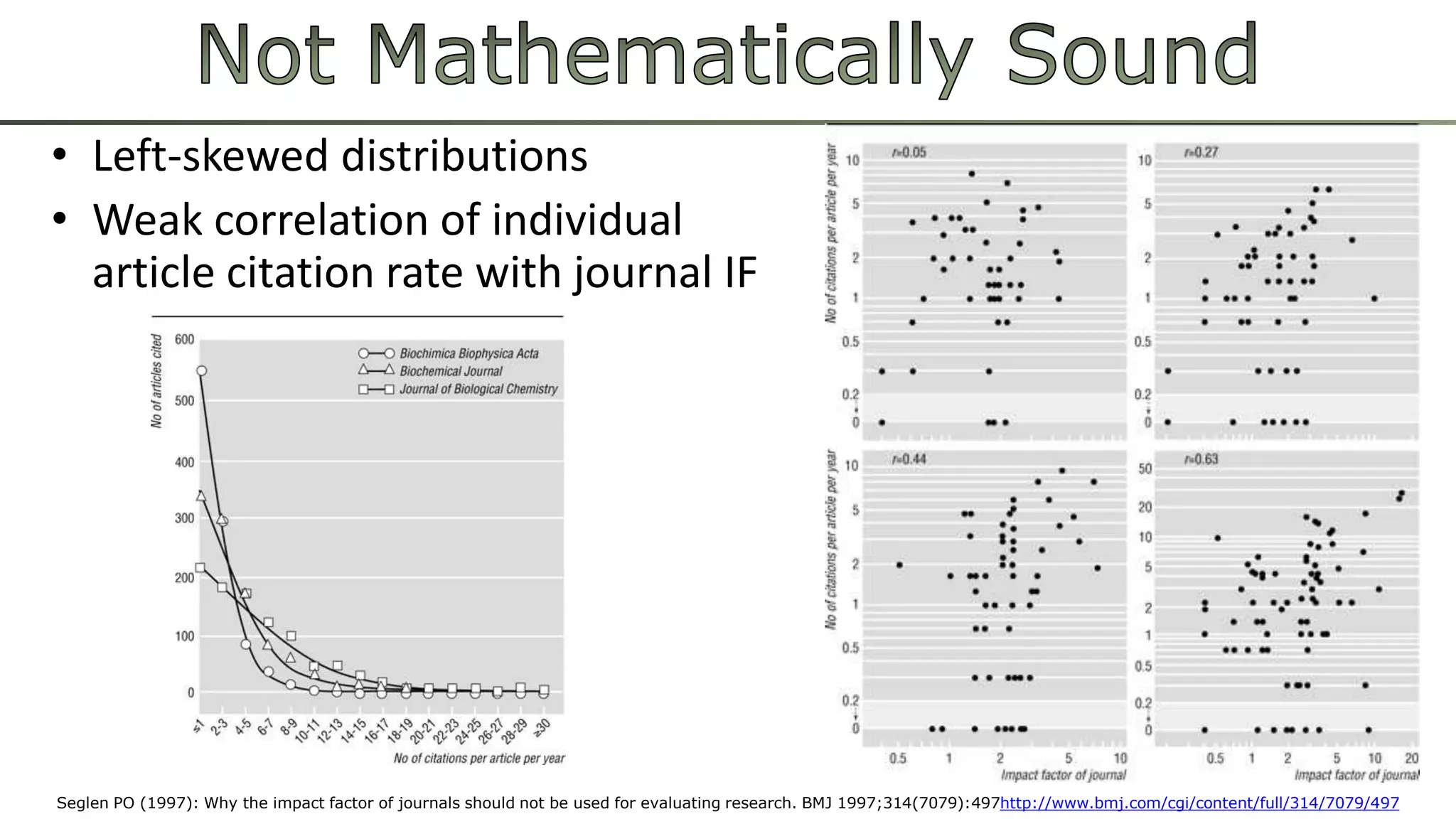

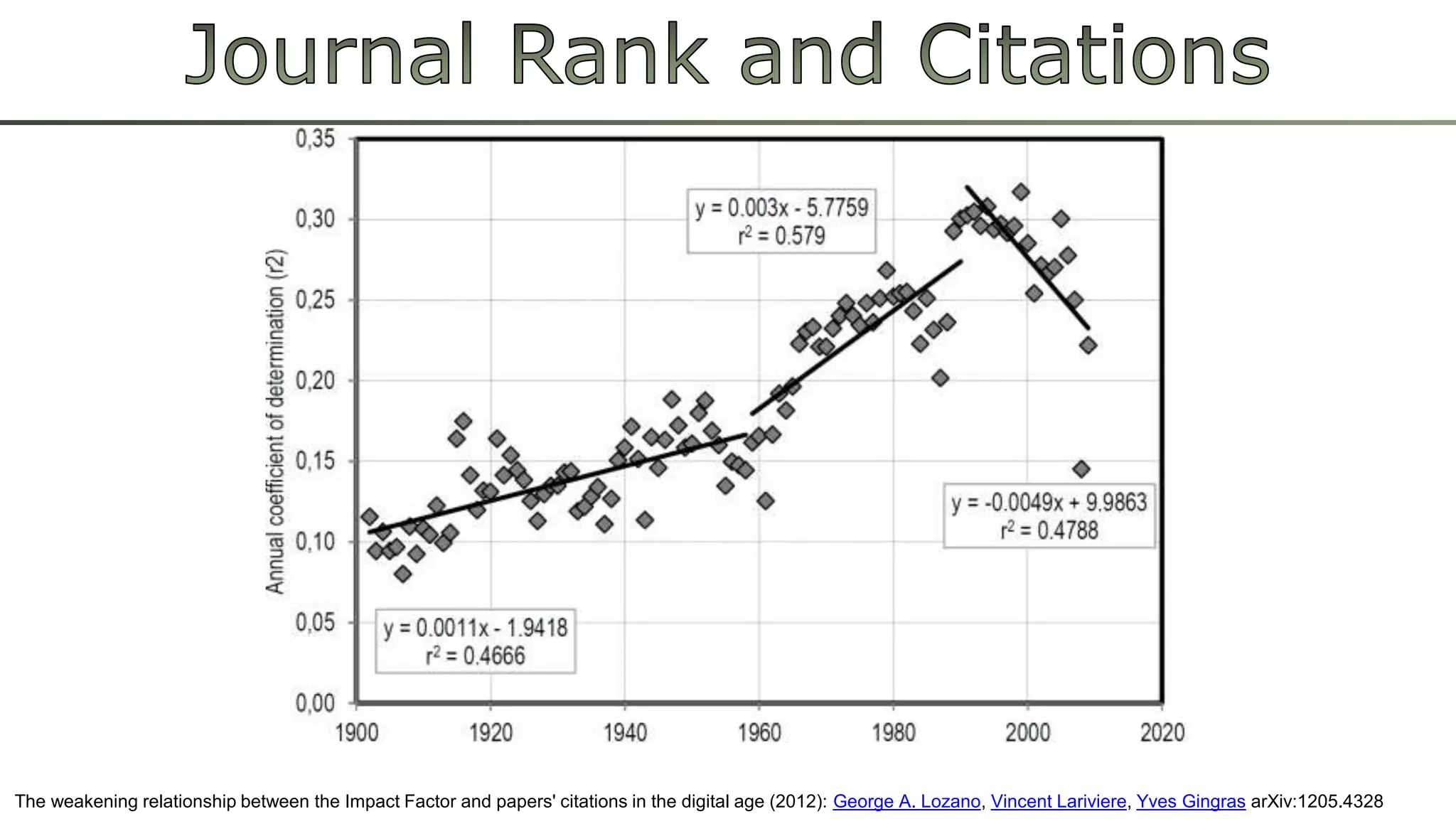

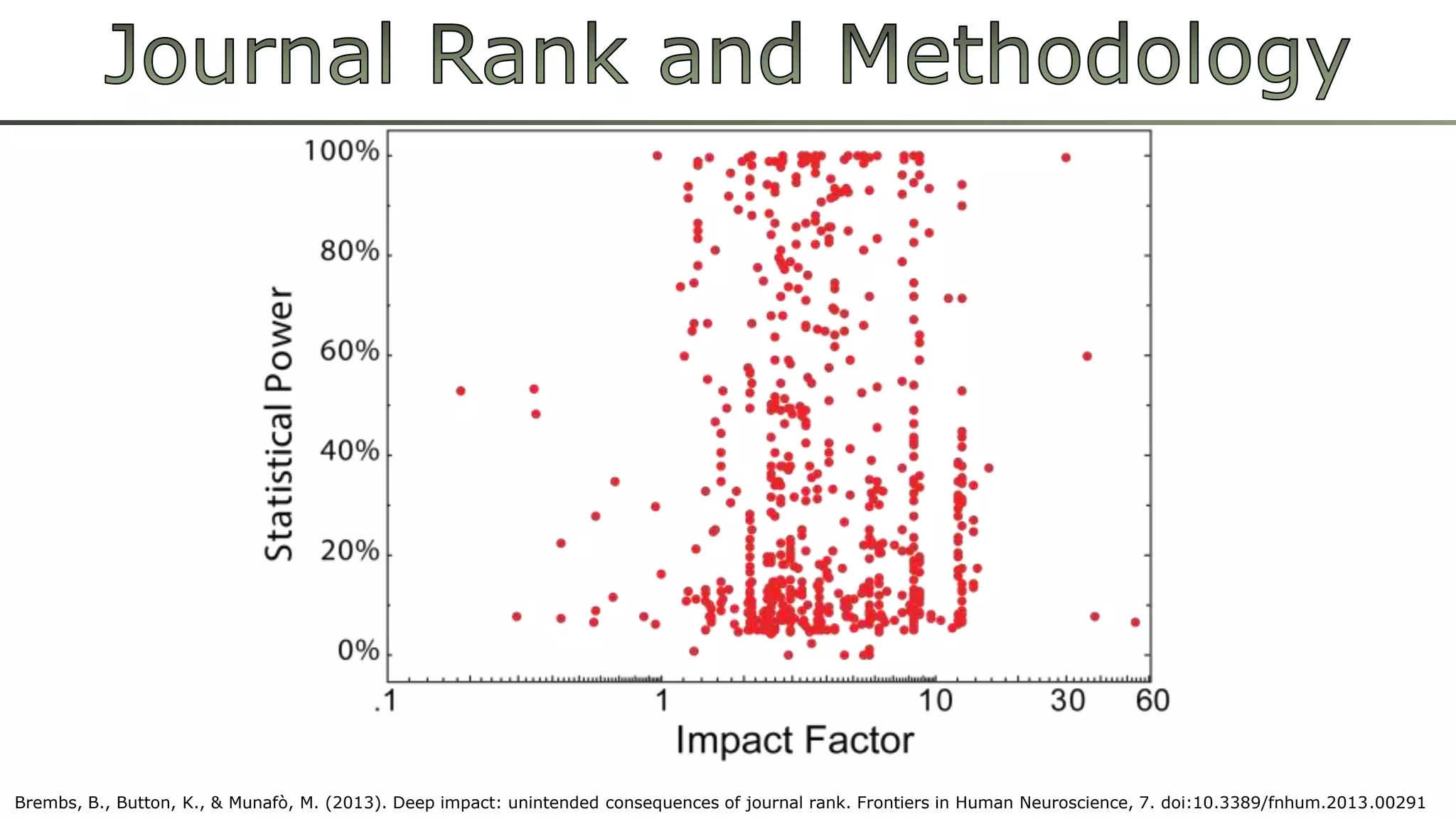

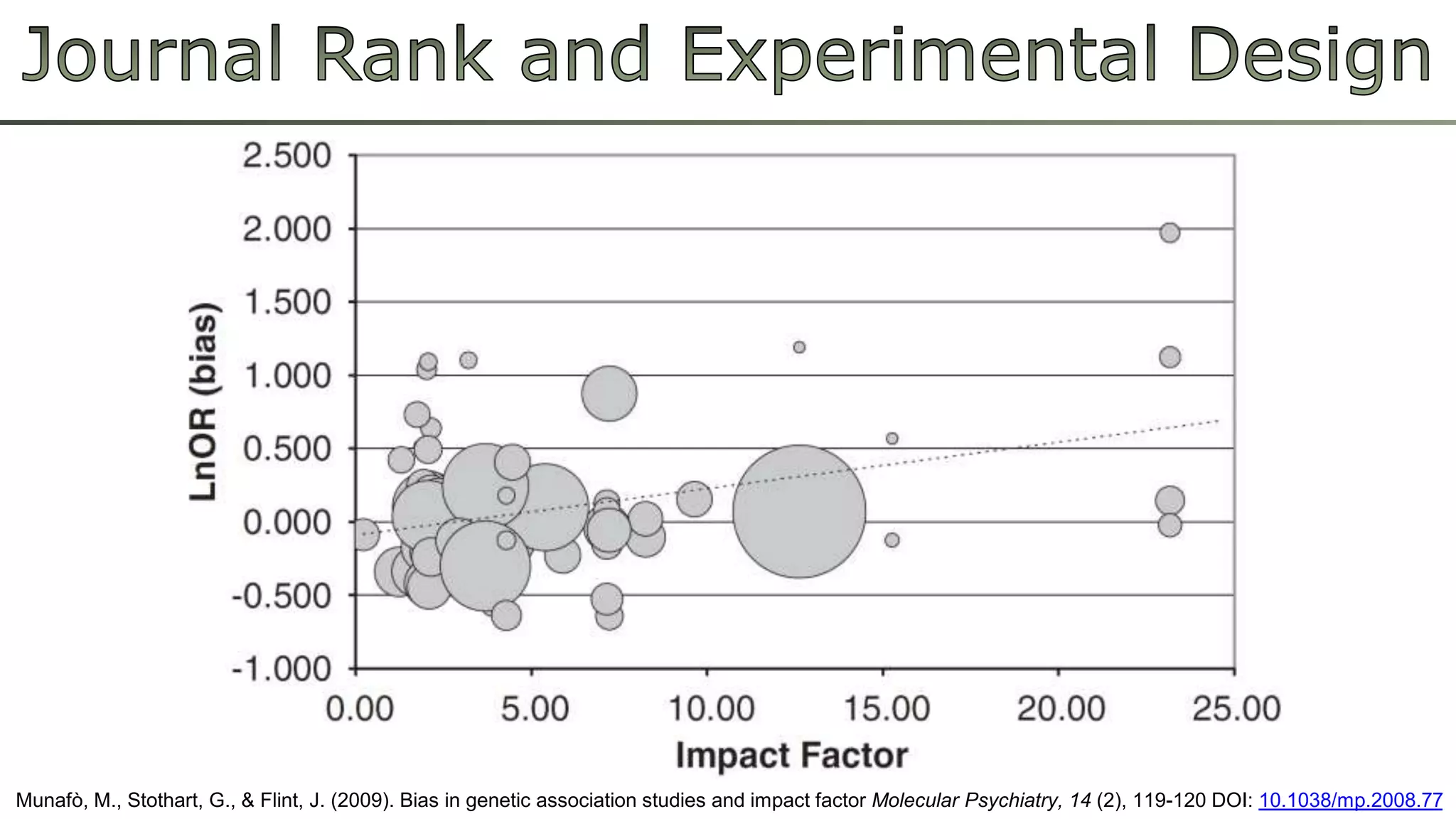

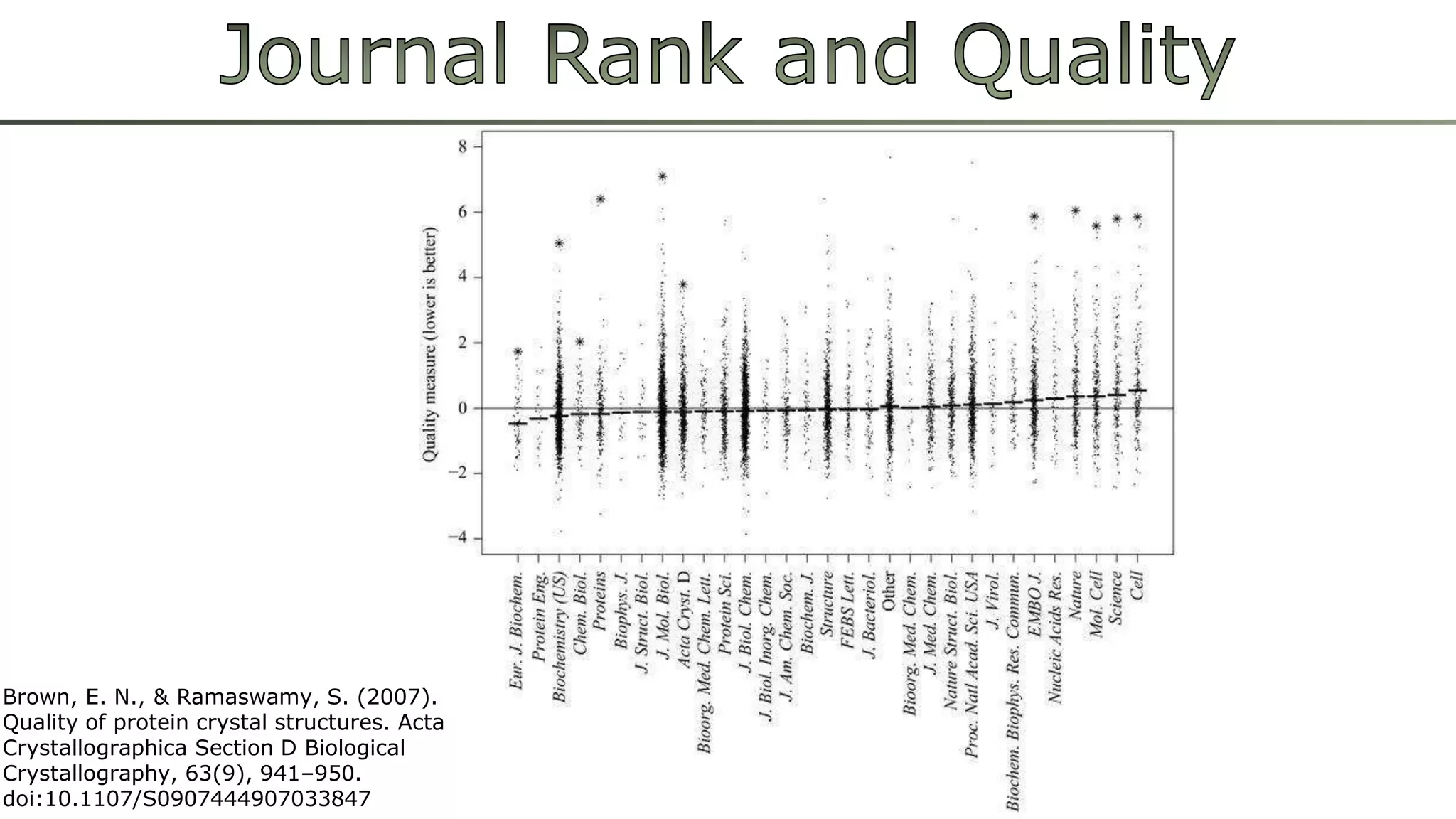

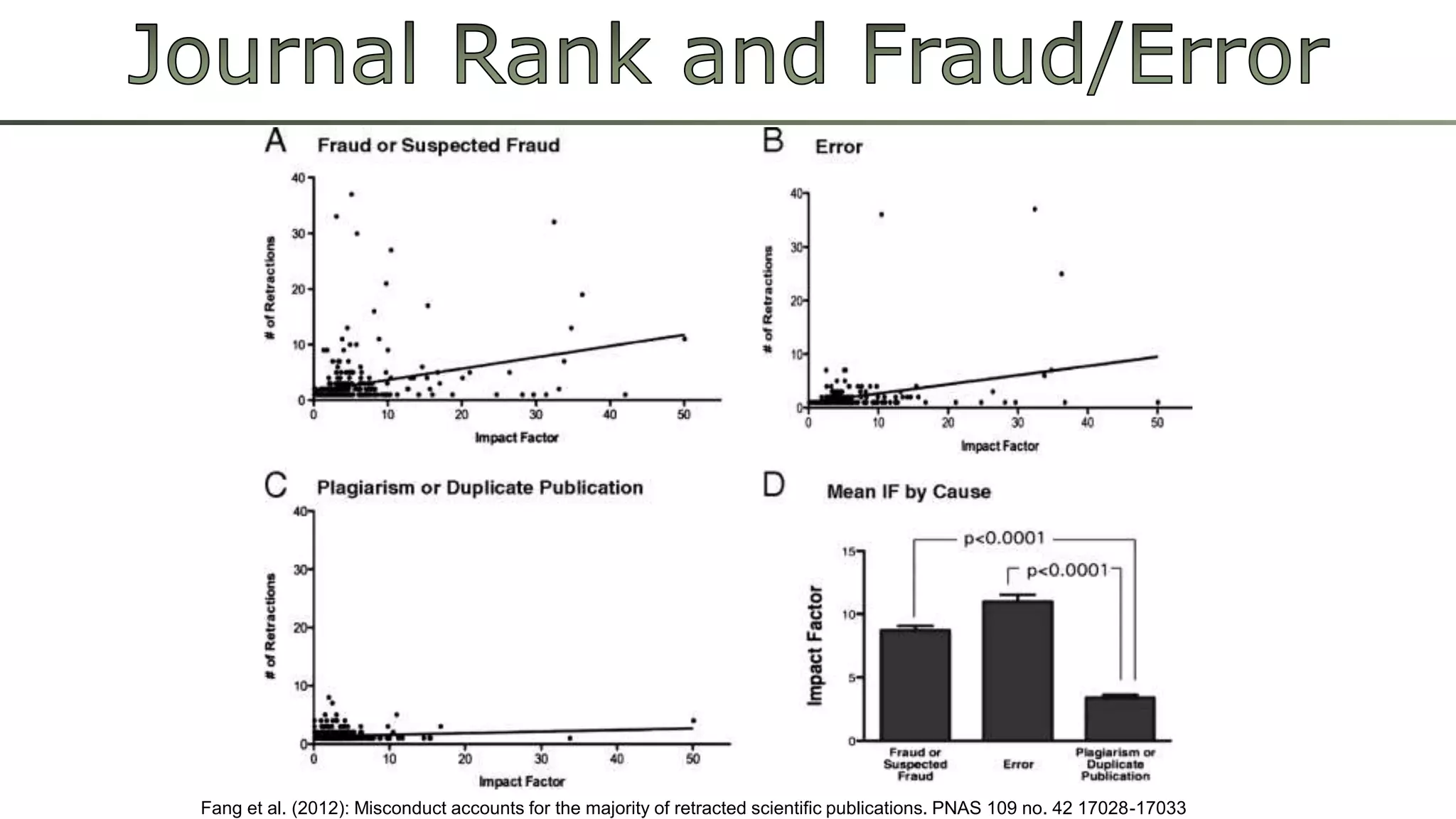

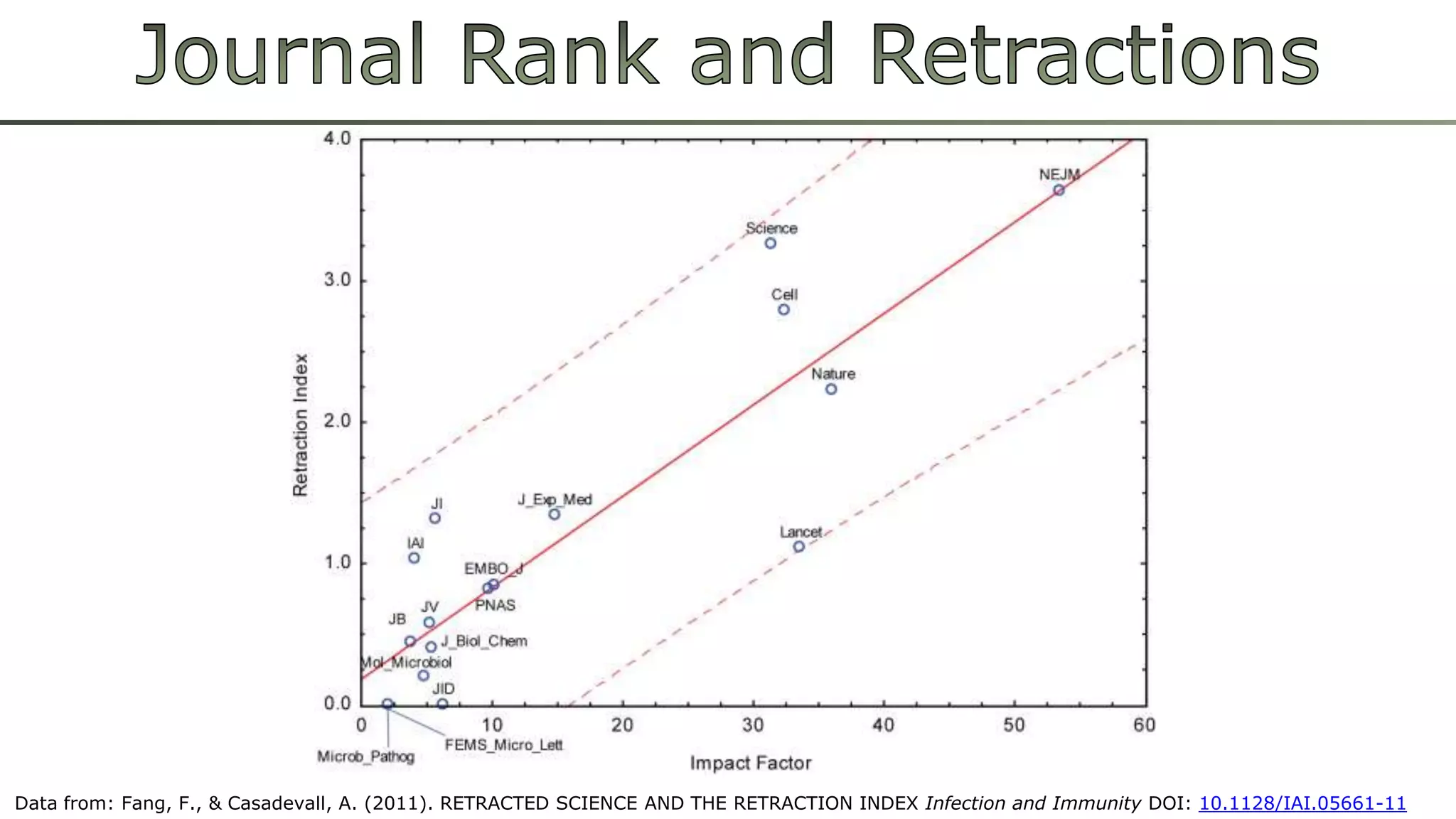

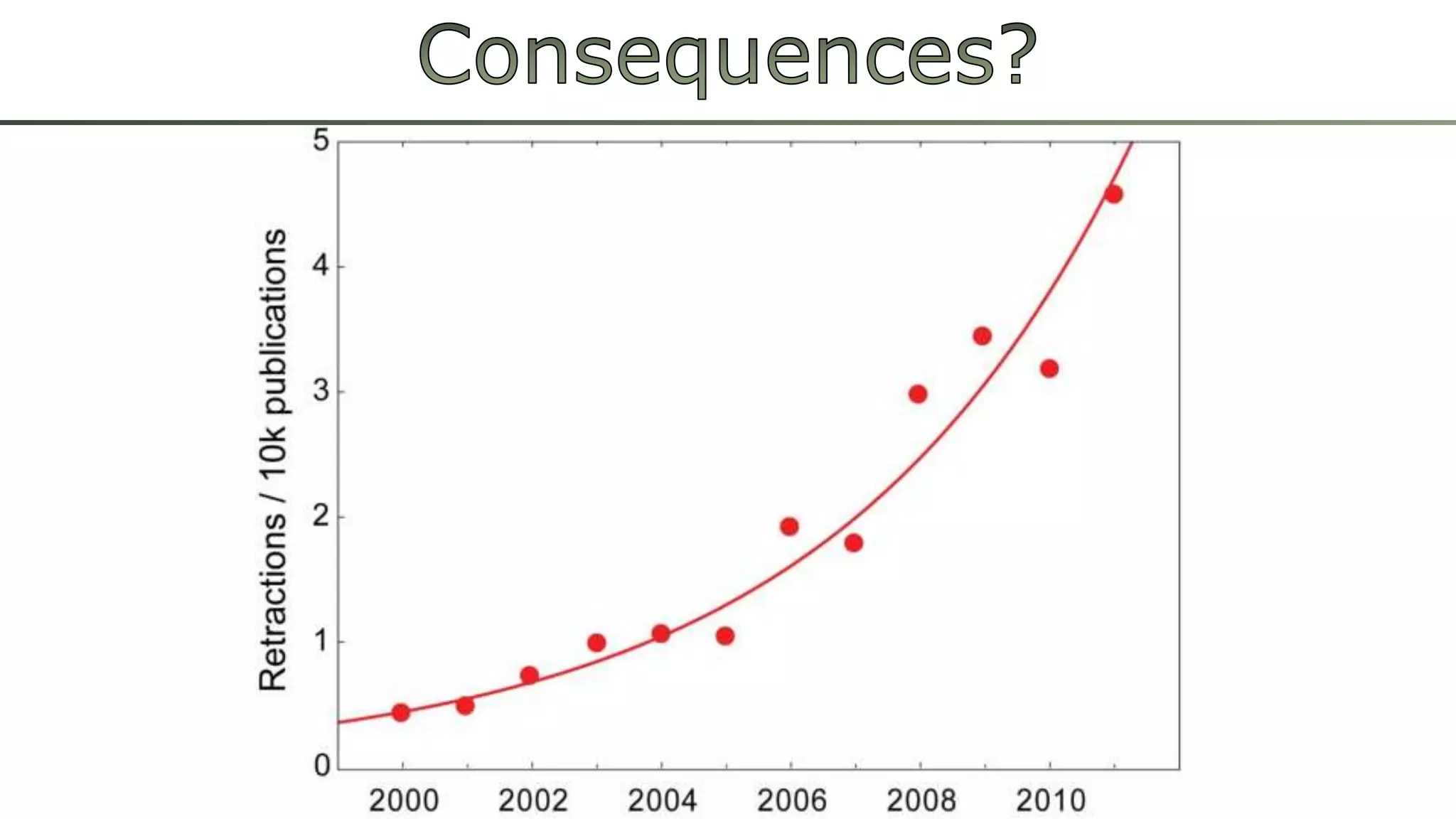

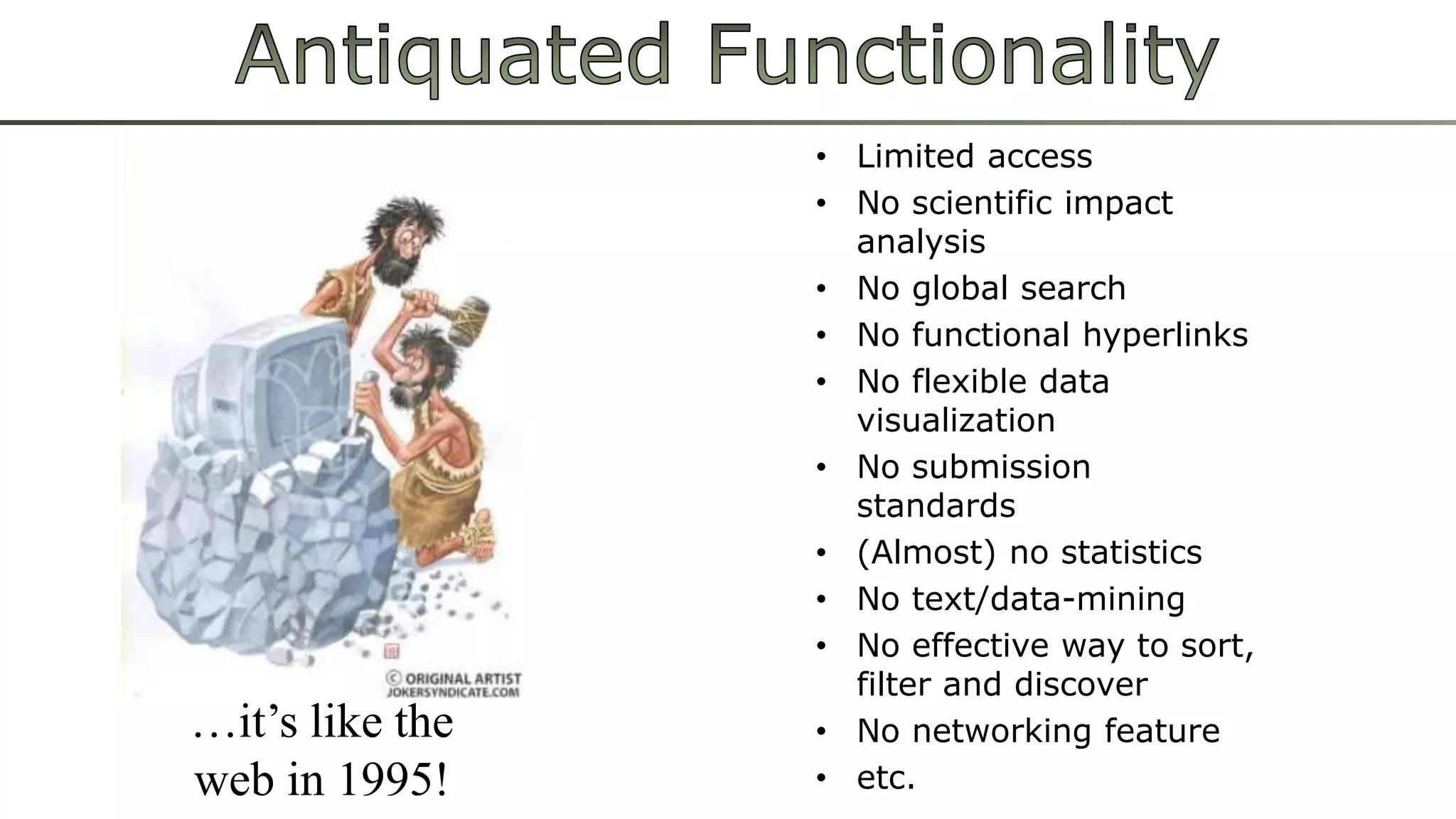

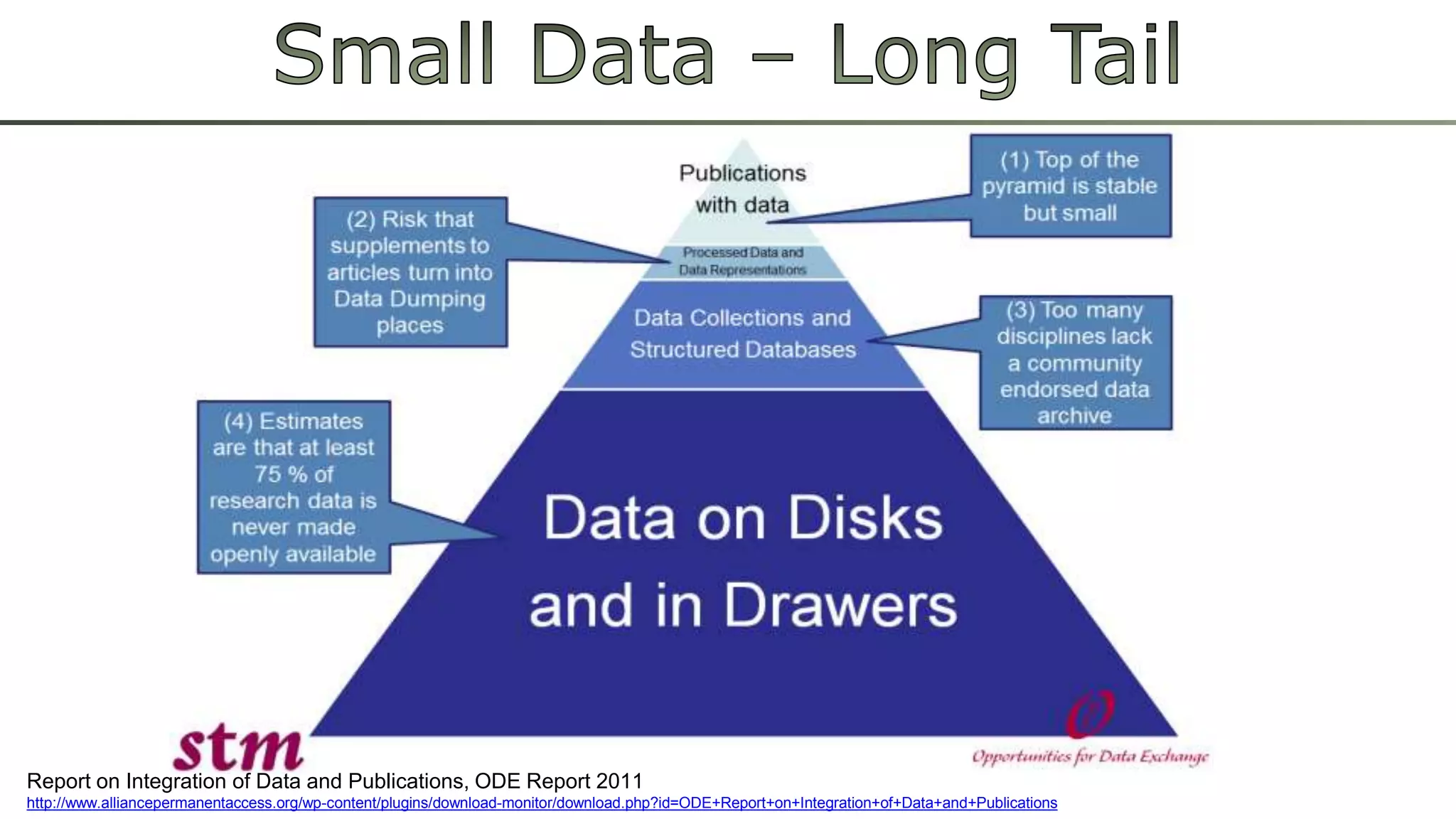

This document discusses problems with the current scholarly publishing system, including the impact factor metric and paywalls that limit access to research. It advocates reforming the system by publishing in open-access venues and by evaluating work on the usage and sharing of its data and code rather than on journal prestige. Open and reproducible research could better serve scientists and maximize the impact of their findings.

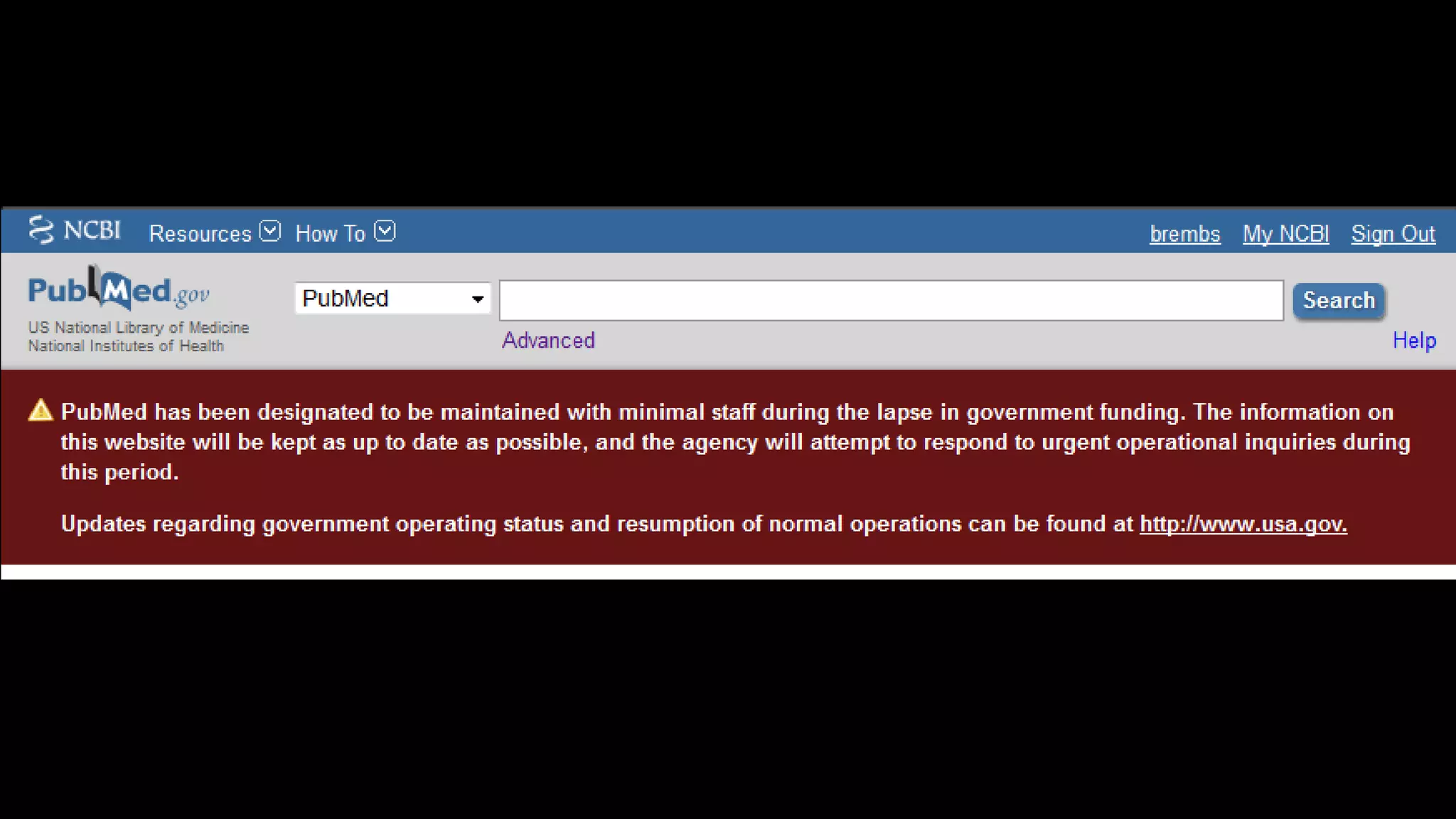

![Costs (thousand US$ per article): Legacy vs. SciELO](https://image.slidesharecdn.com/ambassadorsmuc-141203031407-conversion-gate02/75/If-only-access-were-our-only-infrastructure-problem-27-2048.jpg)

(Sources: Van Noorden, R. (2013). Open access: The true cost of science publishing. Nature 495, 426–9; Packer, A. L. (2010). The SciELO Open Access: A Gold Way from the South. Can. J. High. Educ. 39, 111–126)

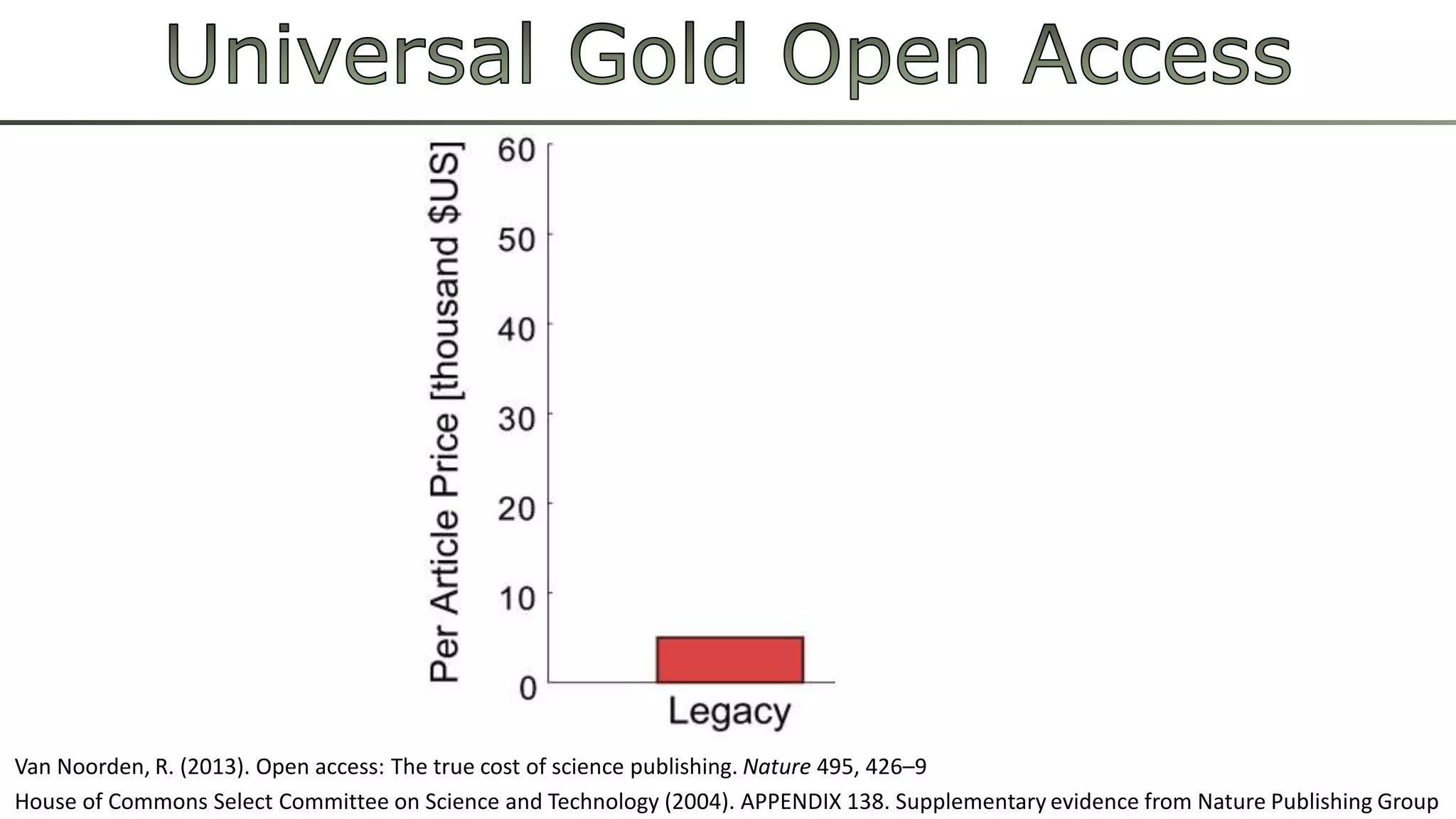

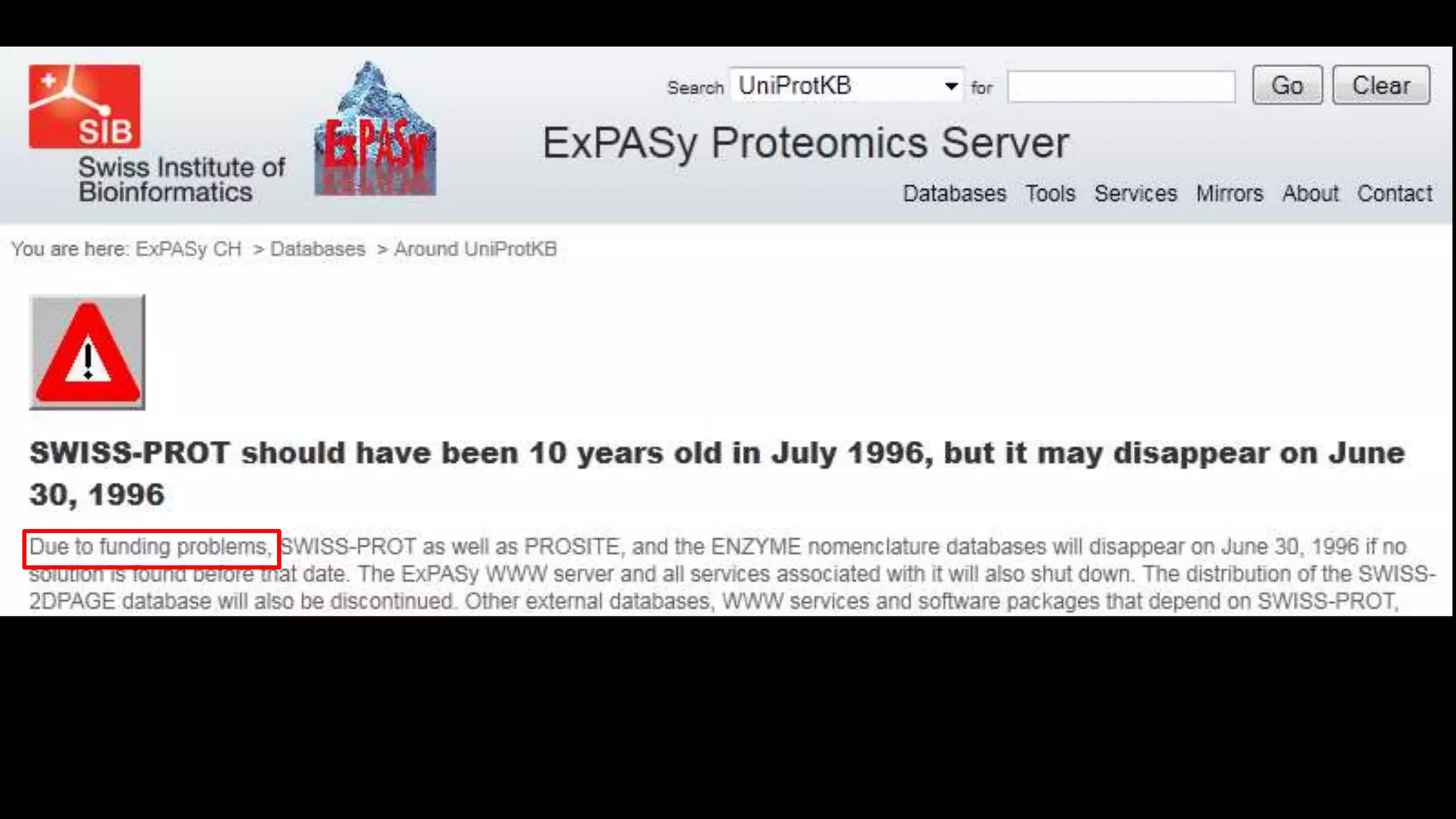

![Potential for innovation: 9.8b p.a. Costs (thousand US$ per article): Legacy vs. SciELO](https://image.slidesharecdn.com/ambassadorsmuc-141203031407-conversion-gate02/75/If-only-access-were-our-only-infrastructure-problem-38-2048.jpg)

(Sources: Van Noorden, R. (2013). Open access: The true cost of science publishing. Nature 495, 426–9; Packer, A. L. (2010). The SciELO Open Access: A Gold Way from the South. Can. J. High. Educ. 39, 111–126)