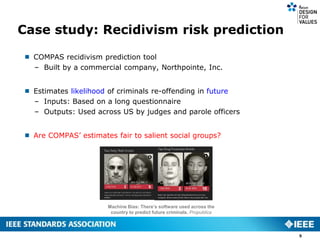

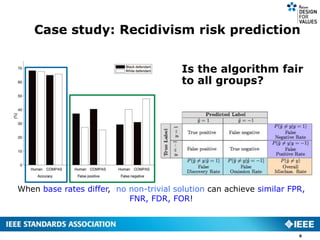

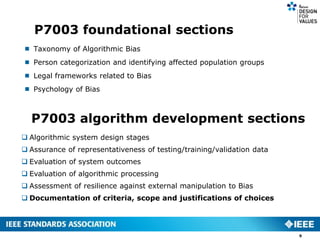

IEEE P7003 addresses algorithmic bias, emphasizing the need to minimize unintended and unjustified discrimination in algorithm-driven systems. It discusses case studies such as the COMPAS recidivism prediction tool, which raise questions about fairness and about how algorithms affect different social groups. The document outlines key considerations for algorithm development, including ensuring representativeness and evaluating outcomes to mitigate bias.
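To make "evaluation of outcomes" and "representativeness" concrete, the sketch below shows one possible check of each kind. It is an illustration under stated assumptions, not a procedure taken from P7003: the choice of false positive rate as the outcome metric, the function names `false_positive_rate_by_group` and `group_shares`, and the toy records are all hypothetical and invented for this example.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return the false positive rate per group.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    A false positive is a person flagged high risk who did not reoffend.
    """
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                false_pos[group] += 1
    # Only groups with at least one actual negative appear in the result.
    return {g: false_pos[g] / n for g, n in negatives.items() if n > 0}


def group_shares(records):
    """Return each group's share of the records (a crude representativeness check)."""
    counts = defaultdict(int)
    total = 0
    for group, _, _ in records:
        counts[group] += 1
        total += 1
    return {g: c / total for g, c in counts.items()}


# Made-up toy data for illustration only; not drawn from COMPAS or any real dataset.
toy_records = [
    ("A", True, False),
    ("A", False, False),
    ("A", True, True),
    ("A", False, False),
    ("B", True, False),
    ("B", True, False),
    ("B", False, True),
    ("B", False, False),
]

fpr = false_positive_rate_by_group(toy_records)
shares = group_shares(toy_records)
print("False positive rate by group:", fpr)
print("Share of records by group:", shares)
```

In this toy data both groups are equally represented, yet group B's false positive rate is twice group A's. A gap of that kind is what an outcome evaluation is meant to surface; whether it counts as unjustified depends on the fairness criterion chosen, which this sketch does not settle.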