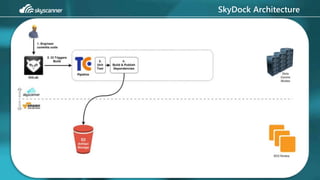

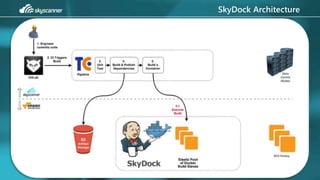

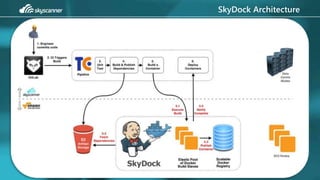

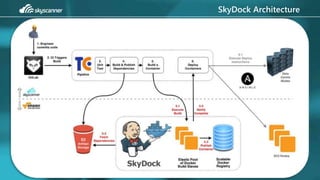

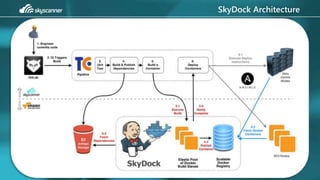

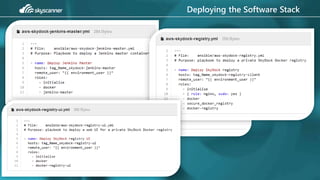

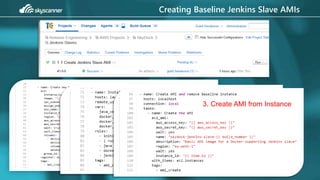

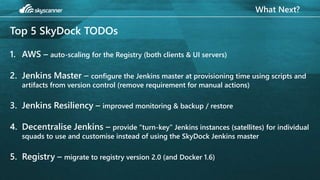

The document outlines the development and implementation of Skydock, an internal Docker registry and CI system designed for secure software releases within an organization. It emphasizes self-service, scalability, and resiliency, and compares Skydock on these points with existing solutions such as Docker Hub and Docker Hub Enterprise. It also details Skydock's architecture, its deployment process, and planned future improvements.