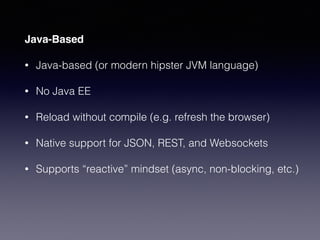

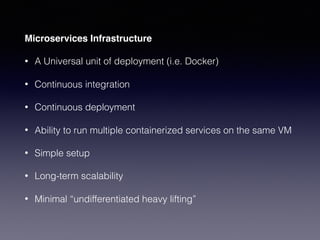

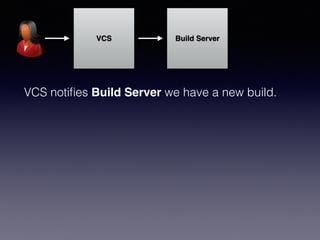

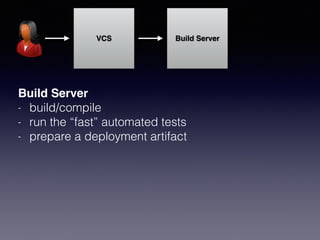

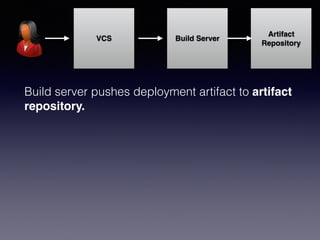

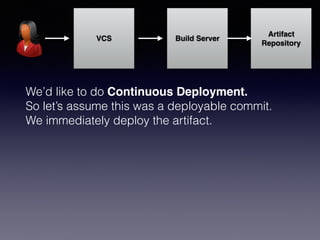

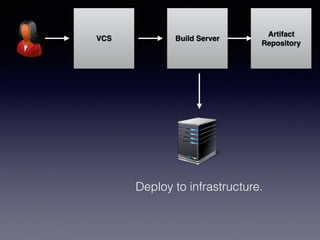

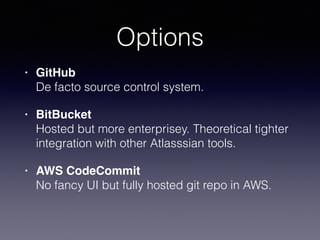

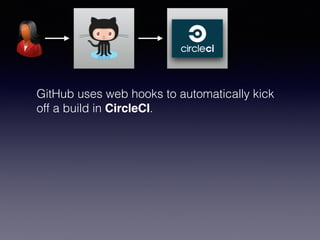

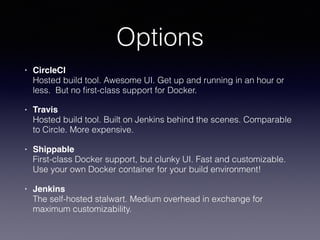

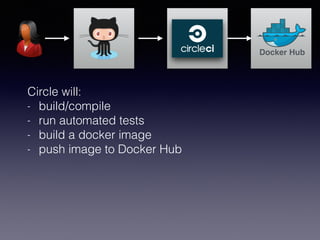

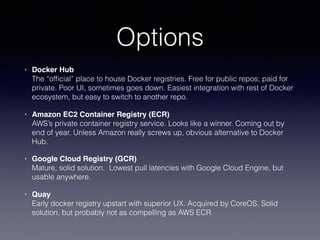

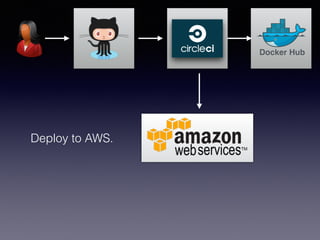

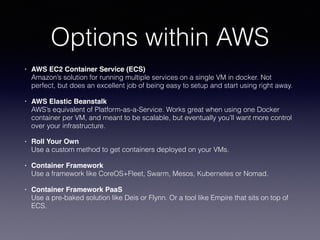

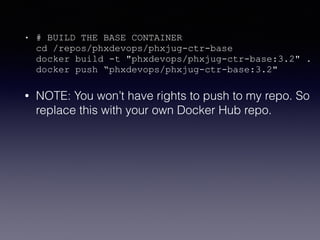

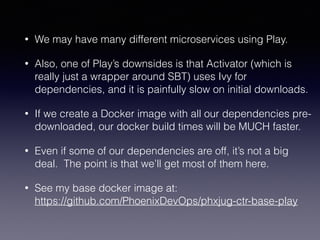

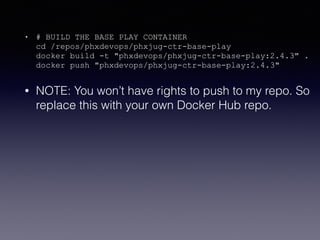

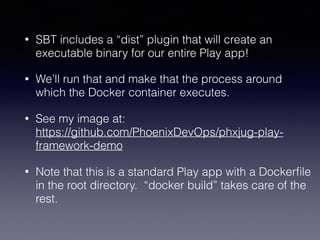

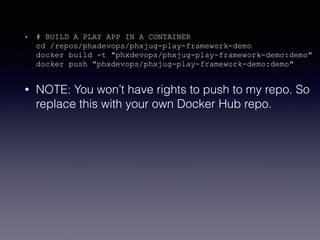

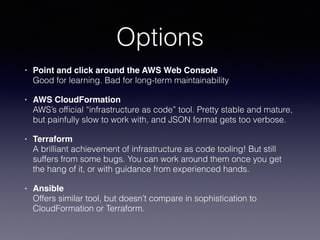

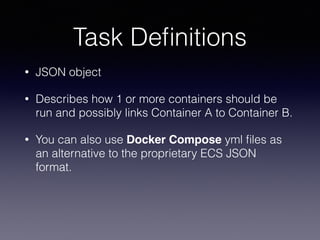

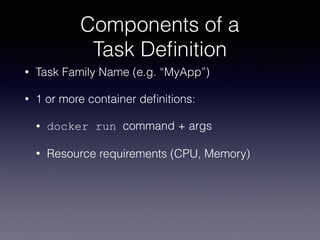

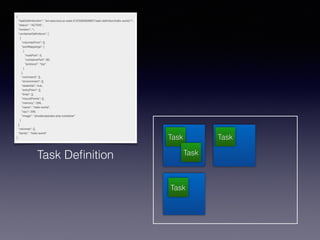

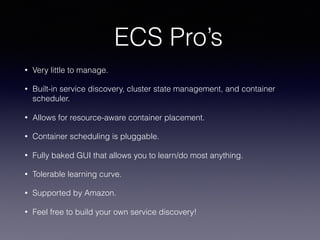

The document describes how Play Framework, Docker, CircleCI, and AWS can be combined into an automated build pipeline for microservices. GitHub provides source control; CircleCI handles continuous integration and builds the Docker images; the images are pushed to Docker Hub; and the services are deployed to AWS, with Amazon ECS providing container orchestration. The author demonstrates setting up each part of the pipeline live.
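The pipeline stages can be summarized as the sequence of commands a CI job would run for a single commit. The sketch below is a minimal illustration under stated assumptions, not the author's setup. It assumes that the Dockerfile packages the output of Play's `sbt dist`, that `docker login` and AWS credentials are already configured in the CI environment, and that the ECS task definition references the image's `:latest` tag. The image, cluster, and service names are hypothetical placeholders. CircleCI exposes the commit hash as the `CIRCLE_SHA1` environment variable.

```python
"""Sketch: the GitHub -> CircleCI -> Docker Hub -> ECS steps for one commit.

All names (image, cluster, service) are hypothetical placeholders.
"""
import os
import shlex
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PipelineConfig:
    image: str    # Docker Hub repository (hypothetical), e.g. "myorg/play-service"
    cluster: str  # ECS cluster name (hypothetical)
    service: str  # ECS service name (hypothetical)


def pipeline_commands(cfg: PipelineConfig, commit_sha: str) -> List[List[str]]:
    """Return, in order, the commands a CI job would run for one commit."""
    tag = commit_sha[:7]  # short SHA ties each image to the commit that built it
    versioned = f"{cfg.image}:{tag}"
    latest = f"{cfg.image}:latest"
    return [
        # 1. Package the Play app (assumed to be consumed by the Dockerfile).
        ["sbt", "dist"],
        # 2. Build one image carrying both the versioned and the latest tag.
        ["docker", "build", "-t", versioned, "-t", latest, "."],
        # 3. Push both tags to Docker Hub (assumes `docker login` already ran).
        ["docker", "push", versioned],
        ["docker", "push", latest],
        # 4. Ask ECS to replace the running tasks; with a task definition that
        #    points at :latest, the new tasks typically pull the fresh image.
        ["aws", "ecs", "update-service",
         "--cluster", cfg.cluster,
         "--service", cfg.service,
         "--force-new-deployment"],
    ]


if __name__ == "__main__":
    cfg = PipelineConfig("myorg/play-service", "demo-cluster", "play-service")
    sha = os.environ.get("CIRCLE_SHA1", "0000000000000000")
    for cmd in pipeline_commands(cfg, sha):
        print(shlex.join(cmd))
```

Tagging every image with the commit hash as well as `latest` keeps each build traceable and makes rollback possible. The alternative of registering a new task-definition revision per tag is more explicit, but it takes more steps than this sketch shows.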