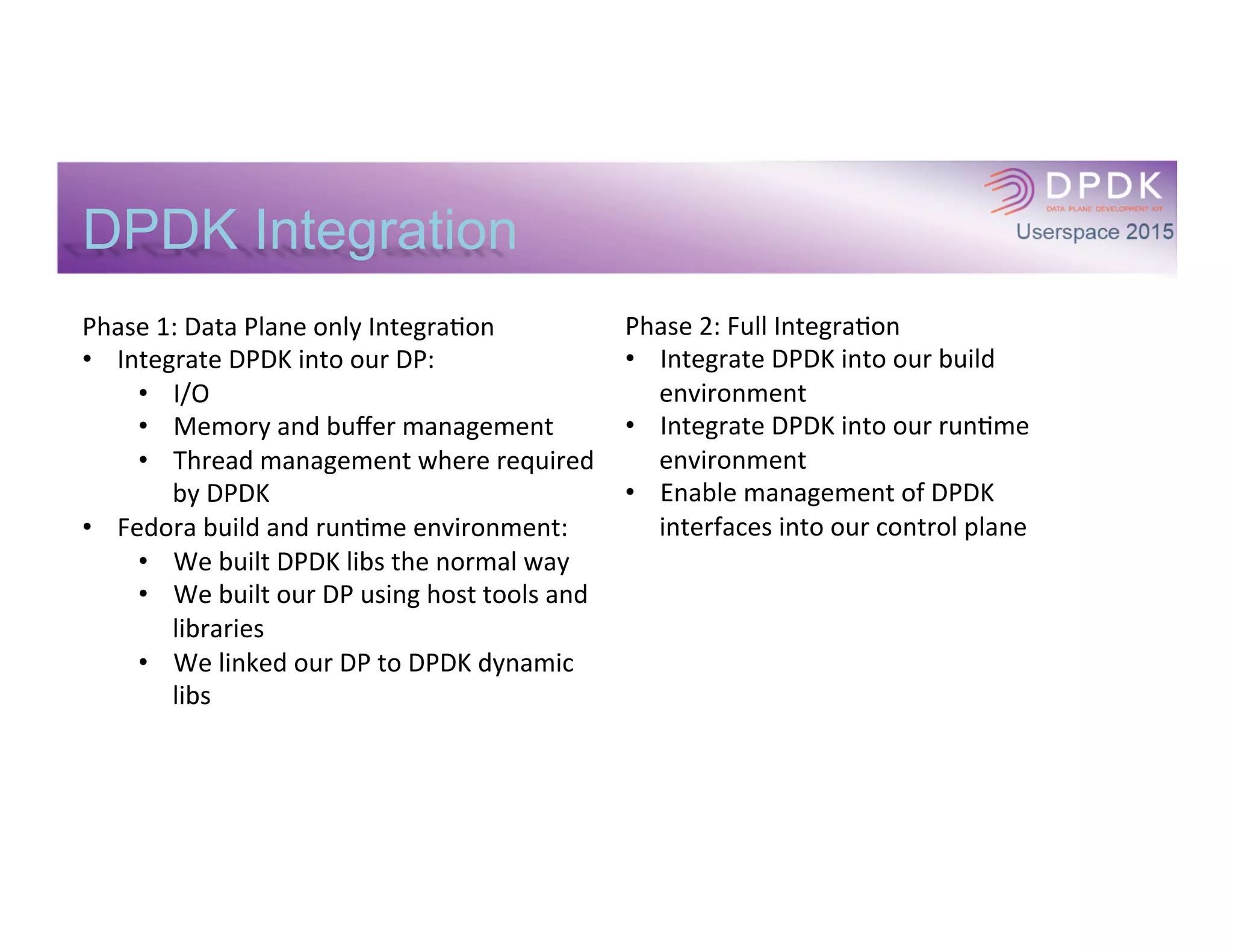

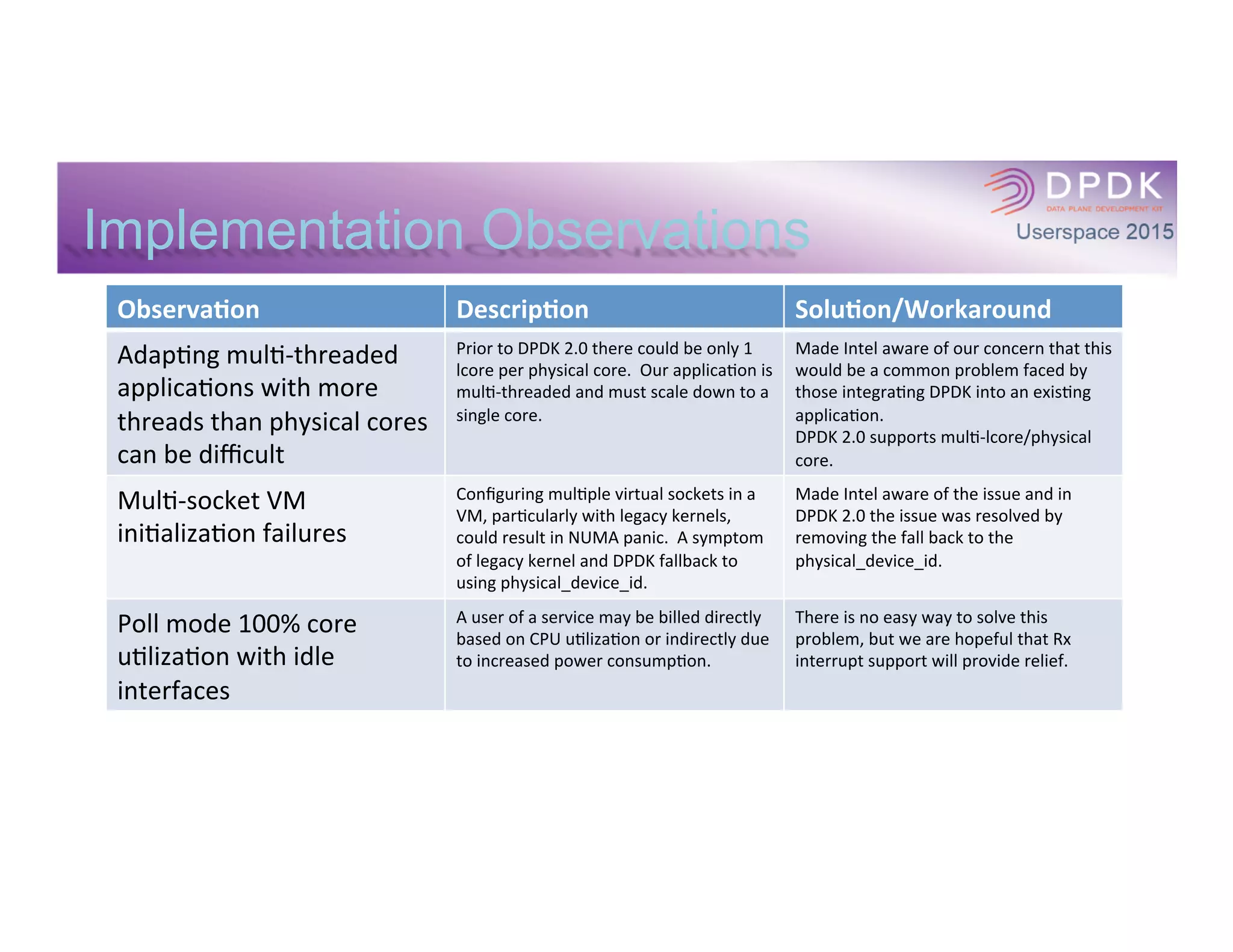

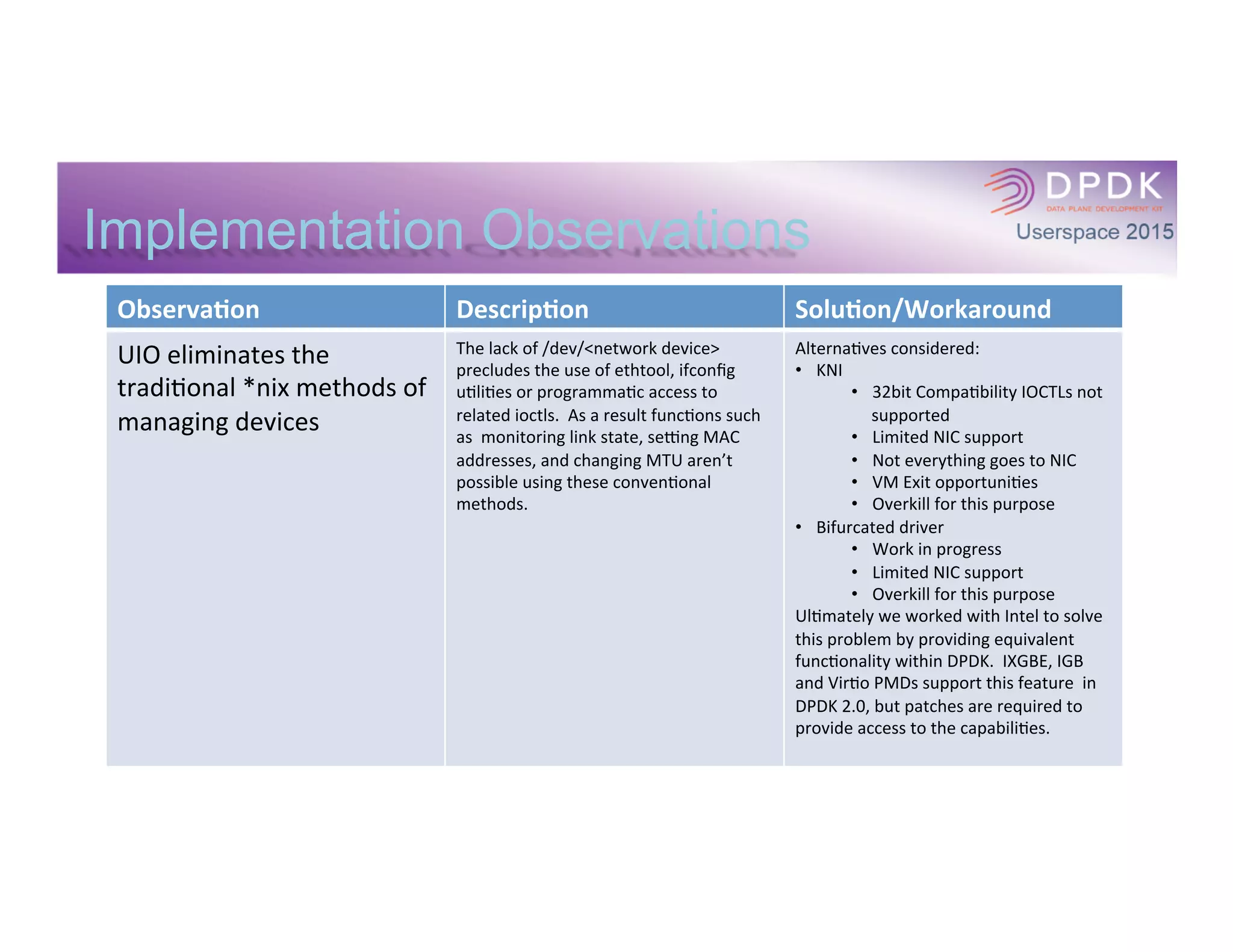

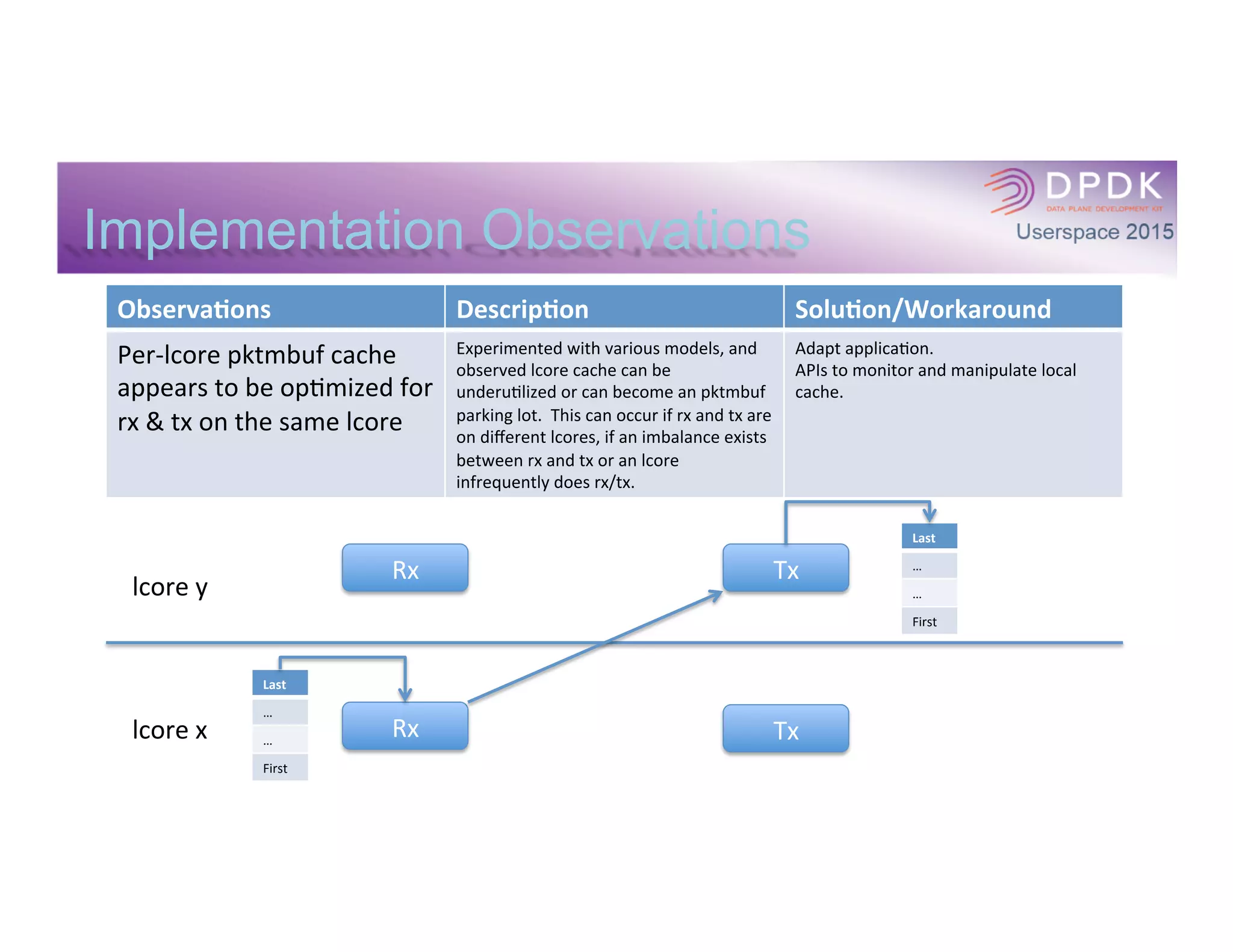

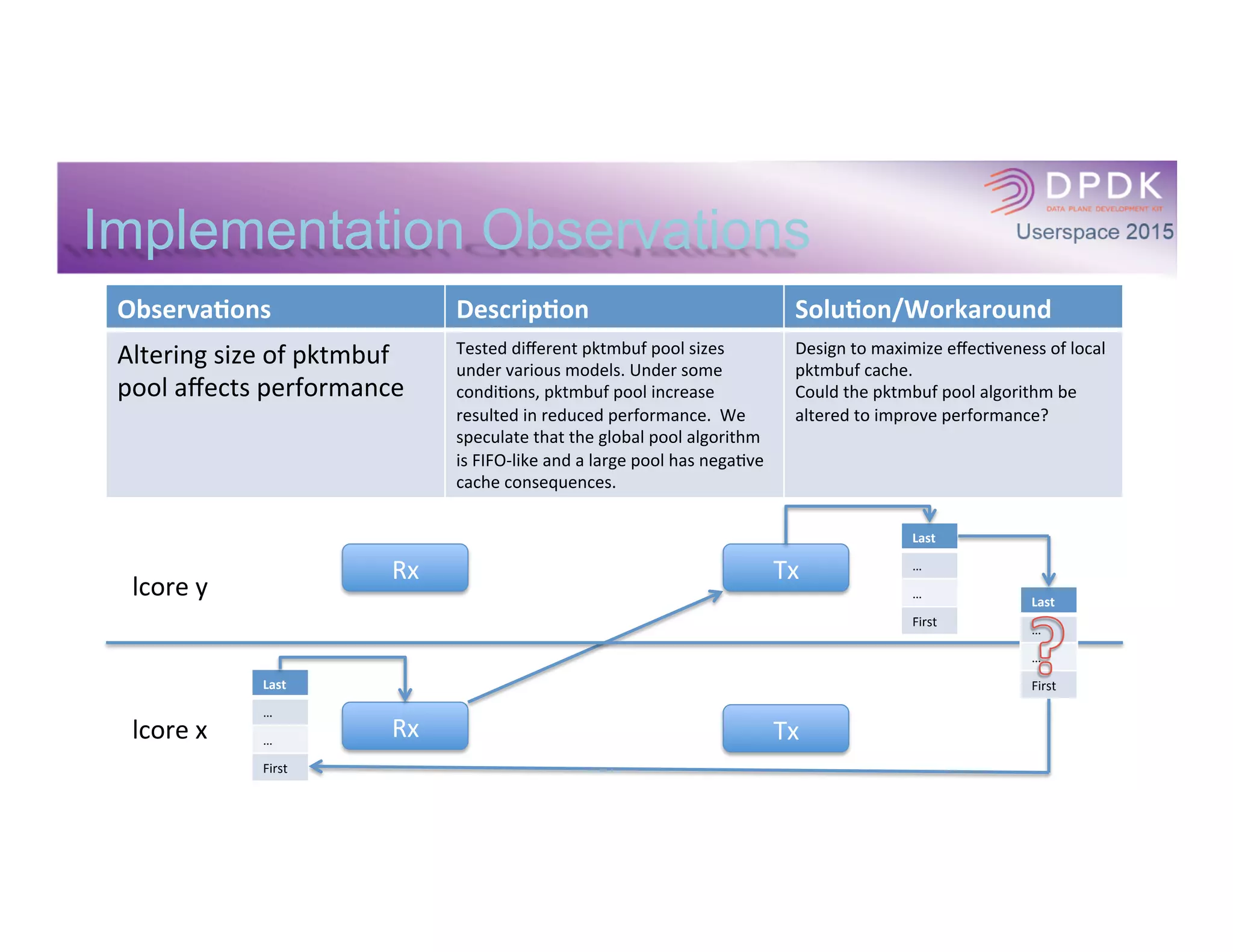

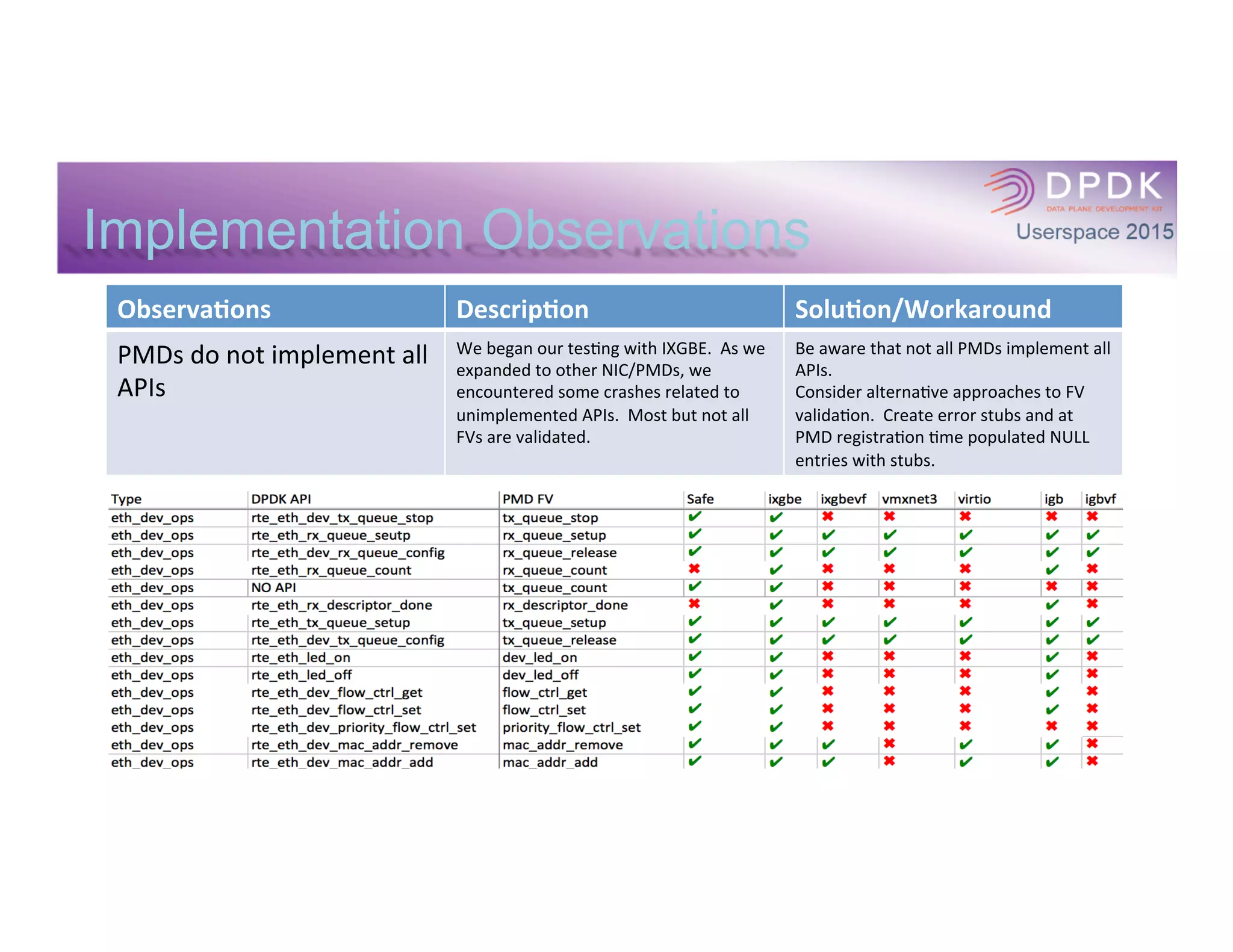

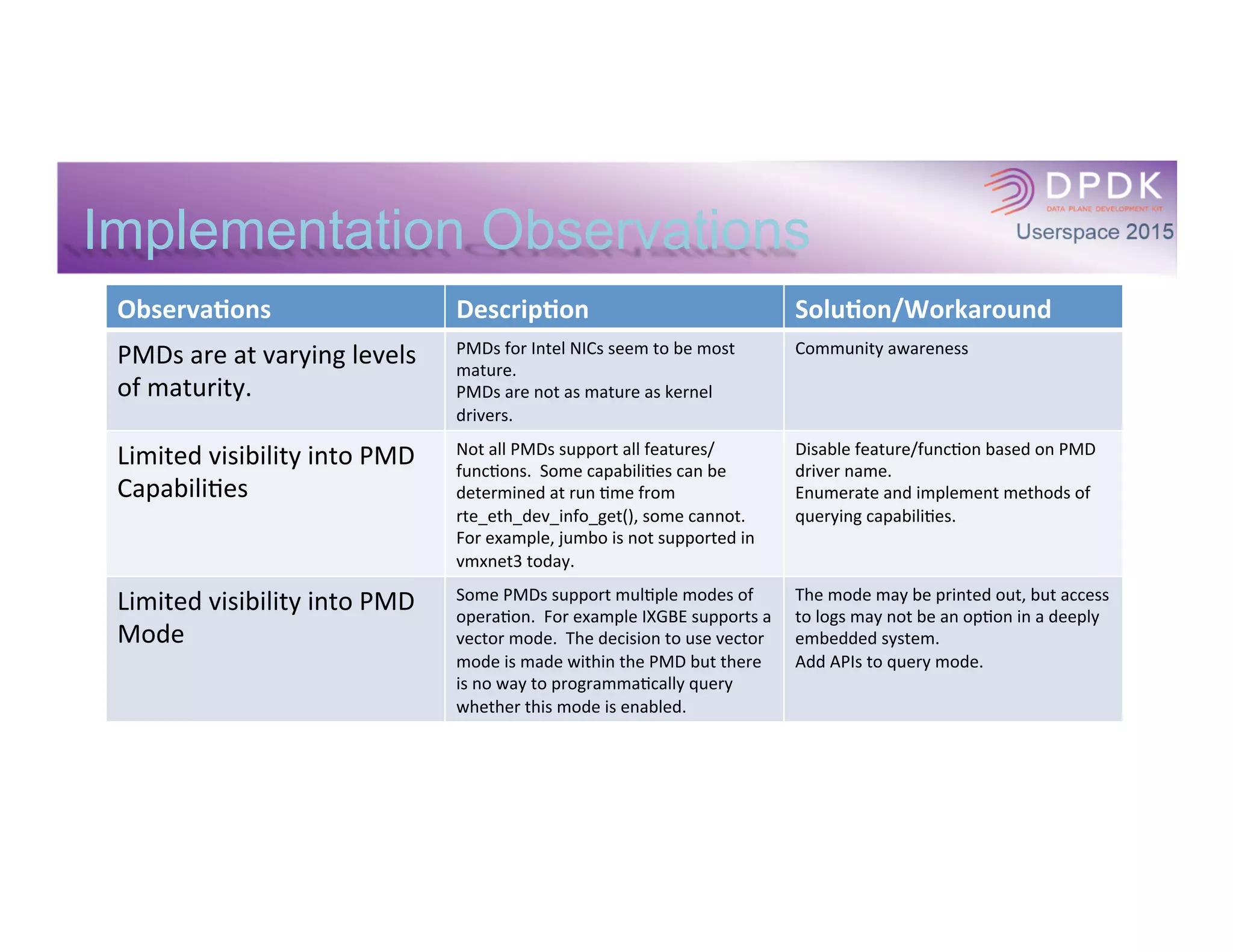

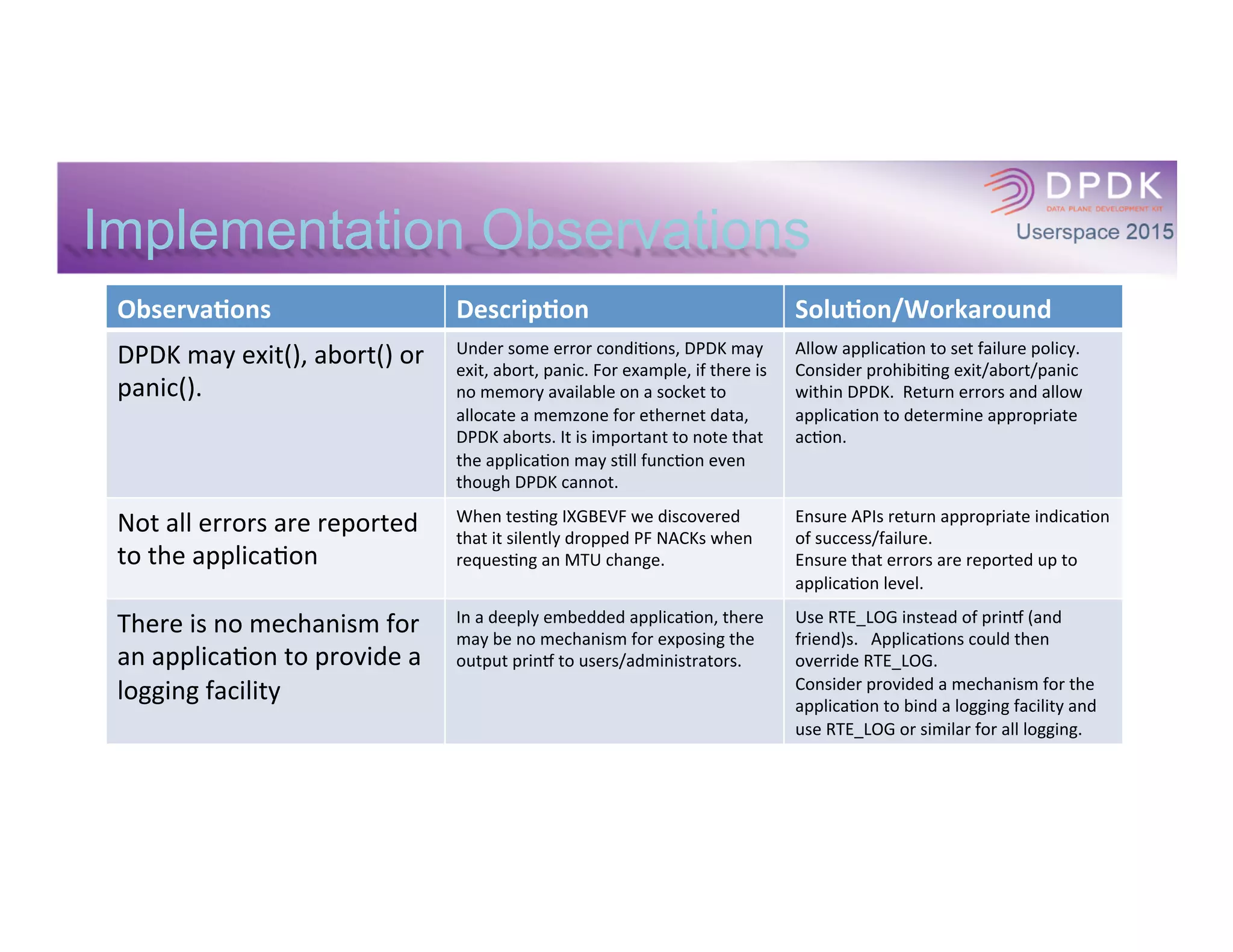

The document discusses Cisco's experience integrating its data plane product with DPDK. Key observations from the integration: adapting a multi-threaded application was difficult with early DPDK versions; with UIO, devices are no longer managed through the traditional kernel mechanisms; per-core packet buffer caches may be underutilized when RX and TX run on different cores; changing packet buffer pool sizes can affect performance; and poll mode drivers (PMDs) vary in maturity and may not implement every API. The solutions were to update to newer DPDK versions, adapt the application, and account for PMD limitations.
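The per-core cache observation can be illustrated with a toy model. The sketch below is not the DPDK API: `ToyMempool`, `ring_accesses`, and the refill and flush rules are simplified assumptions made for illustration. It counts how often a shared buffer ring is touched when one core both allocates and frees buffers, compared with one core allocating (RX) and another freeing (TX).

```python
from collections import deque


class ToyMempool:
    """Toy buffer pool with per-core caches (hypothetical, not DPDK)."""

    def __init__(self, n_bufs, cache_size, n_cores):
        self.ring = deque(range(n_bufs))            # shared pool of buffer ids
        self.cache_size = cache_size
        self.caches = [[] for _ in range(n_cores)]  # one local cache per core
        self.ring_ops = 0                           # accesses to the shared ring

    def get(self, core):
        cache = self.caches[core]
        if not cache:
            # Local cache is empty: refill it in one burst from the shared ring.
            self.ring_ops += 1
            for _ in range(min(self.cache_size, len(self.ring))):
                cache.append(self.ring.popleft())
        return cache.pop()

    def put(self, core, buf):
        cache = self.caches[core]
        cache.append(buf)
        if len(cache) > self.cache_size:
            # Local cache overflowed: flush it back to the shared ring.
            self.ring_ops += 1
            self.ring.extend(cache)
            cache.clear()


def ring_accesses(rx_core, tx_core, packets=1000):
    """Allocate on rx_core, free on tx_core, return shared-ring access count."""
    pool = ToyMempool(n_bufs=1024, cache_size=32, n_cores=2)
    for _ in range(packets):
        buf = pool.get(rx_core)   # RX path allocates a buffer
        pool.put(tx_core, buf)    # TX path frees it after transmit
    return pool.ring_ops


if __name__ == "__main__":
    print("same core :", ring_accesses(0, 0))
    print("split core:", ring_accesses(0, 1))
```

In this model, a core that both allocates and frees keeps recycling buffers through its own cache and rarely touches the shared ring. When RX and TX are split, the RX core's cache only drains and the TX core's cache only fills, so both keep going back to the shared ring and the caches provide little benefit.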