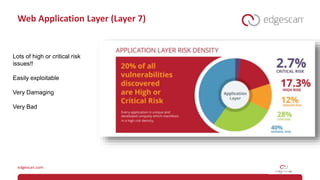

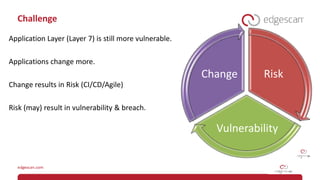

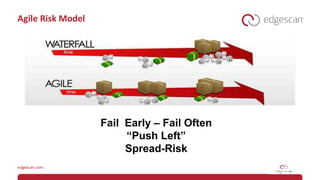

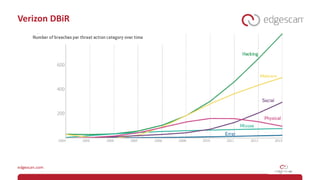

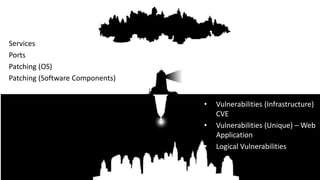

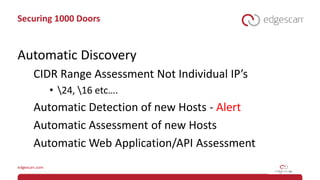

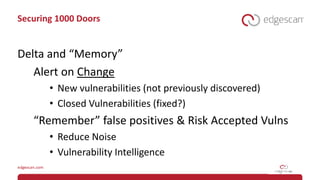

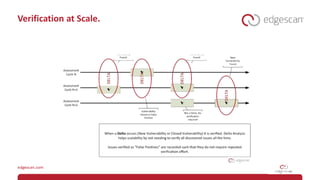

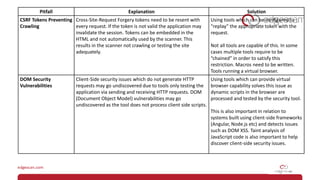

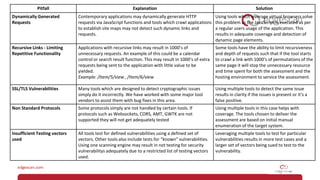

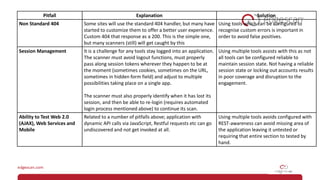

The document discusses the cybersecurity challenges enterprises face and emphasizes the importance of visibility and continuous risk management in protecting digital assets. It highlights Edgescan, a cloud-based vulnerability assessment solution, and its ability to identify vulnerabilities across diverse enterprise environments. It also outlines common pitfalls of automation and security assessments, and offers guidance on the advanced security practices needed to address dynamic application vulnerabilities and improve overall security metrics.