1. The document presents Plug-and-Play priors for Bayesian imaging using Langevin-based sampling methods.

2. It introduces the Bayesian framework for image restoration and discusses challenges in modeling the prior.

3. A Plug-and-Play approach is proposed that uses an implicit prior defined by a denoising network in conjunction with Langevin sampling, termed PnP-ULA. Experiments demonstrate its effectiveness on image deblurring and inpainting tasks.

![Introduction.

Plug-and-Play priors for Bayesian imaging.

Theoretical results.

Experiments.

Conclusion.

Bayesian framework.

Sampling using Langevin based methods.

Bayesian paradigm.

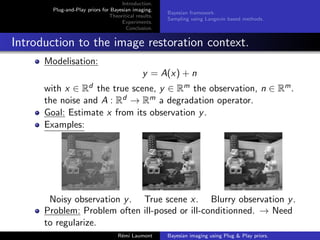

Bayesian formulation:

$$p(x|y) \propto p(x)\,p(y|x)$$

where $p(x)$ is the prior and $p(y|x)$ is the likelihood (assumed to be known).

Maximum-A-Posteriori estimator:

$$\hat{x}_{\mathrm{MAP}} = \arg\max_{x \in \mathbb{R}^d} p(x|y) = \arg\min_{x \in \mathbb{R}^d} \{F(x, y) + \lambda R(x)\} \tag{1}$$

where $R(x) = -\log p(x)$ and $F(x, y) = -\log p(y|x)$.

Minimum Mean Square Error (MMSE) estimator:

$$\hat{x}_{\mathrm{MMSE}} = \arg\min_{u \in \mathbb{R}^d} \mathbb{E}\left[\|x - u\|^2 \mid y\right] = \mathbb{E}[x \mid y] \tag{2}$$

Rémi Laumont Bayesian imaging using Plug & Play priors.](https://image.slidesharecdn.com/gtti10032021-210310143356/85/Gtti-10032021-4-320.jpg)
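
To make the two estimators concrete, here is a minimal sketch for a scalar denoising problem $y = x + n$. The Laplace prior, the Gaussian noise level, the choice $\lambda = 1$ and the function name `map_and_mmse` are all illustrative assumptions, not taken from the slides. The posterior is evaluated on a grid: the MAP estimate is the grid minimiser of $F + R$, and the MMSE estimate is the posterior mean.

```python
import numpy as np

def map_and_mmse(y, sigma=0.5, scale=1.0, grid=None):
    """Grid-based MAP and MMSE estimates for y = x + n, n ~ N(0, sigma^2).

    The Laplace prior p(x) ∝ exp(-|x| / scale) is a hypothetical choice
    made for illustration only.
    """
    if grid is None:
        grid = np.linspace(-10.0, 10.0, 20001)
    F = (y - grid) ** 2 / (2.0 * sigma**2)   # F(x, y) = -log p(y|x), up to a constant
    R = np.abs(grid) / scale                 # R(x)    = -log p(x),   up to a constant
    neg_log_post = F + R                     # lambda = 1 in Eq. (1)
    x_map = grid[np.argmin(neg_log_post)]    # MAP: minimiser of F + R
    # Normalise the posterior on the grid (subtract the minimum for stability).
    w = np.exp(-(neg_log_post - neg_log_post.min()))
    w /= w.sum()
    x_mmse = np.sum(w * grid)                # MMSE: posterior mean E[x | y]
    return float(x_map), float(x_mmse)

x_map, x_mmse = map_and_mmse(y=1.0)
print(x_map, x_mmse)
```

For this prior the MAP estimate is the soft-thresholding of $y$ by $\sigma^2/\text{scale}$, whereas the posterior mean generally takes a different value, which is why the two estimators are distinguished.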

![Role of the discretization step-size δ.

δ controls the trade-off between the convergence speed and the accuracy of the Markov chain.

1-D example:

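$\pi(x) \propto \exp[-x^2/(2\sigma^2)]$ with $\sigma = 1$.

We sample from $\pi_\delta(x) \propto \exp[-x^2/(2\sigma_\delta^2)]$ with

$$\sigma_\delta^2 = \frac{2\sigma^2}{2 - \delta/\sigma^2},$$

so that $\sigma_\delta^2 \to \sigma^2$ as $\delta \to 0$ and the bias grows with $\delta$.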

](https://image.slidesharecdn.com/gtti10032021-210310143356/85/Gtti-10032021-8-320.jpg)
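
The effect of δ in this 1-D example can be checked numerically. The sketch below assumes the unadjusted Langevin recursion $X_{k+1} = X_k + \delta \nabla \log \pi(X_k) + \sqrt{2\delta}\, Z_{k+1}$ and runs it on the Gaussian target. For this target the recursion is linear, so its stationary variance $v$ solves $v = (1 - \delta/\sigma^2)^2 v + 2\delta$, which gives $v = 2\sigma^2/(2 - \delta/\sigma^2)$. Function names, iteration count and seed are illustrative choices.

```python
import numpy as np

def ula_gaussian(delta, sigma=1.0, n_iter=200_000, seed=0):
    """Run ULA on the target pi(x) ∝ exp(-x^2 / (2 sigma^2)).

    Update: X_{k+1} = X_k + delta * grad log pi(X_k) + sqrt(2 delta) * Z_{k+1},
    with grad log pi(x) = -x / sigma^2.
    """
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal(n_iter)
    x = 0.0
    samples = np.empty(n_iter)
    for k in range(n_iter):
        x = x - delta * x / sigma**2 + np.sqrt(2.0 * delta) * noise[k]
        samples[k] = x
    return samples

def stationary_variance(delta, sigma=1.0):
    """Variance of the Gaussian that the discretised chain actually samples."""
    return 2.0 * sigma**2 / (2.0 - delta / sigma**2)

for delta in (0.05, 0.5, 1.0):
    chain = ula_gaussian(delta)
    # Discard the first 1000 iterations as a short burn-in.
    print(delta, chain[1000:].var(), stationary_variance(delta))
```

A small δ keeps the sampled variance close to the target value σ² = 1 but makes successive samples strongly correlated. A large δ mixes faster at the price of a visibly inflated variance.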

![Plug-and-Play approaches.

Targeting $p(x|y)$, Eq. (4) becomes

$$X_{k+1} = X_k + \delta \nabla \log p(X_k) + \delta \nabla \log p(y|X_k) + \sqrt{2\delta}\, Z_{k+1}. \tag{5}$$

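Problem: $p(x)$ is unknown and difficult to model. → Use an implicit prior, such as a neural network, to approximate $\nabla R = -\nabla \log p$, e.g. (Alain and Bengio, 2014), (Guo et al., 2019), (Romano et al., 2017) and (Kadkhodaie and Simoncelli, 2021), based on Tweedie's formula:

$$\mathbb{E}[X \mid X + N = \tilde{x}] - \tilde{x} = \varepsilon \nabla \log(p * g_\varepsilon)(\tilde{x}) \approx \varepsilon \nabla \log p(\tilde{x}), \tag{6}$$

with $X \sim p$, $N \sim \mathcal{N}(0, \varepsilon\,\mathrm{Id})$ and $g_\varepsilon$ a Gaussian kernel of variance $\varepsilon$ (Efron, 2011).

In practice, the conditional expectation is approximated by a denoiser $D$:

$$\mathbb{E}[X \mid X + N = \tilde{x}] \simeq D(\tilde{x}).$$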

](https://image.slidesharecdn.com/gtti10032021-210310143356/85/Gtti-10032021-9-320.jpg)
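
The substitution described on this slide can be sketched on a toy problem. This is a minimal illustration of iteration (5) with the prior score replaced by a denoiser residual, not the full algorithm of the talk. It assumes a denoiser trained for noise variance `eps`, so that `(D(x) - x) / eps` plays the role of $\nabla \log p(x)$, and a denoising likelihood $y = x + n$ with $n \sim \mathcal{N}(0, \text{noise\_var})$. To keep the example checkable, the learned network is replaced by the exact MMSE denoiser of a Gaussian prior. All names and parameter values are hypothetical.

```python
import numpy as np

def gaussian_mmse_denoiser(x, eps, prior_var=1.0):
    """Exact MMSE denoiser for X ~ N(0, prior_var) observed under N(0, eps) noise.

    Stand-in for a learned denoiser D, chosen because Tweedie's formula
    can then be verified in closed form.
    """
    return prior_var / (prior_var + eps) * x

def pnp_ula(y, noise_var, eps, delta, n_iter, seed=0,
            denoiser=gaussian_mmse_denoiser):
    """Langevin iteration (5) with grad log p(x) replaced by (D(x) - x) / eps."""
    rng = np.random.default_rng(seed)
    x = np.array(y, dtype=float)                    # start the chain at the observation
    samples = np.empty((n_iter,) + x.shape)
    for k in range(n_iter):
        prior_score = (denoiser(x, eps) - x) / eps  # Tweedie: approximates grad log p(x)
        lik_score = (y - x) / noise_var             # grad log p(y|x) for y = x + n
        z = rng.standard_normal(x.shape)
        x = x + delta * (prior_score + lik_score) + np.sqrt(2.0 * delta) * z
        samples[k] = x
    return samples

samples = pnp_ula(np.array([1.0]), noise_var=0.25, eps=0.01,
                  delta=0.05, n_iter=50_000)
print(samples[5000:].mean())  # Monte Carlo estimate of the posterior mean (MMSE)
```

With a learned denoiser, only the `denoiser` argument would change. The chain average then gives an MMSE estimate, and the spread of the samples gives a measure of uncertainty.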

![Experiments.

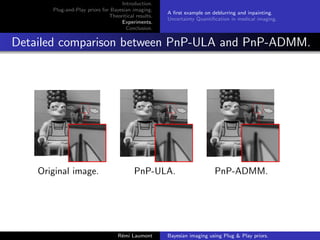

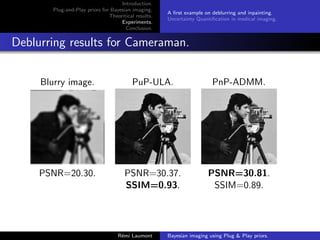

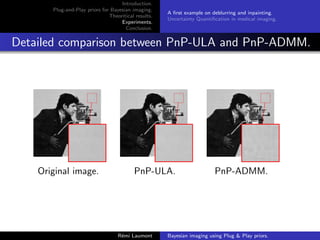

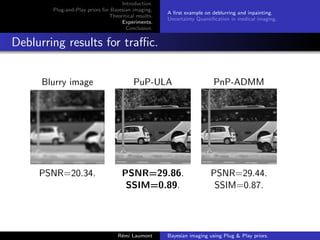

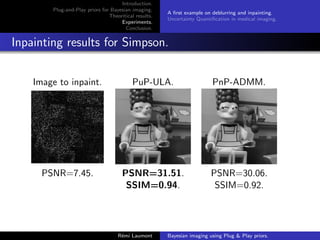

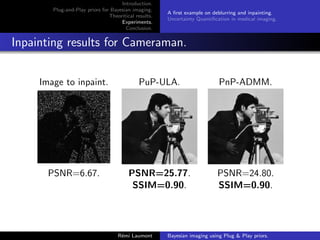

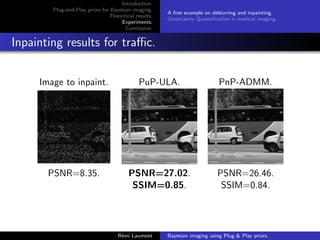

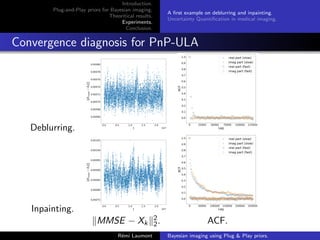

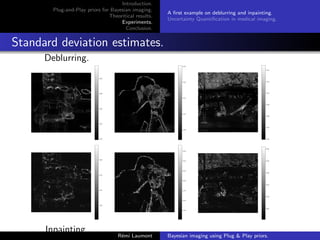

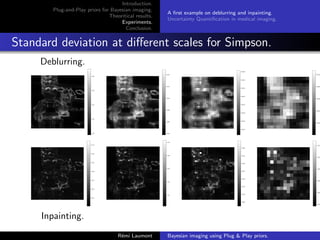

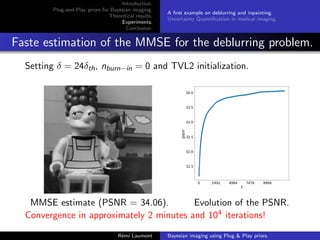

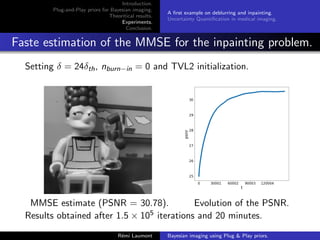

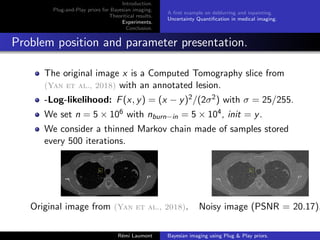

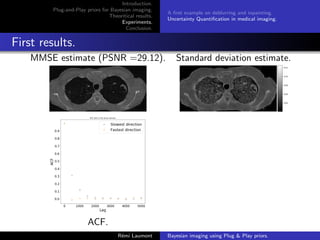

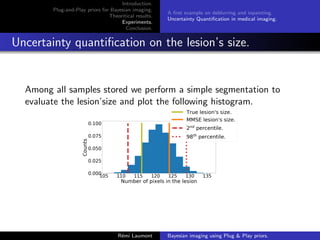

A first example on deblurring and inpainting.

Uncertainty Quantification in medical imaging.

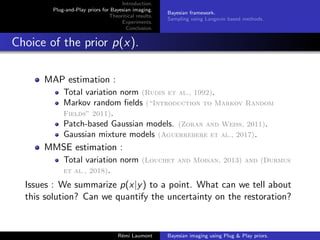

Algorithm parameters

$D$ is provided by (Ryu et al., 2019) and is such that $(D - \mathrm{Id})$ is $L$-Lipschitz with $L < 1$ and noise level $\varepsilon = (5/255)^2$.

Comparison with PnP-ADMM, with $\varepsilon_{\text{deblurring}} = (5/255)^2$ and $\varepsilon_{\text{inpainting}} = (40/255)^2$.

$C = [-1, 2]^d$.

A thinned version of the Markov chain is considered, made of samples stored every 2500 iterations.

| | $n$ | $n_{\text{burn-in}}$ | $\delta$ | Initialization |
|---|---|---|---|---|
| PnP-ULA | 2.5e7 | 2.5e6 | $3\delta_{\max}$ | $y$ |

](https://image.slidesharecdn.com/gtti10032021-210310143356/85/Gtti-10032021-14-320.jpg)
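
As a rough guide to what these settings imply for storage, the sketch below lists which iterations a thinned chain keeps. It assumes that the burn-in iterations are counted within the $n$ total iterations and that thinning starts right after burn-in. Neither convention is stated on the slide, and the helper name `thinned_indices` is hypothetical.

```python
def thinned_indices(n_iter, n_burn_in, thinning):
    """Iterations kept after burn-in, one every `thinning` steps (1-based)."""
    return range(n_burn_in + thinning, n_iter + 1, thinning)

kept = thinned_indices(n_iter=25_000_000, n_burn_in=2_500_000, thinning=2500)
print(len(kept))  # number of stored samples under the assumptions above
```

Storing one sample every 2500 iterations keeps memory manageable for image-sized states and reduces the correlation between stored samples.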

![References

Aguerrebere, Cecilia, Andres Almansa, Julie Delon, Yann Gousseau, and Pablo Muse (2017). “A Bayesian Hyperprior Approach for Joint Image Denoising and Interpolation, With an Application to HDR Imaging”. In: IEEE Transactions on Computational Imaging 3.4, pp. 633–646. issn: 2333-9403. doi: 10.1109/TCI.2017.2704439. arXiv: 1706.03261 (cit. on p. 6).

Alain, Guillaume and Yoshua Bengio (2014). “What Regularized Auto-Encoders Learn from the Data-Generating Distribution”. In: Journal of Machine Learning Research 15, pp. 3743–3773. issn: 1532-4435. arXiv: 1211.4246 (cit. on p. 9).

Bortoli, Valentin De and Alain Durmus (2020). Convergence of diffusions and their discretizations: from continuous to discrete processes and back. arXiv: 1904.09808 [math.PR] (cit. on p. 7).

Durmus, Alain, Eric Moulines, and Marcelo Pereyra (2018). “Efficient Bayesian computation by proximal Markov chain Monte Carlo: when Langevin meets Moreau”. In: SIAM Journal on Imaging Sciences 11.1, pp. 473–506 (cit. on p. 6).

Efron, Bradley (2011). “Tweedie’s Formula and Selection Bias”. In: Journal of the American Statistical Association 106.496, pp. 1602–1614. issn: 0162-1459. doi: 10.1198/jasa.2011.tm11181 (cit. on p. 9).

Guo, Bichuan, Yuxing Han, and Jiangtao Wen (2019). “AGEM: Solving Linear Inverse Problems via Deep Priors and Sampling”. In: (NeurIPS) Advances in Neural

](https://image.slidesharecdn.com/gtti10032021-210310143356/85/Gtti-10032021-35-320.jpg)

![References

Information Processing Systems. Ed. by Curran Associates Inc., pp. 545–556 (cit. on p. 9).

“Introduction to Markov Random Fields” (2011). In: Markov Random Fields for Vision and Image Processing. The MIT Press. doi: 10.7551/mitpress/8579.003.0001 (cit. on p. 6).

Kadkhodaie, Zahra and Eero P. Simoncelli (2021). Solving Linear Inverse Problems Using the Prior Implicit in a Denoiser. arXiv: 2007.13640 [cs.CV] (cit. on p. 9).

Louchet, Cécile and Lionel Moisan (2013). “Posterior expectation of the total variation model: Properties and experiments”. In: SIAM Journal on Imaging Sciences 6.4, pp. 2640–2684. issn: 19364954. doi: 10.1137/120902276 (cit. on p. 6).

Pereyra, Marcelo, Luis Vargas Mieles, and Konstantinos C. Zygalakis (2020). “Accelerating proximal Markov chain Monte Carlo by using an explicit stabilized method”. In: SIAM J. Imaging Sci. 13.2, pp. 905–935. doi: 10.1137/19M1283719 (cit. on p. 33).

Roberts, Gareth O, Richard L Tweedie, et al. (1996). “Exponential convergence of Langevin distributions and their discrete approximations”. In: Bernoulli 2.4, pp. 341–363 (cit. on p. 7).

Romano, Yaniv, Michael Elad, and Peyman Milanfar (2017). “The Little Engine That Could: Regularization by Denoising (RED)”. In: SIAM Journal on Imaging Sciences

](https://image.slidesharecdn.com/gtti10032021-210310143356/85/Gtti-10032021-36-320.jpg)