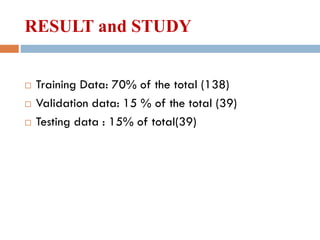

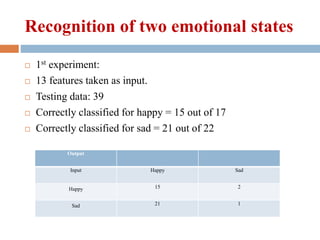

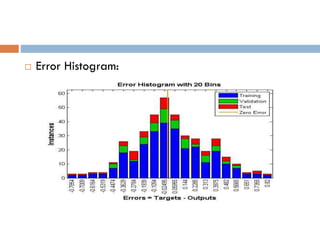

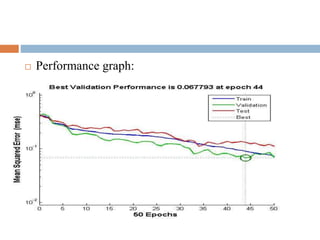

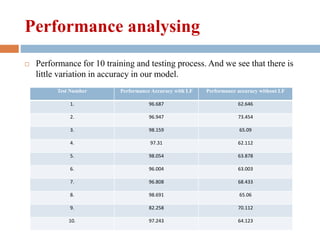

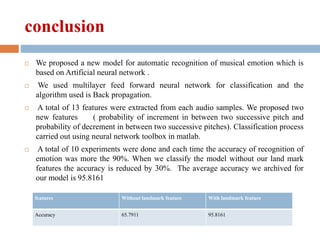

The document discusses graphical visualization of musical emotions using artificial neural networks (ANNs). Thirteen audio features are extracted from Hindustani classical music clips labeled as happy or sad. An ANN trained with the backpropagation algorithm uses 70% of the data for training, 15% for validation, and 15% for testing. The model correctly classified 15 of 17 happy clips and 21 of 22 sad clips. Testing was repeated 10 times, with accuracy above 90% each time, which indicates that the model recognizes these two musical emotions effectively. Future work involves extending the model to additional emotions and incorporating physiological features.
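As a rough illustration of the numbers above, the following sketch splits a set of clip indices 70% / 15% / 15% and recomputes the accuracy from the reported counts. The function name `split_indices`, the random seed, and the total of 100 clips are assumptions made for the example; the source does not state the dataset size or how the split was drawn.

```python
import random

def split_indices(n_clips, seed=0):
    """Shuffle clip indices and split them 70% / 15% / 15% (hypothetical helper)."""
    indices = list(range(n_clips))
    random.Random(seed).shuffle(indices)
    n_train = n_clips * 70 // 100   # integer arithmetic avoids float rounding
    n_val = n_clips * 15 // 100
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # whatever remains goes to the test set
    return train, val, test

# 100 clips is an assumed figure, used only to show the proportions.
train, val, test = split_indices(100)

# Counts reported in the summary: correctly classified / total per class.
happy_correct, happy_total = 15, 17
sad_correct, sad_total = 21, 22

happy_rate = happy_correct / happy_total
sad_rate = sad_correct / sad_total
overall = (happy_correct + sad_correct) / (happy_total + sad_total)

print(f"train/val/test sizes: {len(train)}/{len(val)}/{len(test)}")
print(f"happy: {happy_rate:.3f}  sad: {sad_rate:.3f}  overall: {overall:.3f}")
```

With the reported counts, 36 of 39 clips are classified correctly, which is consistent with the "over 90%" figure in the summary.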

![ANN(contd.)

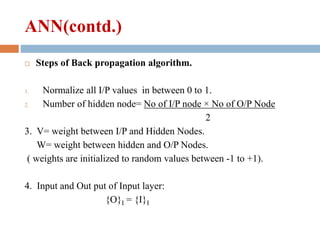

Input to the hidden layer is computed by multiplying I/P

values to their corresponding weights.

{I}H = [V ] {O}I

Output of Hidden layer is computed using sigmoidal function.](https://image.slidesharecdn.com/graphicalvisualizationofmusicalemotions-140527033557-phpapp02/85/Graphical-visualization-of-musical-emotions-40-320.jpg)
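The hidden-layer step on this slide can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions: the weight matrix `V` is stored with one row per hidden unit so that it multiplies the input vector as written on the slide, the "sigmoidal function" is taken to be the logistic function, and the toy sizes and values are invented for the example (the model in the slides uses 13 input features).

```python
import math

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def sigmoid(x):
    """Logistic sigmoid; assumed to be the slide's 'sigmoidal function'."""
    return 1.0 / (1.0 + math.exp(-x))

# Toy example: 3 inputs, 2 hidden units (values are illustrative only).
O_I = [0.5, -1.0, 2.0]            # {O}_I: outputs of the input layer
V = [[0.1, 0.2, 0.3],             # [V]: one row of weights per hidden unit
     [-0.4, 0.0, 0.5]]

I_H = matvec(V, O_I)              # {I}_H = [V]{O}_I
O_H = [sigmoid(v) for v in I_H]   # {O}_H: hidden-layer outputs in (0, 1)

print("I_H =", I_H)
print("O_H =", O_H)
```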

The input to the output layer is computed by multiplying the hidden-layer outputs by their corresponding weights:

$$\{I\}_O = [W]\,\{O\}_H$$

The output of the output layer is calculated as:

![ANN (contd.)](https://image.slidesharecdn.com/graphicalvisualizationofmusicalemotions-140527033557-phpapp02/85/Graphical-visualization-of-musical-emotions-41-320.jpg)
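Written out per component, the matrix product for the output-layer input reads as follows. The indices $k$ (output unit), $j$ (hidden unit), and the hidden-layer size $m$ are notation introduced here for illustration, and the row-per-output-unit orientation of $[W]$ is assumed from the way the product is written on the slide.

$$I_{O,k} = \sum_{j=1}^{m} W_{kj}\, O_{H,j}$$

Each output unit therefore receives a weighted sum of all hidden-layer outputs.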

The $[Y]$ matrix is calculated as:

$$[Y] = \{O\}_H \times d$$

Change in weight:

![ANN (contd.)](https://image.slidesharecdn.com/graphicalvisualizationofmusicalemotions-140527033557-phpapp02/85/Graphical-visualization-of-musical-emotions-43-320.jpg)
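One plausible reading of the $[Y]$ product is an outer product of the hidden-layer output vector with the vector $d$, giving one entry per hidden-to-output weight. The sketch below follows that reading; it is an assumption, since the definition of $d$ is not part of the extracted text, and the helper name `outer` and the toy values are invented for the example.

```python
def outer(column, row):
    """Outer product: result[i][j] = column[i] * row[j]."""
    return [[c * r for r in row] for c in column]

# Toy values (illustrative only): 2 hidden units, 3 output-side terms.
O_H = [0.5, 0.25]          # {O}_H: hidden-layer outputs
d = [2.0, -4.0, 1.0]       # d: assumed to be a vector of output-layer terms

Y = outer(O_H, d)          # [Y], shape (hidden units) x (length of d)

for row in Y:
    print(row)
```

Under this reading, `Y` has the same shape as a weight matrix connecting the hidden layer to the output layer, which is what a weight update would need.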

The error in the hidden layer, the new $d$, and the $[X]$ matrix (a product of two terms) are calculated as:

![ANN (contd.)](https://image.slidesharecdn.com/graphicalvisualizationofmusicalemotions-140527033557-phpapp02/85/Graphical-visualization-of-musical-emotions-44-320.jpg)
