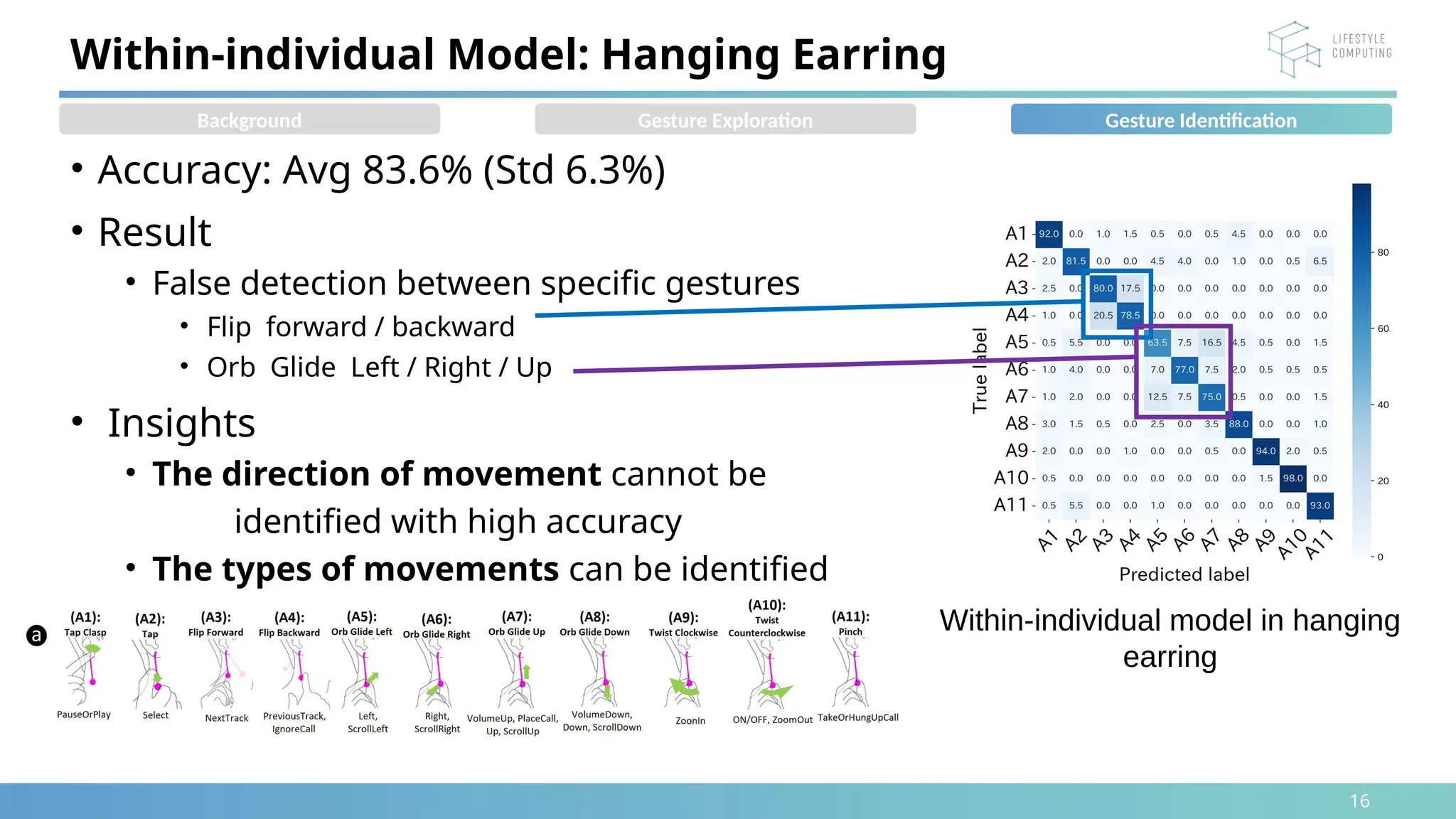

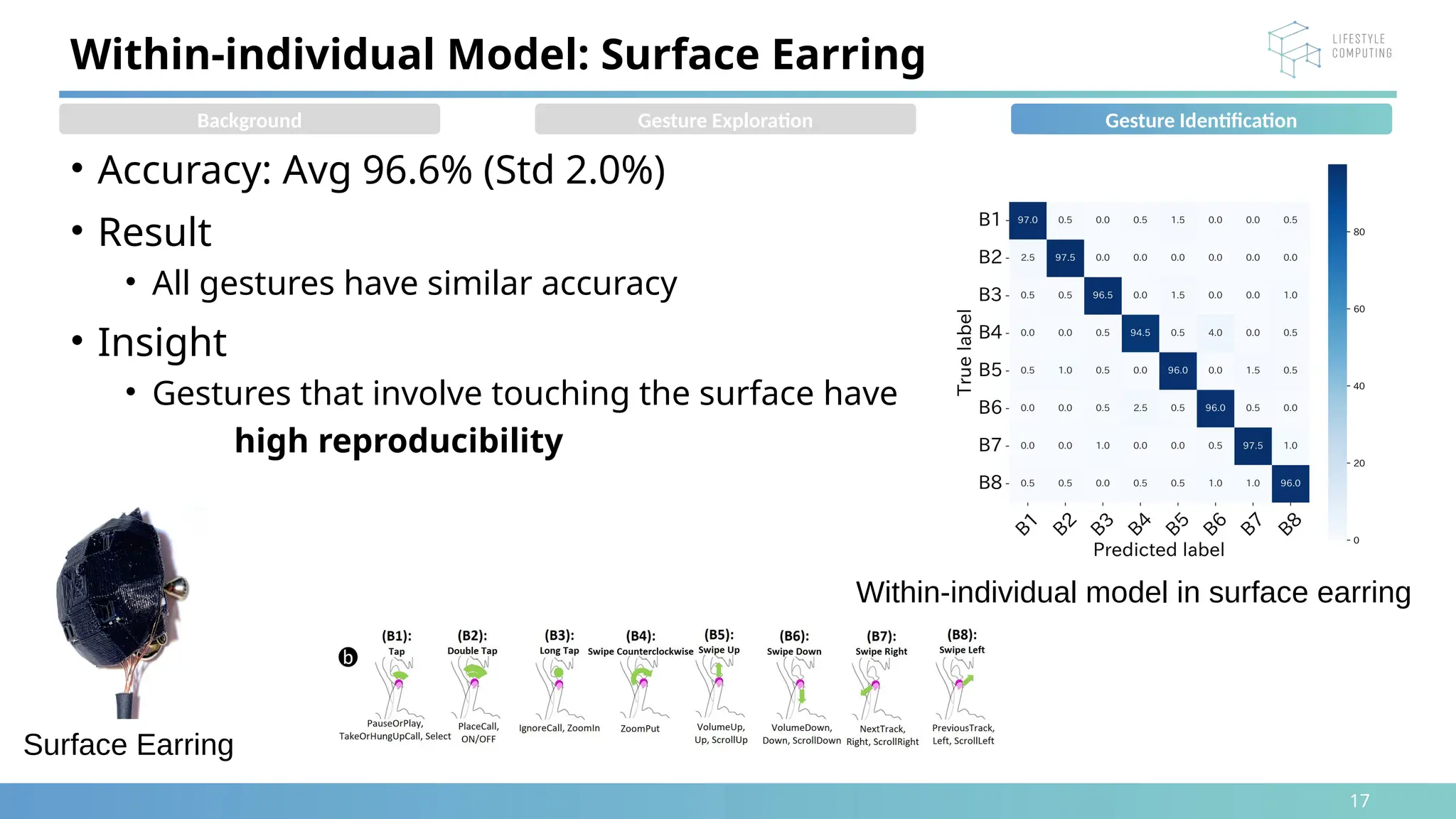

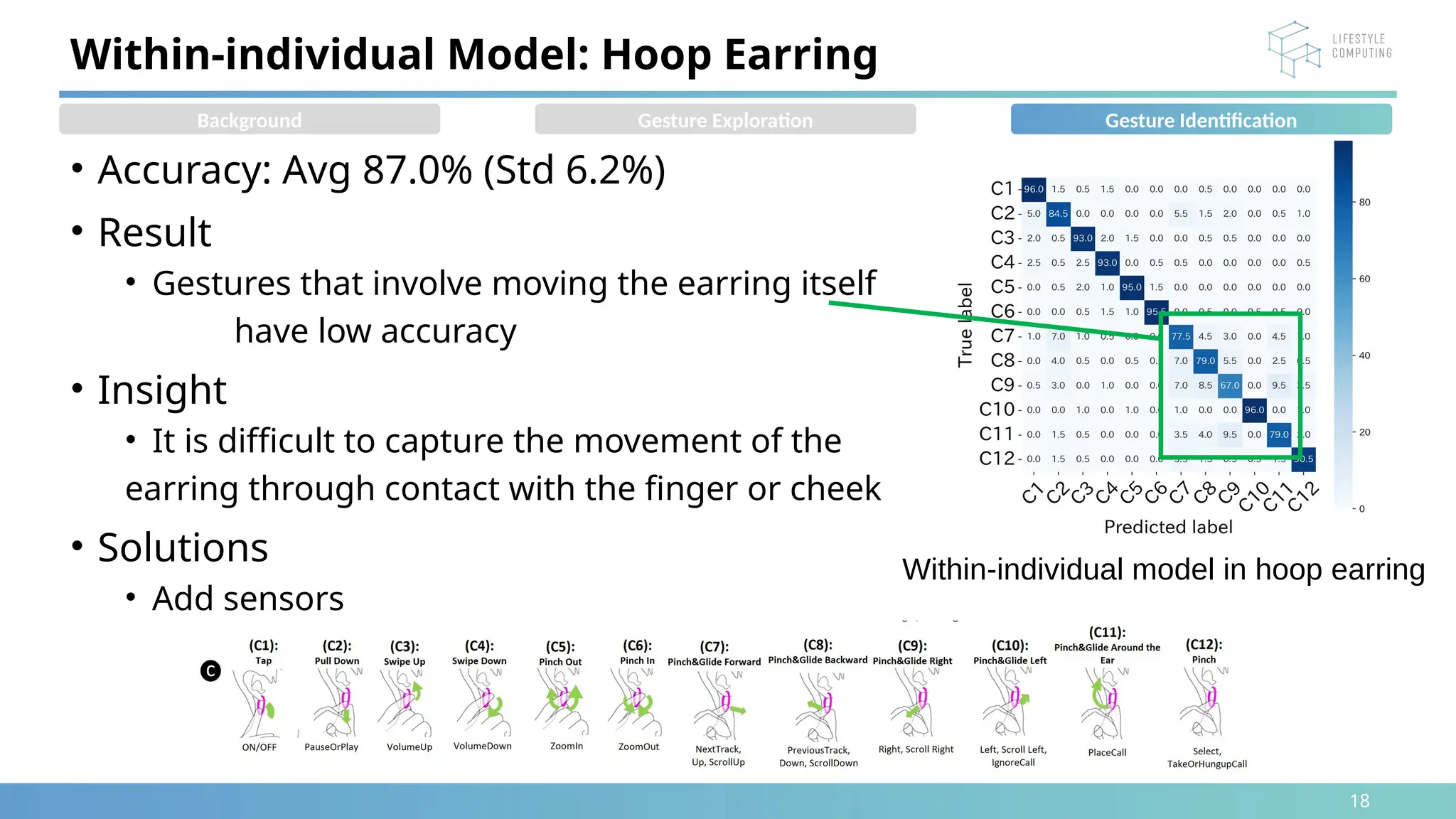

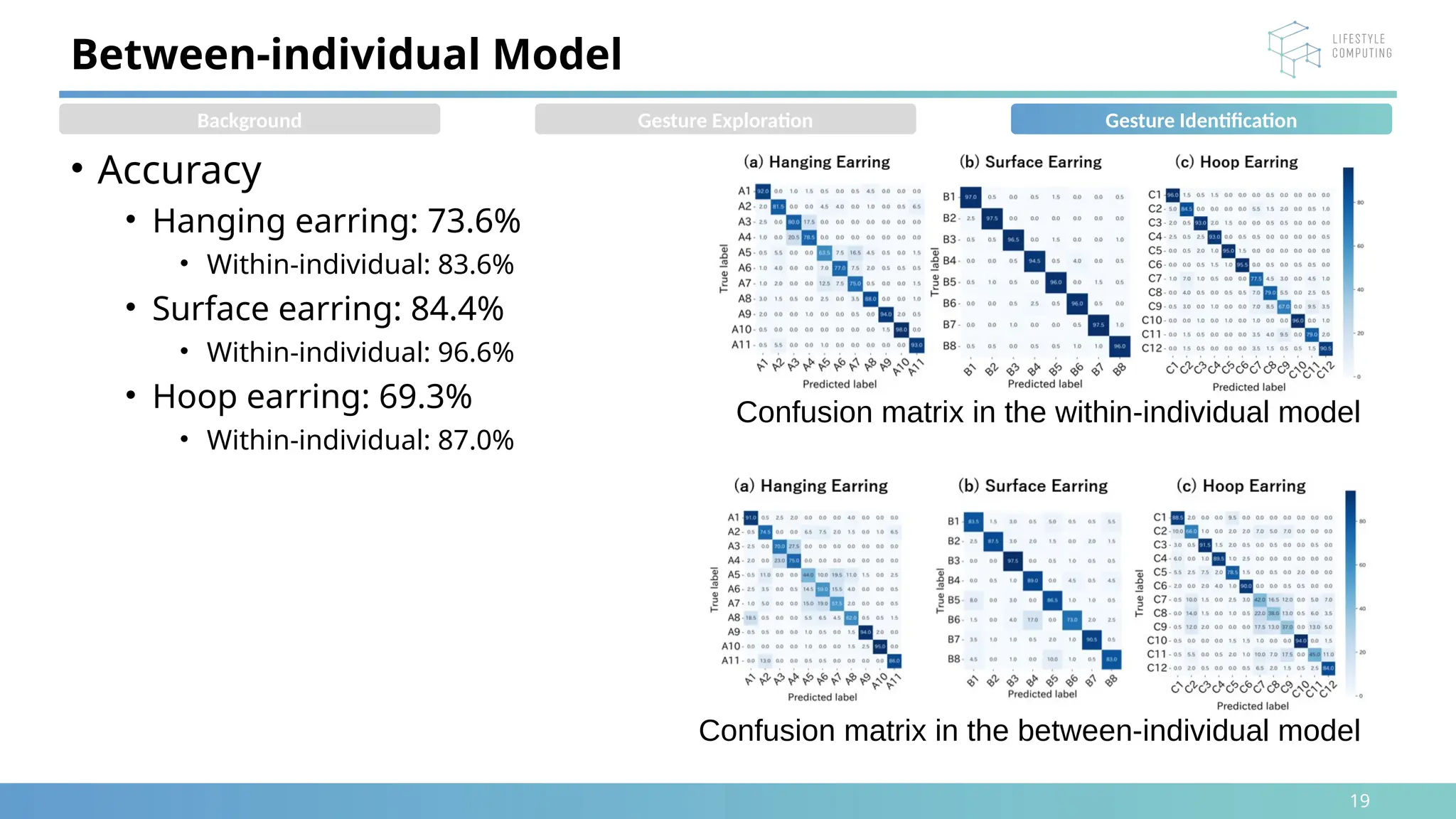

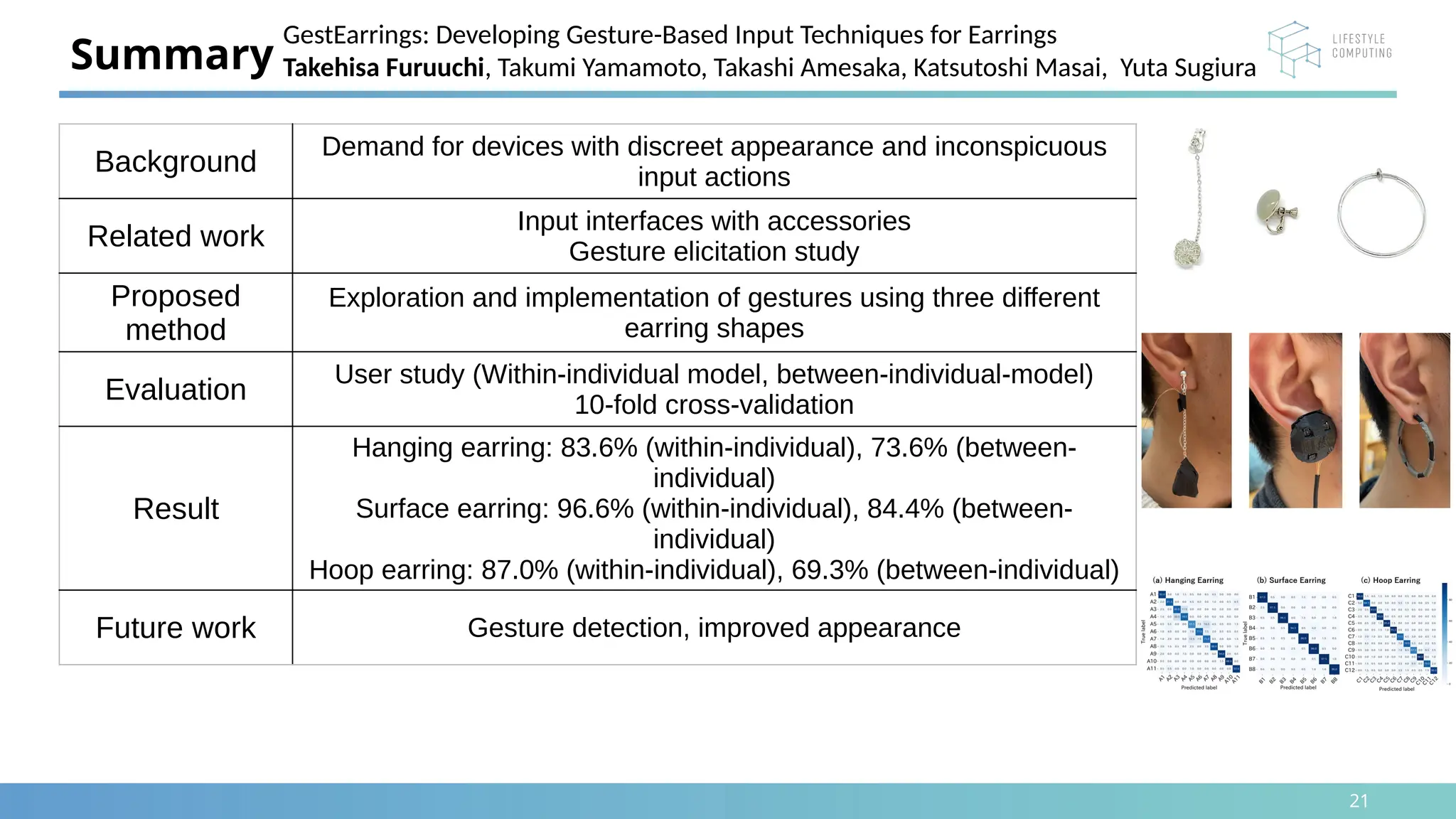

These slides present gesture-based input techniques for earrings, with the aim of discreet, unobtrusive wearable devices that fit into daily life. A study with three types of earrings identified user-preferred gestures, and hardware and software for gesture recognition were then implemented, achieving high accuracy. Future work includes refining gesture detection and improving the appearance of the devices.

![4

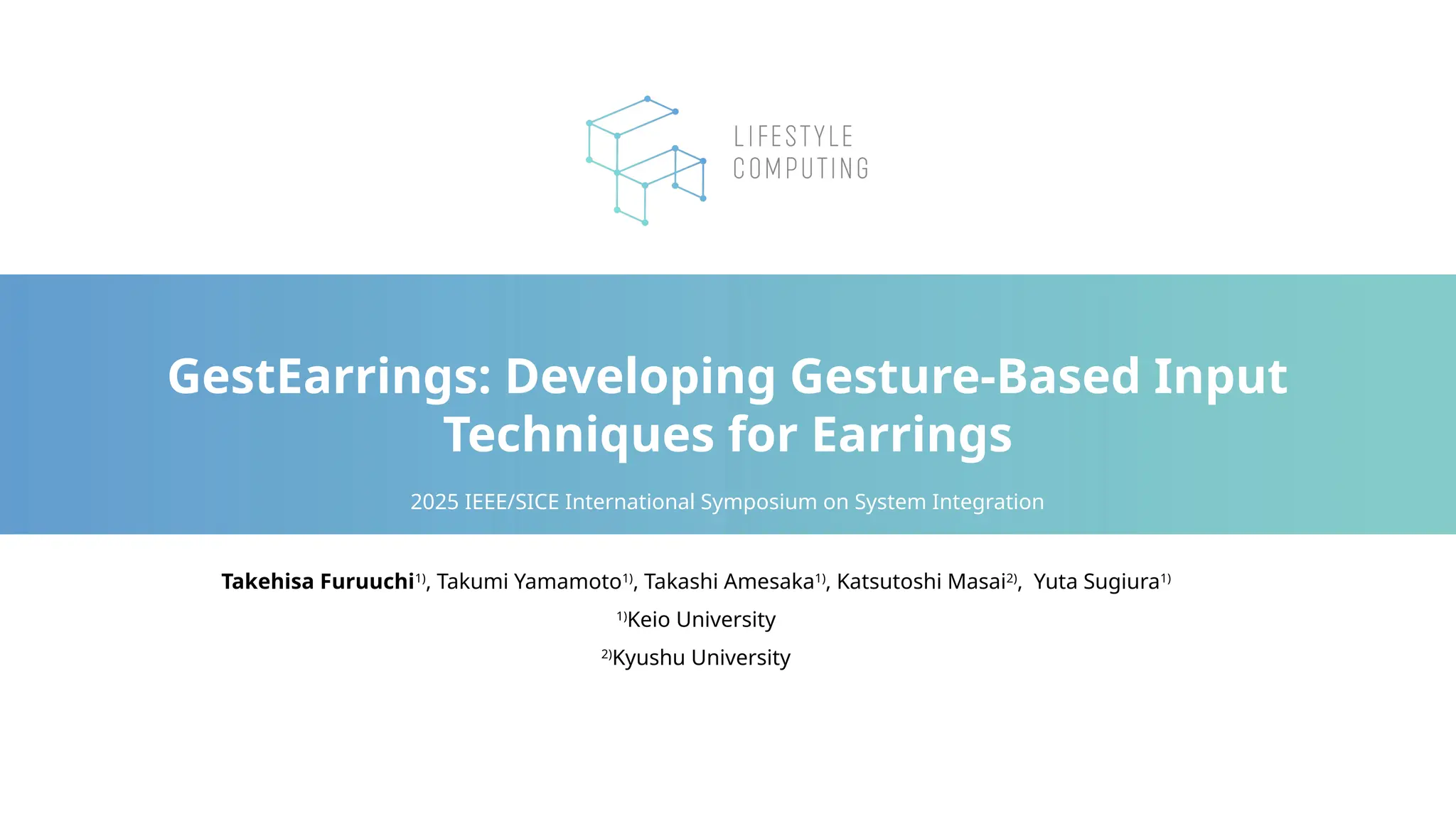

• Transforming existing items into interfaces

• The appearance of wearing the device is socially accepted

• Positioning of this study

• Gesture input by touching the earrings

• Discreet input operation

• Eyes-free input operation

• Diverse designs

[1] Christine Dierk, Scott Carter, Patrick Chiu, Tony Dunnigan, and Don Kimber. Use Your Head! Exploring Interaction Modalities for Hat Technologies. DIS '19: Proceedings of the 2019 on Designing Interactive Systems Conference.

[2] Katia Vega, Marcio Cunha, Hugo Fuks. Hairware: Conductive Hair Extensions as a Capacitive Touch Input Device. IUI '15 Companion: Companion Proceedings of the 20th International Conference on Intelligent User Interfaces.

[3] Takumi Yamamoto, Katsutoshi Masai, Anusha Withana, Yuta Sugiura. Masktrap: Designing and Identifying Gestures to Transform Mask Strap into an Input Interface. IUI '23: Proceedings of the 28th International Conference on Intelligent User Interfaces.

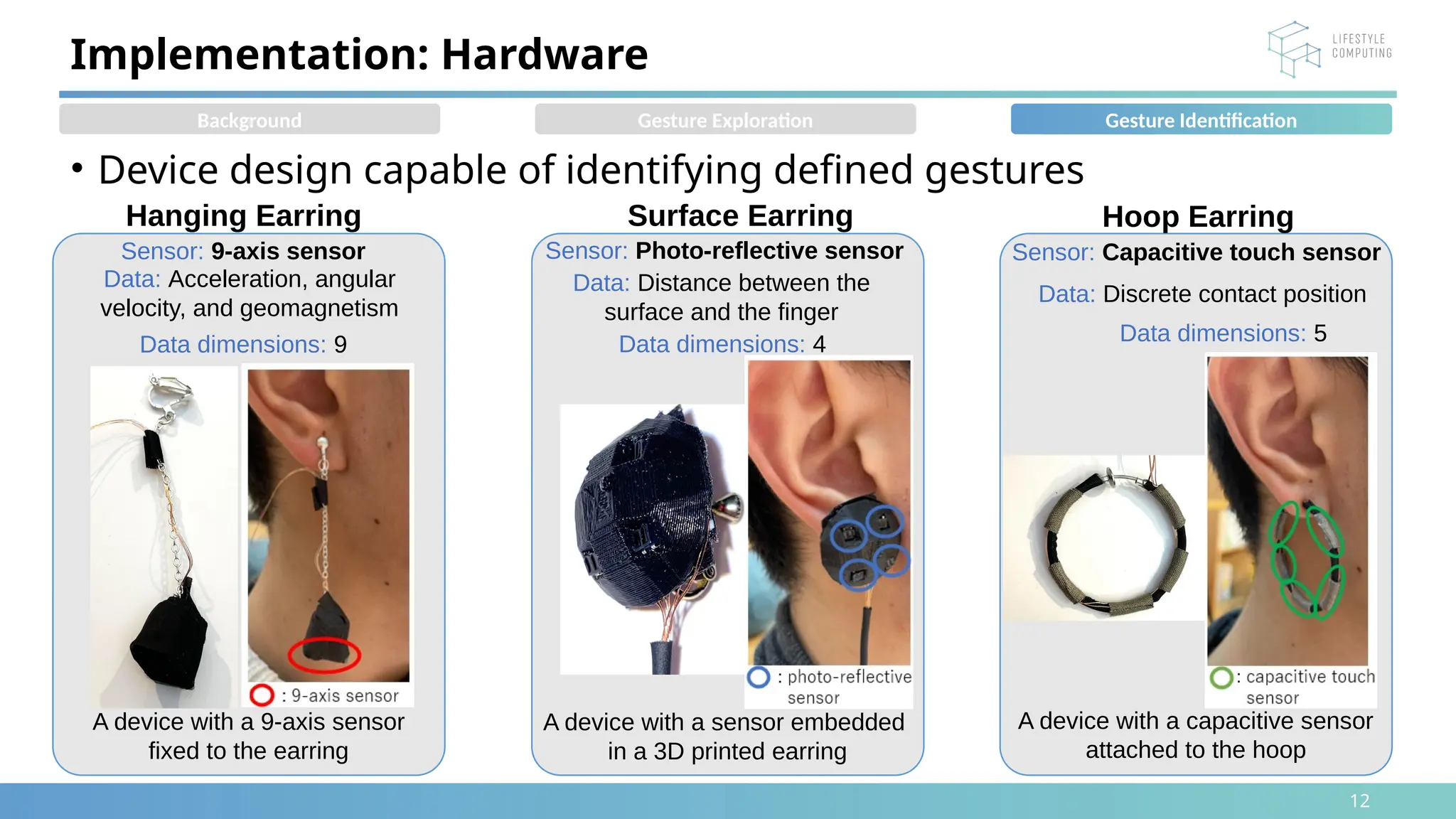

Related Work: Input Interfaces with Accessories

Interaction between the hat

and the wearer[1]

Hat Hair extension

Inputting information by

touching the extensions[2]

Mask

Inputting information by

moving the mask straps[3]

Background Gesture Exploration Gesture Identification](https://image.slidesharecdn.com/slideshare-250204090510-9ad0d443/75/GestEarrings-Developing-Gesture-Based-Input-Techniques-for-Earrings-4-2048.jpg)

![5

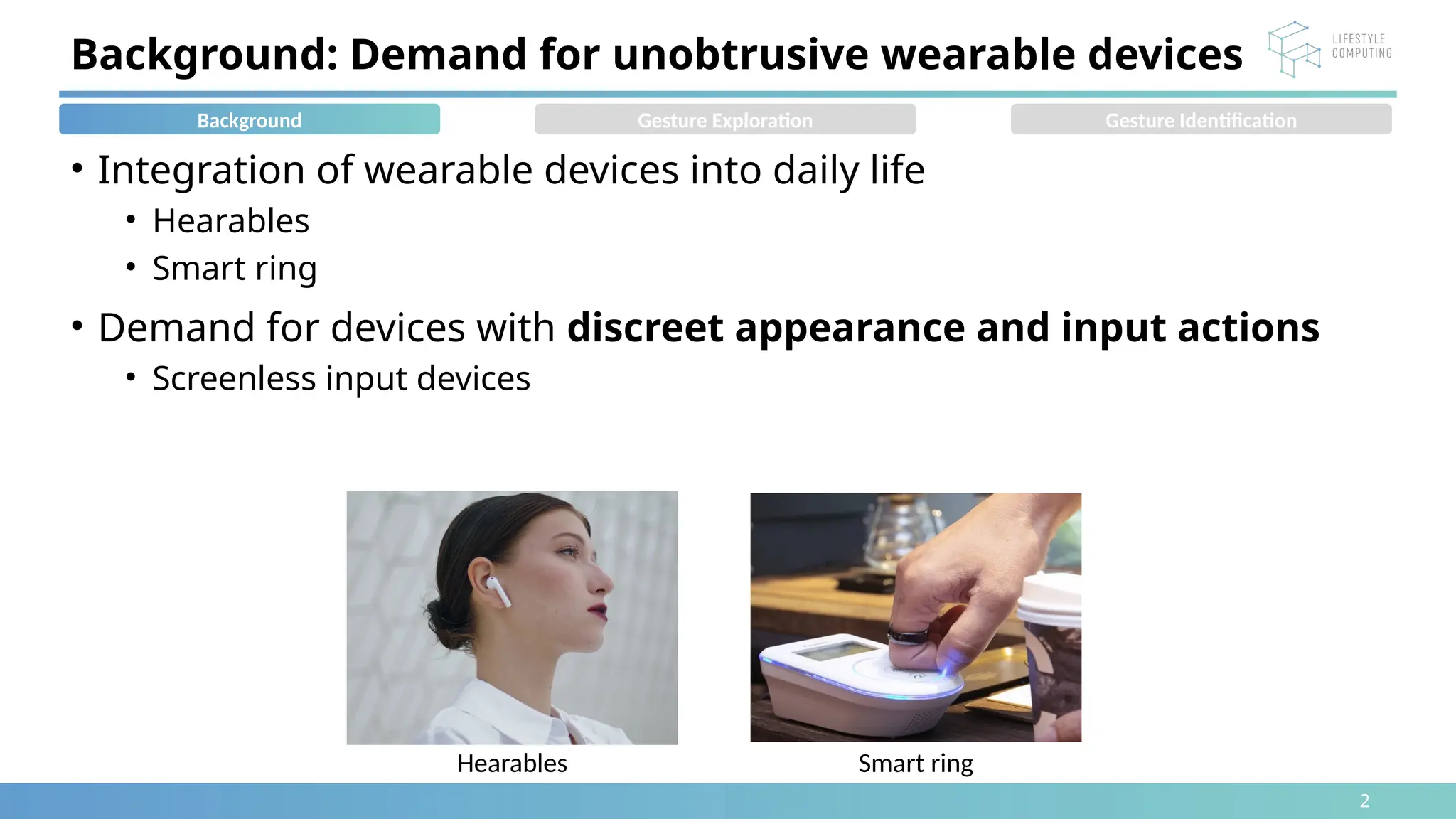

• Limitations

• Conspicuous facial gesture

input

[4] Kyosuke Futami, Kohei Oyama, and Kazuya Murao. Augmenting Ear Accessories for Facial Gesture Input Using Infrared Distance Sensor Array. Electronics 2022, 11(9), 1480; https://doi.org/10.3390/electronics11091480.

[5] Jatin Arora, Kartik Mathur, Aryan Saini, and Aman Parnami. Gehna: Exploring the Design Space of Jewelry as an Input Modality. CHI '19: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems

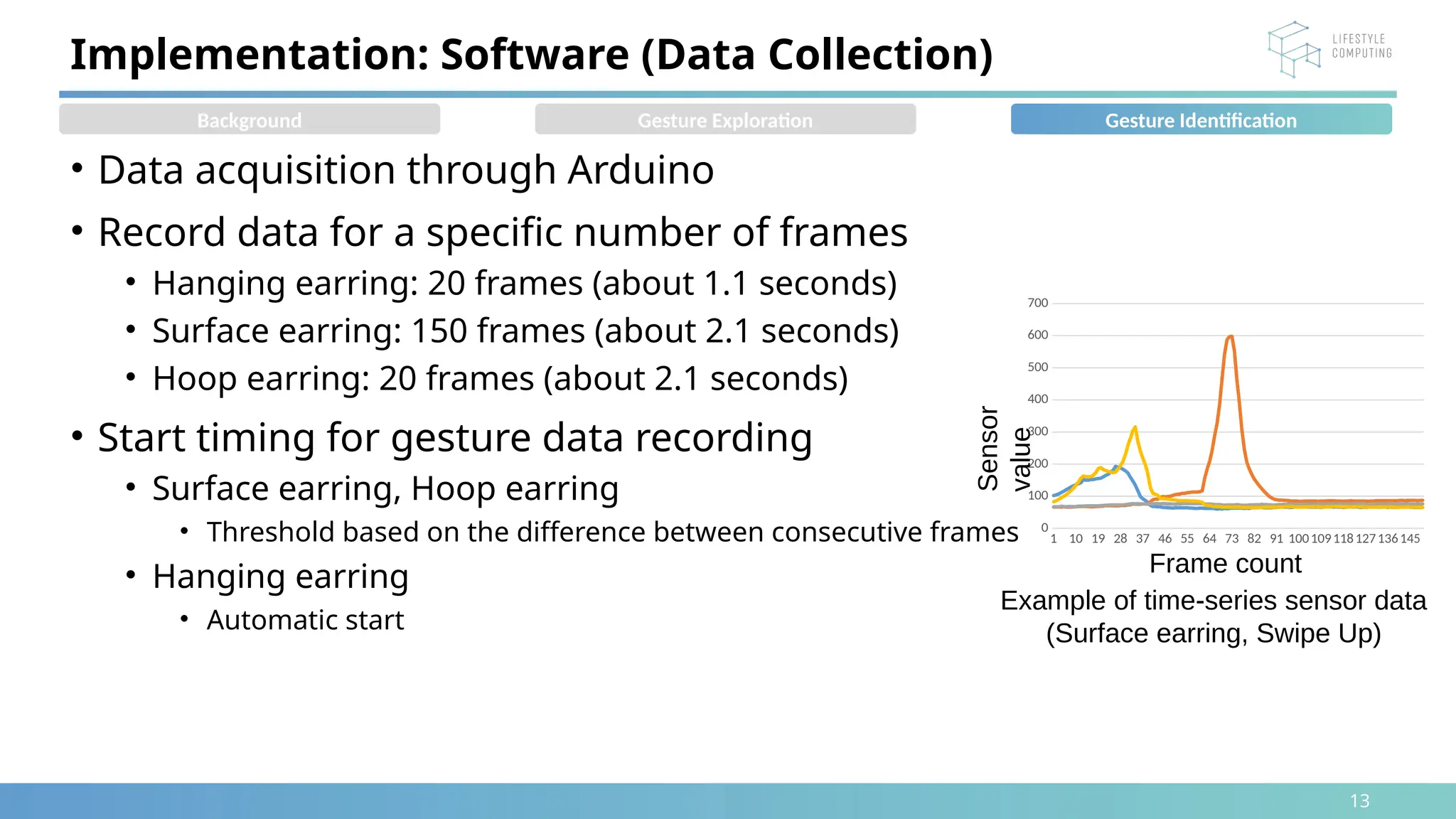

Related Work: Earring Device

Facial expression recognition

using infrared distance sensors[4]

Facial Gesture Gesture suggestions

Exploring the gesture design space[5]

• Limitations

• Gestures were designed by researchers,

not users

• Not implemented

Background Gesture Exploration Gesture Identification](https://image.slidesharecdn.com/slideshare-250204090510-9ad0d443/75/GestEarrings-Developing-Gesture-Based-Input-Techniques-for-Earrings-5-2048.jpg)

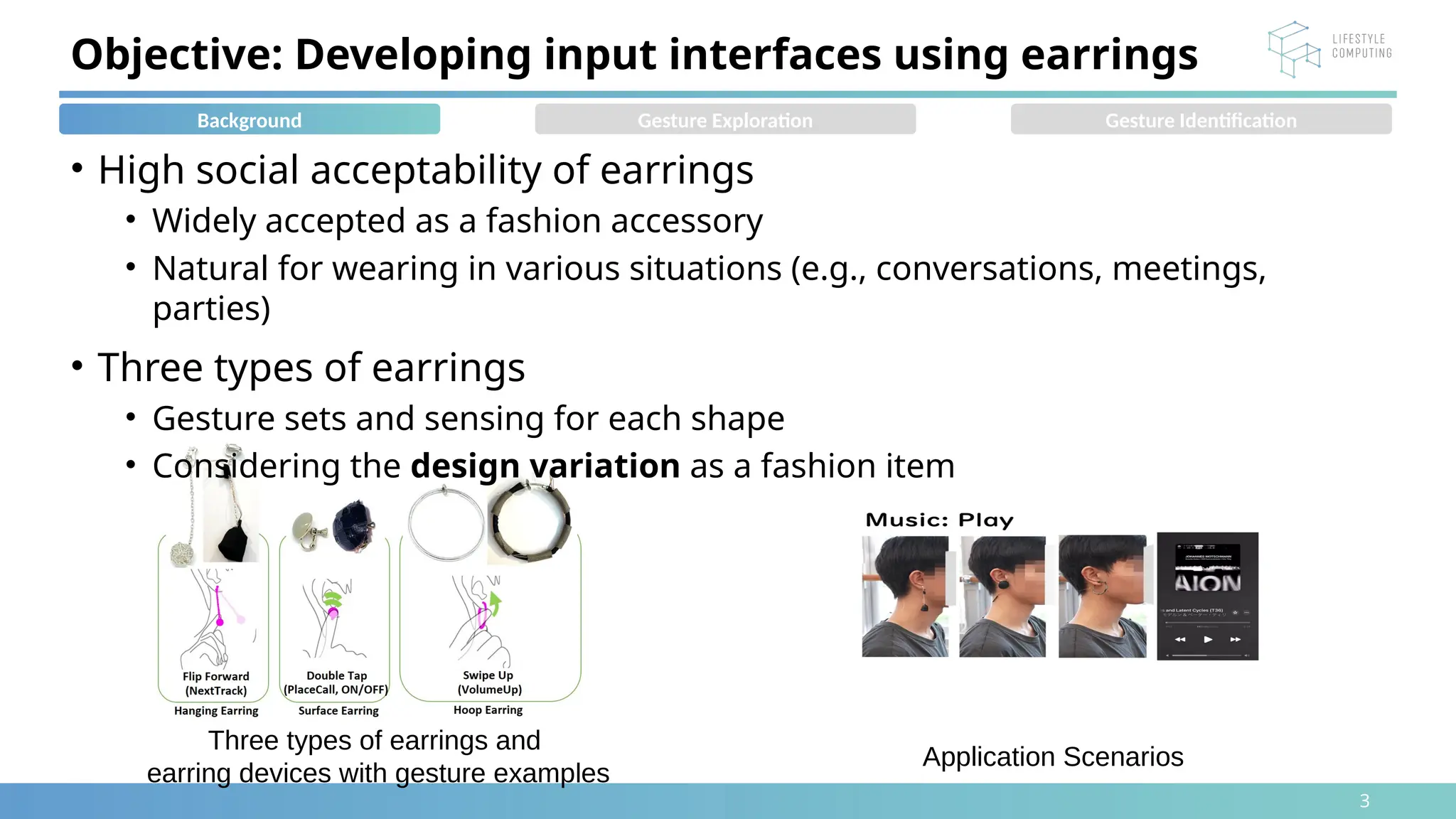

![7

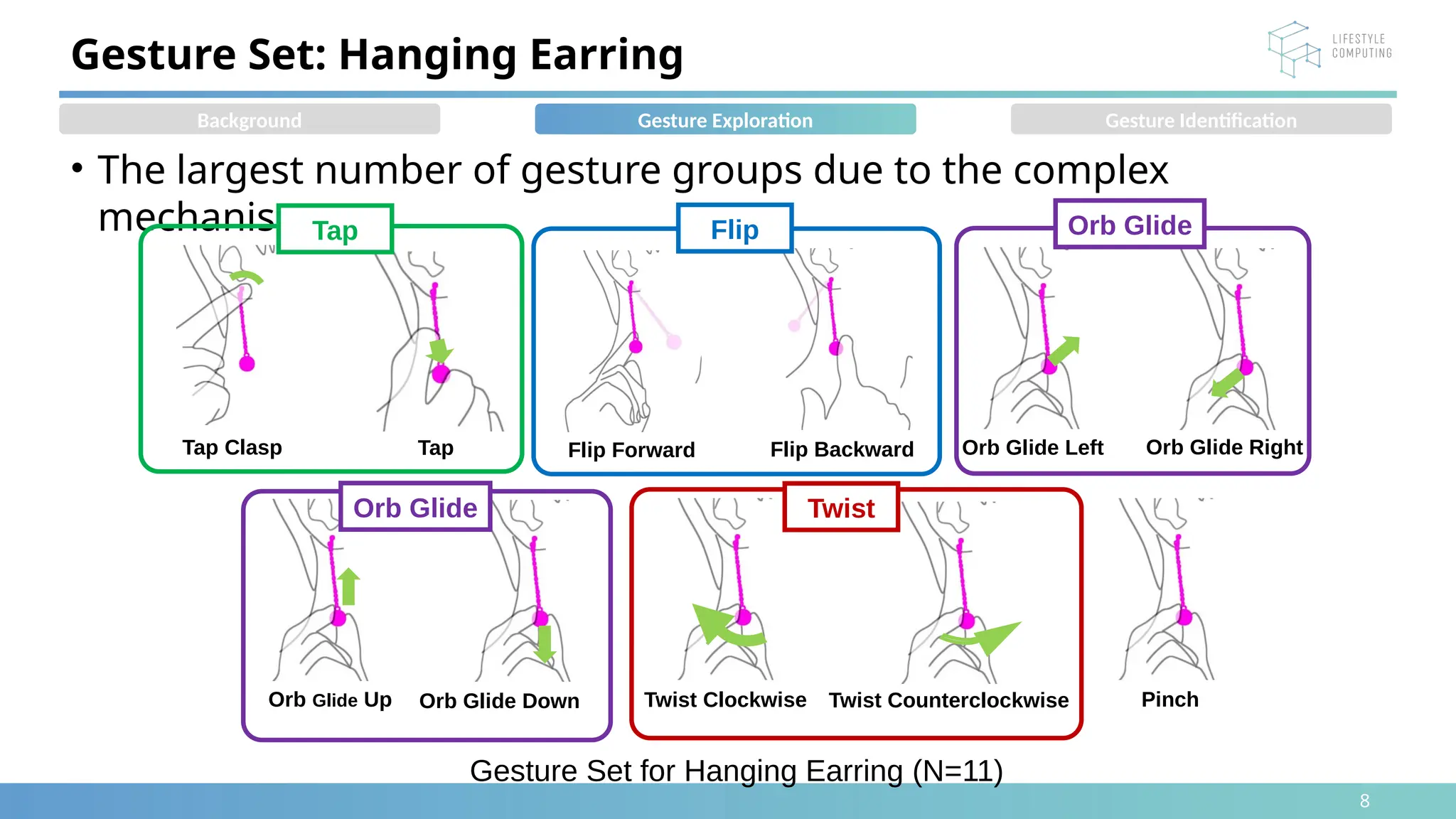

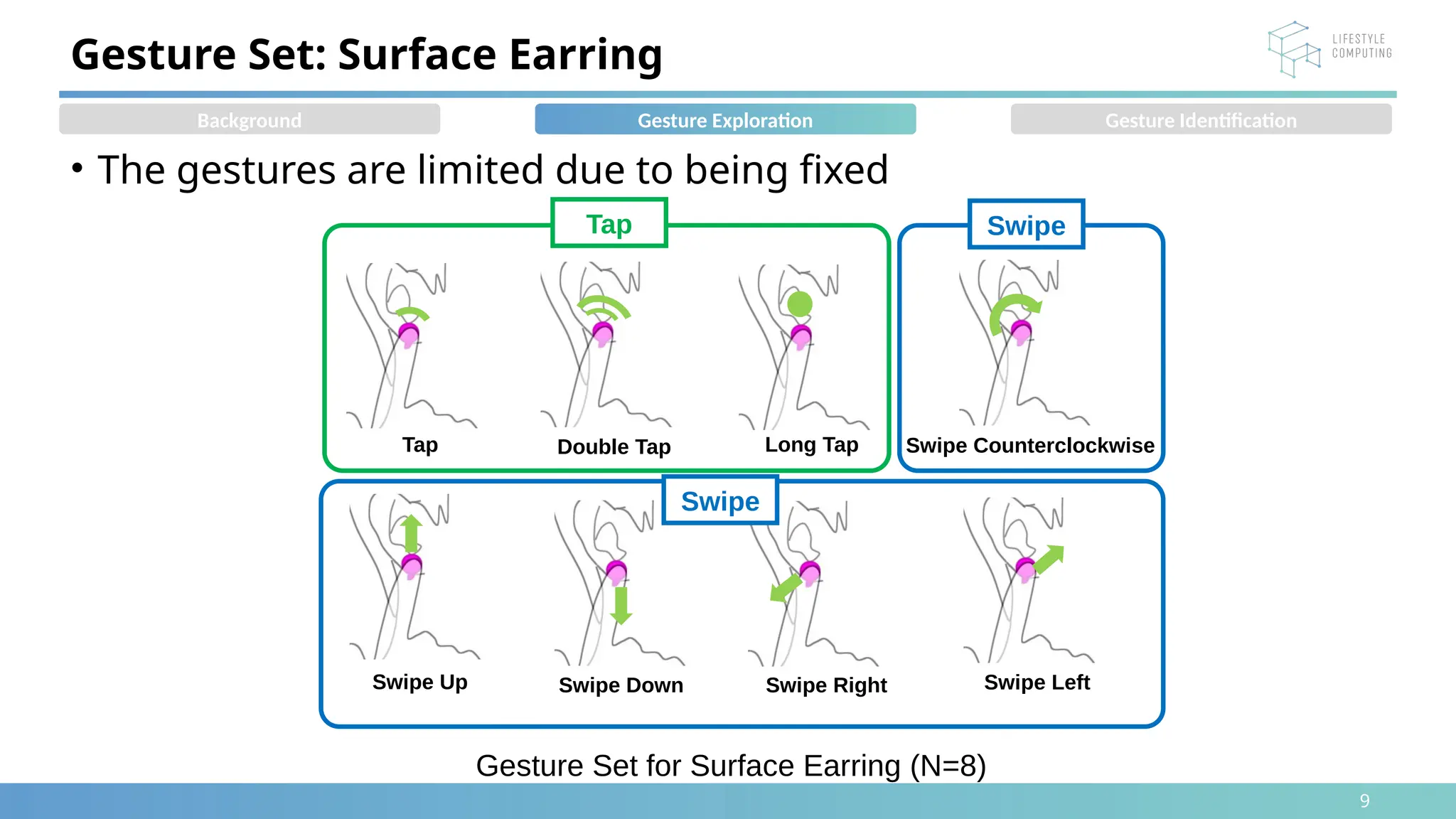

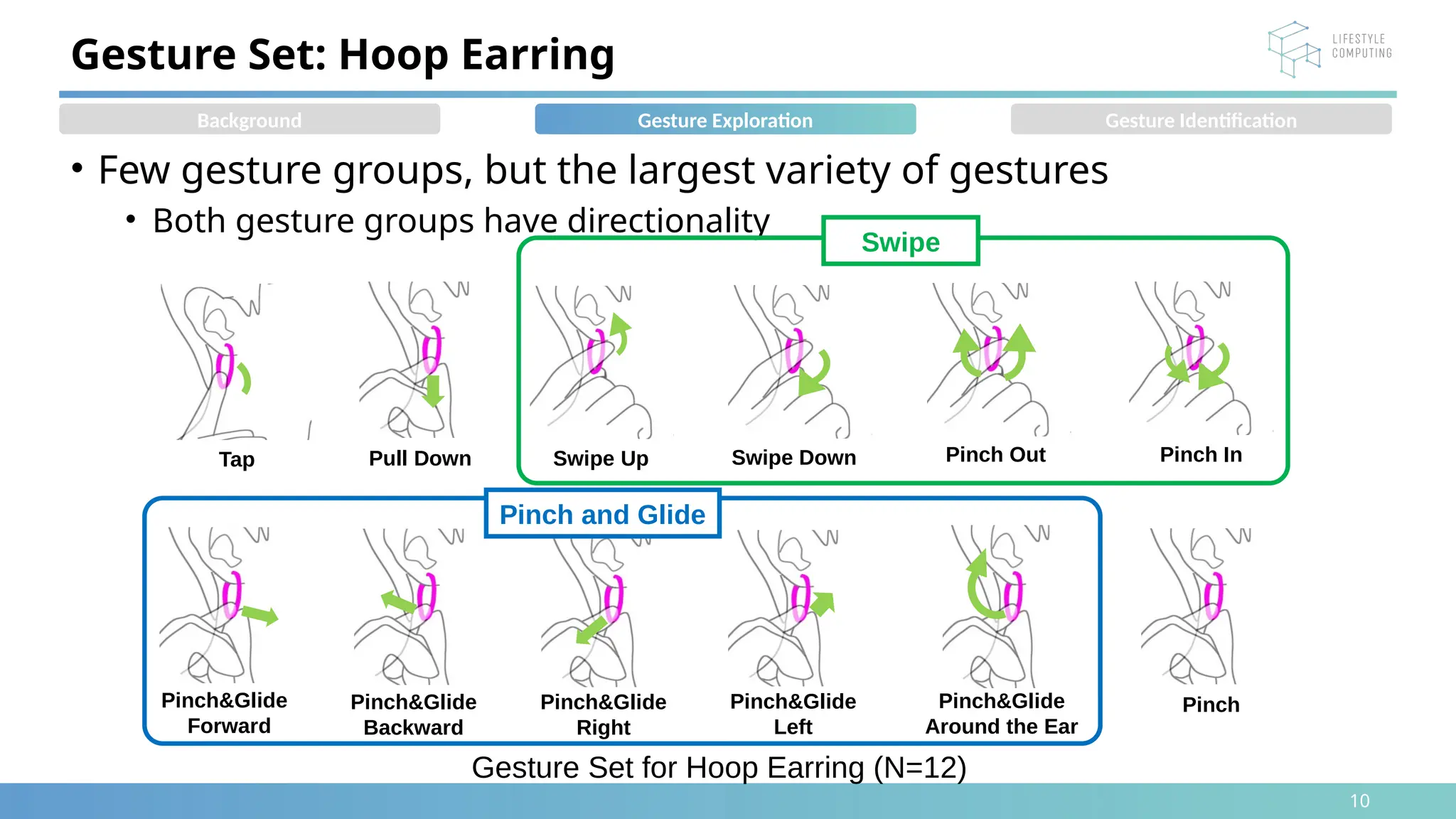

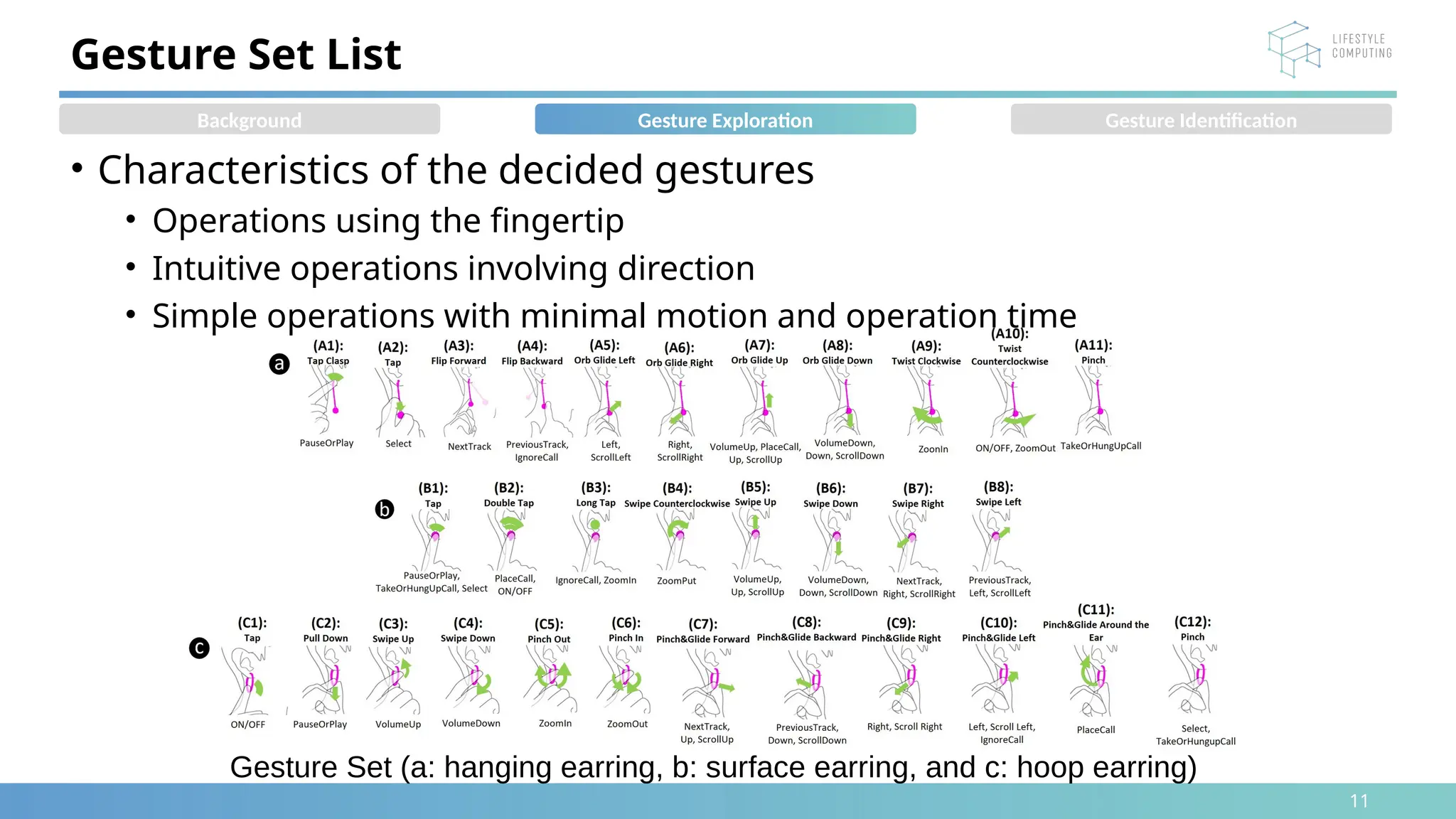

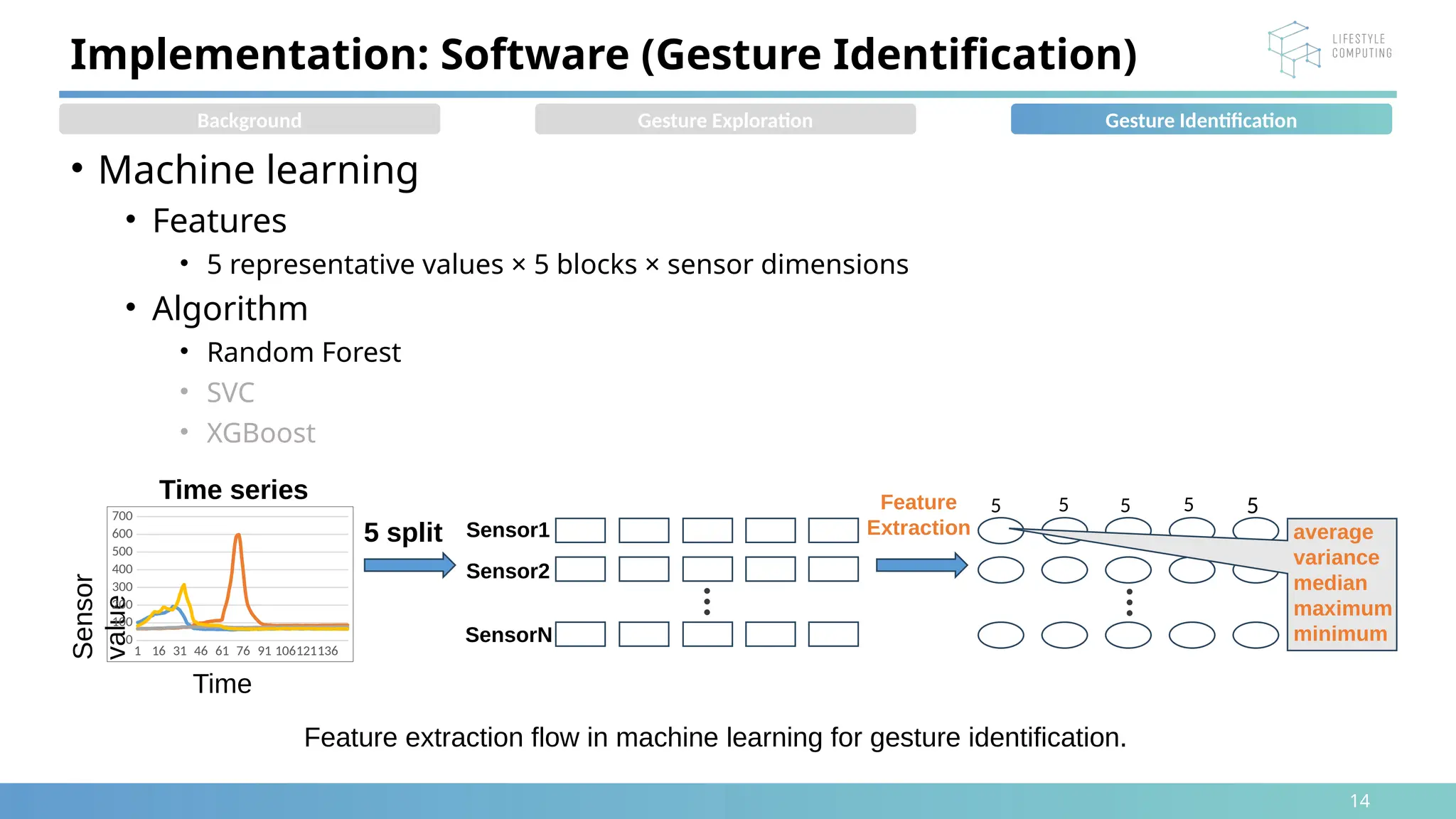

• Objective

• Survey of gestures defined by users

• Gesture devise by experiment participants

• Three types of earrings

• Final gesture set

• Decided by majority vote

Overview of Gesture Exploring Study

Background Gesture Exploration

Target actions

[Note] Approved by the Keio University Research Ethics Committee

The three different types of earrings used in the study

Hanging earring

Surface earring

Hoop earring

Participant information

Gesture Identification](https://image.slidesharecdn.com/slideshare-250204090510-9ad0d443/75/GestEarrings-Developing-Gesture-Based-Input-Techniques-for-Earrings-7-2048.jpg)

![24

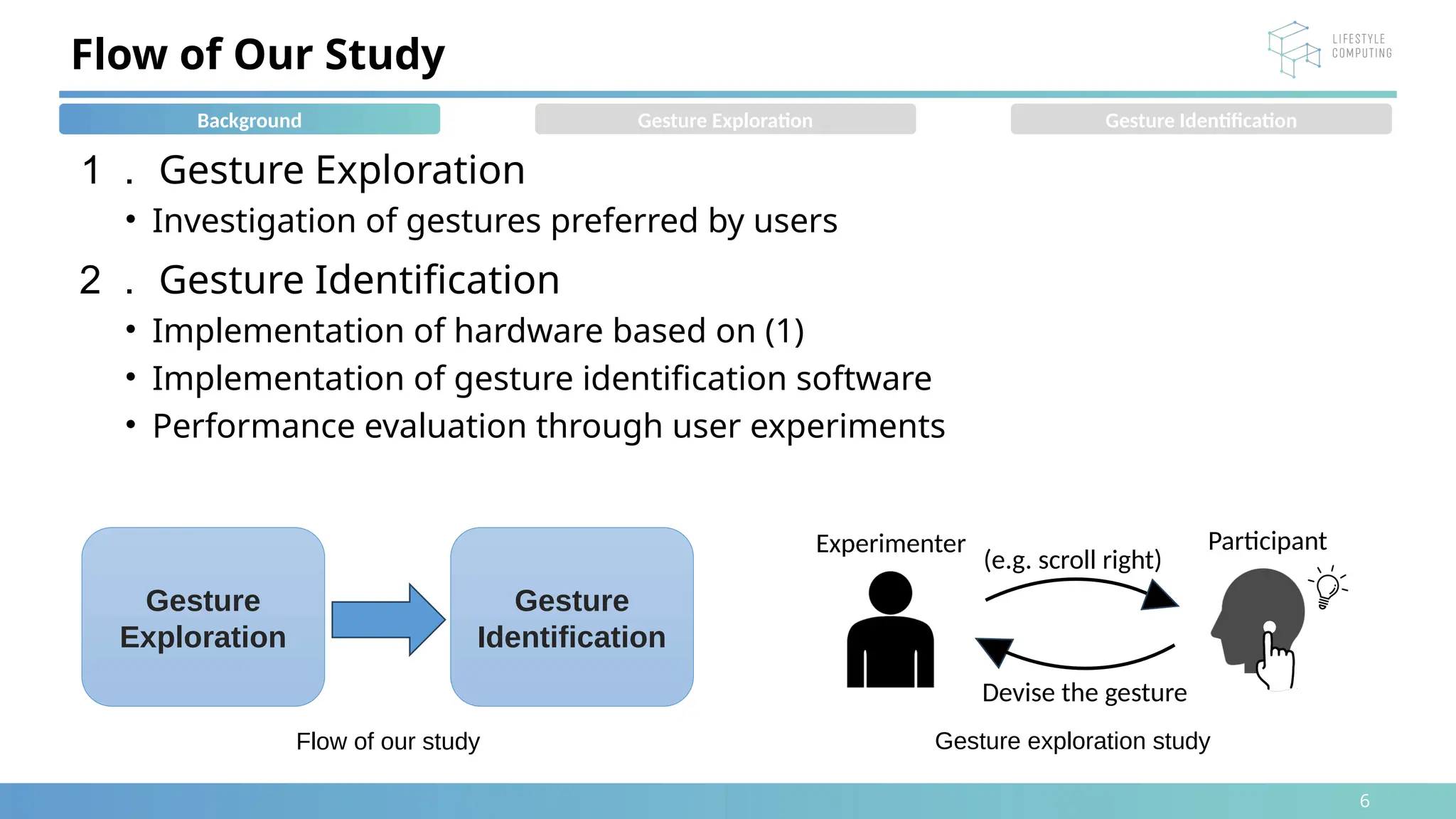

[6] J.-L. Perez-Medina, S. Villarreal, and J. Vanderdonckt, “A gesture ´ elicitation study of nose-based gestures,” Sensors, vol. 20, no. 24, 2020. [Online]. Available: https://www.mdpi.com/1424-8220/20/24/ 7118

[7] ] K. Masai, K. Kunze, D. Sakamoto, Y. Sugiura, and M. Sugimoto, “Face commands - user-defined facial gestures for smart glasses,” in 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2020, pp. 374–

386.

[8] Y.-C. Chen, C.-Y. Liao, S.-w. Hsu, D.-Y. Huang, and B.-Y. Chen, “Exploring user defined gestures for ear-based interactions,” Proc. ACM Hum.-Comput. Interact., vol. 4, no. ISS, nov 2020. [Online]. Available:

https://doi.org/10.1145/3427314

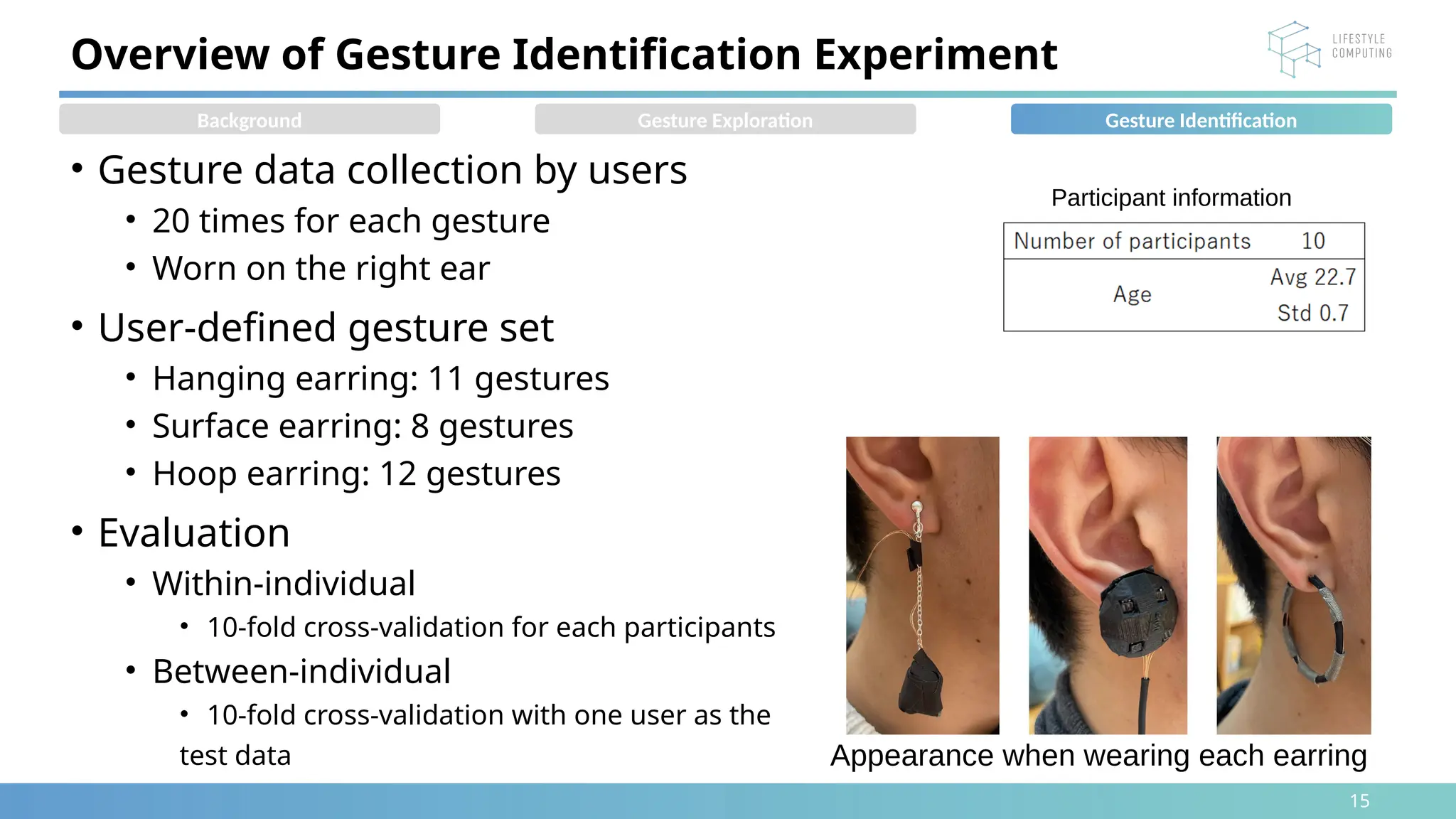

Related Work: Gesture Elicitation Study

• Gesture elicitation study

• Construction of user-defined gesture set

• Flow

1. The experimenter presents the task (e.g. place a call)

2. The participants design gestures for the task

3. The experimenter categorizes the gestures

4. The final gesture set is determined

Background Gesture Exploration Gesture Identification

Nose-based interaction[6]

Facial gestures for

smart glasses[7]

Ear-based interaction[8]](https://image.slidesharecdn.com/slideshare-250204090510-9ad0d443/75/GestEarrings-Developing-Gesture-Based-Input-Techniques-for-Earrings-24-2048.jpg)
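
Steps 3 and 4 of the flow above, together with the majority vote used to decide the final gesture set, could be sketched in Python roughly as follows. This is only an illustration, not the authors' analysis code: the function name `final_gesture_set`, the gesture labels, and the proposal counts are all hypothetical, and the slides do not say how ties were handled, so the sketch simply reports every tied gesture. Only the task "place a call" comes from the slides.

```python
from collections import Counter


def final_gesture_set(proposals):
    """Return the most frequently proposed gesture category per task.

    `proposals` maps a task name to the list of gesture categories that
    the experimenter assigned to the participants' proposals (step 3).
    Tied categories are all returned, sorted alphabetically (assumption:
    the slides do not describe a tie-breaking rule).
    """
    result = {}
    for task, categories in proposals.items():
        if not categories:
            continue  # no proposals were collected for this task
        counts = Counter(categories)
        top_count = max(counts.values())
        result[task] = sorted(g for g, c in counts.items() if c == top_count)
    return result


# Hypothetical categorized proposals (illustrative values, not study data).
proposals = {
    "place a call": ["tap", "tap", "pinch", "tap", "swipe up"],
    "next track": ["swipe forward", "tap", "swipe forward"],
}
gesture_set = final_gesture_set(proposals)
```

Returning a list per task keeps ties visible, so the experimenter can resolve them by hand instead of having the code pick a winner arbitrarily.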