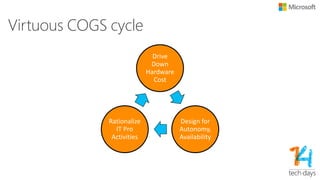

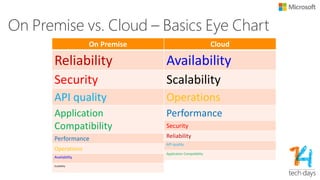

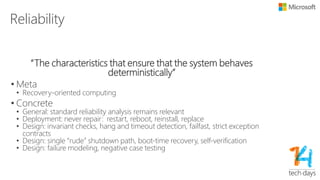

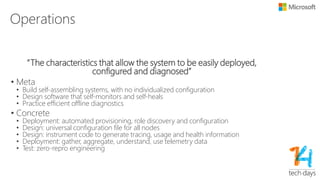

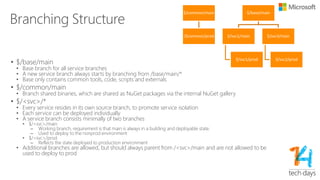

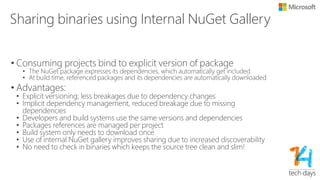

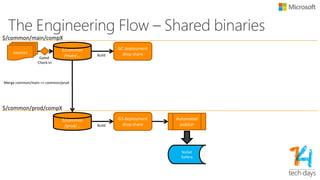

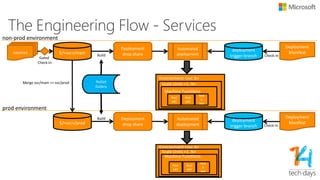

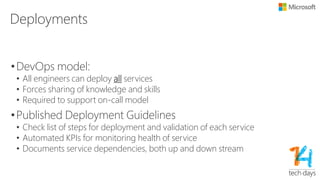

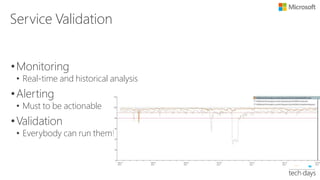

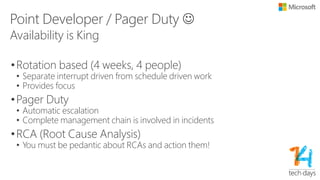

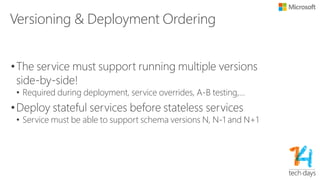

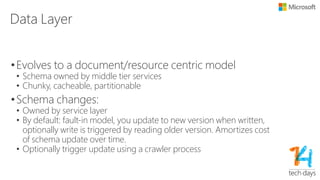

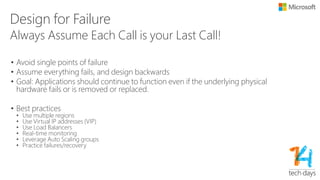

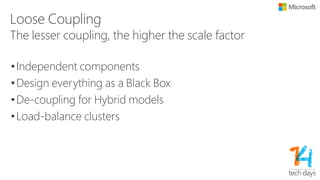

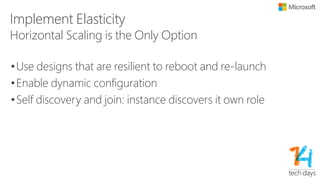

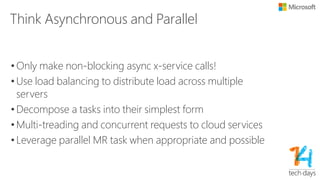

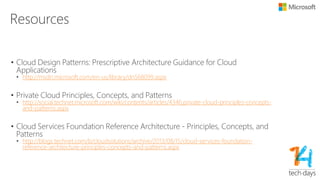

The document outlines principles for software design and cloud computing, emphasizing simplicity, reliability, and scalability in engineering practices. It promotes self-assembling systems and highlights the importance of efficient operations, including automated deployments and proactive maintenance strategies. Key best practices recommended include designing for failure, loose coupling, and effective versioning to support dynamic service environments.
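As one illustration of designing for failure, the following is a minimal sketch of retrying a call to an unreliable dependency with exponential backoff. It is not taken from the slides: the name `call_with_retry`, its parameters, and the choice of `ConnectionError` as the transient failure are assumptions made for this example.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying on ConnectionError with exponential backoff.

    Hypothetical helper for illustration: the delay doubles after each
    failed attempt (base_delay, 2 * base_delay, 4 * base_delay, ...).
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except ConnectionError:
            # Out of retries: surface the failure to the caller.
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so that the backoff schedule can be checked in tests without waiting. A production version would likely also add jitter and an upper bound on the delay.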