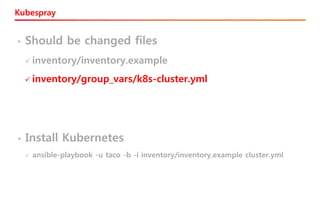

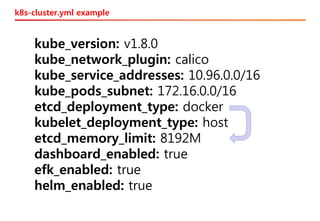

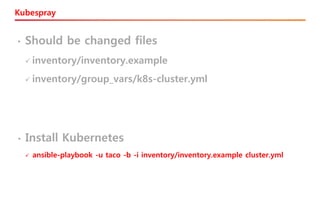

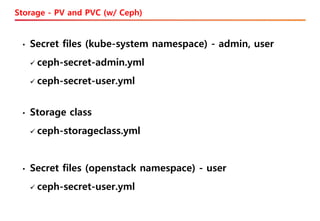

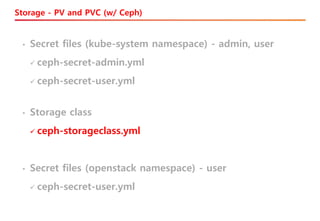

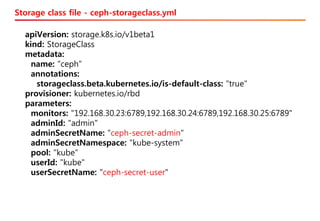

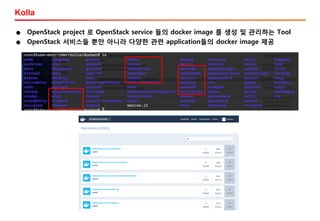

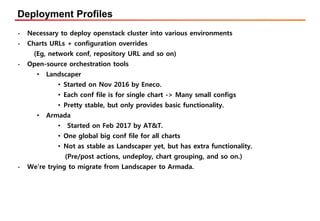

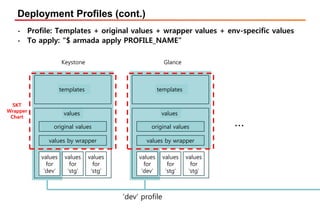

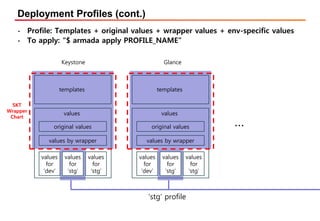

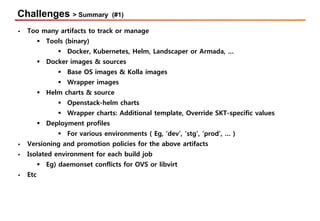

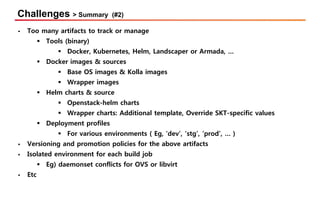

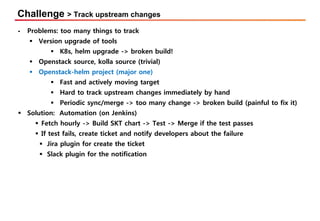

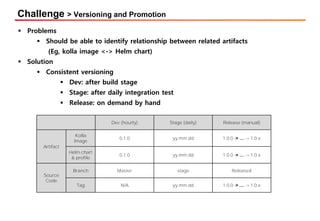

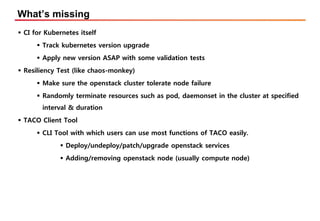

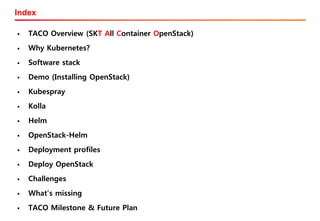

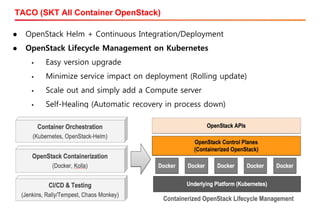

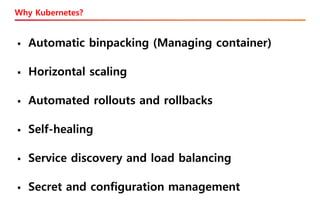

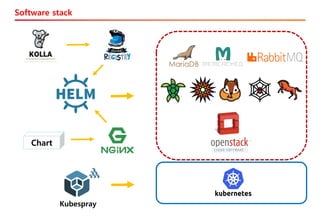

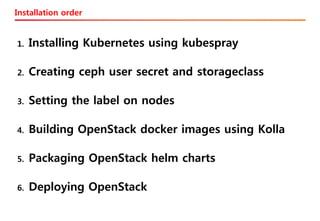

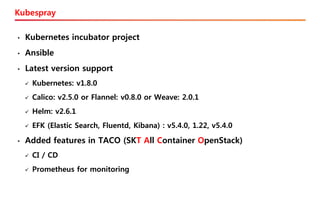

The presentation describes TACO, a project that runs and manages OpenStack services as containers on Kubernetes. It covers the benefits of using Kubernetes, the software stack, the installation process, the challenges encountered, and future milestones, including production deployment and continuous improvement. It also addresses the need to automate version and artifact management and to ensure system resiliency.

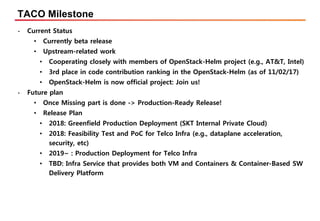

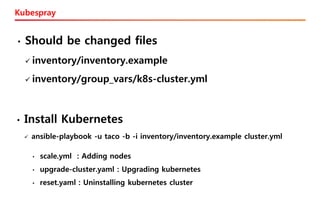

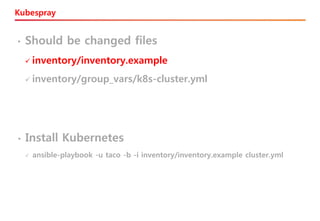

![Inventory example

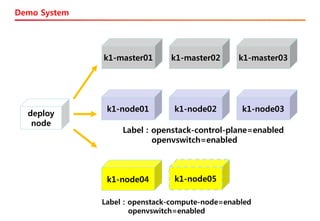

k1-master01 ansible_port=22 ansible_host=k1-master01 ip=192.168.30.13

k1-master02 ansible_port=22 ansible_host=k1-master02 ip=192.168.30.14

k1-master03 ansible_port=22 ansible_host=k1-master03 ip=192.168.30.15

k1-node01 ansible_port=22 ansible_host=k1-node01 ip=192.168.30.12

k1-node02 ansible_port=22 ansible_host=k1-node02 ip=192.168.30.17

k1-node03 ansible_port=22 ansible_host=k1-node03 ip=192.168.30.18

k1-node04 ansible_port=22 ansible_host=k1-node04 ip=192.168.30.21

[etcd]

k1-master01

k1-master02

k1-master03

[kube-master]

k1-master01

k1-master02

k1-master03

[kube-node]

k1-node01

k1-node02

k1-node03

k1-node04

[k8s-cluster:children]

kube-master

kube-node](https://image.slidesharecdn.com/fromkubernetestoopenstack171103-180223101420/85/From-Kubernetes-to-OpenStack-in-Sydney-12-320.jpg)
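To make the group structure of the inventory above easier to see, here is a minimal Python sketch that reads the same text and resolves the `k8s-cluster` parent group to its hosts. It is an illustration only, not Ansible's own parser: the function names `parse_inventory` and `members` are hypothetical, and the sketch assumes the small INI subset used on the slide (host lines with `key=value` variables, plain groups, and `:children` groups).

```python
from collections import defaultdict

# Inventory text copied from the slide.
INVENTORY = """\
k1-master01 ansible_port=22 ansible_host=k1-master01 ip=192.168.30.13
k1-master02 ansible_port=22 ansible_host=k1-master02 ip=192.168.30.14
k1-master03 ansible_port=22 ansible_host=k1-master03 ip=192.168.30.15
k1-node01 ansible_port=22 ansible_host=k1-node01 ip=192.168.30.12
k1-node02 ansible_port=22 ansible_host=k1-node02 ip=192.168.30.17
k1-node03 ansible_port=22 ansible_host=k1-node03 ip=192.168.30.18
k1-node04 ansible_port=22 ansible_host=k1-node04 ip=192.168.30.21

[etcd]
k1-master01
k1-master02
k1-master03

[kube-master]
k1-master01
k1-master02
k1-master03

[kube-node]
k1-node01
k1-node02
k1-node03
k1-node04

[k8s-cluster:children]
kube-master
kube-node
"""


def parse_inventory(text):
    """Parse the INI subset used above (hypothetical helper, not Ansible's parser)."""
    hosts = {}                    # host name -> dict of host variables
    groups = defaultdict(list)    # group name -> member hosts
    children = defaultdict(list)  # parent group -> child groups
    section, is_children = None, False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        if line.startswith("[") and line.endswith("]"):
            name = line[1:-1]
            is_children = name.endswith(":children")
            section = name.rsplit(":", 1)[0] if is_children else name
            continue
        name, *pairs = line.split()
        if section is None:
            # Ungrouped host line: keep its key=value variables.
            hosts[name] = dict(p.split("=", 1) for p in pairs)
        elif is_children:
            children[section].append(name)
        else:
            groups[section].append(name)
            hosts.setdefault(name, {})
    return hosts, dict(groups), dict(children)


def members(group, groups, children):
    """Resolve a group to its hosts, following :children references."""
    found = list(groups.get(group, []))
    for child in children.get(group, []):
        for host in members(child, groups, children):
            if host not in found:
                found.append(host)
    return found


hosts, groups, children = parse_inventory(INVENTORY)
cluster = members("k8s-cluster", groups, children)

# etcd needs a majority of members to agree, so an odd member count is the usual choice.
etcd_count_is_odd = len(groups["etcd"]) % 2 == 1

for host in cluster:
    print(host, hosts[host].get("ip"))
```

With the inventory from the slide, `k8s-cluster` resolves to all seven hosts: the three masters, which also form the `etcd` group, followed by the four worker nodes. For a real deployment, Ansible's own `ansible-inventory` command is the authoritative way to inspect an inventory file.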