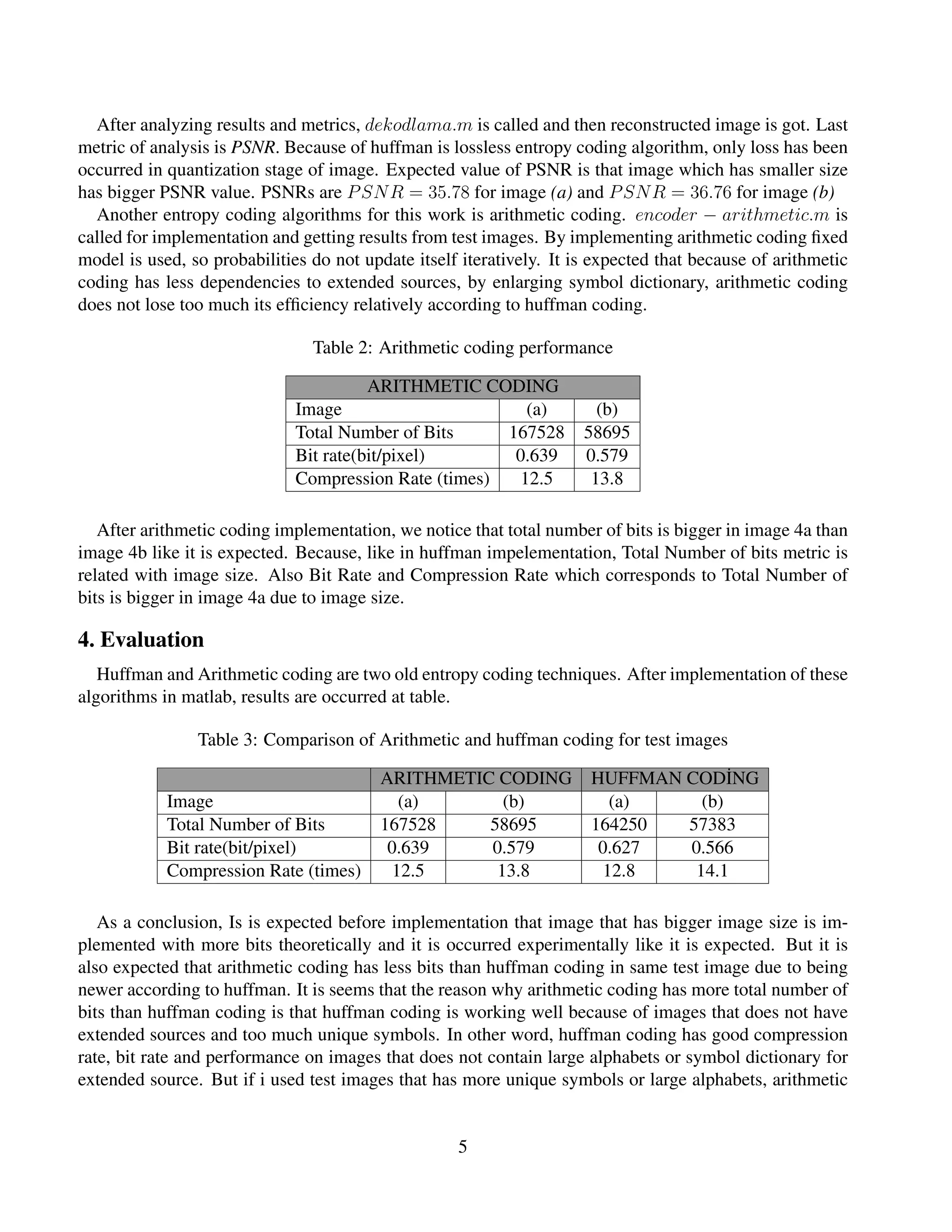

This document discusses intra-frame compression using Huffman and arithmetic entropy coding techniques. It implements both techniques in MATLAB on two test images and evaluates the results. Huffman coding performed slightly better, with lower bit rates and higher compression ratios. However, arithmetic coding would likely perform better on images with larger symbol alphabets, and it also allows an adaptive probability model. Both techniques achieved high efficiency for the test images.
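As a rough illustration of the quantities compared here, the following Python sketch builds Huffman code lengths from a symbol histogram and reports the entropy, the average code length in bits per symbol, and the coding efficiency. It is not the report's MATLAB code: the function names, the toy pixel data, and the definition of efficiency as entropy divided by average code length are assumptions made for the example.

```python
import heapq
import math
from collections import Counter


def huffman_code_lengths(counts):
    """Return {symbol: code length in bits} for a Huffman code built from counts."""
    if len(counts) == 1:
        # A single-symbol source still needs one bit per symbol.
        return {next(iter(counts)): 1}
    # Heap entries: (total count, unique tie-breaker, {symbol: depth so far}).
    heap = [(c, i, {s: 0}) for i, (s, c) in enumerate(counts.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        c1, _, d1 = heapq.heappop(heap)
        c2, _, d2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (c1 + c2, tie, merged))
        tie += 1
    return heap[0][2]


def coding_stats(pixels):
    """Entropy, average Huffman code length (bits/symbol), and efficiency."""
    counts = Counter(pixels)
    total = sum(counts.values())
    lengths = huffman_code_lengths(counts)
    entropy = -sum(c / total * math.log2(c / total) for c in counts.values())
    avg_len = sum(counts[s] / total * lengths[s] for s in counts)
    # Assumed definition: efficiency = entropy / average code length.
    efficiency = entropy / avg_len
    return entropy, avg_len, efficiency


# Toy "image": 8 pixels with a skewed grey-level histogram (made-up data).
pixels = [0, 0, 0, 0, 1, 1, 2, 3]
entropy, avg_len, efficiency = coding_stats(pixels)
print(f"entropy={entropy:.3f} bits, average length={avg_len:.3f} bits, "
      f"efficiency={efficiency:.3f}")
```

For a real image, `pixels` would be the flattened grey levels. The compression ratio would then follow from the original bits per pixel divided by `avg_len`, ignoring the cost of storing the code table.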

![coding would be more useful and would perform better than Huffman coding. Another advantage of arithmetic coding over Huffman coding is the option of choosing an adaptive model, which is not possible with Huffman coding. In other words, the adaptive model of arithmetic coding lets the symbol probabilities vary over time, while Huffman coding provides only fixed symbol probabilities.
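The difference between fixed and time-varying symbol probabilities can be sketched in Python as follows. Instead of a full arithmetic coder, the example computes the ideal code length (the sum of -log2 p over the sequence), which an arithmetic coder approaches closely. The function names, the count-based update rule with every count starting at 1, and the toy sequence are assumptions for illustration, not the report's method.

```python
import math


def static_cost(symbols, probs):
    """Ideal code length in bits when the probability table never changes."""
    return sum(-math.log2(probs[s]) for s in symbols)


def adaptive_cost(symbols, alphabet):
    """Ideal code length in bits when probabilities are re-estimated per symbol."""
    # Assumed model: every symbol starts with a count of 1, so none has p = 0.
    counts = {s: 1 for s in alphabet}
    total = len(alphabet)
    bits = 0.0
    for s in symbols:
        # Code the symbol with the current estimate, then update the model.
        bits += -math.log2(counts[s] / total)
        counts[s] += 1
        total += 1
    return bits


# Toy sequence whose statistics change halfway through (made-up data).
sequence = "aaaaaaaabbbbbbbb"
fixed_table = {"a": 0.5, "b": 0.5}
print(f"static model:   {static_cost(sequence, fixed_table):.2f} bits")
print(f"adaptive model: {adaptive_cost(sequence, 'ab'):.2f} bits")
```

Because the adaptive model is updated only from symbols already coded, a decoder can repeat the same updates and stay synchronised without receiving a probability table. Which model yields fewer bits depends on the data and on how the counts are updated.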

References

[1] Ian H. Witten, Radford M. Neal, and John G. Cleary. Arithmetic Coding for Data Compression. Communications of the ACM, 30(6):520, 1987.

[2] Rafael C. Gonzalez, Richard E. Woods, and S. Eddins. Digital Image Processing Using MATLAB, 2nd Edition. Gatesmark Publishing, 2009.

6](https://image.slidesharecdn.com/c12414d5-0ed6-4b83-b4b7-93f391ba1b51-160729112455/75/first_assignment_Report-6-2048.jpg)