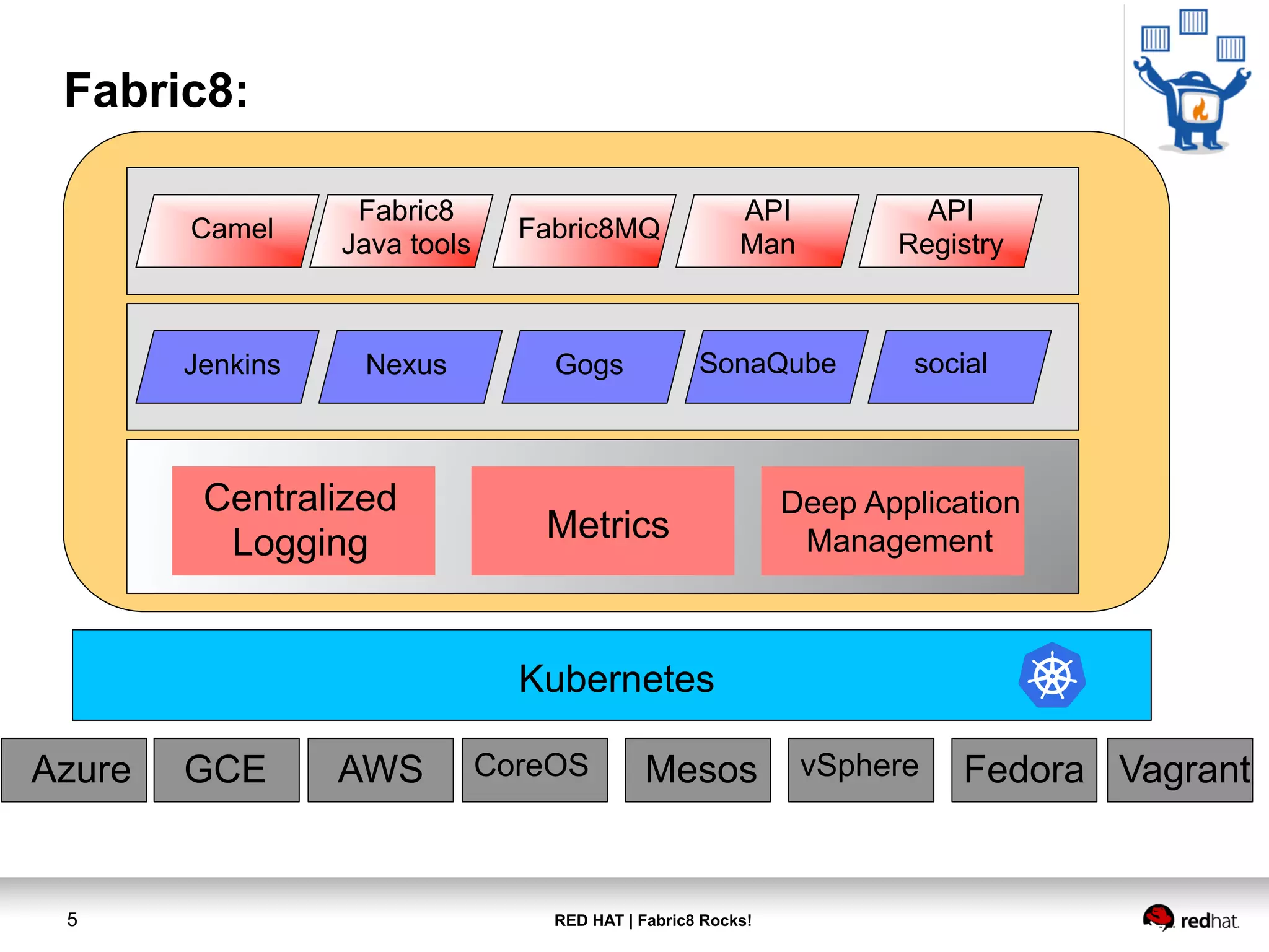

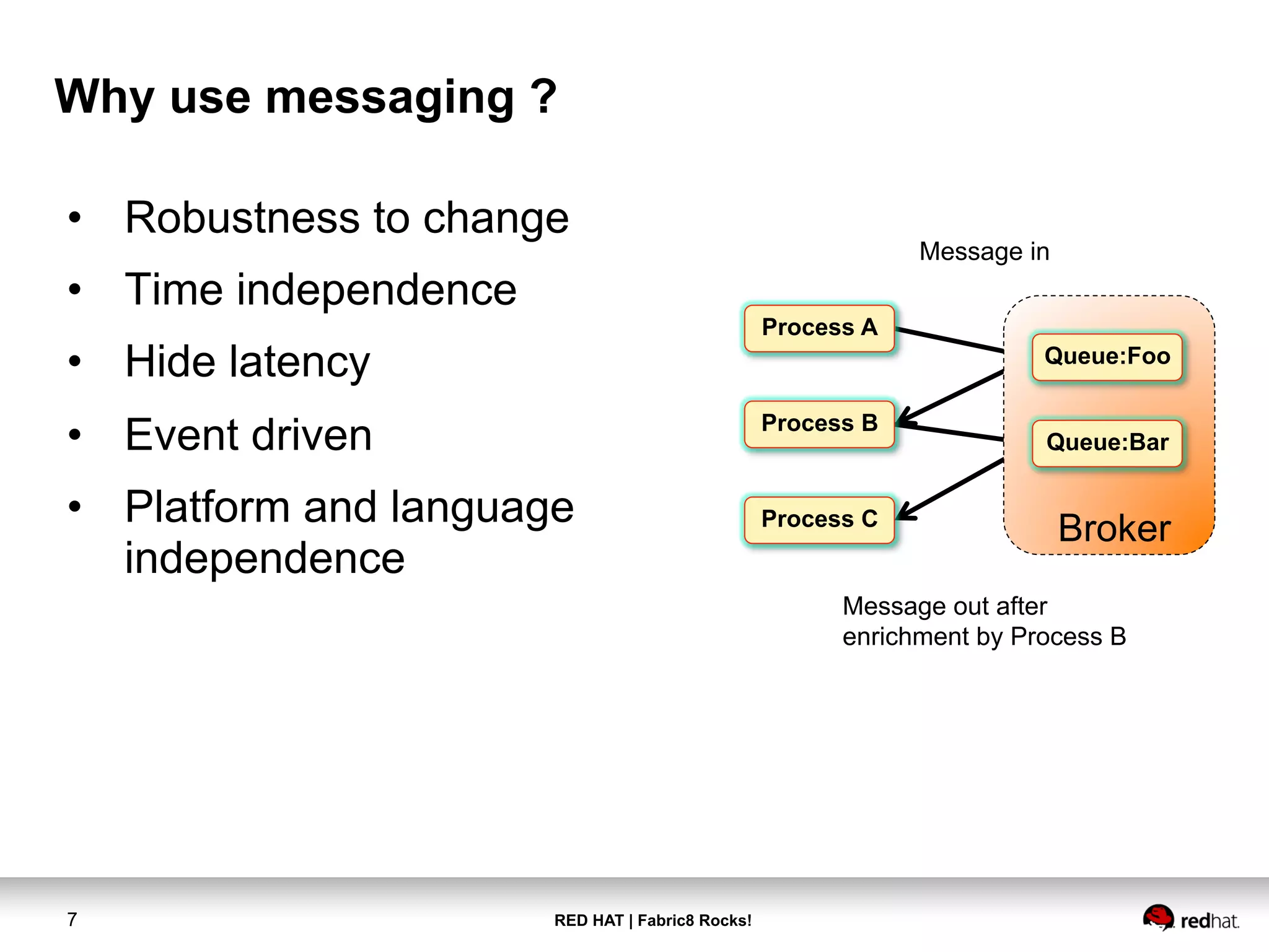

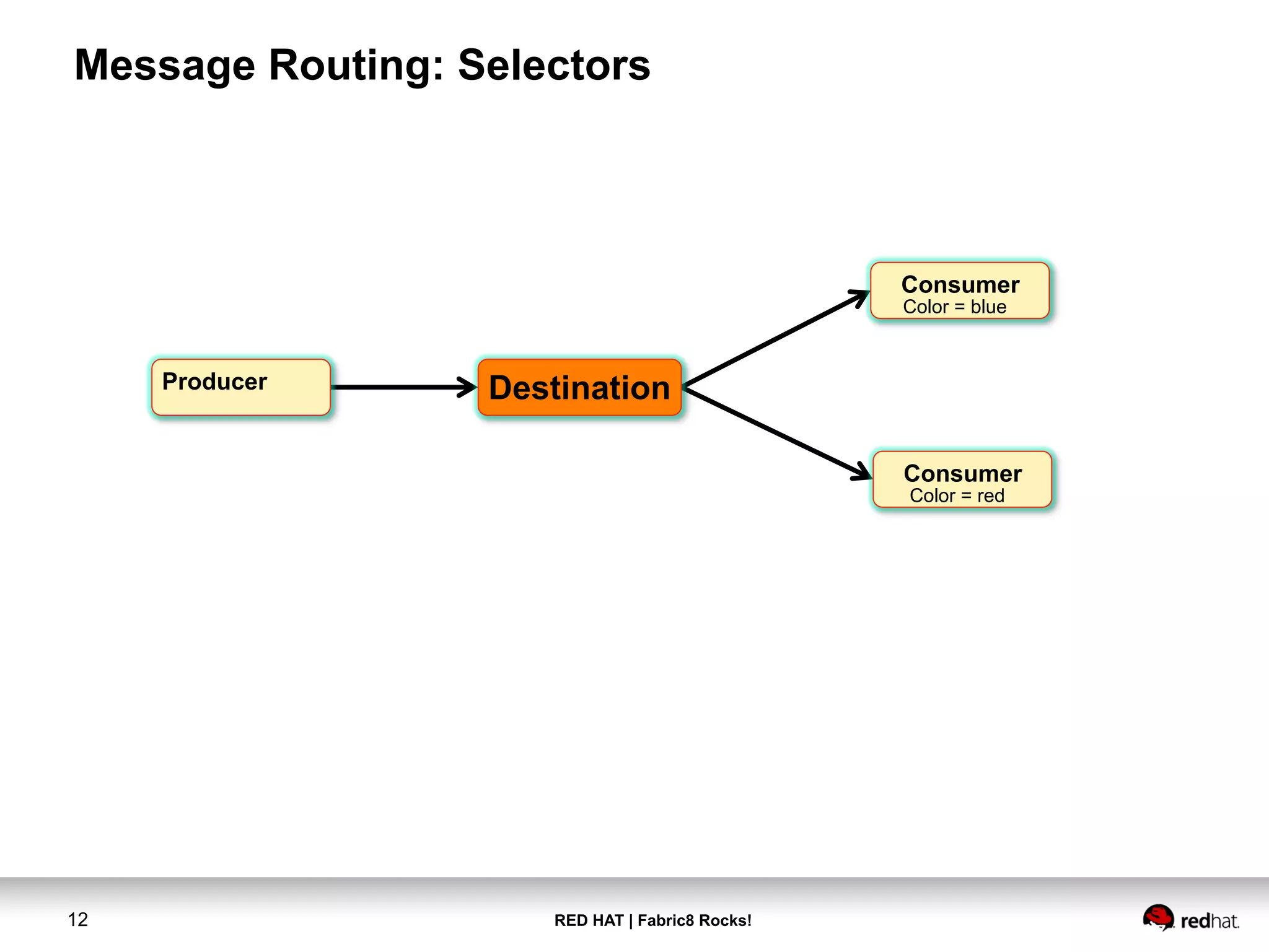

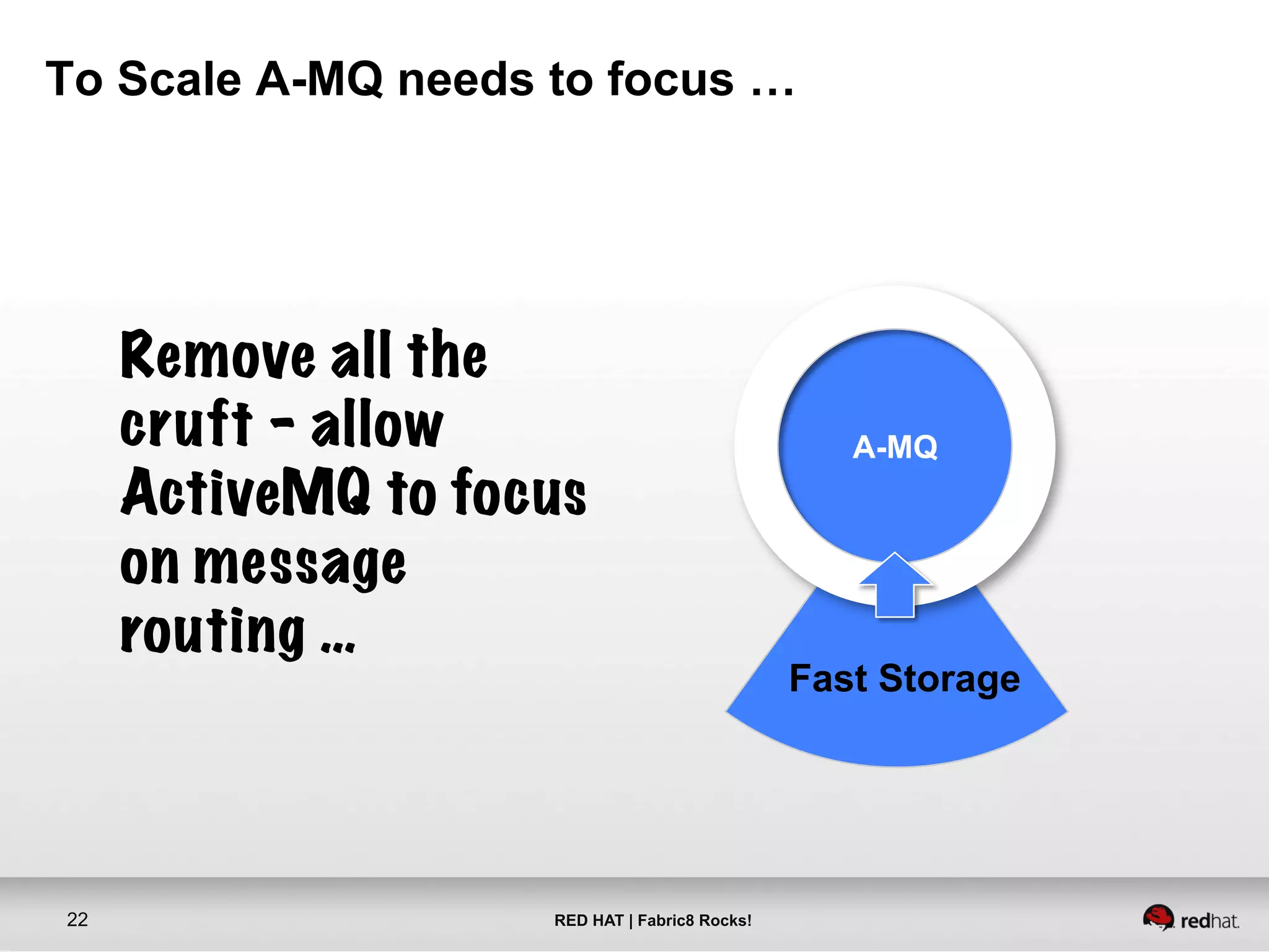

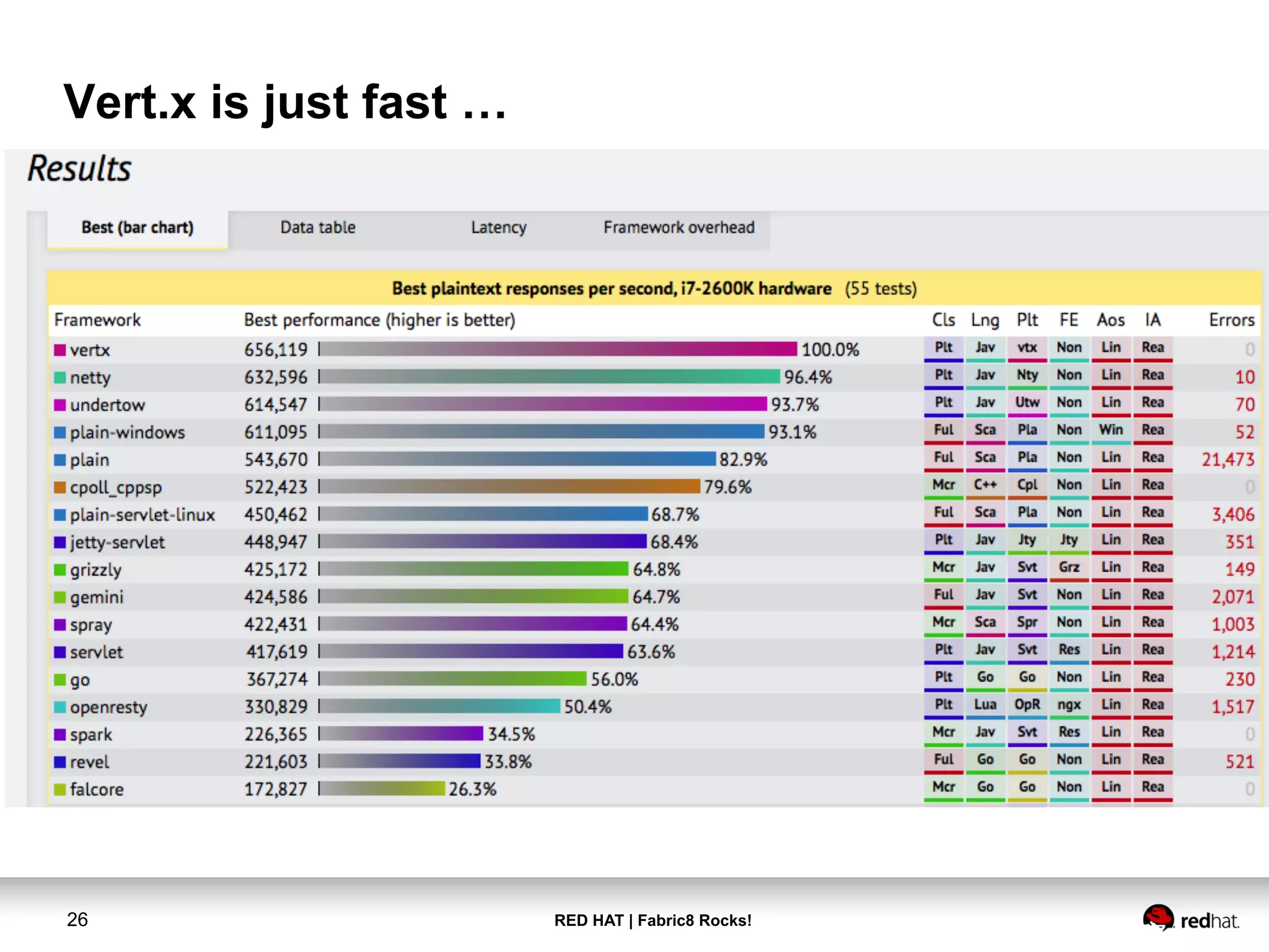

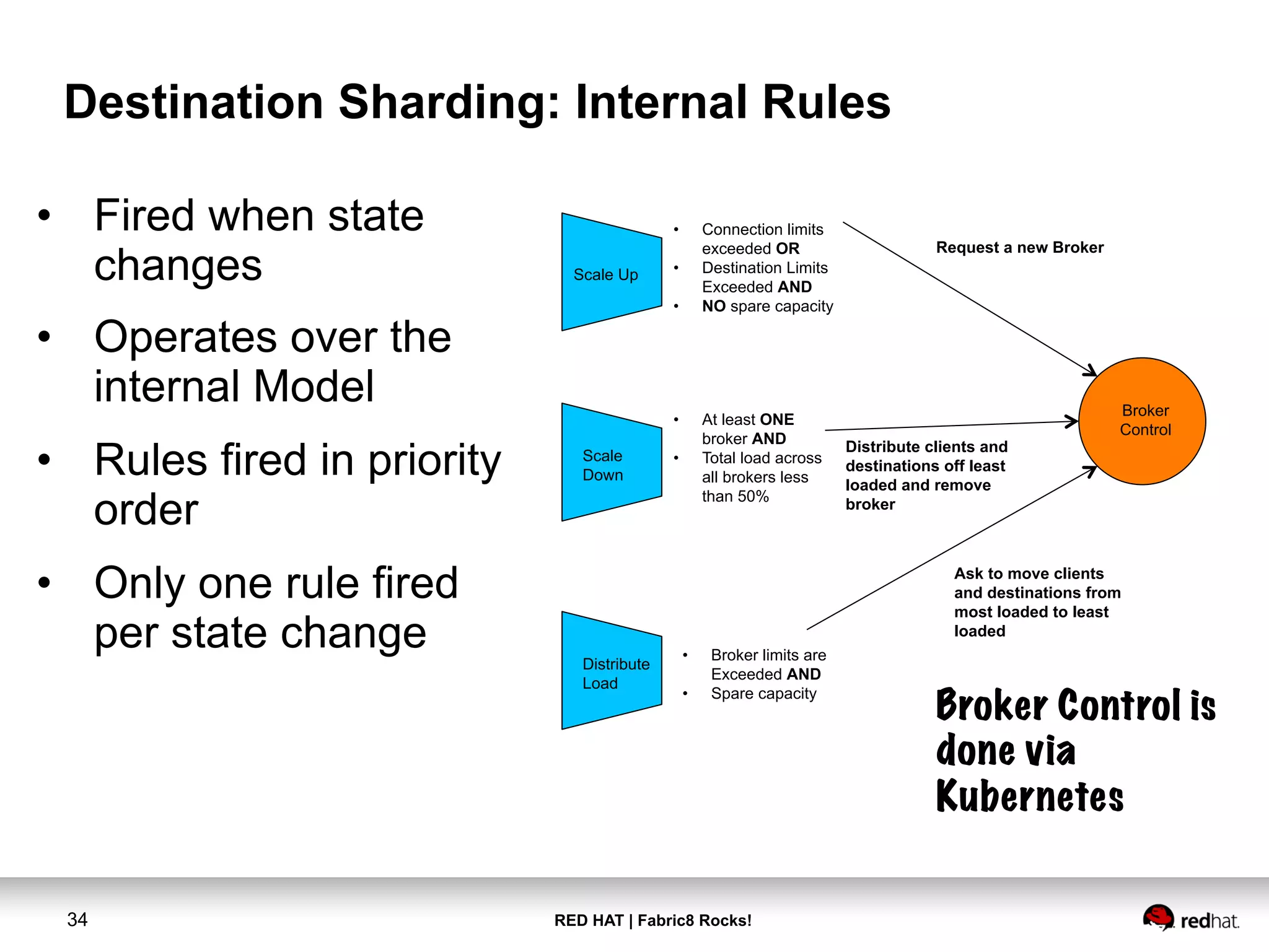

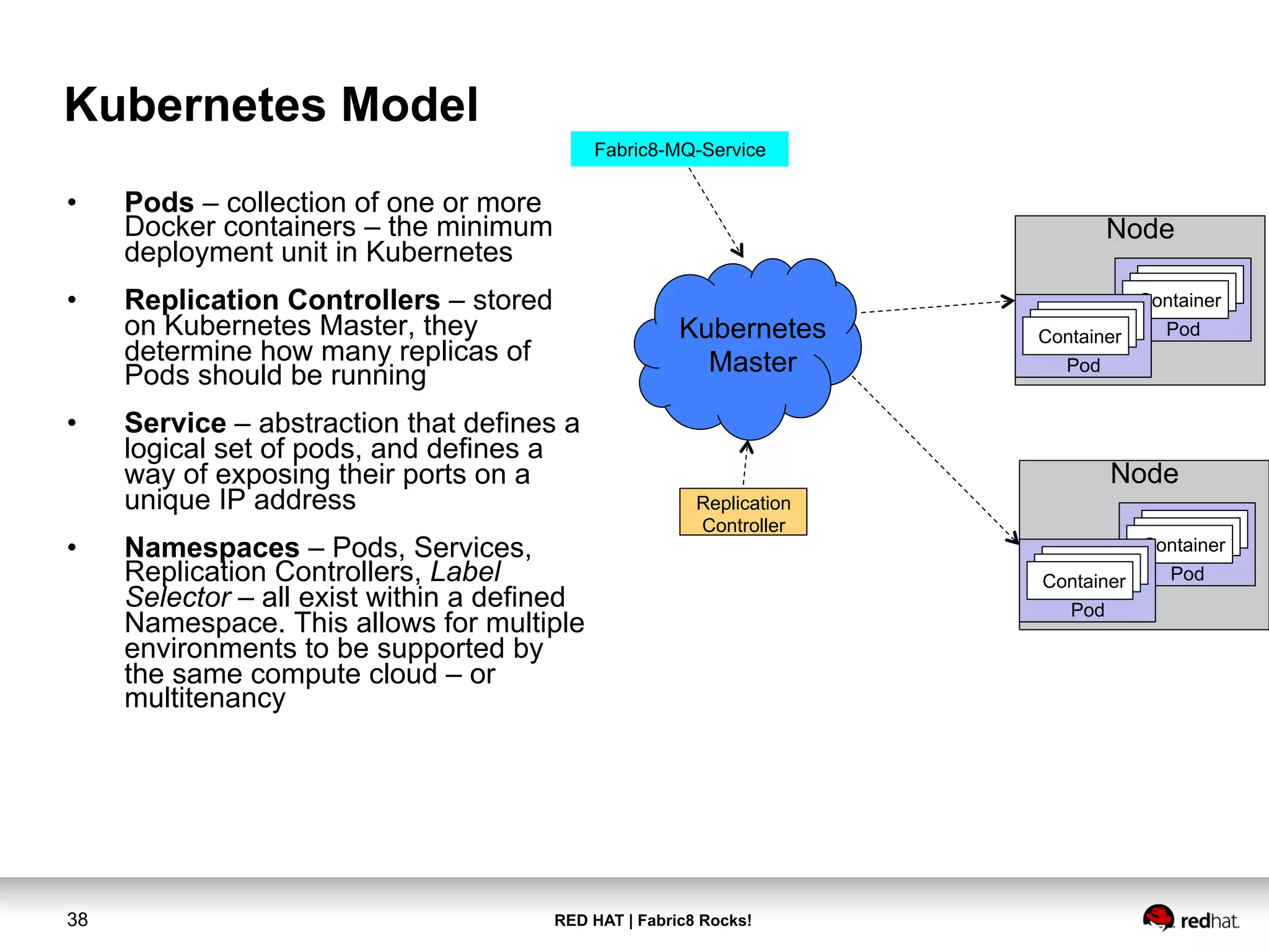

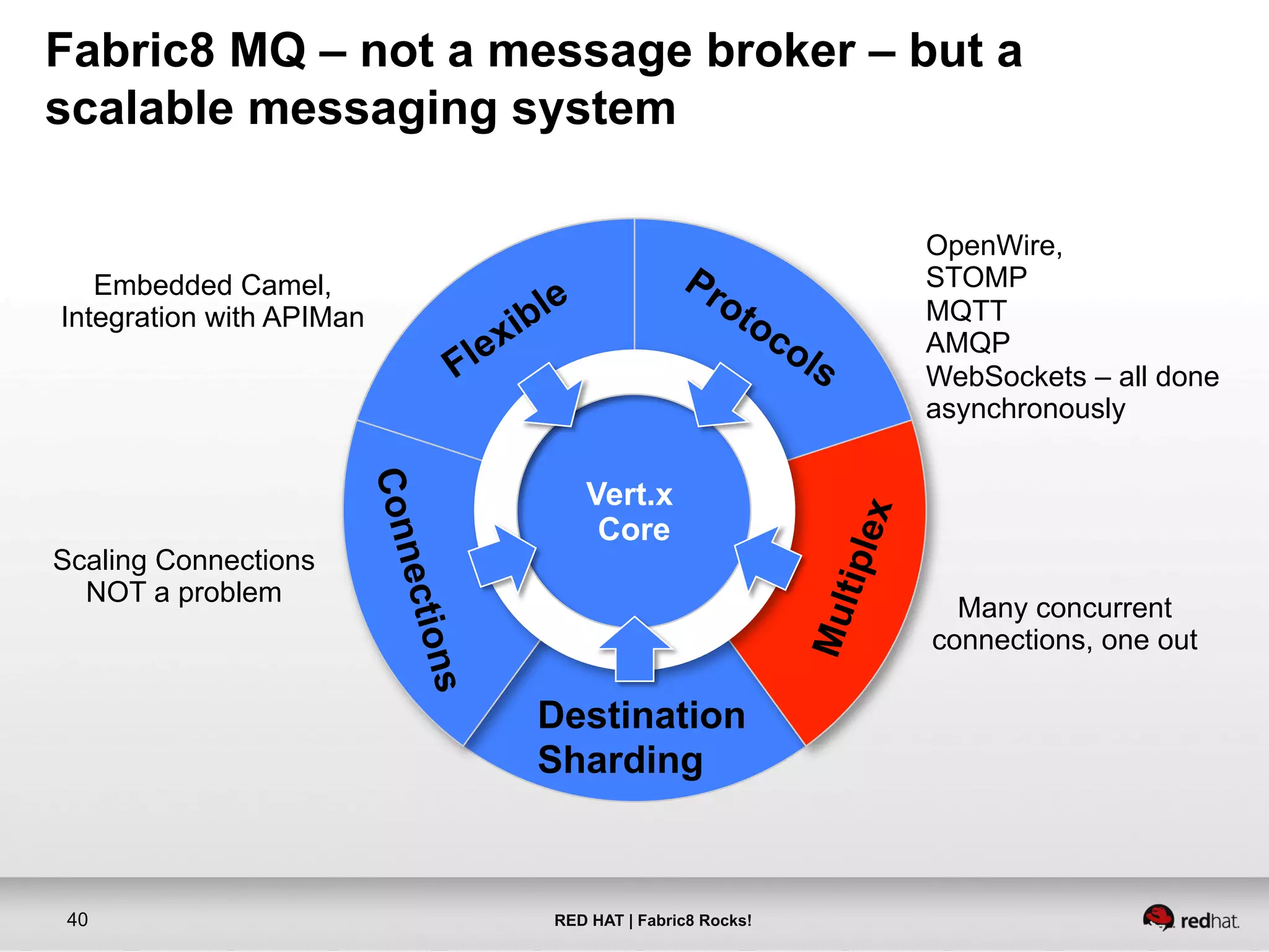

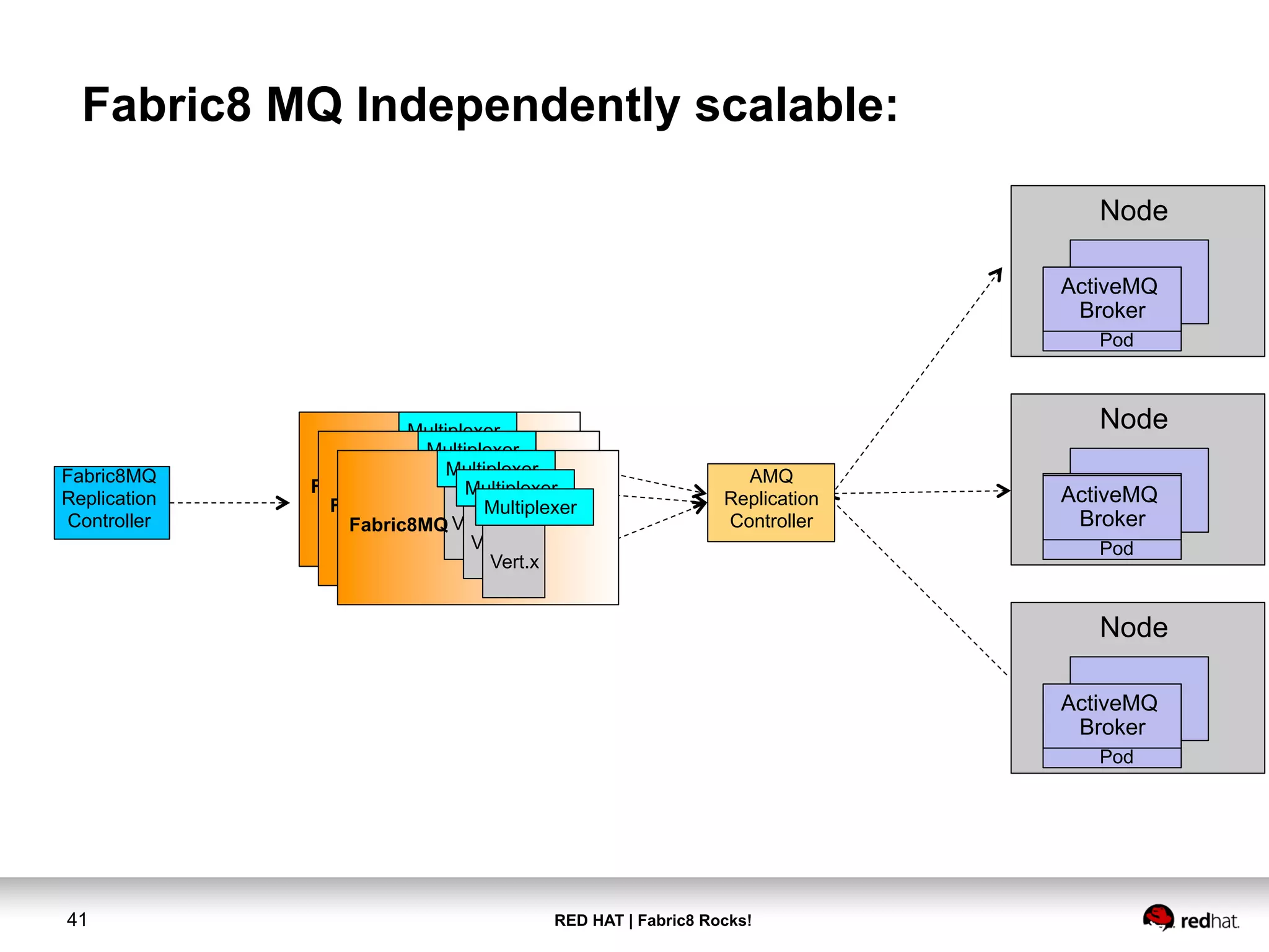

This document discusses how to scale enterprise messaging horizontally with Fabric8. It introduces Fabric8 and the core concepts of enterprise messaging, then describes how Fabric8MQ, which is built on Vert.x, provides horizontal scaling and load balancing for ActiveMQ through protocol conversion, Camel routing, API management, multiplexing, and destination sharding across Kubernetes pods and nodes. It concludes with a demo of Fabric8MQ's capabilities.
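To make the idea of destination sharding concrete, here is a minimal sketch of one common way to spread queues and topics over a set of brokers: hash the destination name and take it modulo the number of brokers. This is an illustration only, not Fabric8MQ's actual algorithm, which the summary does not specify. The function names (`shard_for`, `assign_destinations`) and the broker and destination names are hypothetical.

```python
import hashlib
from typing import Dict, List


def shard_for(destination: str, shard_count: int) -> int:
    """Map a destination name to a shard index in [0, shard_count).

    Uses SHA-256 rather than Python's built-in hash() so the result is
    stable across processes and restarts (assumed requirement).
    """
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    digest = hashlib.sha256(destination.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def assign_destinations(destinations: List[str], brokers: List[str]) -> Dict[str, str]:
    """Assign each destination to exactly one broker (hypothetical names)."""
    return {d: brokers[shard_for(d, len(brokers))] for d in destinations}


if __name__ == "__main__":
    # Hypothetical broker pods and destinations, for illustration only.
    brokers = ["broker-0", "broker-1", "broker-2"]
    destinations = ["queue.orders", "queue.billing", "topic.prices"]
    for dest, broker in assign_destinations(destinations, brokers).items():
        print(f"{dest} -> {broker}")
```

Every client that applies the same function reaches the same broker for a given destination, so no shared lookup table is needed. The trade-off of plain modulo hashing is that changing the broker count remaps most destinations; a scheme such as consistent hashing reduces that churn.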