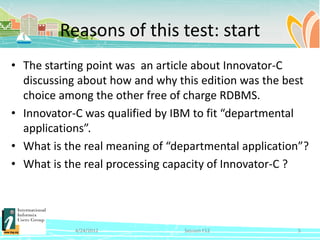

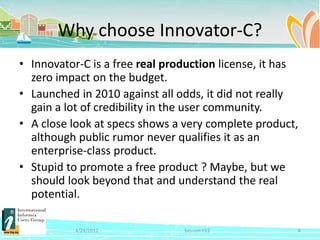

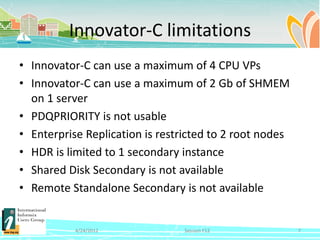

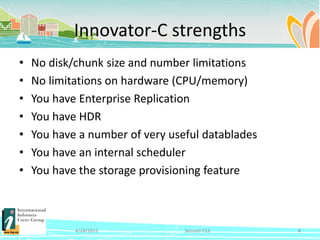

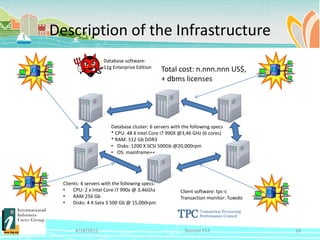

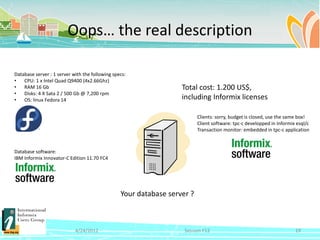

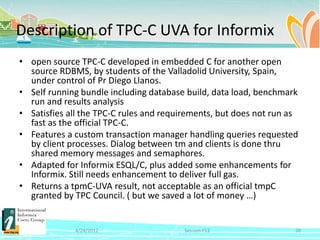

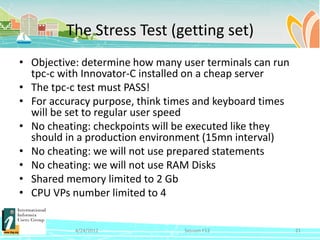

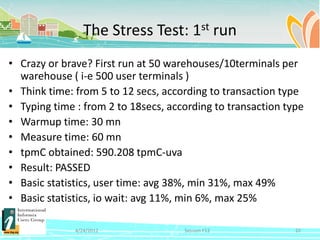

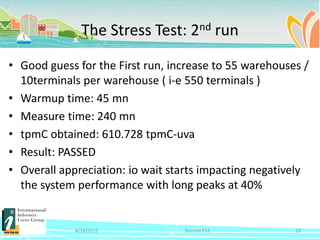

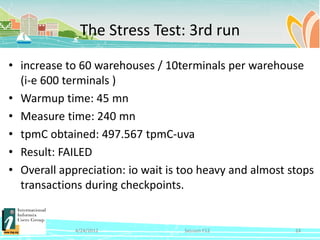

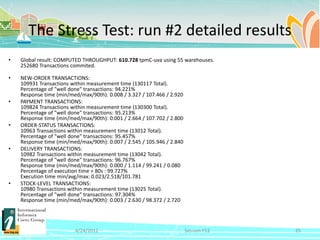

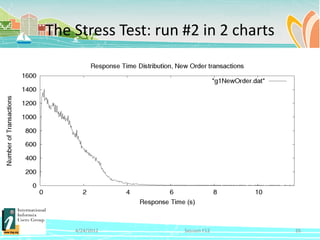

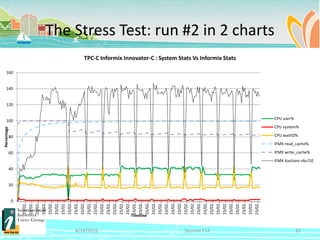

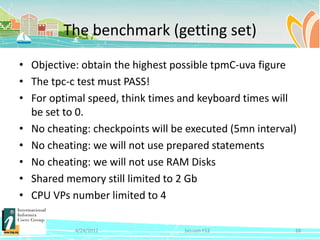

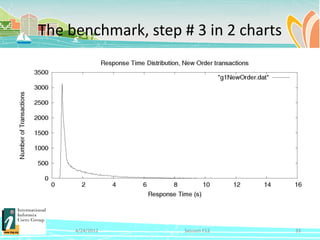

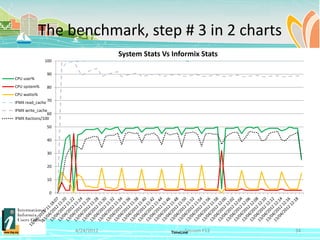

This document describes a case study that uses the TPC-C benchmark to evaluate the performance of IBM Informix Innovator-C Edition on a low-cost server. It explains why the study was conducted, distinguishes stress testing from benchmarking, and describes the test scenario, which consists of a stress test run under increasing user loads and a TPC-C benchmark run. The goal is to determine the maximum number of users that Innovator-C can support on inexpensive hardware and the resulting cost per transaction.
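Cost per transaction in TPC-C is usually reported as price/performance: total system cost divided by tpmC, the number of New-Order transactions completed per minute while the system runs the full transaction mix. The sketch below only illustrates that arithmetic. The function name `price_performance` and the input figures are hypothetical placeholders, not values or tooling from this study.

```python
def price_performance(total_system_cost: float, tpmc: float) -> float:
    """Return TPC-C-style price/performance ($/tpmC).

    total_system_cost: total cost of the tested configuration, in dollars
    tpmc: measured New-Order transactions per minute
    """
    if tpmc <= 0:
        raise ValueError("tpmC must be positive")
    return total_system_cost / tpmc


# Hypothetical figures for illustration only; not results from this case study.
cost = 5000.0   # assumed total system cost in dollars
tpmc = 2500.0   # assumed measured throughput in tpmC
print(f"{price_performance(cost, tpmc):.2f} $/tpmC")
```

A lower $/tpmC means the server delivers more transaction throughput per dollar, which is the comparison that matters when judging inexpensive hardware.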