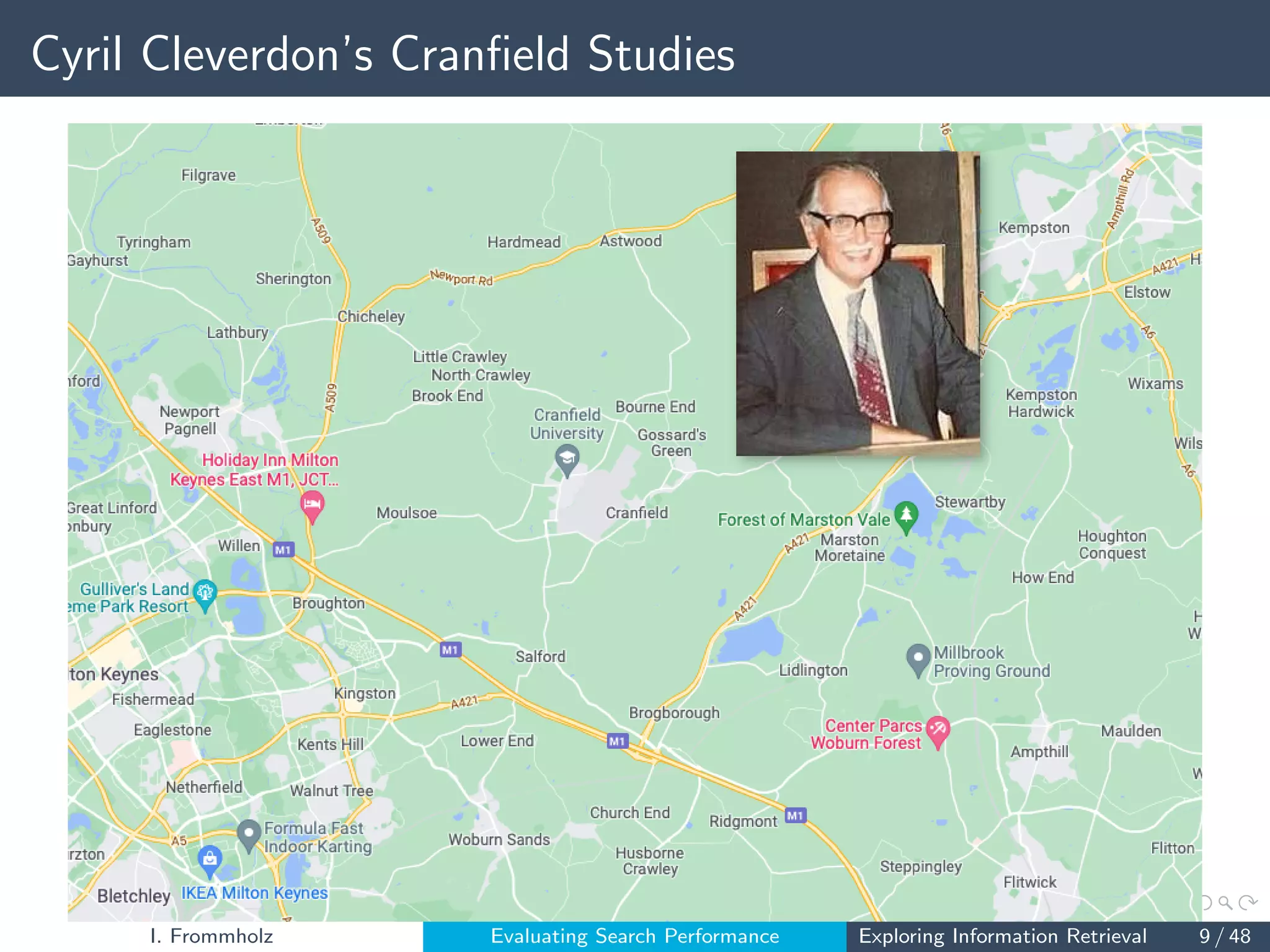

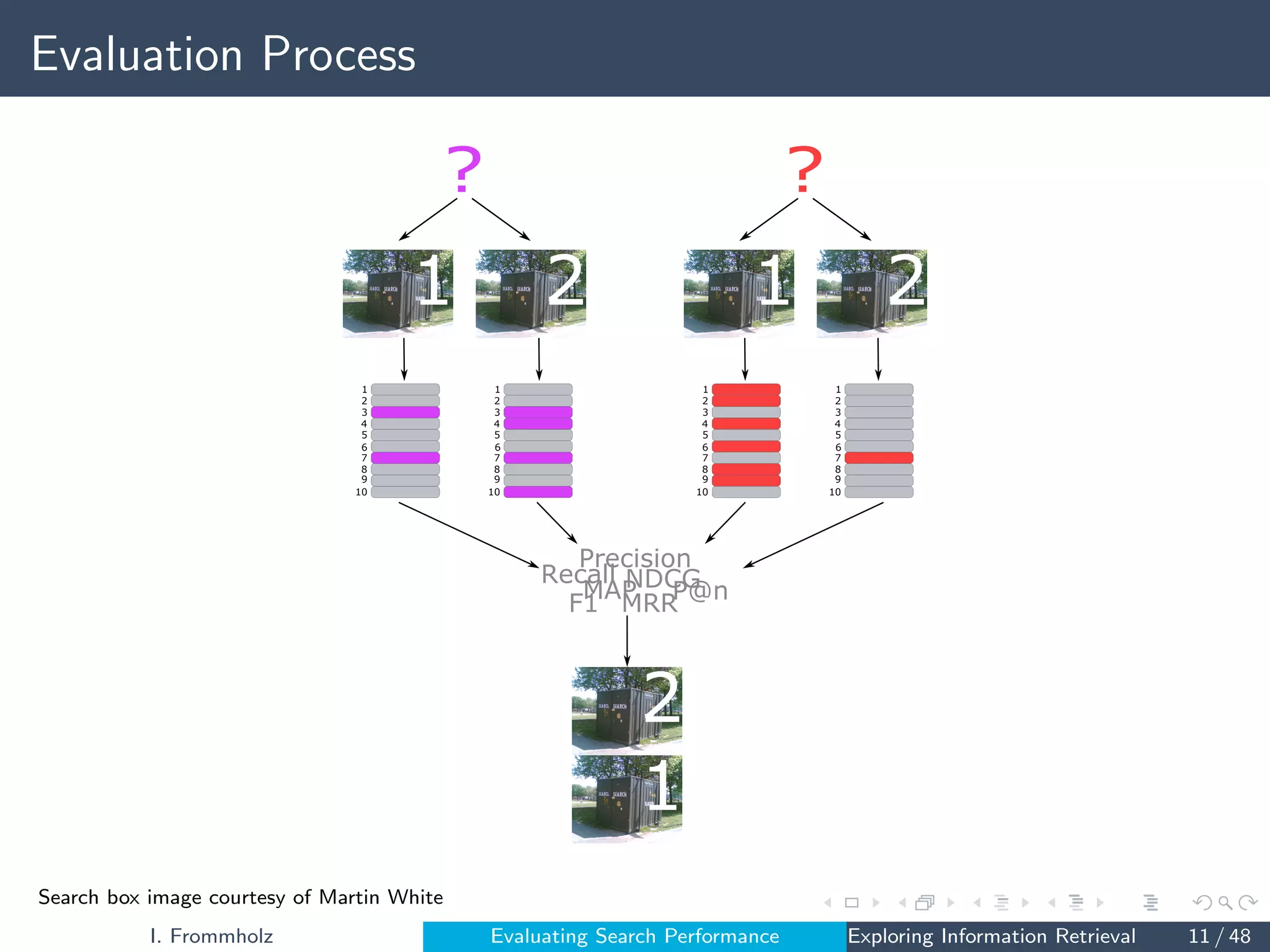

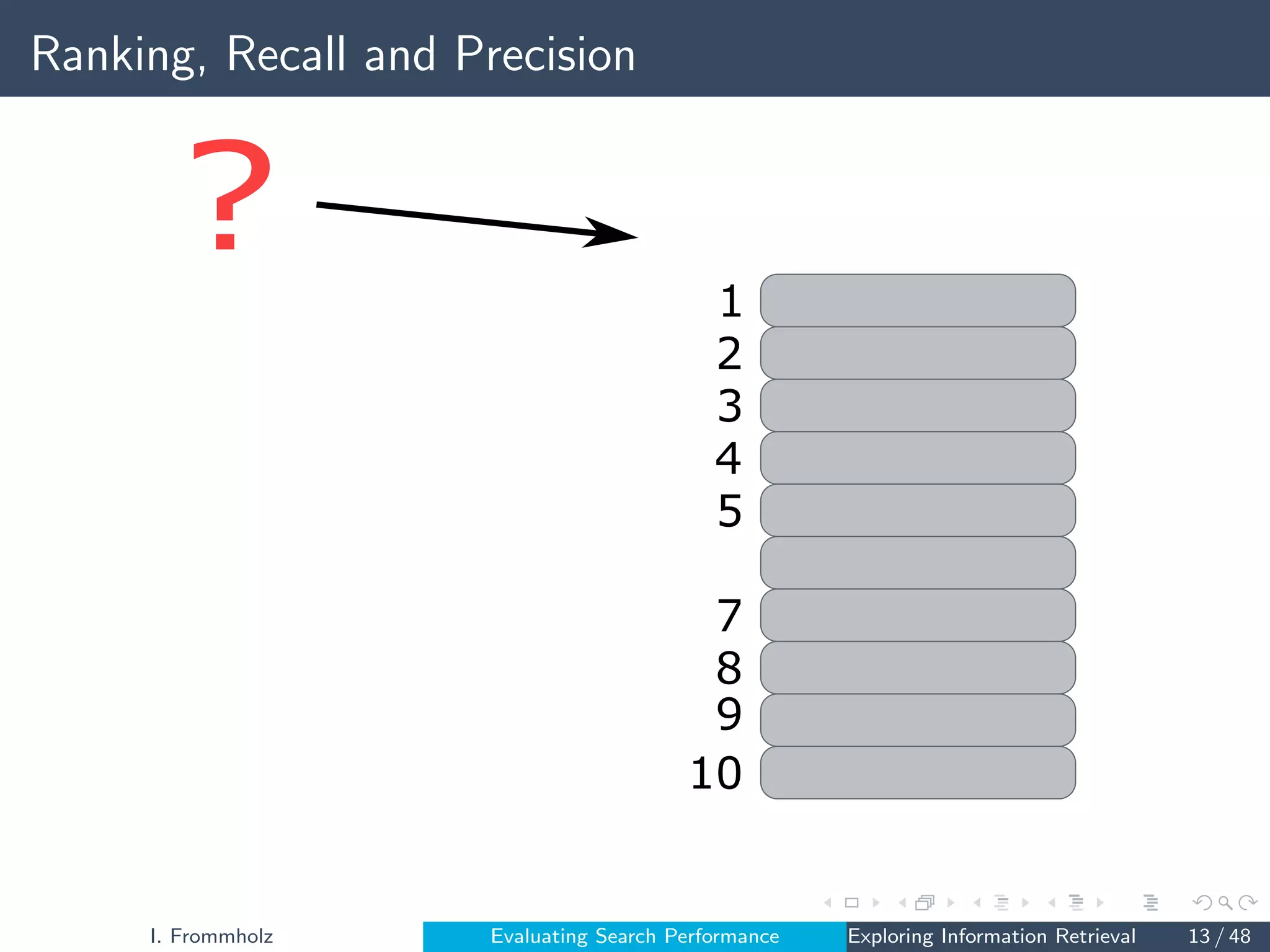

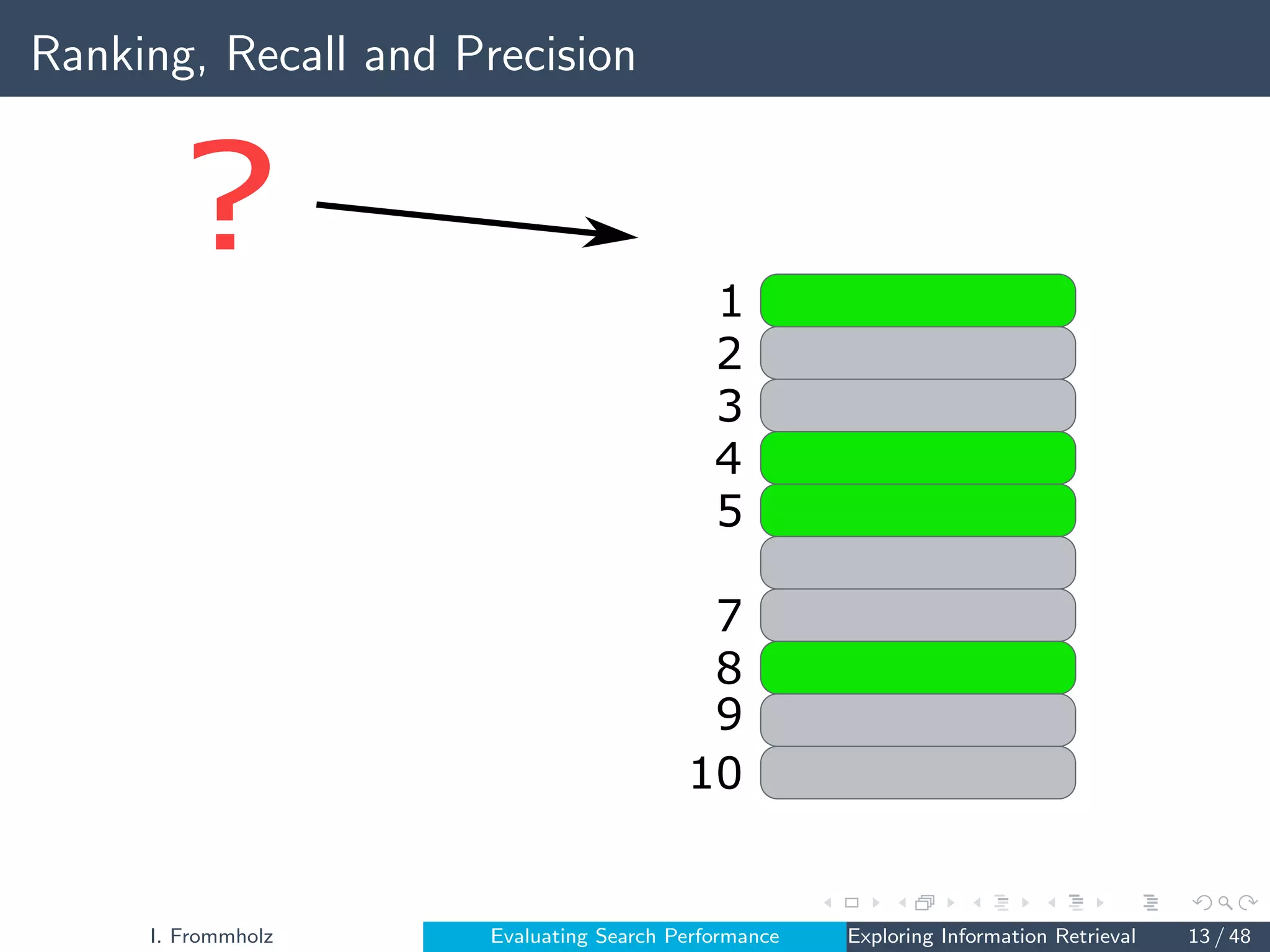

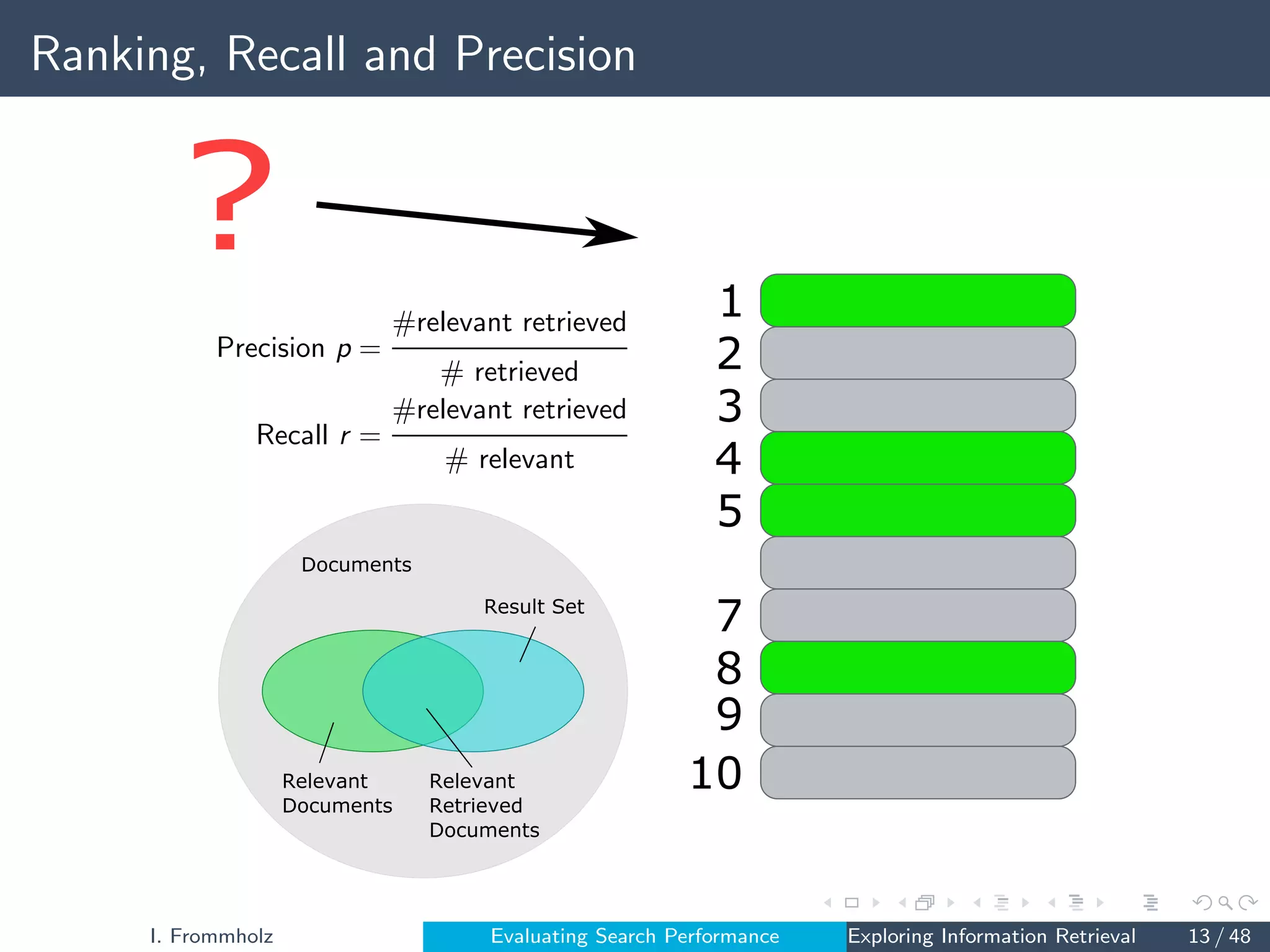

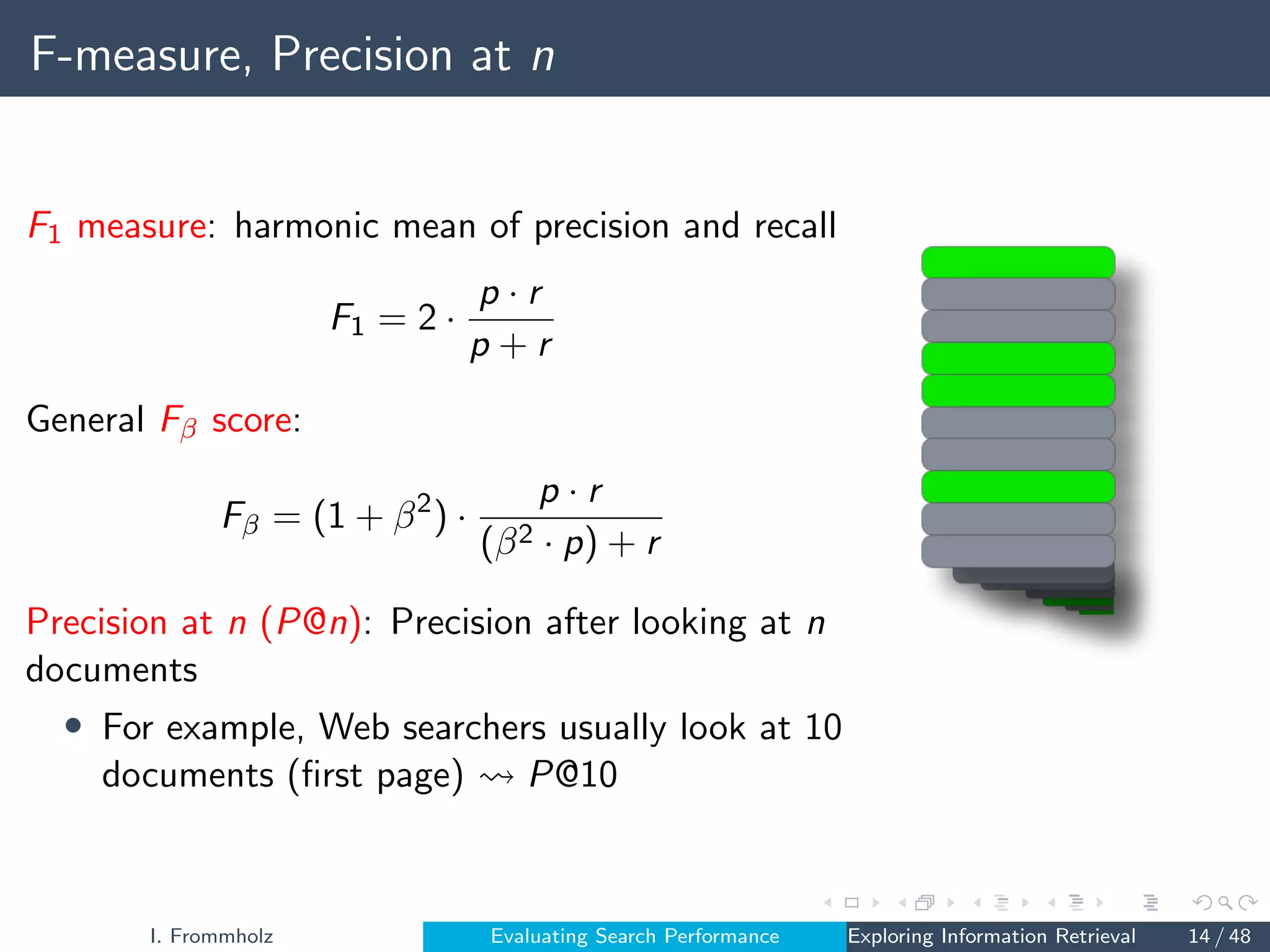

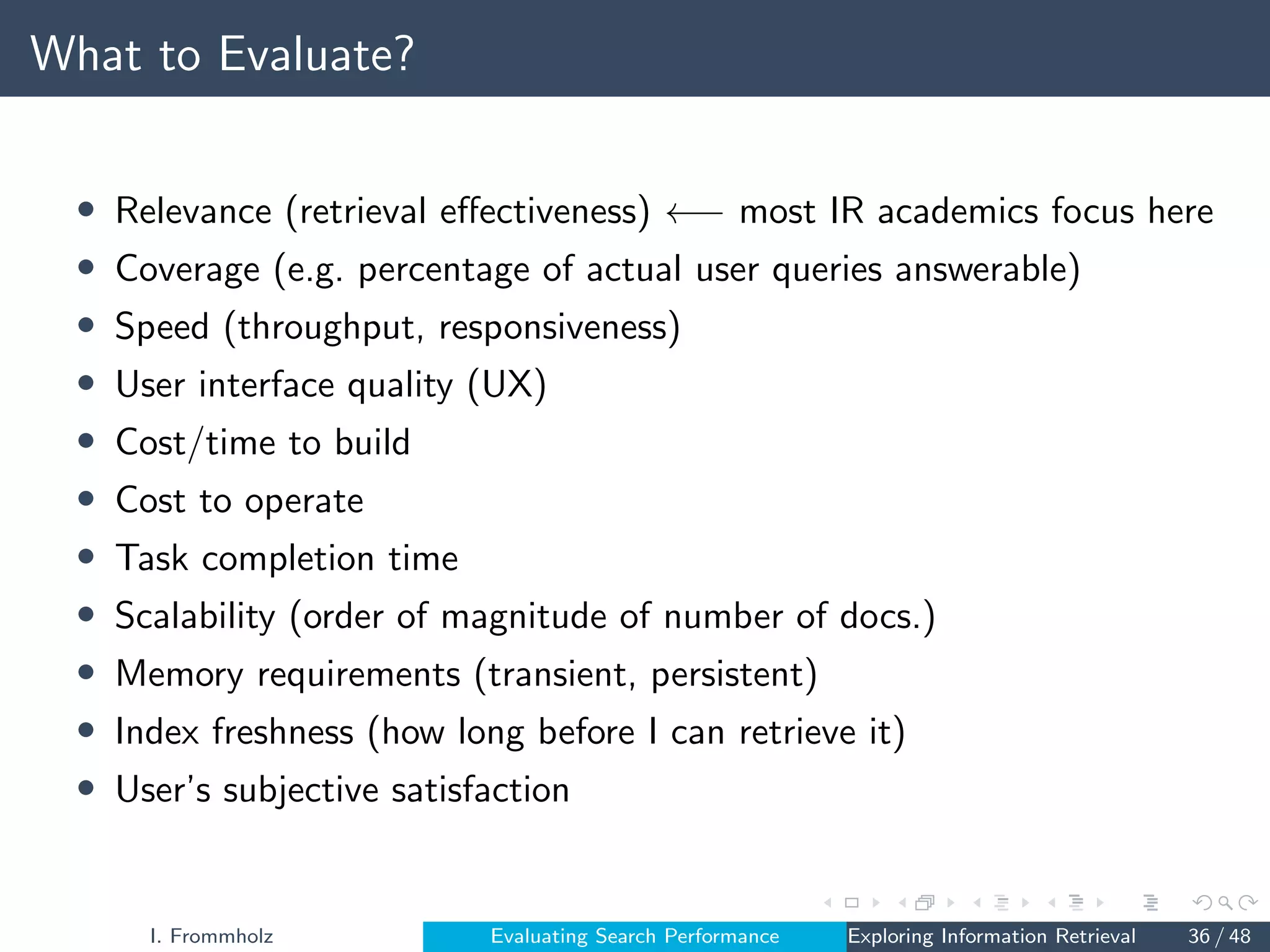

The document discusses evaluating search system performance. It begins with an overview of the Cranfield paradigm for evaluation, which relies on test collections of documents, queries, and relevance judgments. It then explains common evaluation metrics such as precision, recall, F-measure, mean average precision (MAP), and normalized discounted cumulative gain (NDCG), and describes several evaluation initiatives, including TREC, NTCIR, and CLEF. The document notes that the Cranfield paradigm focuses on system-oriented effectiveness measures, whereas users should be more central to evaluation: relevance is subjective and situational, and user experience also matters.

![Retrieval Experiments, Cranfield Style [Har11]

Ingredients of a Test Collection

• A collection of documents

I. Frommholz Evaluating Search Performance Exploring Information Retrieval 10 / 48](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-10-2048.jpg)

![Retrieval Experiments, Cranfield Style [Har11]

Ingredients of a Test Collection

• A set of queries](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-11-2048.jpg)

![Retrieval Experiments, Cranfield Style [Har11]

Ingredients of a Test Collection

• A set of relevance judgements](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-12-2048.jpg)
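A test collection's three ingredients (documents, queries, relevance judgements) can be held in simple Python structures, as in the minimal sketch below. It assumes the judgements are stored in the whitespace-separated TREC qrels layout (query id, an unused iteration field, document id, relevance grade). All identifiers and texts are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical judgements in the TREC qrels layout: query_id iteration doc_id grade
QRELS_TEXT = """\
q1 0 d1 1
q1 0 d3 0
q1 0 d7 2
q2 0 d2 1
"""


def parse_qrels(text: str) -> dict[str, dict[str, int]]:
    """Map each query id to {doc_id: relevance grade}."""
    qrels = defaultdict(dict)
    for line in text.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        query_id, _iteration, doc_id, grade = line.split()
        qrels[query_id][doc_id] = int(grade)
    return dict(qrels)


# Hypothetical document collection and query set
documents = {
    "d1": "Cranfield experiments on indexing",
    "d2": "Relevance judgements by assessors",
    "d3": "A recipe for apple pie",
    "d7": "Test collections for IR evaluation",
}
queries = {"q1": "retrieval evaluation", "q2": "relevance assessment"}
qrels = parse_qrels(QRELS_TEXT)
print(qrels["q1"])  # {'d1': 1, 'd3': 0, 'd7': 2}
```

Grades are kept as integers so the same structure serves both binary metrics (grade > 0 means relevant) and graded ones such as NDCG.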

![Mean Average Precision (MAP), Mean Reciprocal Rank (MRR)

Mean average precision (MAP) [BV05]:

1. Measure precision after each relevant document
2. Average over the precision values to get the average precision for one topic/query
3. Average over topics/queries

Mean Reciprocal Rank (MRR): based on the position of the first correct (relevant) result (e.g., in question answering):

• $R_i$: ranking for query $q_i$

• $N_q$: number of queries

• $S_{corr}(R_i)$: position of the first correct answer in the ranking $R_i$

$$\mathrm{MRR} = \frac{1}{N_q} \sum_{i=1}^{N_q} \frac{1}{S_{corr}(R_i)}$$](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-22-2048.jpg)
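The MAP steps and the MRR formula above can be sketched in Python as follows. This is a minimal illustration assuming binary relevance and rankings given as lists of document ids. Following the common TREC convention, average precision divides by the total number of relevant documents for the query, so relevant documents that are never retrieved count as precision 0. This matches the slide's step 2 whenever all relevant documents are retrieved. For MRR, a query with no correct answer is assumed to contribute 0.

```python
from __future__ import annotations


def average_precision(ranking: list[str], relevant: set[str]) -> float:
    """Average the precision values measured after each relevant document."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for position, doc_id in enumerate(ranking, start=1):
        if doc_id in relevant:
            hits += 1
            precision_sum += hits / position  # precision at this relevant document
    # Divide by all relevant documents: unretrieved ones contribute precision 0
    return precision_sum / len(relevant)


def mean_average_precision(runs: dict[str, list[str]], qrels: dict[str, set[str]]) -> float:
    """Average AP over all judged queries (topics)."""
    scores = [average_precision(runs.get(q, []), rel) for q, rel in qrels.items()]
    return sum(scores) / len(scores)


def mean_reciprocal_rank(runs: dict[str, list[str]], qrels: dict[str, set[str]]) -> float:
    """Average of 1 / S_corr(R_i); a query with no correct answer contributes 0."""
    total = 0.0
    for q, rel in qrels.items():
        for position, doc_id in enumerate(runs.get(q, []), start=1):
            if doc_id in rel:
                total += 1.0 / position
                break  # only the first correct answer counts
    return total / len(qrels)


# Hypothetical rankings and binary judgements for two queries
runs = {"q1": ["d1", "d2", "d3", "d4"], "q2": ["d5", "d6", "d7"]}
qrels = {"q1": {"d1", "d3"}, "q2": {"d6"}}
print(round(mean_average_precision(runs, qrels), 4))  # 0.6667
print(mean_reciprocal_rank(runs, qrels))  # 0.75
```

For q1 the precision values at the relevant documents are 1/1 and 2/3, giving AP = 5/6. For q2 the value is 1/2. Their mean is 2/3.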

![Normalized Discounted Cumulative Gain (NDCG) [JK02]

• Relevance judgements ∈ {0, 1, 2, 3}

Figure: example ranking of ten documents (positions 1 to 10) with graded relevance judgements](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-25-2048.jpg)

![Normalized Discounted Cumulative Gain (NDCG) [JK02]

• Relevance judgements ∈ {0, 1, 2, 3}

• Gain vector $G_i$ for ranking $R_i$ induced by query $q_i$

• Gain at position $j$ depends on the level of relevance

• Cumulative gain vector $CG_i$:

$$CG_i[j] = \begin{cases} G_i[1] & \text{if } j = 1 \\ G_i[j] + CG_i[j-1] & \text{if } j > 1 \end{cases}$$

• Discounted cumulative gain vector $DCG_i$: takes the position $j$ into account (discount factor $\log_2 j$)

$$DCG_i[j] = \begin{cases} G_i[1] & \text{if } j = 1 \\ \frac{G_i[j]}{\log_2 j} + DCG_i[j-1] & \text{if } j > 1 \end{cases}$$

Figure: worked example on a ranking of ten documents (positions 1 to 10)](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-26-2048.jpg)
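The recursive definitions of $CG_i$ and $DCG_i$ above translate directly into a loop, as in this minimal sketch. It follows the slide's formulas: no discount at position 1, and division by $\log_2 j$ for $j > 1$. The gain vector is a hypothetical example.

```python
from __future__ import annotations

import math


def cumulative_gain(gains: list[float]) -> list[float]:
    """CG[1] = G[1]; CG[j] = G[j] + CG[j-1] for j > 1."""
    cg: list[float] = []
    for j, gain in enumerate(gains, start=1):
        cg.append(gain if j == 1 else gain + cg[-1])
    return cg


def discounted_cumulative_gain(gains: list[float]) -> list[float]:
    """DCG[1] = G[1]; DCG[j] = G[j] / log2(j) + DCG[j-1] for j > 1."""
    dcg: list[float] = []
    for j, gain in enumerate(gains, start=1):
        dcg.append(gain if j == 1 else gain / math.log2(j) + dcg[-1])
    return dcg


gains = [3, 2, 3, 0, 1]  # hypothetical gain vector G_i with grades in {0, 1, 2, 3}
print(cumulative_gain(gains))  # [3, 5, 8, 8, 9]
print([round(x, 3) for x in discounted_cumulative_gain(gains)])  # [3, 5.0, 6.893, 6.893, 7.323]
```

Because $\log_2 2 = 1$, the first two positions are undiscounted. A highly relevant document at rank 3 contributes only about 1.89 instead of 3.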

![Normalized Discounted Cumulative Gain (NDCG) [JK02]

• Ideal gain vector (relevance grades in decreasing order):

$IG_i = (3, 2, 1, 1, 1, 0, 0, 0, 0, 0)$

• $ICG_i$ and $IDCG_i$ are computed analogously

• Average over all queries to compute NDCG:

$$DCG[j] = \frac{1}{N_q} \sum_{i=1}^{N_q} DCG_i[j] \qquad IDCG[j] = \frac{1}{N_q} \sum_{i=1}^{N_q} IDCG_i[j] \qquad NDCG[j] = \frac{DCG[j]}{IDCG[j]}$$

Figure: worked example on a ranking of ten documents (positions 1 to 10)](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-27-2048.jpg)
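The averaging step above can be sketched as follows: compute $DCG_i$ and $IDCG_i$ per query, average each over the $N_q$ queries, then divide position by position. As an assumption not spelled out on the slide, each $IG_i$ is built from all judged grades for the query, sorted in decreasing order and zero-padded to the ranking length. Under this assumption, relevant documents missing from the ranking still raise the ideal. All data is hypothetical.

```python
from __future__ import annotations

import math


def dcg_vector(gains: list[float]) -> list[float]:
    """DCG[1] = G[1]; DCG[j] = G[j] / log2(j) + DCG[j-1] for j > 1."""
    dcg: list[float] = []
    for j, gain in enumerate(gains, start=1):
        dcg.append(gain if j == 1 else gain / math.log2(j) + dcg[-1])
    return dcg


def ideal_gains(judged_grades: list[int], length: int) -> list[int]:
    """IG_i: judged grades in decreasing order, cut or zero-padded to the ranking length."""
    ordered = sorted(judged_grades, reverse=True)[:length]
    return ordered + [0] * (length - len(ordered))


def ndcg_curve(gain_vectors: list[list[float]], judged: list[list[int]]) -> list[float]:
    """NDCG[j] = DCG[j] / IDCG[j], where both are first averaged over the N_q queries."""
    n_q = len(gain_vectors)
    length = len(gain_vectors[0])  # assumes all rankings have the same length
    dcgs = [dcg_vector(g) for g in gain_vectors]
    idcgs = [dcg_vector(ideal_gains(grades, length)) for grades in judged]
    curve = []
    for j in range(length):
        mean_dcg = sum(d[j] for d in dcgs) / n_q
        mean_idcg = sum(d[j] for d in idcgs) / n_q
        curve.append(mean_dcg / mean_idcg if mean_idcg > 0 else 0.0)
    return curve


# Hypothetical data: gains of two rankings and all judged grades per query
gain_vectors = [[1, 0, 3], [2, 2, 0]]
judged = [[3, 1, 0], [2, 2]]
print([round(x, 3) for x in ndcg_curve(gain_vectors, judged)])  # [0.6, 0.625, 0.862]
```

Many toolkits instead normalize each query by its own IDCG and then average the per-query ratios. The sketch keeps the slide's order of averaging.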

![NDCG – Comparing Systems

• Compare NDCG curves

• Compare at a given position, e.g., NDCG[10], analogously to P@10

Fig. 4(a). Normalized discounted cumulated gain (nDCG) curves, binary

Fig. 4(b). Normalized discounted cumulated gain (nDCG) curves, nonbinary

(Taken from [JK02])](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-28-2048.jpg)

![Some Evaluation Metrics

• Accuracy

• Precision (p)

• Recall (r)

• Fall-out (converse of Specificity)

• F-score (F-measure, converse of Effectiveness) (Fβ)

• Precision at k (P@k)

• R-precision (RPrec)

• Mean average precision (MAP)

• Mean Reciprocal Rank (MRR)

• Normalized Discounted Cumulative Gain (NDCG)

• Maximal Marginal Relevance (MMR)

• Other Metrics: bpref, GMAP, . . .

Some metrics (e.g., MRR) are the subject of ongoing debate [Fuh17; Sak20; Fuh20].](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-29-2048.jpg)
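Several of the listed set- and rank-based metrics fit in a few lines. The sketch below covers precision, recall, $F_\beta$, P@k and R-precision under binary relevance. The ranking and judgements are hypothetical, and P@k assumes missing positions beyond the end of a short ranking count as non-relevant.

```python
from __future__ import annotations


def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Set-based precision p and recall r."""
    hits = len(retrieved & relevant)
    p = hits / len(retrieved) if retrieved else 0.0
    r = hits / len(relevant) if relevant else 0.0
    return p, r


def f_beta(p: float, r: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of p and r; beta > 1 weights recall more heavily."""
    if p == 0.0 and r == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * p * r / (b2 * p + r)


def precision_at_k(ranking: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top k positions holding a relevant document (P@k)."""
    return sum(1 for doc_id in ranking[:k] if doc_id in relevant) / k


def r_precision(ranking: list[str], relevant: set[str]) -> float:
    """Precision at rank R, where R is the number of relevant documents."""
    return precision_at_k(ranking, relevant, len(relevant)) if relevant else 0.0


# Hypothetical ranking and judgements (d9 is relevant but not retrieved)
ranking = ["d1", "d2", "d3", "d4", "d5"]
relevant = {"d1", "d4", "d9"}
p, r = precision_recall(set(ranking), relevant)
print(round(p, 3), round(r, 3), round(f_beta(p, r), 3))  # 0.4 0.667 0.5
print(round(precision_at_k(ranking, relevant, 3), 3), round(r_precision(ranking, relevant), 3))  # 0.333 0.333
```

Here $R = 3$, so R-precision equals P@3. Both ignore the relevant document d4 at rank 4.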

![“Hacking Your Measures” – Evaluating Structured Document Retrieval

INEX – INitiative for the Evaluation of XML Retrieval [LÖ09]

• Find the smallest component that is highly relevant

• INEX led to long discussion threads on suitable evaluation measures

• Specificity: extent to which a document component is focused on the information need

• Exhaustivity: extent to which the information contained in a document component satisfies the information need

Figure: document component hierarchy (Book, Chapter, Section, Subsection)](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-31-2048.jpg)

![TREC – Text REtrieval Conference

• US DARPA-funded public benchmark and associated workshop series (1992-) [VH99; VH05]

• Some past TREC tracks

• Ad hoc

• Filtering

• Interactive tracks

• Cross-lingual tracks

• Very large collection / Web track

• Enterprise Track

• Question answering

• TREC 2022

• Clinical Trials Track

• Conversational Assistance Track

• CrisisFACTS Track

• Deep Learning Track

• Fair Ranking Track

• Health Misinformation Track

• NeuCLIR Track

http://trec.nist.gov/

](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-34-2048.jpg)

![Other Common Evaluation Initiatives

• NTCIR [SOK21]: Japanese language initiative (1999-)

• CLEF [FP19]: European initiative on monolingual and cross-lingual search (2000-)

• MediaEval [Lar+17]: Benchmarking Initiative for Multimedia Evaluation

• CORD-19 dataset [Wan+20], used in TREC-COVID [Voo+20]

Source: client of Intranet Focus Ltd. Thanks again Martin White!](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-35-2048.jpg)

![Where is the User?

• Little user involvement in the Cranfield paradigm

• The client, customer or user should be at the centre.

• Not a single stakeholder:

• People are different (user diversity)

• People search differently

• People have different information needs (objective diversity)

• People hold different professional roles (role diversity)

• People differ with respect to skills and experience (skill diversity)

• Relevance is subjective and situational, and there is more than topical relevance [Miz98]

• Usefulness, appropriateness, accessibility, comprehensibility, novelty, etc.

• The user also cares about the user experience (UX)](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-37-2048.jpg)

![Search as “Berrypicking”

Marcia Bates [Bat89]

Search is an iterative process

An information need cannot be satisfied by a single result set

Instead: sequence of selections and collection of pieces of information during search](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-38-2048.jpg)

![Information Seeking Searching Context

Ingwersen/Järvelin: The Turn [IJ05]

Integration of Information Seeking and Retrieval in Context

“… these sources/systems and tools needs to take their joint usability, quality of information and process into account. One may ask what is the contribution of an IR system at the end of a seeking process – over time, over seeking tasks, and over seekers. Since the knowledge sources, systems and tools are not used in isolation they should not be designed nor evaluated in isolation. They affect each other’s utility in context.”

Fig. 7.2. Nested contexts and evaluation criteria for task-based ISR (extension of …)

Contexts: IR context, seeking context, work task context, socio-organizational and cultural context

Evaluation criteria:

• A: Recall, precision, efficiency, quality of information/process

• B: Usability, quality of information/process

• C: Quality of info work process/result

• D: Socio-cognitive relevance; quality of work task result](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-39-2048.jpg)

![Interactive IR Research Continuum

Diane Kelly [Kel07]

Fig. 2.1 Research continuum for conceptualizing IIR research, spanning from system focus to human focus. Study types shown on the continuum: TREC-style studies; information-seeking behavior in context; log analysis; TREC interactive studies; experimental information behavior; information-seeking behavior with IR systems; “users” make relevance assessments; filtering and SDI; archetypical IIR study.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-40-2048.jpg)

![Simulated Tasks

Pia Borlund [Bor03]

• Use of realistic scenarios

• Simulated work tasks:

Simulated work task situation: After your graduation you will be looking for a job in industry. You want information to help you focus your future job seeking. You know it pays to know the market. You would like to find some information about employment patterns in industry and what kind of qualifications employers will be looking for from future employees.

Indicative request: Find, for instance, something about future employment trends in industry, i.e., areas of growth and decline.

• Simulated work tasks should be realistic (e.g., not “imagine you’re the first human on Mars...”)

• However, a less relatable task may be outweighed by a topically very interesting situation

• Taking into account situational factors (situational relevance)](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-41-2048.jpg)

![Living Labs

[BS21; JBD18]

• Offline evaluation (Cranfield): reproducible, but does not take users into account

• Online evaluation: involves live users, but lacks reproducibility

• Living labs: aim to make online evaluation fully reproducible

• TREC OpenSearch, CLEF LL4IR, CLEF NewsREEL, NTCIR-13 OpenLiveQ

Figure with the fields qid, docid, rank, score and identifier (from [BS21])](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-42-2048.jpg)

![Challenges to Practitioners

• Legacy: old code-base with strange/non-standard/non-effective evaluation metrics in use combined with a resistance to change;

• Knowledge gap: lack of skills/expertise/experience in the core team;

• Resources: no gold data available;

• Planning: evaluation was not budgeted for;

• Awareness: team consists of traditional managers and software engineers who do not realise quantitative evaluation is a thing;

• Infrastructure: evaluation needs to be done “in vivo” as there is no second system instance available; and

• Scaffolding: search component is deeply embedded in the overall system and cannot be run as a batch script.

• Standard evaluation metrics do not assess domain peculiarities [LC14]](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-45-2048.jpg)

![What is Different in Industry?

• Effectiveness is only one of many concerns (sometimes even forgotten!)

• Functionality-oriented view (search seen as one of many functions, with little or no awareness of effectiveness issues)

• Purchase as success criterion!

• Search is often (and wrongly) considered a “solved” problem

• Frequently held developer sentiment: “Just use Elastic, Solr or Lucene, and we’re done.”

• Developers unskilled in IR may integrate libraries with default settings inappropriate for a given use case

• Evaluation is often done online (on a running system), e.g., through A/B testing [KL17]](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-46-2048.jpg)
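For the online A/B testing mentioned above, one common way to compare two variants on a binary success metric (such as the purchase criterion) is a two-proportion z-test. The sketch below is a generic statistical illustration, not the specific procedure described in [KL17]. It assumes independent sessions and samples large enough for the normal approximation. The function name and counts are hypothetical.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for H0: both variants have the same success rate."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # success rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical counts: purchases out of sessions for control (A) and new ranker (B)
z, p = two_proportion_z_test(success_a=480, n_a=10_000, success_b=560, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference in purchase rate is unlikely under equal rates. Sample size and stopping rules still need to be fixed before the experiment starts.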

![References and Further Reading

• Introduction to Information Retrieval - Evaluation (slides)

https://web.stanford.edu/class/cs276/handouts/EvaluationNew-handout-6-per.pdf

• Online Controlled Experiments and A/B Testing

https://www.researchgate.net/profile/Ron-Kohavi/publication/316116834_Online_Controlled_Experiments_and_AB_Testing

• Enterprise Search – Evaluation (Chapter 4 of [KH])

https://www.flax.co.uk/wp-content/uploads/2017/11/ES_book_final_journal_version.pdf

• TREC Conferences https://trec.nist.gov

• CLEF Initiative http://www.clef-initiative.eu

• NTCIR Workshops http://research.nii.ac.jp/ntcir/data/data-en.html](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-47-2048.jpg)

![Bibliography I

[Bat89] M. J. Bates. “The design of browsing and berrypicking techniques for the online search interface”. In: Online Review 13.5 (1989), pp. 407–424.

[Bor03] Pia Borlund. “The IIR evaluation model: a framework for evaluation of interactive information retrieval systems”. In: Information Research 8.3 (2003). url: http://informationr.net/ir/8-3/paper152.html.

[BS21] Timo Breuer and Philipp Schaer. “A Living Lab Architecture for Reproducible Shared Task Experimentation”. In: Information between Data and Knowledge. Vol. 74. Schriften zur Informationswissenschaft. Session 6: Emerging Technologies. Glückstadt: Werner Hülsbusch, 2021, pp. 348–362. doi: 10.5283/epub.44953.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-49-2048.jpg)

![Bibliography II

[BV05] Chris Buckley and Ellen Voorhees. “Retrieval System Evaluation”. In: TREC Exp. Eval. Inf. Retr. Ed. by Ellen Voorhees and Donna Harman. MIT Press, 2005, Chapter 3.

[FP19] Nicola Ferro and Carol Peters, eds. Information Retrieval Evaluation in a Changing World: Lessons Learned from 20 Years of CLEF. Cham, CH: Springer-Nature, 2019.

[Fuh17] Norbert Fuhr. “Some Common Mistakes In IR Evaluation, And How They Can Be Avoided”. In: SIGIR Forum 51.3 (2017). url: http://www.is.informatik.uni-duisburg.de/bib/pdf/ir/Fuhr_17b.pdf.

[Fuh20] Norbert Fuhr. “SIGIR Keynote: Proof By Experimentation? Towards Better IR Research”. In: SIGIR Forum 54.2 (2020), pp. 1–4. url: http://sigir.org/wp-content/uploads/2020/12/p04.pdf.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-50-2048.jpg)

![Bibliography III

[Har11] Donna Harman. Information Retrieval Evaluation. Synthesis Lectures on Information Concepts, Retrieval, and Services. San Francisco, CA, USA: Morgan & Claypool, 2011. doi: 10.2200/S00368ED1V01Y201105ICR019.

[IJ05] Peter Ingwersen and Kalervo Järvelin. The turn: integration of information seeking and retrieval in context. Secaucus, NJ, USA: Springer-Verlag New York, Inc., 2005. isbn: 140203850X.

[JBD18] Rolf Jagerman, Krisztian Balog, and Maarten De Rijke. “OpenSearch: Lessons learned from an online evaluation campaign”. In: J. Data Inf. Qual. 10.3 (2018). issn: 19361963. doi: 10.1145/3239575.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-51-2048.jpg)

![Bibliography IV

[JK02] Kalervo Järvelin and Jaana Kekäläinen. “Cumulated Gain-Based Evaluation of IR Techniques”. In: ACM Trans. Inf. Syst. 20.4 (2002), pp. 422–446. url: https://www.cc.gatech.edu/~zha/CS8803WST/dcg.pdf.

[Kel07] Diane Kelly. “Methods for Evaluating Interactive Information Retrieval Systems with Users”. In: Found. Trends Inf. Retr. 3.1–2 (2007), pp. 1–224. issn: 1554-0669. doi: 10.1561/1500000012. url: http://www.nowpublishers.com/article/Details/INR-012.

[KH] Udo Kruschwitz and Charlie Hull. Searching the Enterprise. Foundations and Trends in Information Retrieval. Now Publishers. isbn: 978-1680833041. doi: 10.1561/1500000053.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-52-2048.jpg)

![Bibliography V

[KL17] Ron Kohavi and Roger Longbotham. “Online Controlled Experiments and A/B Testing”. In: Encyclopedia of Machine Learning and Data Mining. Ed. by Claude Sammut and Geoffrey I. Webb. Boston, MA, USA: Springer, 2017, pp. 922–929. doi: 10.1007/978-1-4899-7687-1_891.

[Lar+17] Martha Larson et al. “The Benchmarking Initiative for Multimedia Evaluation: MediaEval 2016”. In: IEEE Multimed. 24.1 (2017), pp. 93–96. issn: 1070986X. doi: 10.1109/MMUL.2017.9.

[LC14] Bo Long and Yi Chang. Relevance Ranking for Vertical Search Engines. Morgan Kaufmann, 2014. isbn: 978-0124071711.

[LÖ09] Ling Liu and M. Tamer Özsu. “XML Information Retrieval”. In: Encyclopedia of Database Systems. Ed. by Ling Liu and M. Tamer Özsu. Springer, 2009. doi: 10.1007/978-0-387-39940-9_4084.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-53-2048.jpg)

![Bibliography VI

[Miz98] Stefano Mizzaro. “How many relevances in information retrieval?” In: Interact. Comput. 10 (1998), pp. 303–320.

[Sak20] Tetsuya Sakai. “On Fuhr’s Guideline for IR Evaluation”. In: SIGIR Forum 54.1 (2020).

[SOK21] Tetsuya Sakai, Douglas W. Oard, and Noriko Kando, eds. Evaluating Information Retrieval and Access Tasks: NTCIR’s Legacy of Research Impact. The Information Retrieval Series, 43. Heidelberg, Germany: Springer, 2021. isbn: 978-9811555534.

[VH05] Ellen M. Voorhees and Donna K. Harman, eds. TREC: Experiment and Evaluation in Information Retrieval. Cambridge, MA, USA: MIT Press, 2005.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-54-2048.jpg)

![Bibliography VII

[VH99] Ellen M. Voorhees and Donna K. Harman. “The Text REtrieval Conference (TREC): history and plans for TREC-9”. In: SIGIR Forum 33.2 (1999), pp. 12–15. doi: 10.1145/344250.344252.

[Voo+20] Ellen Voorhees et al. “TREC-COVID: Constructing a Pandemic Information Retrieval Test Collection”. In: SIGIR Forum 54.1 (2020), pp. 1–12. arXiv: 2005.04474. url: http://arxiv.org/abs/2005.04474.

[Wan+20] Lucy Lu Wang et al. “CORD-19: The Covid-19 Open Research Dataset”. In: ArXiv (2020). issn: 2331-8422. arXiv: 2004.10706. url: http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=PMC7251955.](https://image.slidesharecdn.com/isko2022-evaluation-230222144741-bf91f50c/75/Evaluating-Search-Performance-55-2048.jpg)