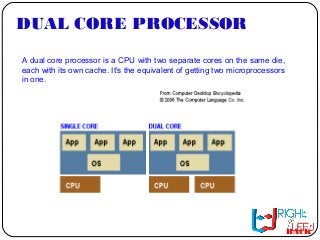

A dual-core processor is a central processing unit (CPU) that has two separate cores on the same die, each typically with its own cache. In effect, it is two microprocessors in one, so it can execute two instruction streams at the same time. This type of CPU is widely available from many manufacturers. Other multi-core processors have also been developed, including quad-core processors with four cores, hexa-core processors with six, octa-core processors with eight, and many-core processors with an even larger number of cores.
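
To make the naming concrete, here is a minimal Python sketch that maps a core count to the labels used above and reports what the current machine exposes. The helper `describe_core_count` and the `CORE_NAMES` table are hypothetical names introduced for illustration, and "single-core" is added only as a baseline label. Note that `os.cpu_count()` reports logical CPUs, which can exceed the number of physical cores on processors that run more than one hardware thread per core, so the printed label is an approximation.

```python
import os

# Labels follow the naming in the text; "single-core" is added here as a baseline.
# CORE_NAMES and describe_core_count are illustrative names, not a standard API.
CORE_NAMES = {
    1: "single-core",
    2: "dual-core",
    4: "quad-core",
    6: "hexa-core",
    8: "octa-core",
}


def describe_core_count(cores: int) -> str:
    """Return a conventional label for a processor with the given core count."""
    if cores < 1:
        raise ValueError("a processor has at least one core")
    # Counts without a common prefix name fall back to a generic "<n>-core" label.
    return CORE_NAMES.get(cores, f"{cores}-core")


if __name__ == "__main__":
    # os.cpu_count() returns the number of logical CPUs, or None if undetermined.
    logical_cpus = os.cpu_count()
    if logical_cpus is None:
        print("Could not determine the number of logical CPUs.")
    else:
        print(f"{logical_cpus} logical CPUs: roughly {describe_core_count(logical_cpus)}")
```

The lookup table keeps the named cases explicit, while the fallback covers many-core parts without inventing a name for every count.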