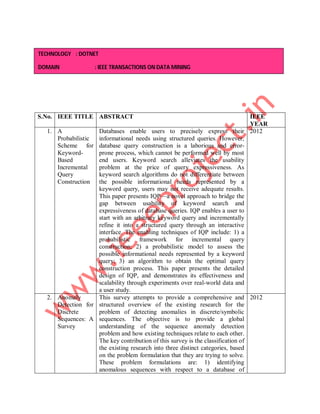

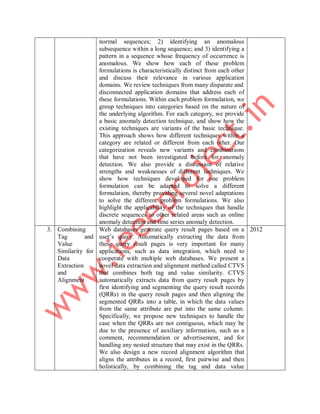

The document outlines SBGC's IEEE project offerings for final-year students across several technical disciplines, emphasizing support for project selection and implementation. It details the project categories, the associated technologies, and the support deliverables provided, including abstracts, reports, and training. It also summarizes several IEEE papers on data mining, anomaly detection, data extraction, user behavior profiling, SQL data preparation, privacy preservation, and interaction mining.