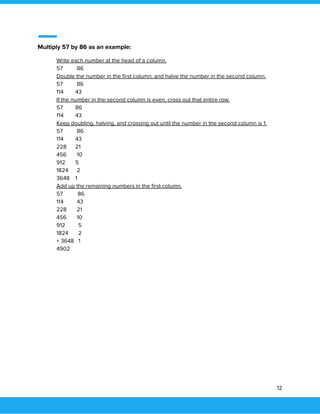

The document discusses the divide and conquer algorithm design paradigm and provides examples of algorithms that use this approach, including binary search, matrix multiplication, and sorting algorithms like merge sort and quicksort. It explains the three main steps of divide and conquer as divide, conquer, and combine. Advantages include solving difficult problems efficiently, enabling parallelization, and optimal memory usage. Disadvantages include issues with recursion, stack size, and choosing base cases. The La Russe method for multiplication is provided as a detailed example that uses doubling and halving to multiply two numbers without the multiplication operator.

![1. Introduction

Many algorithms are recursive by nature: to solve a given problem, they break it into

smaller parts, solve each part individually, and finally build the overall solution from

these sub-solutions.

In computer science, divide and conquer is an algorithm design paradigm based on

multi-branched recursion. A divide and conquer algorithm works by recursively breaking

down a problem into two or more subproblems of the same or related type, until these

become simple enough to be solved directly. The solutions to the subproblems are then

combined to give a solution to the original problem.

This divide and conquer technique is the basis of efficient algorithms for all kinds of

problems, such as sorting (e.g., quicksort, merge sort), multiplying large numbers (e.g. the

Karatsuba algorithm), finding the closest pair of points, syntactic analysis (e.g., top-down

parsers), and computing the discrete Fourier transform (FFTs).

Understanding and designing divide and conquer algorithms is a complex skill that

requires a good understanding of the nature of the underlying problem to be solved. As

when proving a theorem by induction, it is often necessary to replace the original

problem with a more general or complicated problem in order to initialize the recursion,

and there is no systematic method for finding the proper generalization.

These divide and conquer complications are seen when optimizing the

calculation of a Fibonacci number with efficient double recursion.

The correctness of a divide and conquer algorithm is usually proved by mathematical

induction, and its computational cost is often determined by solving recurrence relations.

2. Understanding Approach

Broadly, the divide-and-conquer approach can be understood as a three-step process.

Divide/Break

This step involves breaking the problem into smaller sub-problems. Sub-problems should

represent a part of the original problem. This step generally takes a recursive approach to divide

3](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-4-320.jpg)
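The three steps (divide, conquer, combine) can be sketched on a deliberately simple task, summing a list. The function name `dc_sum` and the choice of task are illustrative assumptions and do not come from the slides.

```python
def dc_sum(values, lo, hi):
    """Sum values[lo..hi] (inclusive) by divide and conquer."""
    # Base case: a single element is simple enough to solve directly.
    if lo == hi:
        return values[lo]
    # Divide: split the index range into two halves.
    mid = (lo + hi) // 2
    # Conquer: solve each half recursively.
    left_total = dc_sum(values, lo, mid)
    right_total = dc_sum(values, mid + 1, hi)
    # Combine: merge the two partial answers into one.
    return left_total + right_total


numbers = [3, 1, 4, 1, 5, 9, 2, 6]
print(dc_sum(numbers, 0, len(numbers) - 1))
```

A plain loop would do the same job; the recursion is used here only to make the three steps visible.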

![5. D&C Algorithms

5.1 Binary Search

Binary search is a fast search algorithm with a run-time complexity of O(log n). This search

algorithm works on the principle of divide and conquer. For this algorithm to work properly, the

data collection must be sorted.

Algorithm

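// BinSearch(a,i,l,x): a[i..l] is sorted, indices start at 1; returns 0 if x is absent

if(i>l) then return 0; //empty range

else if(l=i) then

{

    if(x=a[i]) then return i;

    else return 0;

}

else

{

    //reduce to smaller subproblems

    mid=⌊(i+l)/2⌋;

    if(x=a[mid]) then return mid;

    else if(x<a[mid]) then return BinSearch(a,i,mid-1,x)

    else return BinSearch(a,mid+1,l,x)

}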

8](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-9-320.jpg)

![Example

# Python program for recursive binary search.
# Returns index of x in arr if present, else -1.
def binarySearch(arr, l, r, x):
    # Check base case: the range arr[l..r] is non-empty
    if r >= l:
        # Integer midpoint (// keeps mid an int in Python 3)
        mid = l + (r - l) // 2
        # If element is present at the middle itself
        if arr[mid] == x:
            return mid
        # If element is smaller than mid, then it can only
        # be present in left subarray
        elif arr[mid] > x:
            return binarySearch(arr, l, mid - 1, x)
        # Else the element can only be present in right subarray
        else:
            return binarySearch(arr, mid + 1, r, x)
    else:
        # Element is not present in the array
        return -1

# Test array
arr = [2, 3, 4, 10, 40]
x = 10

# Function call
result = binarySearch(arr, 0, len(arr) - 1, x)
if result != -1:
    print("Element is present at index %d" % result)
else:
    print("Element is not present in array")

Output

Element is present at index 3

10](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-11-320.jpg)
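As a cross-check on a hand-written binary search, the same lookup can be sketched with Python's standard `bisect` module. The wrapper name `binary_search` is a hypothetical helper introduced here; only `bisect_left` is a library call.

```python
from bisect import bisect_left


def binary_search(arr, x):
    """Return the index of x in the sorted list arr, or -1 if absent."""
    # bisect_left returns the leftmost position where x could be inserted
    # while keeping arr sorted; it runs in O(log n) comparisons.
    i = bisect_left(arr, x)
    # x is present only if that position holds x itself.
    if i < len(arr) and arr[i] == x:
        return i
    return -1


arr = [2, 3, 4, 10, 40]
print(binary_search(arr, 10))
print(binary_search(arr, 5))
```

Unlike the recursive version, this variant returns the leftmost match when the list contains duplicates.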

![Source code:

import java.io.*;

class laRusseMultAlgo
{
    // Multiplies a and b using only addition, doubling, and halving
    static int russianPeasant(int a, int b)
    {
        int res = 0;
        while (b > 0)
        {
            // If b is odd, add the current value of a to the result
            if ((b & 1) != 0)
                res = res + a;
            a = a << 1; // double a
            b = b >> 1; // halve b
        }
        return res;
    }

    public static void main (String[] args)
    {
        System.out.println(russianPeasant(18, 1));
        System.out.println(russianPeasant(20, 12));
    }
}

Output:

18

240

13](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-14-320.jpg)
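The doubling-and-halving idea can also be written recursively, which makes the reduction to a smaller subproblem explicit. This is a minimal sketch assuming `b` is a non-negative integer; the name `russian_peasant` mirrors the Java method above but the recursive formulation is an illustration, not the slides' code.

```python
def russian_peasant(a, b):
    """Multiply a by a non-negative integer b without using '*'."""
    # Base case: anything times zero is zero.
    if b == 0:
        return 0
    # Reduce: a * b == (2a) * (b // 2), plus one extra a when b is odd.
    partial = russian_peasant(a << 1, b >> 1)
    if b & 1:
        return partial + a
    return partial


print(russian_peasant(18, 1))
print(russian_peasant(20, 12))
```

Each call halves `b`, so the recursion depth is about log2(b).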

![5.3 Sorting Algorithms

Several sorting algorithms follow the Divide & Conquer approach. We will

study two major ones, Merge Sort and Quicksort.

5.3.1 Merge Sort

Merge Sort is a Divide and Conquer algorithm. It divides the input array into two halves, calls

itself on the two halves, and then merges the two sorted halves. The merge() function is

used for merging the two halves. merge(arr, l, m, r) is the key process: it assumes that

arr[l..m] and arr[m+1..r] are sorted and merges the two sorted sub-arrays into one.

Merge Sort Approach

•Divide

–Divide the n-element sequence to be sorted into two subsequences of n/2 elements

each

•Conquer

–Sort the subsequences recursively using merge sort

–When the size of the sequences is 1 there is nothing more to do

•Combine

–Merge the two sorted subsequences

Algorithm

MERGE-SORT(A, p, r)

if p < r                        // check for base case

    then q ← ⌊(p + r)/2⌋        // divide

        MERGE-SORT(A, p, q)     // conquer

        MERGE-SORT(A, q + 1, r) // conquer

        MERGE(A, p, q, r)       // combine

14](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-15-320.jpg)

![MERGE(A, p, q, r)

➔ Create copies of the subarrays L ← A[p..q] and M ← A[q+1..r].

➔ Create three pointers i, j and k

◆ i maintains current index of L, starting at 1

◆ j maintains current index of M, starting at 1

◆ k maintains current index of A[p..r], starting at p

➔ Until we reach the end of either L or M, pick the smaller of the current elements of L

and M and place it in the correct position in A[p..r]

➔ When we run out of elements in either L or M, pick up the remaining elements of the other and

put them in A[p..r]

MERGE-SORT Running Time

•Divide:

–compute q as the average of p and r: D(n) = Θ(1)

•Conquer:

–recursively solve 2 subproblems, each of size n/2: 2T(n/2)

•Combine:

–MERGE on an n-element subarray takes Θ(n) time: C(n) = Θ(n)

$$T(n) = \begin{cases} \Theta(1) & \text{if } n = 1 \\ 2T(n/2) + \Theta(n) & \text{if } n > 1 \end{cases}$$

Use the Master Theorem with a = 2, b = 2, f(n) = cn:

Compare n^(log_b a) = n^(log_2 2) = n with f(n) = cn

Case 2: T(n) = Θ(n log n)

15](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-16-320.jpg)
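The MERGE-SORT and MERGE procedures can be sketched in Python as follows. This is a minimal illustration using 0-based indices rather than the 1-based indices of the pseudocode; the function names `merge` and `merge_sort` are chosen here for the example.

```python
def merge(arr, l, m, r):
    """Merge the sorted runs arr[l..m] and arr[m+1..r] in place."""
    left = arr[l:m + 1]        # copy of the left run
    right = arr[m + 1:r + 1]   # copy of the right run
    i = j = 0                  # current positions in left and right
    k = l                      # next position to fill in arr[l..r]
    # Repeatedly take the smaller of the two front elements.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            arr[k] = left[i]
            i += 1
        else:
            arr[k] = right[j]
            j += 1
        k += 1
    # Copy whatever remains in the run that is not yet exhausted.
    while i < len(left):
        arr[k] = left[i]
        i += 1
        k += 1
    while j < len(right):
        arr[k] = right[j]
        j += 1
        k += 1


def merge_sort(arr, l, r):
    """Sort arr[l..r] (inclusive) in place."""
    if l < r:                       # more than one element
        m = (l + r) // 2            # divide
        merge_sort(arr, l, m)       # conquer left half
        merge_sort(arr, m + 1, r)   # conquer right half
        merge(arr, l, m, r)         # combine


data = [38, 27, 43, 3, 9, 82, 10]
merge_sort(data, 0, len(data) - 1)
print(data)
```

Using `<=` when comparing the two front elements keeps equal elements in their original order, so this sketch is a stable sort.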

![5.3.2 Quicksort

QuickSort is a Divide and Conquer algorithm. It picks an element as pivot and partitions

the given array around the picked pivot. There are many different versions of quickSort

that pick pivot in different ways.

Quicksort Approach

•Divide

–Partition the array A into 2 subarrays A[p..q] and A[q+1..r], such that each element

of A[p..q] is smaller than or equal to each element in A[q+1..r]

–Need to find index q to partition the array

•Conquer

–Recursively sort A[p..q] and A[q+1..r] using Quicksort

•Combine

–Trivial: the arrays are sorted in place

–No additional work is required to combine them

–The entire array is now sorted.

Algorithm

QUICKSORT(A, p, r)

if p < r

then q ← PARTITION(A, p, r)

QUICKSORT (A, p, q)

QUICKSORT (A, q+1, r)

17](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-18-320.jpg)

![PARTITION (A, p, r)

x ← A[p]

i ← p – 1

j ← r + 1

while TRUE

do repeat j ← j – 1

until A[j] ≤ x

do repeat i ← i + 1

until A[i] ≥ x

if i < j

then exchange A[i] ↔ A[j]

else return j

QUICK-SORT Running Time

For Worst-case partitioning

–One region has one element and the other has n – 1 elements

–Maximally unbalanced

T(n) = T(1) + T(n – 1) + n,

T(1) = Θ(1)

which solves to T(n) = Θ(n²)

18](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-19-320.jpg)
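The QUICKSORT and PARTITION pseudocode (a Hoare-style partition with the first element as pivot) can be sketched in Python as follows. Indices are 0-based here, and the function names are chosen for this example.

```python
def partition(a, p, r):
    """Hoare-style partition of a[p..r] around the pivot x = a[p].

    Returns j such that every element of a[p..j] is <= every
    element of a[j+1..r].
    """
    x = a[p]
    i = p - 1
    j = r + 1
    while True:
        # repeat j <- j - 1 until a[j] <= x
        j -= 1
        while a[j] > x:
            j -= 1
        # repeat i <- i + 1 until a[i] >= x
        i += 1
        while a[i] < x:
            i += 1
        if i < j:
            a[i], a[j] = a[j], a[i]
        else:
            return j


def quicksort(a, p, r):
    """Sort a[p..r] (inclusive) in place."""
    if p < r:
        q = partition(a, p, r)
        quicksort(a, p, q)       # note: q, not q - 1, with this partition
        quicksort(a, q + 1, r)


data = [5, 3, 8, 1, 9, 2, 7]
quicksort(data, 0, len(data) - 1)
print(data)
```

With the first element as pivot, an already sorted input triggers the maximally unbalanced split described above, so the recursion depth grows linearly with the input size.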

![Example

Consider the following example of the Tournament Method in Java:

class pair{
    public int max, min;
}

public class HelloWorld
{
    public static void main(String[] args)
    {
        // int[] (not float[]) so the values fit the int fields of pair
        int[] arr = {-34, 43, 45, 2, 46};
        pair p = getMinMax(arr, 0, arr.length-1);
        System.out.println(p.max+" "+p.min);
    }

    public static pair getMinMax(int[] arr, int start, int end){
        pair minmax = new pair(), mml = new pair(), mmr = new pair();
        int mid;
        // Base case: one element is both the maximum and the minimum
        if (start == end){
            minmax.max = arr[start];
            minmax.min = arr[start];
            return minmax;
        }
        // Base case: two elements need a single comparison
        if (end == start + 1){
            if (arr[start] > arr[end]) {
                minmax.max = arr[start];
                minmax.min = arr[end];
            }
            else{
                minmax.max = arr[end];
                minmax.min = arr[start];
            }
            return minmax;
        }
        // Divide the range and solve the left half recursively
        mid = (start + end)/2;
        mml = getMinMax(arr, start, mid);

23](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-24-320.jpg)
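The Java listing above breaks off at the slide boundary. As a self-contained illustration of the same tournament idea, here is a minimal Python sketch; the function name `get_min_max` and the tuple return value are choices made for this example and are not a reconstruction of the missing Java lines.

```python
def get_min_max(arr, start, end):
    """Return (minimum, maximum) of arr[start..end], inclusive."""
    # Base case: one element is both the minimum and the maximum.
    if start == end:
        return arr[start], arr[start]
    # Base case: two elements need a single comparison.
    if end == start + 1:
        if arr[start] < arr[end]:
            return arr[start], arr[end]
        return arr[end], arr[start]
    # Divide: split the range in the middle.
    mid = (start + end) // 2
    # Conquer: find the min and max of each half.
    left_min, left_max = get_min_max(arr, start, mid)
    right_min, right_max = get_min_max(arr, mid + 1, end)
    # Combine: the winners of the two halves play each other.
    return min(left_min, right_min), max(left_max, right_max)


arr = [-34, 43, 45, 2, 46]
lo, hi = get_min_max(arr, 0, len(arr) - 1)
print(hi, lo)
```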

![5.5 Closest Pair of points problem

Given a finite set of n points in the plane, the goal is to find

the closest pair, that is, the distance between the two closest

points. Formally, we want to find a distance δ such that there

exists a pair of points (ai , aj) at distance δ of each other, and

such that any other two points are separated by a distance

greater than or equal to δ. This can be achieved

by two approaches:

5.5.1 Naive method/ Brute force method

Given the set of points, we iterate over every pair of points in the plane, calculating the

distance between them. The minimum of these distances is

the solution to the problem. For n points this takes O(n²) time.

Algorithm

minDist = infinity

for i = 1 to length(P) - 1

    for j = i + 1 to length(P)

        let p = P[i], q = P[j]

        if dist(p, q) < minDist:

            minDist = dist(p, q)

            closestPair = (p, q)

return closestPair

25](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-26-320.jpg)

![Example

Consider the following Python code demonstrating the naive method:

import math

def calculateDistance(x1, y1, x2, y2):
    # Euclidean distance between (x1, y1) and (x2, y2)
    dist = math.sqrt((x2 - x1) ** 2 + (y2 - y1) ** 2)
    return dist

def closest(x, y):
    result = [0, 0]
    # Named min_dist so the built-in min() is not shadowed
    min_dist = math.inf
    for i in range(len(x)):
        # Start j at i + 1 so each unordered pair is checked once
        for j in range(i + 1, len(x)):
            dist = calculateDistance(x[i], y[i], x[j], y[j])
            if dist < min_dist:
                min_dist = dist
                result[0] = i
                result[1] = j
    print("Closest distance is between point " + str(result[0] + 1) + " and "
          + str(result[1] + 1) + " and distance is " + str(min_dist))

x = [5, -6, 8, 9, -10, 0, 20, 6, 7, 8, 9]
y = [0, -6, 7, 2, -1, 5, -3, 6, 3, 8, -9]
closest(x, y)

26](https://image.slidesharecdn.com/dc-casestudy-171119061540/85/Divide-and-Conquer-Case-Study-27-320.jpg)
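The divide and conquer approach to the closest pair problem is not shown in this part of the slides. The following is a minimal sketch of the standard strip-based method, under the assumption that points are given as `(x, y)` tuples; all function names are hypothetical. For simplicity it re-sorts the strip at every level, which gives O(n log² n) rather than the optimal O(n log n).

```python
import math


def dist(p, q):
    """Euclidean distance between points p and q."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def brute_force(points):
    """Smallest pairwise distance, checking every pair."""
    best = math.inf
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            best = min(best, dist(points[i], points[j]))
    return best


def closest_rec(px):
    """px must be sorted by x-coordinate."""
    n = len(px)
    if n <= 3:                       # small enough to solve directly
        return brute_force(px)
    mid = n // 2                     # divide at the median x
    mid_x = px[mid][0]
    # Conquer: best distance found within each half.
    d = min(closest_rec(px[:mid]), closest_rec(px[mid:]))
    # Combine: a closer pair can only straddle the dividing line,
    # so look at points within d of it, ordered by y-coordinate.
    strip = sorted((p for p in px if abs(p[0] - mid_x) < d),
                   key=lambda p: p[1])
    for i in range(len(strip)):
        for j in range(i + 1, len(strip)):
            if strip[j][1] - strip[i][1] >= d:
                break                # later points are even farther in y
            d = min(d, dist(strip[i], strip[j]))
    return d


def closest_distance(points):
    """Smallest distance between any two of the given points."""
    return closest_rec(sorted(points))


x = [5, -6, 8, 9, -10, 0, 20, 6, 7, 8, 9]
y = [0, -6, 7, 2, -1, 5, -3, 6, 3, 8, -9]
print(closest_distance(list(zip(x, y))))
```

Comparing the result against `brute_force` on the same input is a quick way to sanity-check the recursive version.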