![TensorFlow

Advanced features

• Construction phase

• Execution phase

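import tensorflow as tf
# Construction phase.
# Create a Constant op that produces a 1x2 matrix. The op is
# added as a node to the default graph.
#
# The value returned by the constructor represents the output
# of the Constant op.
matrix1 = tf.constant([[3., 3.]])
# Create another Constant that produces a 2x1 matrix.
matrix2 = tf.constant([[2.],[2.]])
# Create a MatMul op that takes 'matrix1' and 'matrix2' as inputs.
# 'product' represents the result of the matrix multiplication.
product = tf.matmul(matrix1, matrix2)
# Execution phase: launch the default graph in a session and run 'product'.
with tf.Session() as sess:
    result = sess.run([product])
    print(result)](https://image.slidesharecdn.com/20160304-ntnu-dldive-200214172444/85/Dive-into-Deep-Learning-7-320.jpg)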
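The split between a construction phase and an execution phase can be mimicked in plain Python. This is a toy sketch, not TensorFlow: `Node`, `constant`, `matmul`, and `Session` below are hypothetical stand-ins, chosen only to show that building the graph computes nothing and that the work happens when a session runs a requested node.

```python
# Toy model of TensorFlow 1.x's two phases.
# Every name here is a hypothetical illustration, not a TensorFlow API.

class Node:
    """A node in a deferred computation graph."""
    def __init__(self, op, inputs=(), value=None):
        self.op = op          # "const" or "matmul"
        self.inputs = inputs  # upstream nodes this node depends on
        self.value = value    # payload, used only by constants

def constant(value):
    # Construction phase: record the op; nothing is computed yet.
    return Node("const", value=value)

def matmul(a, b):
    # Construction phase: record a dependency on two upstream nodes.
    return Node("matmul", inputs=(a, b))

class Session:
    """Execution phase: walk the graph and compute the requested nodes."""

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        return False

    def _eval(self, node, cache):
        if id(node) in cache:  # each node is evaluated at most once per run
            return cache[id(node)]
        if node.op == "const":
            out = node.value
        elif node.op == "matmul":
            a, b = (self._eval(n, cache) for n in node.inputs)
            out = [[sum(a[i][k] * b[k][j] for k in range(len(b)))
                    for j in range(len(b[0]))]
                   for i in range(len(a))]
        else:
            raise ValueError(f"unknown op {node.op!r}")
        cache[id(node)] = out
        return out

    def run(self, fetches):
        cache = {}
        return [self._eval(n, cache) for n in fetches]

matrix1 = constant([[3., 3.]])      # 1x2
matrix2 = constant([[2.], [2.]])    # 2x1
product = matmul(matrix1, matrix2)  # still unevaluated at this point

with Session() as sess:
    result = sess.run([product])
    print(result)  # [[[12.0]]]
```

In this toy, as in the slide, `run` takes a list of fetches and returns a list of results, which is why the 1x1 product appears wrapped in an extra list.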

![TensorFlow

Advanced features

• Working with Variables

import tensorflow as tf
# Create two variables.
weights = tf.Variable(tf.random_normal([784, 200], stddev=0.35),
                      name="weights")
biases = tf.Variable(tf.zeros([200]), name="biases")
...
# Add an op to initialize the variables.
# (Later TensorFlow 1.x releases deprecate this in favor of
# tf.global_variables_initializer().)
init_op = tf.initialize_all_variables()
# Later, when launching the model
with tf.Session() as sess:
    # Run the init operation.
    sess.run(init_op)
    ...
    # Use the model
    ...](https://image.slidesharecdn.com/20160304-ntnu-dldive-200214172444/85/Dive-into-Deep-Learning-8-320.jpg)
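Why the explicit init op is needed can be seen in a small pure-Python model: a variable in the graph only records *how* to produce its initial value, while the value itself lives in the session. This is a hypothetical sketch; `Variable`, `initialize_all_variables`, `Session`, and `random_normal` here are toy stand-ins that mirror the slide's names, not TensorFlow's implementation.

```python
import random

# Hypothetical registry standing in for the default graph's variable collection.
_ALL_VARIABLES = []

class Variable:
    """Construction phase: records how to initialize a value, stores none."""
    def __init__(self, initializer, name):
        self.initializer = initializer  # zero-argument callable
        self.name = name
        _ALL_VARIABLES.append(self)

class InitOp:
    """Toy op that, when run, initializes every variable it holds."""
    def __init__(self, variables):
        self.variables = list(variables)

def initialize_all_variables():
    # Toy analogue of the slide's init op: covers all variables created so far.
    return InitOp(_ALL_VARIABLES)

class Session:
    """Execution phase: variable values are owned by the session."""
    def __init__(self):
        self.values = {}

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        return False

    def run(self, fetch):
        if isinstance(fetch, InitOp):
            for v in fetch.variables:
                self.values[v.name] = v.initializer()
            return None
        if isinstance(fetch, Variable):
            if fetch.name not in self.values:
                raise RuntimeError(f"uninitialized variable: {fetch.name}")
            return self.values[fetch.name]
        raise TypeError(f"cannot run {fetch!r}")

def random_normal(rows, cols, stddev):
    # Hypothetical helper: a rows x cols matrix of N(0, stddev^2) samples.
    return [[random.gauss(0.0, stddev) for _ in range(cols)] for _ in range(rows)]

weights = Variable(lambda: random_normal(784, 200, stddev=0.35), name="weights")
biases = Variable(lambda: [0.0] * 200, name="biases")
init_op = initialize_all_variables()

with Session() as sess:
    try:
        sess.run(weights)  # fails: the init op has not run yet
    except RuntimeError as err:
        print(err)  # uninitialized variable: weights
    sess.run(init_op)
    w = sess.run(weights)
    b = sess.run(biases)
    print(len(w), len(w[0]), len(b))  # 784 200 200
```

Because the toy `Session` owns the values, a fresh session starts with nothing initialized, which is the behavior the slide's "run the init operation" step guards against.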

![TensorFlow

Advanced features

• Graph Visualization

• Using GPUs

• Sharing variables

https://www.tensorflow.org/

with tf.Session() as sess:
    with tf.device("/gpu:1"):
        matrix1 = tf.constant([[3., 3.]])
        matrix2 = tf.constant([[2.],[2.]])](https://image.slidesharecdn.com/20160304-ntnu-dldive-200214172444/85/Dive-into-Deep-Learning-9-320.jpg)

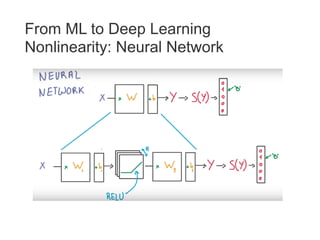

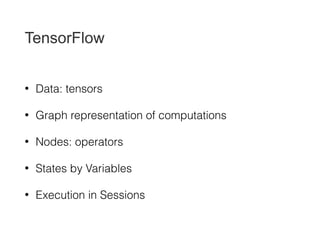

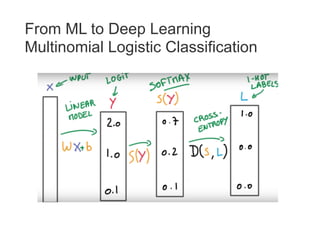

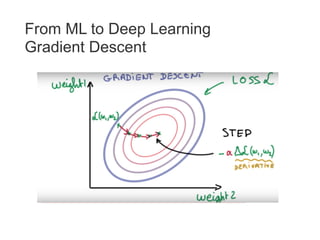

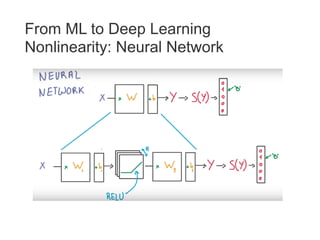

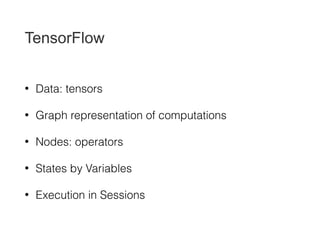

The presentation introduces deep learning as a major machine learning paradigm driven by large amounts of data and GPU computing. It covers the TensorFlow framework, which represents computations as graphs, and advanced features such as variable management and GPU placement. The code examples show how to create constants and variables and evaluate them inside TensorFlow sessions.
