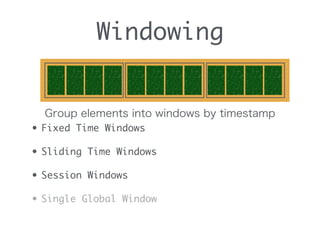

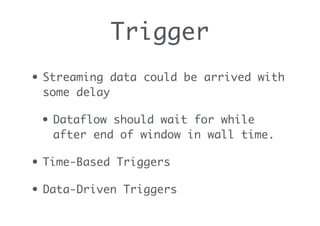

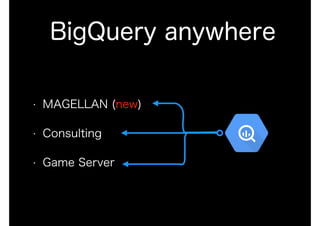

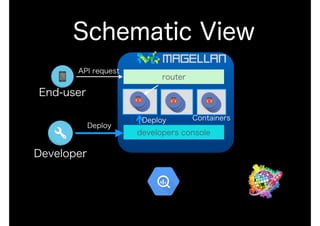

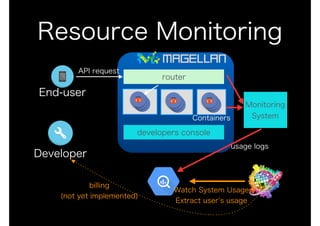

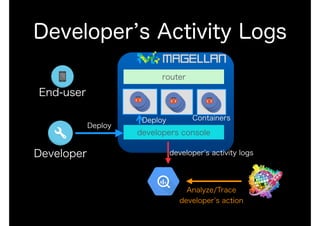

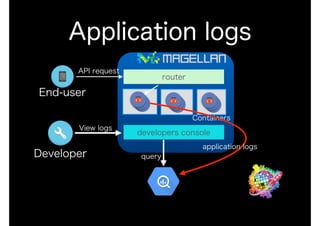

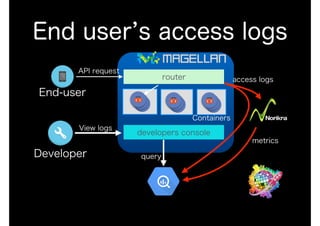

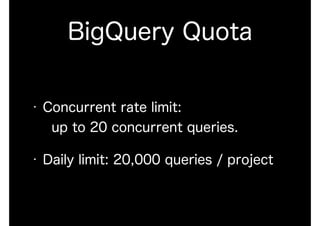

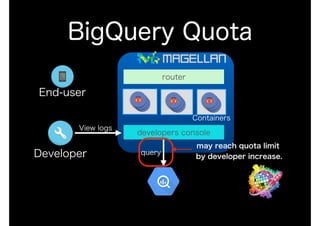

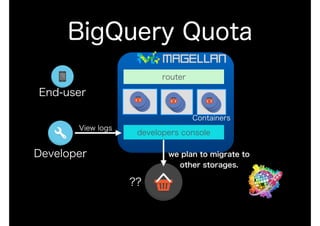

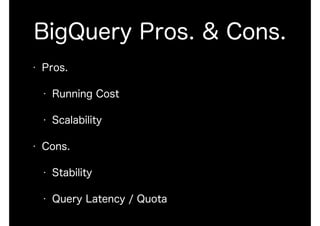

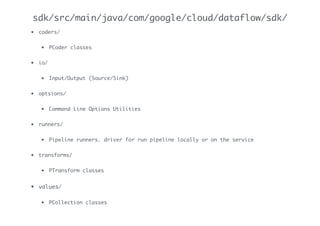

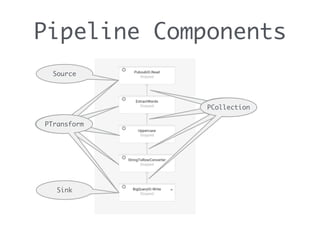

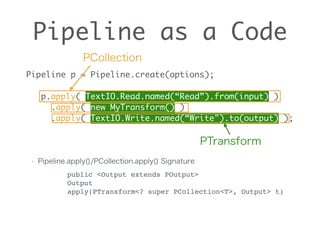

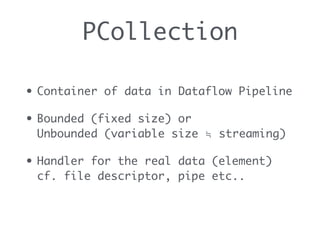

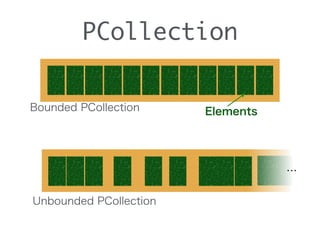

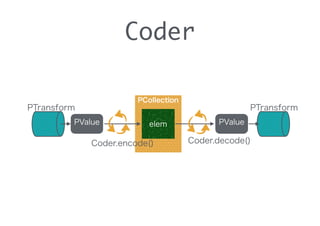

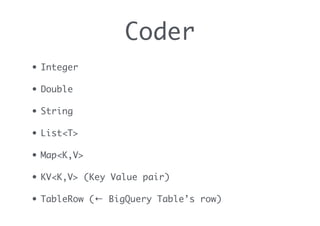

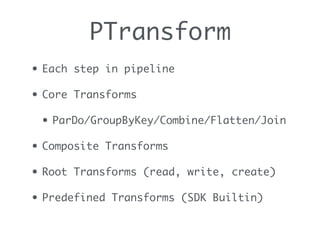

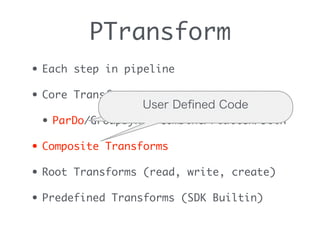

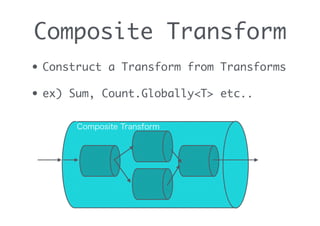

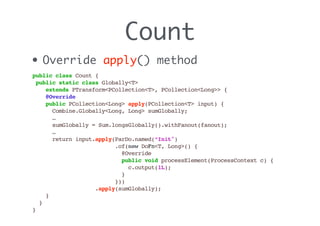

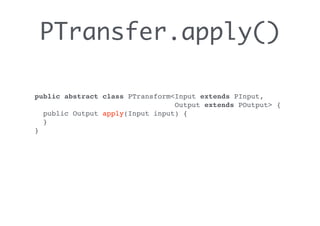

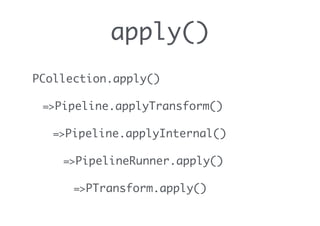

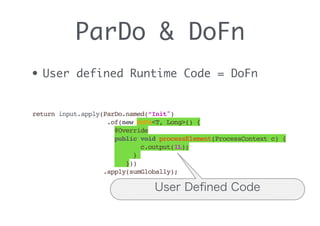

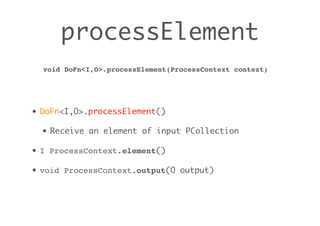

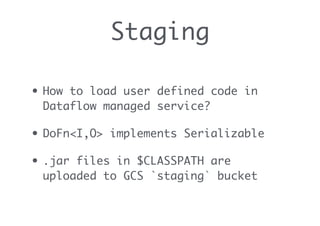

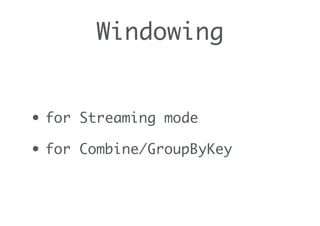

This document summarizes a presentation on BigQuery and the Dataflow Java SDK. It describes how Groovenauts uses BigQuery to analyze data from its MAGELLAN container hosting service, covering resource monitoring, developer activity logs, application logs, and end-user access logs. It then gives an overview of the Dataflow Java SDK and its key concepts: PCollections, coders, PTransforms, composite transforms, ParDo and DoFn, and windowing.

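Example of DoFn, from WordCount.java:

    // Both classes are nested inside the WordCount class and need, in the 1.x SDK:
    //   com.google.cloud.dataflow.sdk.transforms.DoFn
    //   com.google.cloud.dataflow.sdk.values.KV

    // Splits each input line into words and emits every non-empty word.
    static class ExtractWordsFn extends DoFn<String, String> {
      @Override
      public void processElement(ProcessContext c) {
        String[] words = c.element().split("[^a-zA-Z']+");
        for (String word : words) {
          if (!word.isEmpty()) {
            c.output(word);
          }
        }
      }
    }

    // Formats each (word, count) pair as a "word: count" string.
    static class FormatCountsFn extends DoFn<KV<String, Long>, String> {
      @Override
      public void processElement(ProcessContext c) {
        c.output(c.element().getKey() + ": " + c.element().getValue());
      }
    }

![Slide: Example of DoFn](https://image.slidesharecdn.com/diveintodataflowjavasdk-150424130023-conversion-gate01/85/BigQuery-case-study-in-Groovenauts-Dive-into-the-DataflowJavaSDK-54-320.jpg)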
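To see what the two DoFn classes above compute without a Dataflow pipeline, here is a minimal plain-Java sketch. It does not use the Dataflow SDK: `WordCountSketch`, `extractWords`, `countWords`, and `formatCount` are hypothetical names introduced only for illustration, and the in-memory `countWords` step merely stands in for whatever counting transform sits between the two DoFns in the real pipeline.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java stand-in for the two DoFns; an illustration, not SDK code. */
final class WordCountSketch {

    // Mirrors ExtractWordsFn: one line in, zero or more non-empty words out.
    static List<String> extractWords(String line) {
        List<String> out = new ArrayList<>();
        for (String word : line.split("[^a-zA-Z']+")) {
            // A line that starts with a delimiter yields a leading empty string,
            // which is why the original DoFn checks isEmpty() before emitting.
            if (!word.isEmpty()) {
                out.add(word);
            }
        }
        return out;
    }

    // Assumed counting step: tallies every extracted word across all lines.
    static Map<String, Long> countWords(List<String> lines) {
        Map<String, Long> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : extractWords(line)) {
                counts.merge(word, 1L, Long::sum);
            }
        }
        return counts;
    }

    // Mirrors FormatCountsFn: renders one (word, count) pair as "word: count".
    static String formatCount(Map.Entry<String, Long> kv) {
        return kv.getKey() + ": " + kv.getValue();
    }

    public static void main(String[] args) {
        List<String> lines = List.of("to be, or not to be", "  that's the question");
        for (Map.Entry<String, Long> kv : countWords(lines).entrySet()) {
            System.out.println(formatCount(kv));
        }
    }
}
```

The second sample line begins with spaces on purpose: the split produces an empty first token there, and the `isEmpty()` guard drops it just as the original `ExtractWordsFn` does.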

Windowing

Group by Key: `k1: 1`, `k1: 2`, `k1: 3`, `k2: 2` → `k1: [1,2,3]`, `k2: [2]`

Combine: `k1: [1,2,3]`, `k2: [2]` → `k1: 3`, `k2: 1`

- These transforms require all elements of the input.
- In streaming mode, inputs are unbounded.

![Slide: Windowing](https://image.slidesharecdn.com/diveintodataflowjavasdk-150424130023-conversion-gate01/85/BigQuery-case-study-in-Groovenauts-Dive-into-the-DataflowJavaSDK-66-320.jpg)
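The Windowing slide can be illustrated with a small plain-Java sketch: grouping and combining need a finite set of elements, so an unbounded stream is first cut into finite windows by timestamp, and the transforms run per window. This is a model of the idea only, not Dataflow SDK code. The `Event` type, the timestamps, and the 60-second window size are assumptions made for the example, and the Combine step is assumed to be a per-key count because that matches the slide's `k1: 3`, `k2: 1` output.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Plain-Java model of group-by-key, combine, and fixed windows. */
final class WindowingSketch {

    /** Hypothetical keyed value with an event timestamp in seconds. */
    static final class Event {
        final String key;
        final int value;
        final long timestampSec;

        Event(String key, int value, long timestampSec) {
            this.key = key;
            this.value = value;
            this.timestampSec = timestampSec;
        }
    }

    // Group by key: gathers every value seen for each key.
    static Map<String, List<Integer>> groupByKey(List<Event> events) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Event e : events) {
            grouped.computeIfAbsent(e.key, k -> new ArrayList<>()).add(e.value);
        }
        return grouped;
    }

    // Combine (assumed to be a count): reduces each key's values to their number.
    static Map<String, Integer> countPerKey(Map<String, List<Integer>> grouped) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> kv : grouped.entrySet()) {
            counts.put(kv.getKey(), kv.getValue().size());
        }
        return counts;
    }

    // Fixed windows: maps each window start time to the events that fall in it.
    static Map<Long, List<Event>> assignFixedWindows(List<Event> events, long windowSizeSec) {
        Map<Long, List<Event>> windows = new TreeMap<>();
        for (Event e : events) {
            long windowStart = Math.floorDiv(e.timestampSec, windowSizeSec) * windowSizeSec;
            windows.computeIfAbsent(windowStart, w -> new ArrayList<>()).add(e);
        }
        return windows;
    }

    public static void main(String[] args) {
        List<Event> events = List.of(
                new Event("k1", 1, 5), new Event("k1", 2, 20),
                new Event("k1", 3, 70), new Event("k2", 2, 65));

        // Bounded input: the whole collection is available at once.
        System.out.println(groupByKey(events));              // {k1=[1, 2, 3], k2=[2]}
        System.out.println(countPerKey(groupByKey(events))); // {k1=3, k2=1}

        // Windowed input: each 60-second window is grouped on its own.
        assignFixedWindows(events, 60).forEach((start, inWindow) ->
                System.out.println("window@" + start + "s " + groupByKey(inWindow)));
    }
}
```

With these sample timestamps the first window holds only `k1: 1` and `k1: 2`, while the second holds `k1: 3` and `k2: 2`, so each window yields its own grouped result instead of waiting for input that never ends.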